Alteryx Server Ideas

Share your Server product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello all,

This may be a little controversial. As of today, when you buy Alteryx Server, the basic package covers up to 4 cores:

https://community.alteryx.com/t5/Alteryx-Server-Knowledge-Base/How-Alteryx-defines-cores-for-licensing-our-products/ta-p/158030

I have always known that. But in recent years the technology has evolved, especially the number of cores in a server. As an example, AMD EPYC server CPUs start at 8 cores:

https://www.amd.com/en/processors/epyc-7002-series

So the idea is to raise the number of cores in the initial package to 8 or even 16. It would:

-make Alteryx more competitive

-cost very little

-end some user frustration

Moreover, the Alteryx Server Additional Capacity license should be 4 cores.

Best regards,

Simon

In larger organizations or organizations with global coverage, it is possible for users to be in different time zones from each other and the Alteryx server.

With our implementation of Alteryx Server, we have the server time zone set to that of our data warehouse, which is 2 hours behind my own. When scheduling a workflow in the future, I normally correct for this by setting the schedule in the server's time zone, and things work all right.

Problem 1:

It falls apart when I want to schedule something in the future, but within the gap between my time zone and the server time zone. For example: it is 6PM my time and 4PM server time. I want to schedule a workflow to run at 5PM server time (7PM my time). When I try to set this up with the Version 10 scheduler built into Alteryx desktop, it overrides the date field upon saving so the workflow runs the following day instead of today, because it thinks I'm trying to schedule the run at a time that has already passed (which it has in my time zone, but not in the server time zone). When I edit the schedule to reset the date to today, it reverts again upon saving.

The only way I have found to get around this is to reset the time zone of my computer to match the server time zone, then set the schedule, then reset the time zone of my machine back to local time again. This is not the best experience, and will likely be required more frequently for users as their time zone difference increases.

Problem 2:

When I want to run something server-side immediately (I do this when the runtime will be long, or when I'm mobile and on a machine with fewer processing resources), the "Once" option in the scheduler can be used to push something to the server and run it. In my case, due to the time zone difference, the default time set when doing this is the current time in my time zone, which is 2 hours ahead of the current server time.

I have two proposed resolutions:

1) Transmit the server's time zone information to the local scheduler so that everything involving scheduling is in server time. Also, for any timestamp fields, include the time zone designation so it's clear to the user.

2) Use information from the user's machine and from the server to do time zone conversions behind the scenes, so that whenever scheduling or viewing schedules, all timestamps are in the user's local time. I feel this is the preferred solution for the best user experience, but it would also be the more complex to implement.
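Both problems, and the second proposed resolution, come down to which clock the "has this time already passed?" check uses. Here is a minimal sketch in Python, assuming fixed UTC offsets that match the example above (server 2 hours behind the user). The function name `next_run` and the offsets are hypothetical and say nothing about how the Alteryx scheduler is actually implemented:

```python
from datetime import datetime, time, timedelta, timezone

# Assumed offsets for illustration only: the server is 2 hours behind the user.
USER_TZ = timezone(timedelta(hours=0))
SERVER_TZ = timezone(timedelta(hours=-2))


def next_run(requested: time, now: datetime, tz: timezone) -> datetime:
    """Next occurrence of wall-clock time `requested` in `tz`, at or after `now`."""
    local_now = now.astimezone(tz)
    candidate = datetime.combine(local_now.date(), requested, tzinfo=tz)
    if candidate < local_now:
        # Only roll over when the time has passed on the clock being used.
        candidate += timedelta(days=1)
    return candidate


# It is 6PM for the user, which is 4PM on the server.
now = datetime(2024, 1, 15, 18, 0, tzinfo=USER_TZ)
requested = time(17, 0)  # 5PM, meant as server time

# Checking against the user's clock reproduces the unwanted rollover to tomorrow.
checked_on_user_clock = next_run(requested, now, USER_TZ)

# Checking against the server's clock keeps the run on the same day.
checked_on_server_clock = next_run(requested, now, SERVER_TZ)

# Resolution 2: show the server-side run time in the user's local time.
shown_to_user = checked_on_server_clock.astimezone(USER_TZ)

print(checked_on_user_clock.isoformat())
print(checked_on_server_clock.isoformat())
print(shown_to_user.isoformat())
```

Real deployments would need named time zones with daylight saving rules (for example via Python's `zoneinfo`) rather than fixed offsets; the fixed offsets here only keep the sketch self-contained.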

Thanks,

Ryan

Hi,

We have more than 100 workflows scheduled at different intervals throughout the day. In order to monitor them, we have to manually check the workflow results to see whether each workflow ran successfully.

Say a workflow is scheduled every 30 minutes: we then have to check the Gallery results manually 48 times a day to see whether it ran successfully.

Similarly, we will have N workflows scheduled at different intervals.

We feel it's too time-consuming to verify this manually.

It would be good to have a notification when a run fails, so we can check only then rather than every 30 minutes.
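The requested behavior amounts to "only tell me about runs that failed since I last looked". A rough sketch in Python of that check, assuming run results are already available as simple records; the `RunResult` shape, the status values, and the workflow names are hypothetical, and no Alteryx Server API is called here:

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class RunResult:
    workflow: str
    finished_at: datetime
    status: str  # assumed values: "Success", "Warning", "Error"


def failures_since(results, last_checked):
    """Return failed runs that finished after the previous check."""
    return [r for r in results if r.status == "Error" and r.finished_at > last_checked]


def alert_text(failures):
    """Build a short message body; sending it (email, chat) is left out."""
    lines = [f"{r.workflow} failed at {r.finished_at:%Y-%m-%d %H:%M}" for r in failures]
    return "\n".join(lines)


results = [
    RunResult("Daily load", datetime(2024, 1, 15, 9, 0), "Success"),
    RunResult("Half-hourly sync", datetime(2024, 1, 15, 9, 30), "Error"),
    RunResult("Half-hourly sync", datetime(2024, 1, 15, 10, 0), "Success"),
]
failed = failures_since(results, last_checked=datetime(2024, 1, 15, 9, 15))
print(alert_text(failed))
```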

It is currently (Alteryx Server 2018) not possible to delete Districts once created - we can only disable them. See also: https://community.alteryx.com/t5/Alteryx-Connect-Gallery/Deleting-Gallery-Districts/m-p/32684

Frankly, this is rather inconvenient if one wants to restructure a Gallery. Renaming them is an option, but there might still be a number of districts we want to delete. We would appreciate it if this could be considered for a future update.

Please support GZIP files in the Input tool in Server. This is related to https://community.alteryx.com/t5/Alteryx-Product-Ideas/Support-for-GZIP-gz-compressed-files-in-input..., but logged as a separate Idea because the staff indicated in the thread that they are planning support for GZIP in Designer only in 2018.2. We need it in Server as well.

Hi there,

On the server product you have the ability to set up timeouts to avoid server resources being hogged by any one canvas.

Currently this setting only applies to scheduled canvasses - however this leaves a gap where users can just run a canvas manually.

Please can you extend this setting to cover manually initiated jobs too?

Currently when running an Alteryx Workflow from the gallery via the API, it doesn't get logged in the user interface in the same way that running it interactively in the gallery GUI does - a shame as it then becomes harder to know the traffic and performance of a workflow run in this way.

Hello, I would like improved user management features and/or training:

- Display 100 does not seem to work consistently

- Click/navigate from user to user – once I select a user, I would like to go to the next user

- Changes I make with filters are reset every time I go back – which means I have to constantly reset my view in between each user

- Modify user settings in collections – I would like to modify user settings instead of deleting and re-adding the user

Starting with Windows Server 2016, one can use Docker container technology in Windows environments. My idea is to dynamically convert Designer jobs/workflows to Docker containers at runtime.

Currently the only filtering available in the Results tab in View Schedules is by Workflow Name. It would be nice to be able to filter by Status, so we can just look for potential problems, like warnings or errors. Currently, I have a job that runs every 5 minutes. That means I have to scroll through hundreds of rows, or about 12 pages using the default 25 rows per page, just to scan for potential problems in the last 24 hours.

I would like to see the ability to post comments under the workflow information to help with development and elicit feedback. Currently, if there is a problem with a macro or workflow, you must contact its owner directly. It would be nice to leave the creator a constructive comment, a question about how it operates, or a thank-you. This would be especially useful in the Public Gallery, where it could reduce the development time of new versions, both for private individuals looking to share and for macros developed by Alteryx that eventually turn into standard tools.

I just underwent an exercise of recovering my controller in the event of a catastrophic failure. One of the steps is to recover the DCME keys (DCM Encryption keys) - which is documented here: https://help.alteryx.com/20221/en/server/install/server-host-recovery-guide/dcme-keys-to-backup.html...

This DCME recovery needs to be revisited. The document assumes that the previous controller is still running. In a disaster recovery situation, this is not possible. What, if anything, can be done to recover the DCME keys if the host is completely irrecoverable?

For context, having an irrecoverable host has happened. Complete hard drive failure (showing my age), nuked virtual machine and its backups (no one paid attention to the notices that the data center was shutting down), and fire.

Hi all,

In an enterprise environment - DB connections need to be set up from the server and pushed down to your users; and they need to be managed across the various servers in your software lifecycle.

In other words - you may have a sandpit / dev server env; a UAT env; a pre-prod; and a prod env - and each of these needs to have the same DCM credential IDs so that users can access these.

(Before you say "you can do this from the desktop" - that is true, however that's not a workable solution in an enterprise env, because it means that users can change the password from their desktop into a prod env, which is a breach of IT General Controls.)

The solution here is to break DCM out into a separate service - where

- all your servers (dev; UAT; Pre-Prod; Prod) can point to one instance of DCM

- users can maintain their own connections and credentials

- each connection or credential needs to have up to 2 owners so that you can deal with people moving jobs / leaving the firm

- users can also entitle these connections and credentials to their team members so that when the team member logs in, it shows a popup saying "you've just been given access to new credentials / connections"

- a particular connection may have multiple different variants, depending on the environment:

  - HR Data may point to a UAT version of HR data if you're on the UAT server, and to Prod if you're on the Prod server

  - if a connection is environment specific, then it also needs to have segregated credentials (since the login to your UAT HR Data may not be the same as prod).
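To make the "variants per environment" point concrete, here is a small sketch in Python of how a shared service might resolve one connection name to a different target and credential per environment. Every name in it (`Variant`, `CONNECTIONS`, `resolve`, the targets and credential IDs) is hypothetical and only illustrates the idea; it is not how DCM works today:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Variant:
    target: str         # where the connection points in this environment
    credential_id: str  # credentials are segregated per environment


# Hypothetical registry held by a shared DCM service.
CONNECTIONS = {
    "HR Data": {
        "UAT": Variant("hr-db-uat", "cred-hr-uat"),
        "Prod": Variant("hr-db-prod", "cred-hr-prod"),
    },
}


def resolve(connection: str, environment: str) -> Variant:
    """Pick the variant for the server's environment; fail loudly if none exists."""
    variants = CONNECTIONS[connection]
    if environment not in variants:
        raise LookupError(f"{connection!r} has no variant for {environment!r}")
    return variants[environment]


print(resolve("HR Data", "UAT"))
print(resolve("HR Data", "Prod"))
```

The same connection name is used on every server, so a workflow promoted from UAT to Prod does not need editing; only the environment passed to the lookup changes.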

Thank you all

sean

cc: @wesley-siu @_PavelP

The idea is to have tabs on separate pages in the Gallery (or an option to allow that behavior). Right now, if you create an analytic app that has questions on different tabs, they are on separate pages in Designer, but when you push the workflow to the Gallery, all the questions are on the same page. The tabs act as a navigation bar instead of taking you to separate pages.

Here are two different discussions that ask about this in case there is any confusion:

While working in the Gallery, I think the file browse tool should allow the user to import a file without selecting a sheet or <list of sheet names>, as it does locally.

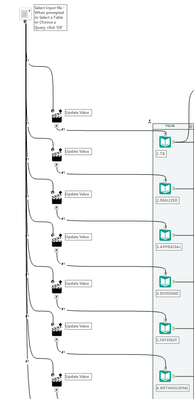

For example, I have created an app in which the user is able to import a file with multiple sheets, and all sheets are brought into separate input data tools with a single file browse tool (shown below).

Since the user does not select a sheet name, the file browse tool only brings in "SelectedFileName.xlsx|". The action tool is then set to replace a specific string "SampleFileName.xlsx|". This allows the input data tools to take this new file name, and add each respective sheet name to the end.
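The string replacement described above can be sketched in a few lines of Python. This is only an illustration of the mechanism: the sheet names are hypothetical, and the exact separator and quoting Alteryx uses between file name and sheet name may differ from the plain `|` shown here:

```python
PLACEHOLDER = "SampleFileName.xlsx|"

# Hypothetical sheet names, one per Input Data tool in the app.
SHEETS = ["Sales", "Costs", "Summary"]


def configured_paths(placeholder, sheets):
    """Paths as saved in the workflow: placeholder file plus a sheet name."""
    return [placeholder + sheet for sheet in sheets]


def apply_selection(paths, placeholder, selected):
    """Swap the placeholder for the File Browse value, keeping each sheet suffix."""
    return [path.replace(placeholder, selected, 1) for path in paths]


paths = configured_paths(PLACEHOLDER, SHEETS)
updated = apply_selection(paths, PLACEHOLDER, "SelectedFileName.xlsx|")
print(updated)
```

One file selection feeds every input, which is why a single file browse tool is enough when no sheet has to be chosen.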

However, when working in the Gallery, the user is required to select a sheet name or list of sheet names, meaning a file browse tool is required for each sheet that you would like to import (shown below). This is a huge inconvenience for the application user, as they have to select the same file numerous times to import every sheet.

Please let me know if you would like me to provide any more information, I would be happy to do so.

Thanks,

Matt

Issue: When workflow names have similar beginnings on Server, a user cannot distinguish between them because the columns on this GUI are not expandable as would be expected. A user has to make their browser window larger, and all columns widen proportionately.

Solution: Change column settings so users can drag column widths to make changes. A bonus would be to allow a user to set a default along with an option to always auto expand all.

Please provide the ability to bulk add/delete users to a gallery. This would be useful for on-boarding/off-boarding large companies, departments, and external customers. For public-facing galleries, this would provide us the ability to on-board/off-board entire customers of ours.

The publishing endpoint, a POST to api/admin/v1/workflows/, is useless whenever workflows, apps, or macros contain Gallery Data Connections. The workflow will get published but valid Gallery Data Connections are ignored and the apps will not run.

Please add the same dependency checks against Gallery Data Connections as is performed when a workflow or app is manually published from Designer.

This might be considered a subset of the Idea Server API to extract / submit workflows.

Thank you for your consideration.

Sincerely,

David

The Schedules tab of the Gallery currently lists all schedules, 20 to a page, with no option to filter. I am currently managing 183 active schedules. In order to find a single schedule, I have to sort by Workflow name, then page through 10 pages of schedules to find the schedule I'm looking for.

Please add an option to filter this list.

Ideally, I would like to be able to filter on multiple fields at once (example: Priority = High, Status = Active, Owner = Bob Smith, Times Run > 20). Barring that, a simple search option on workflow name, similar to the search option on the Collections tab, would be enough.
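As a rough illustration of the two options (combined field filters, or a plain name search), here is a sketch in Python. The `Schedule` fields mirror the example above, but the record shape, the sample data, and the helper names are assumptions made for the sketch, not the Gallery's data model:

```python
from dataclasses import dataclass


@dataclass
class Schedule:
    workflow: str
    owner: str
    priority: str
    status: str
    times_run: int


def filter_schedules(schedules, *conditions):
    """Keep schedules that satisfy every condition (logical AND)."""
    return [s for s in schedules if all(cond(s) for cond in conditions)]


def name_contains(text):
    """Simple case-insensitive search on the workflow name."""
    needle = text.lower()
    return lambda s: needle in s.workflow.lower()


# Hypothetical sample data.
schedules = [
    Schedule("Sales refresh", "Bob Smith", "High", "Active", 42),
    Schedule("Sales archive", "Bob Smith", "Low", "Active", 300),
    Schedule("HR extract", "Ann Lee", "High", "Disabled", 7),
]

# Multi-field filter: Priority = High, Status = Active, Owner = Bob Smith, Times Run > 20.
matches = filter_schedules(
    schedules,
    lambda s: s.priority == "High",
    lambda s: s.status == "Active",
    lambda s: s.owner == "Bob Smith",
    lambda s: s.times_run > 20,
)

# Fallback: a single search box on workflow name.
by_name = filter_schedules(schedules, name_contains("sales"))

print([s.workflow for s in matches])
print([s.workflow for s in by_name])
```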

Using the current version of the server, you can see that there is no OAuth-managed or published API endpoint for canvas delete (screenshot 1). However, this API does CLEARLY exist, as you can see if you inspect what happens when you hit the delete button (screenshot 2 clearly shows the API being called, but it requires a user login security token).

Please can you enable this API for OAuth - the API already exists, it just needs to be exposed with the others.

CC: @BlytheE
