Alteryx Server Ideas

Share your Server product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello all,

This may be a little controversial. As of today, when you buy an Alteryx Server, the basic package covers up to 4 cores:

https://community.alteryx.com/t5/Alteryx-Server-Knowledge-Base/How-Alteryx-defines-cores-for-licensing-our-products/ta-p/158030

I have always known that. But in recent years technology has evolved, especially the number of cores in a server. For example, AMD EPYC server CPUs start at 8 cores:

https://www.amd.com/en/processors/epyc-7002-series

So the idea is to raise the number of cores in the initial package to 8 or even 16. It would:

- make Alteryx more competitive

- cost very little

- end some user frustration

Moreover, the Alteryx Server Additional Capacity license should be 4 cores.

Best regards,

Simon

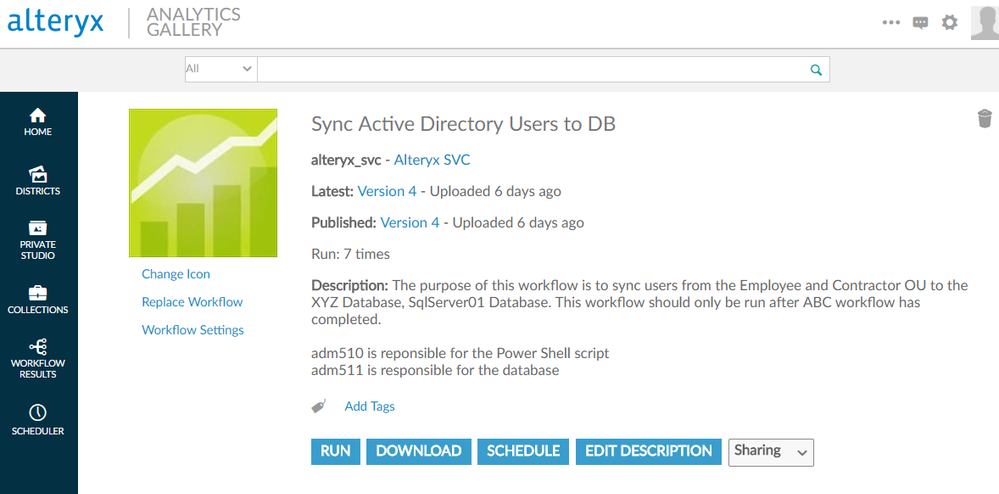

I would like the ability to add a workflow description on Alteryx Server. This step would be done after the workflow is published, so that future edits can be made without having to republish the workflow. A checkbox to allow HTML content would also be appreciated.

The default view on the Gallery home page does not support long workflow titles. Several of mine get cut off with an ellipsis (...), and you need to hover over them to see the full title. You can select a list view, which helps and gives you more metadata, but there is no way to save the table/list view as your default. Administrators should have the option to define a default view (table or list) that applies to everyone who visits, authenticated or not.

If you go to a private studio, you get the list view option. If you go to a collection, you get the list view option. If you go to a district, you don't get the list/table option, only the table view. Why?

There does not seem to be a way to set a list of favorites, even as an administrator. There should be a quick button to add a workflow to a favorites collection, or something similar. It seems like we went a long way to build three different ways to organize workflows, but left out the one that is most universal in similar tools: 'My favorites'.

The search for adding a workflow to a collection *mandates* a trailing space, but doesn't tell you that this is why you are not getting any hits; i.e., 'Water' returns 'no results found', while 'Water ' returns anything with water in the title. Ugh.
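The behavior this post expects can be sketched as a search that strips surrounding whitespace from the query before a case-insensitive substring match, so 'Water' and 'Water ' return the same hits. This is a hypothetical illustration, not Alteryx's actual search code; the function name `search_titles` and the sample titles are made up.

```python
def search_titles(query: str, titles: list[str]) -> list[str]:
    """Return titles containing the query, ignoring case and surrounding whitespace.

    Hypothetical helper illustrating the requested behavior; not an Alteryx API.
    """
    needle = query.strip().casefold()
    if not needle:
        # An empty or all-whitespace query matches nothing rather than everything.
        return []
    return [t for t in titles if needle in t.casefold()]


titles = ["Water Usage Report", "Wastewater ETL", "Sales Forecast"]
print(search_titles("Water", titles))   # ['Water Usage Report', 'Wastewater ETL']
print(search_titles("Water ", titles))  # same result: the trailing space is ignored
```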

The WebDAV protocol isn't supported by the Alteryx Scheduler, due to the security constraints associated with connecting to SharePoint. This server-side limitation should be brought in line with the desktop's ability to perform the WebDAV action.

In prior projects, before the Gallery started acting more like a management console (I'd still like to see a few more features there too ;)), we were able to automate the deployment of workflows. But there's no good way to automate the deployment of schedules.

The best approach I've heard of is to take a complete dump of the MongoDB instance in the lower environment, move that file, and ultimately restore it to the prod environment (messy).

Even using the Gallery, an admin still needs to go through the process of setting up schedules for deployed apps.

There should be a REST call to export and import schedules of apps from one environment to the next within the gallery.

Best,

dK

Along with setting a schedule for an app in the gallery, the user should also be able to set the different interface values for each schedule he/she makes.

My main use case is generic templates for ETL processes that have multiple types of runs based on configuration values, but I'm sure there are plenty of others.

Best,

dK

In the Alteryx Gallery UI, it's possible to set up workflow credentials so that a workflow published to the gallery runs as a specific user.

Unfortunately when that workflow is run from the Alteryx Gallery API, it appears to only ever run as the Alteryx Server Run-As account.

While working on this, our developers found that if they call the (undocumented) API behind the actual Alteryx Gallery directly, they can achieve what they want, but that seems a risky strategy.

The idea would be:

- Either unify the APIs so that the Gallery itself uses the same API to run workflows as the one you present as the "Gallery API" (the "eat your own dog food" way), or

- Alter the Gallery API to let us run a workflow under a different workflow credential.

Without this, we're forced to grant the run-as account access to anything that uses this method, which in turn becomes a security hole (any workflow run will have access to everything the run-as account can reach).

Currently, when an Alteryx workflow is run from the Gallery via the API, it isn't logged in the user interface the way an interactive run in the Gallery GUI is. That's a shame, as it makes it harder to know the traffic and performance of workflows run this way.

Please provide the ability to bulk add/delete users to a gallery. This would be useful for on-boarding/off-boarding large companies, departments, and external customers. For public-facing galleries, this would provide us the ability to on-board/off-board entire customers of ours.

Organizations using Alteryx Server with an embedded MongoDB can benefit from an option to change the MongoDB User and Admin User passwords.

Current deployments of Alteryx Server allow regeneration of the Controller Token, and for many of the same reasons, the ability to change the MongoDB passwords would be beneficial to customers.

Many organizations rely on a centralized team for daily administration of the Alteryx Server and MongoDB. With the current functionality, when members of this team change positions, they continue to know the MongoDB authentication information indefinitely. This capability would let organizations decide how and when a change is required to mitigate any risk of misuse.

Hi,

@patrick_digan pointed out to me today that, in my ignorance, I thought the "On Success - Show Results to User" option in Interface Designer did something on Alteryx Gallery, as it does when run locally. I tried to prove him wrong (I've been manually checking off which outputs I want since day 1! How could it not have been doing anything?!) and failed miserably. As far as we can tell, this functionality simply doesn't exist in Gallery.

Please add this functionality so that we can suppress files (without reverting to hacky tricks like changing the output location so the Gallery can't see the output) with a simple check box.

Link to my shame, where I very openly explained exactly how I thought I had been solving this problem the whole time, and how after testing and review, it looks like I hadn't done anything with this configuration.

The ability to select a workflow or app within the Gallery web interface and change its name. This would maintain its historical run data, version control revisions, placement in collections, etc.

Use case: As a workflow or app continues to be developed over time, the name may need a revision to continue reflecting the workflow's function.

Best regards,

Ryan

Hi all,

I hopped onto Azure tonight to see if I could create an Alteryx Server to start learning Alteryx Server as a skill. I was expecting the cost to be in the range of Microsoft SQL Server on Azure or Microsoft Power BI (a few dollars, but not more than $20 per month for limited usage). I was fairly shocked by the price on offer (8k-10k per month), with no option to price it by usage.

Could we work together to create options for people to stand up their own small Alteryx Server like this for learning purposes? Right now there is no way to learn Alteryx Server unless you are actually an admin of a live server, which makes it impossible for people to learn it at home to build their skills (and impedes Alteryx from building a base of skilled users in the market).

Happy to work with the team to help make this a reality.

cheers

Sean

Hi all, Per this thread, it would be helpful if we could have finer control of scheduled jobs in Alteryx Server: https://community.alteryx.com/t5/Publishing-Gallery/Resource-control-on-scheduled-jobs/m-p/61016/hig...

Specifically:

- Dependencies (one job should run after another in a chain)

- Lower priority (some jobs should move down the priority queue so that high-priority jobs are not blocked by less important ones)

Thank you Sean
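The two requests above (dependency chains and priorities) can be combined in a single ordering rule: a job becomes eligible only once all of its dependencies have finished, and among eligible jobs the highest-priority one runs first. The sketch below assumes a single worker and a hypothetical convention where a lower number means higher priority; `Job`, `run_order` and the job names are illustrative only, not part of Alteryx Server.

```python
import heapq
from dataclasses import dataclass, field


@dataclass
class Job:
    name: str
    priority: int  # hypothetical convention: lower number = higher priority
    depends_on: list[str] = field(default_factory=list)


def run_order(jobs: list[Job]) -> list[str]:
    """Single-worker order: a job becomes ready only when all of its
    dependencies have finished; among ready jobs, the highest priority
    (lowest number) runs first, with ties broken by name."""
    by_name = {j.name: j for j in jobs}
    waiting_on = {j.name: set(j.depends_on) for j in jobs}
    dependents: dict[str, list[str]] = {j.name: [] for j in jobs}
    for j in jobs:
        for dep in waiting_on[j.name]:
            if dep not in by_name:
                raise ValueError(f"{j.name} depends on unknown job {dep}")
            dependents[dep].append(j.name)

    # Min-heap of (priority, name) for jobs whose dependencies are all done.
    ready = [(j.priority, j.name) for j in jobs if not waiting_on[j.name]]
    heapq.heapify(ready)
    order: list[str] = []
    while ready:
        _, name = heapq.heappop(ready)
        order.append(name)
        # Finishing this job may unblock jobs that were waiting on it.
        for child in dependents[name]:
            waiting_on[child].discard(name)
            if not waiting_on[child]:
                heapq.heappush(ready, (by_name[child].priority, child))

    if len(order) != len(jobs):
        raise ValueError("dependency cycle detected")
    return order


jobs = [
    Job("extract", priority=1),
    Job("transform", priority=1, depends_on=["extract"]),
    Job("ad_hoc_report", priority=5),
    Job("load", priority=1, depends_on=["transform"]),
]
print(run_order(jobs))  # ['extract', 'transform', 'load', 'ad_hoc_report']
```

The low-priority `ad_hoc_report` is ready from the start but still waits until the whole high-priority chain has run, which is the "don't block important jobs" behavior the post asks for.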

Many people maintain valuable information in Excel files, and many organizations, like ours, also use SharePoint to store and share structured and unstructured information. We see most user-generated and user-maintained data in Excel files in SharePoint document libraries, and one of the great benefits of Alteryx is the ability to join that Excel data with other data sources. Unfortunately, the v11.0 Scheduler cannot resolve UNC-style addresses (\\server@ssl\DavWWWRoot\site-name\document-library\filename), so workflows that access this valuable SharePoint Excel data must be run manually. The SharePoint List Input tool can read the list-style metadata for Document Library files, but does not access the file content.

The Scheduler should be enhanced so that scheduled workflows can read Excel data stored in SharePoint Document Libraries.

Sometimes when using someone else's Gallery App which has a long list of options to select from, I will hit Run before realizing that I haven't set all of the options. Then, the App fails (obviously).

Rather than just getting a message that my app run failed on the Gallery, it would be nice to have a link that automatically reloads the options I had set, so I could see which option I didn't fill out properly.

If a job fails, it would be perfect if we could set something in the workflow settings so that the job retries after X minutes, up to Y times. We have jobs that connect to external resources, and sometimes the network resets and all the connections drop. For example, I want a workflow to try again in 10 minutes, up to a maximum of 5 times, so that over the next 50 minutes it retries every 10 minutes if it keeps failing.
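The retry policy described above (wait X minutes, retry up to Y times) is a fixed-delay retry loop. The sketch below is a hypothetical illustration of that policy, not an Alteryx setting; `run_with_retries` and its parameters are made-up names, with defaults mirroring the example of 5 retries 10 minutes apart. It assumes every exception in `retry_on` is worth retrying; a real policy would likely narrow that to transient errors such as dropped connections.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def run_with_retries(
    job: Callable[[], T],
    max_retries: int = 5,
    delay_seconds: float = 600.0,  # 10 minutes
    retry_on: tuple[type[BaseException], ...] = (Exception,),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run job; if it raises one of retry_on, wait delay_seconds and try
    again, up to max_retries extra attempts. The last error is re-raised
    if every attempt fails."""
    retries_used = 0
    while True:
        try:
            return job()
        except retry_on:
            if retries_used >= max_retries:
                raise
            retries_used += 1
            sleep(delay_seconds)
```

With the defaults, a job that keeps failing is attempted once plus 5 retries, spending 50 minutes waiting in total. Passing in `sleep` lets the policy be tested without actually waiting.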

When the schedule viewer holds more than the default 25 workflows per page, sorting only sorts the 25 on the current page. I would expect it to sort all workflows first and then show the first 25. I actually used the interface with that expectation for longer than I'd care to admit, which led to a lot of confusion when I couldn't figure out why workflows I had scheduled were not showing up. Obviously this was user error, and now that I understand it I don't have any issue, but at the same time, why wouldn't the sort apply before the list is populated?

Interested to know if I'm the only one who's been annoyed by this.

~ Clive
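The expected behavior is "sort, then paginate": sort the full collection and only then slice out the requested page. A minimal sketch under that assumption; `page_of` and the generated workflow names are hypothetical, and `per_page=25` mirrors the default mentioned in the post.

```python
from typing import Any, Callable, Sequence, TypeVar

T = TypeVar("T")


def page_of(
    items: Sequence[T],
    key: Callable[[T], Any],
    page: int,
    per_page: int = 25,
    reverse: bool = False,
) -> list[T]:
    """Sort the *whole* collection first, then slice out one 1-based page."""
    ordered = sorted(items, key=key, reverse=reverse)
    start = (page - 1) * per_page
    return ordered[start:start + per_page]


# 60 hypothetical workflow names, stored in reverse order.
workflows = [f"wf_{i:03d}" for i in range(60, 0, -1)]

print(page_of(workflows, key=str, page=1)[0])  # wf_001
# What the post describes instead: sorting only the rows already on page 1.
print(sorted(workflows[:25])[0])  # wf_036
```

Sorting only the visible page hides `wf_001` through `wf_035` from page 1 entirely, which matches the "my scheduled workflows aren't showing up" confusion.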

In some organizations, it may be difficult, if not impossible, to apply permissions or make exemptions that grant a wide range of users the “Log on as a batch job” permission needed to run workflows on a Server with the current run-as credential capability.

If possible, could the Alteryx process still run as the server admin or "Run As" account, while enabling the workflow to access the various data sources (Windows authentication) using specific credentials entered when running the workflow? So while the whole process runs as Service Account A, access to databases, file systems, etc. would use the user's own specified credentials.

Some of this can be accomplished today by embedding credentials in database connections, but this isn’t an ideal scenario, and a more holistic solution that covers a wider array (or all supported) data sources would be preferred.

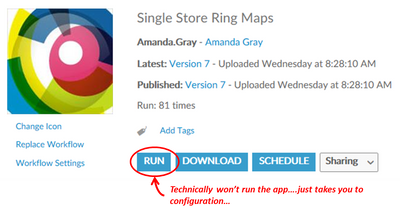

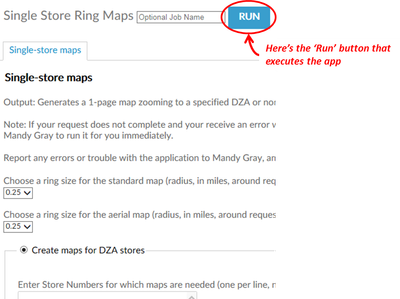

We've noticed on our Server Gallery that users must click 'Run' two separate times when running an app. The first 'Run' really takes them to a configuration screen rather than actually executing the tool. What if that first 'Run' button were changed to 'Configure'? We've seen users hesitate to run apps because they aren't sure what they're getting when they click 'Run' the first time.



