Alteryx Server Ideas

Share your Server product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello all,

This may be a little controversial. As of today, when you buy an Alteryx Server, the basic package covers up to 4 cores:

https://community.alteryx.com/t5/Alteryx-Server-Knowledge-Base/How-Alteryx-defines-cores-for-licensing-our-products/ta-p/158030

I have always known that. But in recent years technology has evolved, especially the number of cores in a server. As an example, AMD EPYC server CPUs start at 8 cores:

https://www.amd.com/en/processors/epyc-7002-series

So the idea is to raise the number of cores in the initial package to 8 or even 16. This would:

- make Alteryx more competitive

- cost very little

- end some user frustration

Moreover, the Alteryx Server Additional Capacity license should cover 4 cores.

Best regards,

Simon

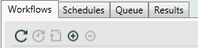

If you are viewing schedules on the Queue panel, you should have the option to refresh the information to see how far things have progressed. If you swipe back and forth between panels you get updated % done information, so the data is definitely available. Not the most pressing need, but a very easy fix.

Ninja Edit: I didn't know there was a dedicated Server ideas forum, so I removed part of this post.


The AlteryxService addtoqueue command is great, but it leaves me wanting more. My simple use case is to run WorkflowB via the addtoqueue command in an After Run event from WorkflowA, which runs daily. The result is a cluttered scheduler with many instances of WorkflowB that I need to clean up manually, since addtoqueue creates a new record in AS_Applications each time it runs.

It would be useful to have an AlteryxService command for each of the subroutines already built into the scheduler front-end app: schedule workflow, update workflow, add workflow, remove workflow, edit schedule, etc.

MongoDB objects could be identified by their oid, which the user can get by querying the AlteryxService database.

I don't want to rebuild what you've already built, just need a little more control over it 🙂


As the Server Admin, I'd like the ability to view ALL "Workflow Results" for all Subscriptions. This would let the highest-level admin monitor all schedules on the entire server instance and see whether they fail to complete successfully (for example, unable to allocate memory) or whether any other errors are occurring.

Knowing this would help the server administrator understand whether there are issues with the server itself (e.g., whether we need more workers or simply need to adjust server system settings).


I wish Alteryx would allow more control over user access to scheduling workflows. Currently there is only a radio button that globally allows users to access the scheduler. I wish we had another layer where we could limit access by permission level. For example, we would like all Artisans and Curators to have access to the scheduler, but not Viewers.

In order for us to manage the large number of canvases on our server, we need the ability for admin teams to require additional attributes on every canvas.

For us, these mandatory attributes would be:

- Which team do you belong to (dropdown)

- What business process does this serve (dropdown, multiselect)

- Primary & secondary canvas owner (validated Kerberos)

For the attributes with dropdown lists, we can provide the master data in a drop location or as a manually configured list on the server.

Currently, reassigning a schedule is completely manual: whoever takes over the schedule recreates it under their own profile, and the old schedule is then deleted.

This happens often in organizations where a user leaves the company or moves into a new role, requiring someone else to maintain those schedules. It would be convenient to have an option to reassign the owner of a schedule to simplify this process.


Hi,

We have several clients who would like to prioritize their scheduled jobs based on run time and importance.

It could be a simple priority number that decides which job runs next. It would also be nice to be able to allocate certain workers to certain jobs.
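To make the priority-number idea concrete, here is a minimal Python sketch of how a queue could pick the next job by priority while honoring worker assignments. It assumes a convention where a lower number means higher priority, ties run in submission order, and a job may be pinned to workers carrying a tag. `PriorityJobQueue`, `submit`, `next_for_worker`, and the tag names are all hypothetical and not part of any Alteryx Server API.

```python
import heapq
import itertools
from dataclasses import dataclass, field
from typing import Optional


@dataclass(order=True)
class QueuedJob:
    priority: int  # hypothetical convention: lower number runs sooner
    seq: int       # submission order, so equal priorities run first-in, first-out
    name: str = field(compare=False)
    worker_tag: Optional[str] = field(default=None, compare=False)  # only workers with this tag may run it


class PriorityJobQueue:
    """Hypothetical scheduler queue: highest priority first, with optional worker pinning."""

    def __init__(self) -> None:
        self._heap: list[QueuedJob] = []
        self._counter = itertools.count()

    def submit(self, name: str, priority: int, worker_tag: Optional[str] = None) -> None:
        heapq.heappush(self._heap, QueuedJob(priority, next(self._counter), name, worker_tag))

    def next_for_worker(self, worker_tags: set[str]) -> Optional[str]:
        """Pop the highest-priority job this worker is allowed to run, or return None."""
        skipped = []
        chosen = None
        while self._heap:
            candidate = heapq.heappop(self._heap)
            if candidate.worker_tag is None or candidate.worker_tag in worker_tags:
                chosen = candidate
                break
            skipped.append(candidate)  # pinned to other workers; keep it queued
        for job in skipped:
            heapq.heappush(self._heap, job)
        return chosen.name if chosen else None
```

For example, with `NightlyLoad` at priority 5, `ExecReport` at priority 1, and `HeavyModel` at priority 0 pinned to `big-memory` workers, an untagged worker would get `ExecReport` first, while a `big-memory` worker would get `HeavyModel`.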

I am looking forward to your feedback.

Daniel


In prior projects, before the Gallery started acting more like a management console (I'd still like to see a few more features there ;) ), we were able to automate the deployment of workflows. But there's no good way to automate the deployment of schedules.

The best approach I've heard of is to do a complete dump of the MongoDB instance in the lower environment, move that file, and ultimately restore it to the prod environment, which is messy.

Even using the Gallery, an admin still has to go through the process of setting up schedules for deployed apps.

There should be a REST call to export and import app schedules from one environment to the next within the Gallery.

Best,

dK


If a job fails, it would be ideal to set an option in the workflow settings so that the job retries after X minutes, up to Y times. We have jobs that connect to external resources, and sometimes the network resets and all the connections drop. For example, I want a workflow to try again in 10 minutes for a maximum of 5 times, so if it keeps failing it will retry every 10 minutes over the next 50 minutes.
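The requested behavior is a fixed-delay retry with a cap on the number of attempts. Below is a minimal Python sketch, assuming the job can be wrapped as a callable that raises an exception on failure. `run_with_retries` and its parameters are hypothetical names, not an existing Alteryx workflow setting. With the defaults (10 minutes, 5 retries), a job that keeps failing is retried every 10 minutes over the next 50 minutes, matching the example above.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def run_with_retries(
    job: Callable[[], T],
    retry_delay_minutes: float = 10,
    max_retries: int = 5,
    sleep: Callable[[float], None] = time.sleep,  # injectable so the logic can be tested without waiting
) -> T:
    """Run job(); on failure, wait retry_delay_minutes and retry, at most max_retries extra times."""
    retries_used = 0
    while True:
        try:
            return job()
        except Exception:  # a real setting might only retry connection-type errors
            if retries_used >= max_retries:
                raise  # retries exhausted: surface the last error as a failed run
            retries_used += 1
            sleep(retry_delay_minutes * 60)  # delay is given in minutes, sleep takes seconds
```

Retrying on a fixed interval keeps the behavior predictable for schedulers; an exponential backoff would be a different design choice.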

Hi

My idea is to move scheduling into the Gallery instead of the separate scheduler built into Designer. The reasons for this:

- The current scheduler authenticates with Alteryx Server via a token, which is a big security concern: it allows all users to see all schedules, workflows, output logs, etc. for the entire server.

- It would integrate better with Alteryx 10 version control. The current scheduler shows all prior versions in the workflow list.

- It would allow the server/Gallery admin to set up scheduling rules. Ex: no scheduling during certain windows (for maintenance downtimes), a maximum scheduling frequency, preventing users from scheduling too many jobs at the same time, and user-level rules.

- It would allow scheduled workflow output to be retrieved from the "Workflow Results" section by the user who scheduled the workflow. Ex: I schedule a workflow that takes 2 hours to run at 6AM each day; by the time I arrive at the office at 8AM, I can log in to the Gallery and download the output.
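The scheduling rules in the third point could be checked when a user submits a schedule. Here is a minimal Python sketch under stated assumptions: blackout windows are daily time ranges (possibly wrapping past midnight), "max frequency" is modeled as a minimum gap between runs, and "too many jobs at the same time" as a per-user cap on jobs starting in the same minute. `SchedulingRules`, `in_window`, and `check_schedule` are illustrative names, not an existing Alteryx Server configuration.

```python
from dataclasses import dataclass
from datetime import datetime, time, timedelta


@dataclass
class SchedulingRules:
    # All fields are hypothetical admin settings.
    blackout_windows: list[tuple[time, time]]  # daily [start, end) windows, e.g. maintenance
    min_interval: timedelta                    # "max frequency" as a minimum gap between runs
    max_concurrent_per_user: int               # jobs one user may start in the same minute


def in_window(t: time, start: time, end: time) -> bool:
    """True if t falls in [start, end); handles windows that wrap past midnight."""
    if start <= end:
        return start <= t < end
    return t >= start or t < end


def check_schedule(rules: SchedulingRules, run_times: list[datetime],
                   user_existing_starts: list[datetime]) -> list[str]:
    """Return rule violations for a proposed schedule; an empty list means it is allowed."""
    problems = []
    runs = sorted(run_times)
    for rt in runs:
        for start, end in rules.blackout_windows:
            if in_window(rt.time(), start, end):
                problems.append(f"{rt:%Y-%m-%d %H:%M} falls in blackout window {start}-{end}")
        slot = rt.replace(second=0, microsecond=0)
        same_slot = sum(1 for s in user_existing_starts
                        if s.replace(second=0, microsecond=0) == slot)
        if same_slot + 1 > rules.max_concurrent_per_user:
            problems.append(f"{rt:%Y-%m-%d %H:%M} exceeds {rules.max_concurrent_per_user} "
                            "concurrent job starts for this user")
    # Consecutive runs closer together than the allowed frequency.
    for earlier, later in zip(runs, runs[1:]):
        if later - earlier < rules.min_interval:
            problems.append(f"runs at {earlier:%H:%M} and {later:%H:%M} are closer than {rules.min_interval}")
    return problems
```

Returning a list of messages rather than a single yes/no lets the Gallery tell the user exactly which rule a proposed schedule breaks.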

Thanks,

Ryan

When a process runs in the foreground, the GUI does an excellent job of showing the user which "step" the job is on and how much data is flowing through the active tools. When the same job is scheduled, the only information available is when the job began execution.

If a tool could report checkpoint status to the user, we could better monitor the progress of scheduled jobs. This visibility matters most for long-running jobs. Unfortunately, we have had to cancel and restart jobs without knowing how close to or how far from finishing they were.

Thanks for your consideration,

Mark
