Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea? Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> at least, allow the tool to use black & white images (I wanted to test it on the MNIST, but the tool tells me that it necessarily needs RGB images) ?

Question: will you, in the future, allow the user to choose between CPU and GPU usage?

In any case, thank you again for this new tool, it is certainly perfectible, but very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible thanks to image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


It would be helpful to redirect Help Documentation searches to the latest version number. When Googling problems I often get help page links that are for version 9.5 while I'm on version 11.7. I'll usually change the version number in the URL to get to the right documentation. It would be great if the version <11 documentation would automatically port forward to the current documentation with the option to go to older documentation for users on those versions.

When building an Alteryx Macro - one of the tough parts is that the input data you put into the Macro Input is used for testing, but you cannot set the type.

So for example - I want to test with the value 1, and Alteryx automatically assumes this is a Byte.

However 1 is just a useful test value, but I need this to be an int 64.

Can we provide the option to strongly type the macro inputs - this way, we can give advanced users the ability to control the type on Macro Inputs, and not run into this sort of issue with test data implicitly defining the type?

Note: this is similar to the idea here:

Desperately looking for a way to connect to SQL Server Analysis Services through Alteryx, as more and more of our large datasets from our older systems are moving there in the next few months. We can connect using Power BI, with limitations (connecting 'Live' does not allow merge, and connecting with Import requires MDX or DAX syntax). We run into import and export limitations, too. We are not allowed access to the underlying tables, only to the tables with the measures, dimensions, and fields. Power BI is a big step up from pivot tables, but Alteryx would be so much better. Ideas for connecting this up are welcome!

A user may need to perform regression tests on their workflows when there is a version upgrade of Alteryx. To save users time and effort, users can be encouraged to submit a few workflows to a secure area of the Alteryx Gallery. Prior to a version release, the Alteryx product testing team can run automated regression tests against these workflows. When users then receive an upgraded version of Alteryx, it is more robust and comes with the added assurance that the workflows they submitted will continue to work without errors.

Hello,

We use the Pre-SQL statement of the Input tool to set some connection parameters. Sadly, we cannot do that in an In-DB workflow. This would be a game-changing feature for us.

Best Regards,

Simon

Alteryx has the ability to connect to data sources using fat clients and ODBC but not JDBC. If the ability to use JDBC could be added to the product it could remove the need to install fat clients.

I need to be able to connect to the Salesforce CampaignInfluence object, which is only available through API v37 or later. Currently, the Salesforce connector uses REST API v36, whereas the latest version is v41 (about a year's gap between v36 and v41). I was told that there was no immediate plan to update the default connector to the latest version. It would be nice to have visibility on objects available in the newer versions.

Apache ORC is commonly used in the context of Hive, Presto, and AWS Athena. A common pattern for us is to use Alteryx to generate Apache Avro files, then convert them to ORC using Hive. For smaller data sizes, it would be convenient to be able to simply output data in the ORC format using Alteryx and skip the extra conversion step.

ORC supports a variety of storage options that users may wish to override from sensible Alteryx defaults. We typically use the SNAPPY compression codec.

In the community and in mixed teams - it's very common for people to be caught on the error that "This document was created in a more recent version". Although there are several workarounds (e.g. this one from @WayneWooldridge here https://community.alteryx.com/t5/Alteryx-Knowledge-Base/Adjusting-Alteryx-Files-for-Different-Versio...), this seems like it may be an easy problem to solve more permanently.

Could we add an option to Alteryx to save the file with the lowest compatible version number?

So - for example - if I'm only using components that shipped with version 10, then please mark the file as version 10. If I've used a tool that shipped in 11.0.6, then that needs to be the version number.

This way - files will be back-compatible as far as is possible by default unless using newer components.

Many thanks

Sean
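The "lowest compatible version" rule described above can be sketched in a few lines of Python. This is only an illustration of the idea, not how Alteryx stores or computes file versions: the `TOOL_INTRODUCED_IN` table, its tool names, and its version numbers are all hypothetical.

```python
# Hypothetical lookup: tool name -> first release that shipped it.
# The names and versions are illustrative, not real Alteryx metadata.
TOOL_INTRODUCED_IN = {
    "Input Data": (10, 0, 0),
    "Select": (10, 0, 0),
    "Formula": (10, 0, 0),
    "New Tool X": (11, 0, 6),
}


def lowest_compatible_version(tools_used, baseline=(10, 0, 0)):
    """Return the newest 'introduced in' version among the tools used.

    A file can be opened by any release at least as new as the newest
    tool it contains, so that version is the lowest compatible one.
    """
    versions = [TOOL_INTRODUCED_IN[name] for name in tools_used]
    return max(versions, default=baseline)


def format_version(version):
    """Render a version tuple such as (11, 0, 6) as '11.0.6'."""
    return ".".join(str(part) for part in version)


if __name__ == "__main__":
    print(format_version(lowest_compatible_version(["Input Data", "Select"])))
    print(format_version(lowest_compatible_version(["Select", "New Tool X"])))
```

Comparing versions as tuples of integers avoids the string-comparison trap where "9.5" would sort after "11.0".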

I know this has been posted before, but the posts are fairly old, and I have just confirmed with Support that it is still an issue. Seems to be a pretty basic request, so I'm putting it out there again under this new heading.

The issue is that if you have data in a field, and you have that data separated by a new line (\n), it will show up fine in a browse tool, or pretty much any other output (database file, Office Document file, etc.). But if you try to use the Table Tool under Reporting, it ignores the line break and strings the data together.

Example:

The field data looks like this in a browse or most other outputs:

Hello, my name is

Michael Barone

and I love

Alteryx

But when I try to pull this field into a Table Tool, it shows up like this:

Hello, my name is Michael Barone and I love Alteryx

Putting this out here again in hopes that it gets lots and lots of stars so it gets put on the road map!!

We have Alteryx running in AWS, which seems to be a common setup. Our AWS instances are set up with IAM roles, which was one of the security measures applied in order to finally allow our enterprise company to permit some development in the cloud. IT will not allow the sharing of access keys to connect to S3.

- Would like to use the AWS S3 Tools from the connectors palette as the AWS CLI has limited ability to handle/report exceptions or issues with any detail. At the moment, we are limited on what goes into production as we are using CLI for what we can.

- Ideally, an option would be added to the S3 Tools allowing the user to select IAM roles rather than key access. Refer to the attached screenshot.

When you click on 'Open results in a new window' or view results in 'Results - Browse', it would sometimes be nice to have a freeze pane feature so you can pin column(s) to the left and scroll to the right.

Hello,

I had a business case requiring a cost effective and quick storage solution for real time online sourced survey data from customers. A MongoDB instance would fit the need, so I quickly spun up a cluster on Mongo Atlas. Atlas was launched by MongoDB in 2016 as a database-as-a-service deployed on AWS. All instances for Atlas require TLS/SSL to connect. Currently, the Alteryx MongoDB connector does not support TLS/SSL connections and doesn't work against Atlas. So, I was left with a breakdown in my plan that would require manual intervention before ingesting data to Alteryx (not ideal).

Please consider expanding this functionality on all connectors. I am building Alteryx out in my agency as a data platform that handles sensitive customer information (name, address, email, etc.). Most tools I use to connect to secure servers today support this type of connection and should be a priority for Alteryx to resolve.

Thanks,

Mike Schock

Currently the BROWSE tool shows numeric data in raw format. It would be easier to evaluate a column of data if the data was right justified and formatted with the decimal point aligned.

This would change this

234.56788

12.0

.098

to

234.567

12.000

.098
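The requested display can be approximated with a small formatting helper. The Python below is a sketch of the idea only, not the Browse tool's rendering logic, and `align_decimals` is a name made up for illustration. It differs from the example above in two ways: it rounds rather than truncates, so 234.56788 displays as 234.568, and it prints a leading zero for values below 1.

```python
def align_decimals(values, places=3):
    """Format numbers with a fixed number of decimals, right-justified.

    Because every value gets the same number of decimal places,
    right-justifying to a common width lines up the decimal points.
    """
    formatted = [f"{value:.{places}f}" for value in values]
    if not formatted:
        return []
    width = max(len(text) for text in formatted)
    return [text.rjust(width) for text in formatted]


if __name__ == "__main__":
    for line in align_decimals([234.56788, 12.0, 0.098]):
        print(line)
```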

Please enhance the input tool to have a feature you could select to test if the file is there and another to allow the workflow to pause for a definable period if the input file is locked by another user, then retry opening. The pause time-frame would be definable for N seconds and the number of iterations it would cycle through should be definable so you can limit how many attempts to open a file it would try.

File presence should be something we could use to control workflow processing.

A use case would be a process that runs periodically and looks to see if a file is there and if so opens and processes it. But if the file is not there then goes to sleep for a definable period before trying again or simply ends processing of the workflow without attempting to work any downstream tools that might otherwise result in "errors" trying to process a null stream.

An extension of this idea and the use case would be to have a separate tool that could evaluate a condition like a null stream or field content or file not found condition and terminate the process without causing an error indicator, or perhaps be configurable so you could cause an error to occur or choose not to cause an error to occur.

Using this latter idea, we would have an enhanced input tool that can pass a value downstream or generate a null data stream to the next tool. That next tool, like a filter tool, can then evaluate a condition (a null stream, a file-not-found indicator, or some other condition) and terminate processing per its configuration, either with or without a failure indicated, according to the wishes of the user. I have had times when a file was not there and I just wanted the workflow to stop without throwing errors; other times I may want it to error out so that I investigate. In other scenarios, my data goes through a filter or two, no data passes the last filter, and the downstream tools still run and generally fail because they have no data to act on. I don't want that: it may be perfectly valid that on a Sunday or holiday no data passes the filters.

Having meandered through this, I sum up with the ideal being to enhance the input tool so it can test file presence and pass that information on to another tool that evaluates it and controls the workflow run accordingly. As a separate tool, it could be applied to a wider variety of scenarios and test a broader scope of conditions to decide whether to proceed or terminate the workflow.
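The behavior requested above (test for the file, pause and retry a limited number of times, then either stop quietly or raise an error) can be sketched outside Alteryx in plain Python. This is an illustration of the control flow only. The names `wait_for_file` and `run_if_present`, and the `on_missing` option, are hypothetical and do not correspond to any existing Alteryx setting.

```python
import time


def wait_for_file(path, retries=3, pause_seconds=1.0, sleep=time.sleep):
    """Try to open `path` for reading, pausing between failed attempts.

    Returns True as soon as the file can be opened, or False once all
    attempts are used up. A missing file and a locked file (reported as
    PermissionError on some systems) are both treated as "not ready".
    """
    for attempt in range(retries):
        try:
            with open(path, "rb"):
                return True
        except (FileNotFoundError, PermissionError):
            if attempt < retries - 1:
                sleep(pause_seconds)
    return False


def run_if_present(path, process, on_missing="stop", **wait_options):
    """Run `process(path)` only if the file becomes available.

    on_missing="stop" ends quietly and returns None;
    on_missing="error" raises so the run is flagged as failed.
    """
    if wait_for_file(path, **wait_options):
        return process(path)
    if on_missing == "error":
        raise FileNotFoundError(path)
    return None
```

Passing `sleep` as a parameter keeps the pause configurable and lets the retry loop be exercised without actually waiting.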

This functionality would allow the user to select (through a highlight box, or ctrl+click), only the tools in a workflow they would want to run, and the tools that are not selected would be skipped. The idea is similar to the new "add selected tools to a new tool container", but it would run them instead.

I know the conventional wisdom is to either put everything you don't want run into a tool container and disable it, or to just copy/paste the tools you want run into a blank workflow. However, for very large workflows, it is very time consuming to disable a dozen or more containers, only to re-enable them shortly afterwards, especially if those containers have to be created to isolate the tools that need to be run. Overall, this would be a quality of life improvement that could save the user some time, especially with large or cumbersome workflows.

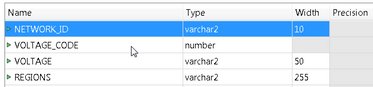

Currently when creating a table in Oracle in Alteryx there is a lot of "magic" that happens under the hood in converting Alteryx data types to Oracle data types.

For example, Fixed Decimal creates NUMBER, String creates CHAR, and V_String creates VARCHAR.

It would be great to have an option to review the Oracle data types in the Output Data Tool when writing to Oracle. This would improve efficiency when using Alteryx to create Oracle tables.

See picture for example of what would display in output configuration.


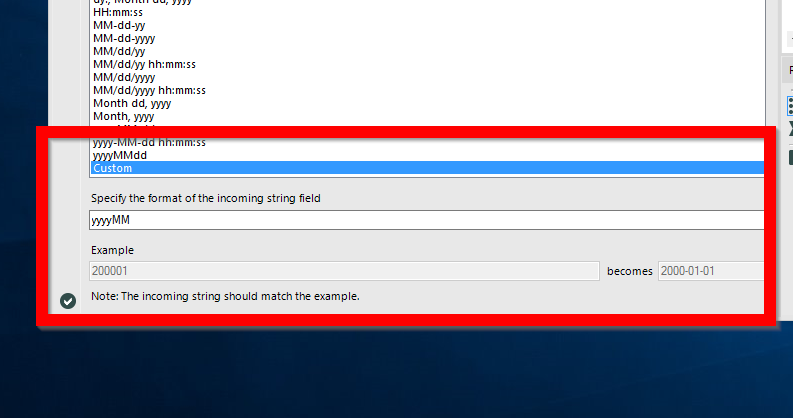

I love the new Custom Format option with the DateTime tool in Alteryx 11.0, this makes working with dates SO MUCH easier... BUT it would be great if you could update an existing field rather than having to create a new column (e.g. DateTime_Out) and then use a select to put this back to the original Date field.

Hey there,

The performance profiling option on the "runtime" tab is very helpful to identify bottlenecks on a long-running workflow. However this is missing (along with the entire "Runtime" tab) if I change this to a macro.

Given that the only way to build relatively complex dependent chain jobs is to wrap them in dummy batch macros (using a macro like a sub-procedure with flow-of-control on the master canvas), most of our work is done in macros, so it would be helpful to be able to performance profile them during testing.

It would be good if the Email Tool could be enhanced so that it can send HTML e-mails, by that I mean the body of the e-mail is HTML based on a field in the workflow that contains a string of HTML.

Currently we are having to use batch files with command line e-mail clients to send e-mail with HTML generated within Alteryx workflows.
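For comparison, this is roughly what building such a message looks like with Python's standard library. It is a sketch of the general technique (a multipart message with a plain-text fallback and an HTML body taken from a string), not a description of how the Email Tool works. The function name `build_html_email` and the addresses in the usage note are placeholders.

```python
from email.message import EmailMessage


def build_html_email(sender, recipient, subject, html_body):
    """Build a multipart/alternative email whose main body is HTML.

    `html_body` stands in for the workflow field that holds the HTML
    string. A short plain-text part is included as a fallback for mail
    clients that cannot render HTML.
    """
    message = EmailMessage()
    message["From"] = sender
    message["To"] = recipient
    message["Subject"] = subject
    message.set_content("This message requires an HTML-capable mail client.")
    message.add_alternative(html_body, subtype="html")
    return message


if __name__ == "__main__":
    email = build_html_email(
        "reports@example.com",
        "team@example.com",
        "Daily totals",
        "<html><body><b>Total</b>: 42</body></html>",
    )
    print(email.get_content_type())
```

The resulting object can be handed to `smtplib.SMTP(...).send_message(email)`; the SMTP host and credentials depend on your mail server and are not shown here.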




