Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by displaying the image dimension constraints next to each of the pre-trained models;

> by adding a proper tool to split the training data correctly (so that each label has an equal number of images);

> and finally, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me it requires RGB images).
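To illustrate the kind of balanced split asked for in the second point, here is a minimal Python sketch (not an Alteryx feature). It assumes the training data is a list of `(image, label)` pairs, randomly downsamples every label to the size of the smallest one, and then splits each label with the same ratio. The function name `balanced_split`, the 80/20 default, and the downsampling strategy are illustrative assumptions.

```python
import random
from collections import defaultdict


def balanced_split(samples, train_ratio=0.8, seed=42):
    """Split (item, label) pairs so that every label contributes the same
    number of images to training and the same number to validation.

    Labels with more images are randomly downsampled to the size of the
    smallest label before splitting.
    """
    by_label = defaultdict(list)
    for item, label in samples:
        by_label[label].append(item)
    if not by_label:
        return [], []

    rng = random.Random(seed)
    per_label = min(len(items) for items in by_label.values())
    n_train = int(per_label * train_ratio)

    train, valid = [], []
    for label in sorted(by_label):
        chosen = rng.sample(by_label[label], per_label)  # downsample
        train.extend((item, label) for item in chosen[:n_train])
        valid.extend((item, label) for item in chosen[n_train:])
    return train, valid


if __name__ == "__main__":
    data = [(f"cat_{i}.png", "cat") for i in range(10)]
    data += [(f"dog_{i}.png", "dog") for i in range(25)]
    train, valid = balanced_split(data)
    print(len(train), len(valid))  # 8 per label in train, 2 per label in valid
```

Downsampling discards surplus images; a tool could instead offer oversampling or class weights, which is a design choice this sketch does not cover.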

Question: will you allow users to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It certainly has room for improvement, but it is very simple to use, and I sincerely believe it will help many more people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


Please enhance the Input tool with an option to test whether the file exists, and another option that makes the workflow pause for a definable period if the input file is locked by another user, then retry opening it. The pause would be definable as N seconds, and the number of retries should also be definable so you can limit how many attempts are made to open the file.

File presence should be something we could use to control workflow processing.

A use case would be a process that runs periodically, checks whether a file is there, and if so, opens and processes it. If the file is not there, the process either sleeps for a definable period before trying again or simply ends the workflow without running any downstream tools that would otherwise throw "errors" trying to process a null stream.

An extension of this idea would be a separate tool that evaluates a condition, such as a null stream, field content, or a file-not-found condition, and terminates the process without raising an error indicator, or is configurable so you can choose whether or not an error is raised.

Combining these ideas, an enhanced Input tool could pass a value downstream or send a null data stream to the next tool. That next tool would evaluate a condition, much like a Filter tool (a null stream, a file-not-found indicator, or some other condition), and terminate processing according to its configuration, with or without a failure, as the user wishes. Sometimes a file is not there and I just want the workflow to stop without throwing errors; other times I may want it to error out so that I investigate. In other scenarios, my data goes through a filter or two, no records pass the last filter, and the downstream tools still run and generally fail because they have no data to act on. I don't want that: it may be perfectly valid that no data passes the filters on a Sunday or a holiday.

To sum up: ideally, the Input tool would be able to test for file presence and pass that information to another tool that evaluates it and controls the workflow run accordingly. As a separate tool, it could be applied to a wider variety of scenarios and test a broader range of conditions to decide whether to proceed with or terminate the workflow.
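The pause-and-retry behaviour described above can be sketched outside Alteryx in a few lines of Python. This is not an Alteryx API; `open_when_available`, `run_workflow`, and their parameters are hypothetical names standing in for the requested options (pause of N seconds, maximum number of attempts, and a switch for ending quietly versus with an error). It assumes a locked file surfaces as `PermissionError`, which is typical on Windows but not guaranteed on every platform.

```python
import time
from pathlib import Path


def open_when_available(path, pause_seconds=30, max_attempts=5,
                        fail_if_missing=False):
    """Read a file, pausing and retrying while it is missing or locked.

    Returns the file's bytes. If the file is still unavailable after
    max_attempts tries, returns None (stop quietly) or raises RuntimeError
    (stop with an error), depending on fail_if_missing.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return Path(path).read_bytes()
        except (FileNotFoundError, PermissionError):
            # FileNotFoundError: the file is not there (yet).
            # PermissionError: on Windows this is typically what you get
            # when another user or process holds the file open with a lock.
            if attempt < max_attempts:
                time.sleep(pause_seconds)
    if fail_if_missing:
        raise RuntimeError(f"{path} unavailable after {max_attempts} attempts")
    return None


def run_workflow(path, pause_seconds=60, max_attempts=3):
    """Gate the rest of the run on the input being available."""
    data = open_when_available(path, pause_seconds, max_attempts)
    if data is None:
        return "skipped"  # e.g. no file on a Sunday: end without an error
    # ... downstream processing would go here ...
    return "processed"
```

Returning `None` rather than an empty result is what lets the caller skip every downstream step instead of feeding them a null stream.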

This functionality would allow the user to select (with a selection box or Ctrl+click) only the tools in a workflow they want to run; the tools that are not selected would be skipped. The idea is similar to the new "Add selected tools to a new Tool Container" option, but it would run them instead.

I know the conventional wisdom is either to put everything you don't want to run into a Tool Container and disable it, or to copy/paste the tools you want to run into a blank workflow. However, for very large workflows, it is very time-consuming to disable a dozen or more containers only to re-enable them shortly afterwards, especially if those containers have to be created just to isolate the tools that need to run. Overall, this would be a quality-of-life improvement that could save users time, especially with large or cumbersome workflows.
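One way to picture "run only the selected tools" is to order the whole workflow by its connections and then execute just the selected tools in that order. The Python sketch below does this with the standard library's `graphlib.TopologicalSorter` (Python 3.9+). The function name, the `upstream` dictionary shape, and the `run_tool` callback are illustrative assumptions, not how Designer works internally; in particular, it does not address where a selected tool gets its input when its upstream tool is skipped (that would need cached results).

```python
from graphlib import TopologicalSorter  # Python 3.9+


def run_selected(upstream, selected, run_tool):
    """Run only the selected tools, in an order that respects the
    workflow's connections; unselected tools are skipped.

    upstream: dict mapping each tool ID to the set of tool IDs that feed it.
    selected: set of tool IDs the user highlighted (box or Ctrl+click).
    run_tool: callback that executes one tool.
    """
    executed = []
    for tool_id in TopologicalSorter(upstream).static_order():
        if tool_id in selected:
            run_tool(tool_id)
            executed.append(tool_id)
    return executed
```

Filtering a full topological order keeps every selected tool after the selected tools it depends on, without the user having to build and disable containers.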

When using the Output Data tool, it would save me (and my cluttered organizational skills) a lot of effort if the workflow that wrote the file were saved as part of the .yxdb metadata.

I've often had to search for the workflow that created a .yxdb. I use naming conventions to help, but it would be easier if the workflow's file name and/or path were readily available.

cheers,

mark

Hey there,

The performance profiling option on the "Runtime" tab is very helpful for identifying bottlenecks in a long-running workflow. However, this option (along with the entire "Runtime" tab) is missing if I change the workflow to a macro.

Given that the only way to build relatively complex chains of dependent jobs is to wrap them in dummy batch macros (using a macro like a sub-procedure, with flow of control on the master canvas), most of our work is done in macros, so it would be helpful to be able to profile their performance during testing.

Presently, when mapping an Excel file to an Input tool, the tool only recognizes sheets; it does not recognize named tables (ranges) as possible inputs. When using Power BI to read Excel inputs, I can select either sheets or named ranges as input. The Alteryx Input tool should do the same.

In nearly all versions of Alteryx Designer, you can use the Annotation tab to rename a tool. This is great for execution in Designer, because you can then easily search for specific tool names, better document your workflow, and see the custom tool name in the Workflow Results.

However, when log files are generated, whether via email, the Alteryx Gallery settings, or an AlteryxEngineCMD command, each tool is recorded only by its default name, "ToolId <tool number>", which is not particularly descriptive and makes these log files harder to parse when an error occurs.

Having the custom names appear in these log files would go a long way towards improving log readability for enterprise systems, and would be an amazing feature add/fix. For users who prefer the default format, this could be treated as a request to ADD the custom names alongside the existing format. For example, an "Input Data 1" tool that I have renamed to "Load business Excel File" could be shown in the log as:

00:00:0.003 - ToolId 1 - Load business Excel File: 1 record was read from File Finished in 00:00:0.004
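Until such a feature exists, the requested format could be approximated by post-processing the log. The Python sketch below assumes the default line contains `ToolId <n>:` (inferred from the example above) and that a mapping from tool ID to custom name is available; how that mapping is extracted from the workflow file is left out, and `add_custom_names` is a hypothetical helper.

```python
import re

# Assumed default format, based on the example log line above:
# "<elapsed> - ToolId <n>: <message>"
TOOL_ID = re.compile(r"ToolId (\d+):")


def add_custom_names(log_text, custom_names):
    """Insert each tool's Annotation-tab name after its "ToolId <n>".

    custom_names maps tool IDs (int) to custom names; tools that were
    not renamed keep the default format.
    """
    def replace(match):
        tool_id = int(match.group(1))
        name = custom_names.get(tool_id)
        if name is None:
            return match.group(0)
        return f"ToolId {tool_id} - {name}:"

    return TOOL_ID.sub(replace, log_text)
```

Keeping the `ToolId <n>` prefix and only appending the name means existing log parsers that key on tool IDs would keep working.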

Hi Alteryx Devs -

It would be *really tight* to have a drop-down Interface tool that supports autocompletion based on an ODBC connection to a table/column or an AJAX call. I recently had a situation in which we needed to give users the ability to select an address and then run a workflow. But the truth is, our address data is terrible, and what I really needed was to let users start typing the address, then give them a list of choices; they pick the correct (but usually wrongly formatted) address, and I send that value into the workflow.

I could not find a reliable way for a Gallery user to pick an address from our list, so I eventually wound up writing an AJAX component to handle the autocompletion and capture the user input, then post it to a service that would in turn interact with the Gallery through the API, get the response, and send it back to the calling page and on to the user. That is a significant amount of work for something as common on the web as autocompletion.

This would make a lot of gallery operations flow so much more naturally.
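The matching logic behind such a drop-down is small. Here is a Python sketch of it, assuming the candidate addresses have already been loaded (for example from the ODBC table) into a list; `suggest` and its ranking rule (prefix matches before substring matches, case- and whitespace-insensitive) are illustrative choices, not part of any Alteryx or Gallery API.

```python
def suggest(typed, candidates, limit=10):
    """Return up to `limit` candidates matching the typed text.

    Matching ignores case and extra spaces; candidates that start with
    the typed text are ranked before those that merely contain it.
    """
    needle = " ".join(typed.lower().split())
    if not needle:
        return []
    starts, contains = [], []
    for candidate in candidates:
        normalised = " ".join(candidate.lower().split())
        if normalised.startswith(needle):
            starts.append(candidate)
        elif needle in normalised:
            contains.append(candidate)
    return (starts + contains)[:limit]
```

The original (possibly badly formatted) string is returned, so the exact stored value can be passed to the workflow. For a large table, the same idea would more likely be pushed into the database as a `LIKE` query rather than done in memory.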

Thanks for listening!

brian

I would like to see the same functionality that the Output tool has in the Render tool. In the Output tool, you can specify the Excel workbook along with the sheet name you want to output to, meaning the same workbook, different tabs:

C:\Output Files\Example_Worksheet.xlsx|Report_1
C:\Output Files\Example_Worksheet.xlsx|Report_2

This functionality is not currently available in the Render tool. It would be very useful and would cut out some manual work on the back end that requires us to copy/paste from one file to another.

I tried using the Section Break technique that was offered as a suggestion, but it did not do what I needed.

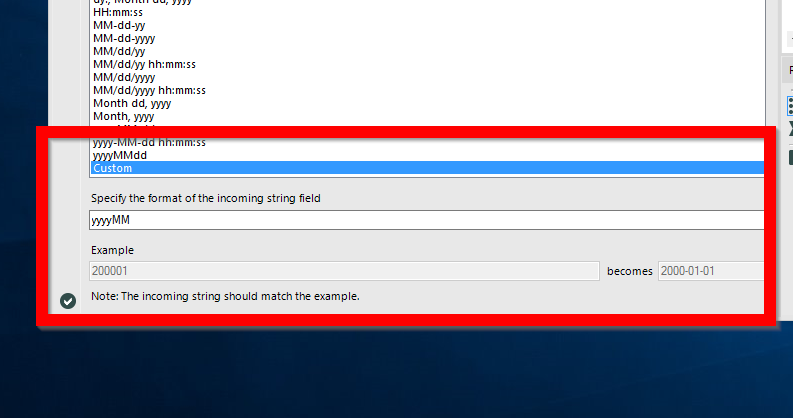

I love the new Custom Format option in the DateTime tool in Alteryx 11.0; it makes working with dates SO MUCH easier... BUT it would be great if you could update an existing field rather than having to create a new column (e.g., DateTime_Out) and then use a Select tool to put it back into the original Date field.

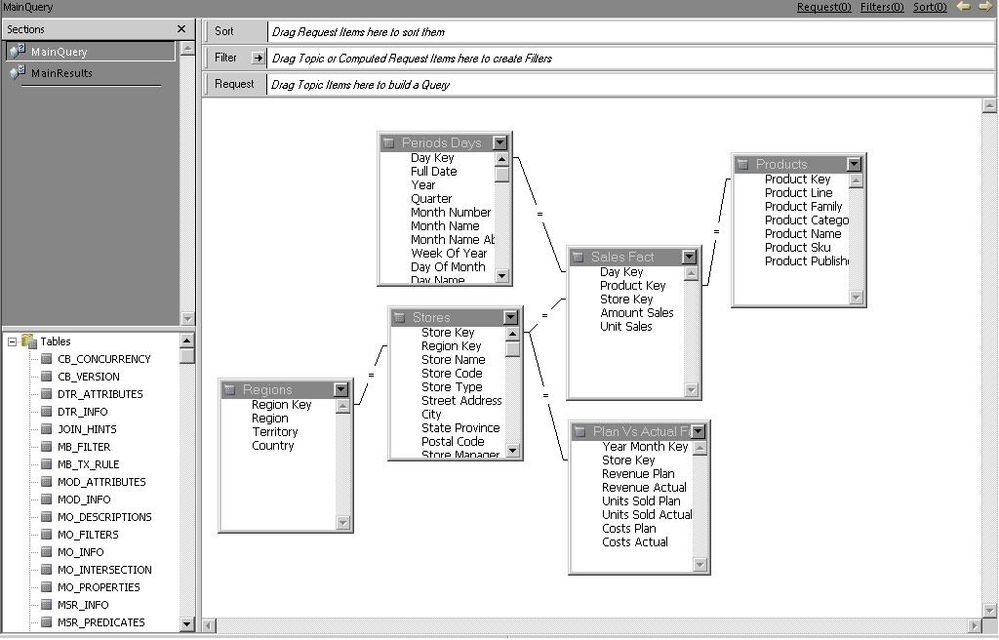

When you use the Visual Query Builder, you can drag and drop tables to arrange them clearly (to show the star or snowflake schema, for instance).

When you close the Visual Query Builder and reopen it, the tables are all left-aligned in a long column, with the joins overlapping each other. Since many of our tables are very wide (i.e., with many columns), this makes it cumbersome to locate the correct table and field.

I would like the manual positioning of the tables to be saved in the Visual Query Builder, to

- Make the logical arrangement clearer to the developer and later users

- Make it easier to locate tables/fields without scrolling downward

This is a feature our users were very accustomed to in Hyperion Intelligence, our legacy BI tool, which works similarly to the Visual Query Builder (shown below).

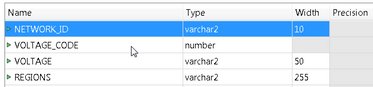

Currently, when creating an Oracle table from Alteryx, a lot of "magic" happens under the hood when converting Alteryx data types to Oracle data types.

For example, FixedDecimal creates NUMBER, String creates CHAR, and V_String creates VARCHAR.

It would be great to have an option to review the Oracle data types in the Output Data Tool when writing to Oracle. This would improve efficiency when using Alteryx to create Oracle tables.

See the picture for an example of what would be displayed in the output configuration.


I reported this to the support team but was told it was by design and to post here.

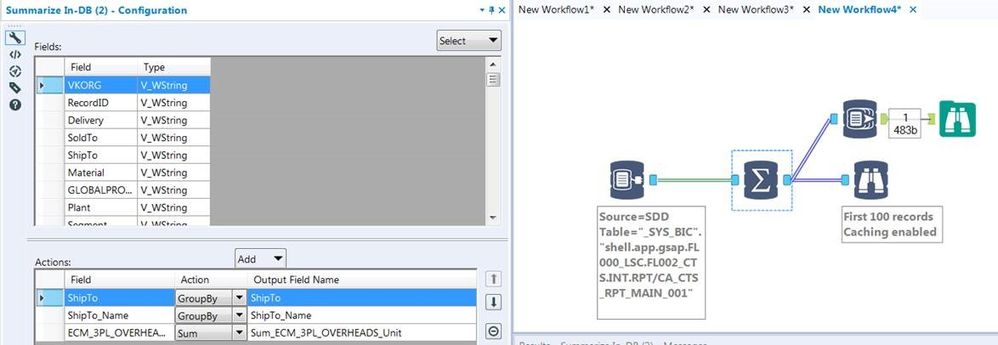
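The preview being requested is essentially a lookup table shown before the write. The Python sketch below encodes only the three conversions named in this idea and flags every other type for review instead of guessing; the dictionary and `preview_oracle_types` are hypothetical and do not reflect Alteryx's full (undocumented here) conversion rules or column sizes.

```python
# Only the three conversions described in this idea; anything else is
# flagged for review rather than guessed.
ALTERYX_TO_ORACLE = {
    "FixedDecimal": "NUMBER",
    "String": "CHAR",
    "V_String": "VARCHAR",
}


def preview_oracle_types(fields):
    """Build (field name, Alteryx type, Oracle type) rows for review
    before the table is created."""
    rows = []
    for name, alteryx_type in fields:
        oracle_type = ALTERYX_TO_ORACLE.get(alteryx_type, "<unknown: review>")
        rows.append((name, alteryx_type, oracle_type))
    return rows
```

A configuration panel could render these rows and let the user override the Oracle column before the CREATE TABLE is issued.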

In-DB Inefficient SQL

I would like to report that the In-DB tools are generating horribly inefficient SQL for simple operations. It seems that no matter which tools you use, every statement starts with a nested 'SELECT * FROM'.

Example of a simple workflow:

This is a simple Select and Group By, but the generated SQL is:

SELECT "ShipTo", "ShipTo_Name", SUM("ECM_3PL_OVERHEADS_Unit") AS "Sum_ECM_3PL_OVERHEADS_Unit"

FROM (SELECT * FROM "_SYS_BIC"."shell.app.gsap.FL000_LSC.FL002_CTS.INT.RPT/CA_CTS_RPT_MAIN_001") AS "a"

GROUP BY "ShipTo", "ShipTo_Name"

This is taking a very long time to execute:

Statement 'SELECT "ShipTo", "ShipTo_Name", SUM("ECM_3PL_OVERHEADS_Unit") AS "Sum_ECM_3PL_OVERHEADS_Unit" FROM ...'

successfully executed in 15.752 seconds (server processing time: 15.699 seconds)

Whereas if I take the same query and remove the nested Select *:

SELECT "ShipTo", "ShipTo_Name", SUM("ECM_3PL_OVERHEADS_Unit") AS "Sum_ECM_3PL_OVERHEADS_Unit"

FROM "_SYS_BIC"."shell.app.gsap.FL000_LSC.FL002_CTS.INT.RPT/CA_CTS_RPT_MAIN_001" AS "a"

GROUP BY "ShipTo", "ShipTo_Name"

It is very quick:

Statement 'SELECT "ShipTo", "ShipTo_Name", SUM("ECM_3PL_OVERHEADS_Unit") AS "Sum_ECM_3PL_OVERHEADS_Unit" FROM ...'

successfully executed in 1.211 seconds (server processing time: 1.157 seconds)

So Alteryx is generating queries that run up to 13x slower than they should (15.752 s versus 1.211 s here), which defeats the point of using In-DB. As you can imagine, in a workflow with multiple Connect In-DB tools, this adds up to a really substantial amount of time. The example above is from SAP HANA, on a source with 1.9M rows and ~90 columns, but we have much bigger tables/views than this.

If you look, you will see the same behaviour for all In-DB tools: each tool wraps the previous query in another nested SELECT with its particular operator.

MY SUGGESTION:

My suggestion is that Alteryx combine the SQL of the first few tools and avoid SELECT * entirely unless no Select tool has been used. It should combine:

- Connect In-DB + Select

- Connect In-DB + Filter

- Connect In-DB + Summarise

Preferably, it should combine/flatten everything up to the first join or union, but Select + Filter are a must!

Note: it seems some databases can un-nest these big nested queries in their query plans for some tables, but normally not for views. Others cannot cope at all, so the In-DB tools cannot even be used to browse 100 records (due to SELECT *).
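The flattening suggested above amounts to emitting one SELECT that carries the column list, WHERE clause, and GROUP BY together, instead of wrapping each step in `SELECT * FROM (...)`. The Python sketch below shows that composition for Connect In-DB + Select/Filter/Summarize only (no joins or unions). It is not how Alteryx generates SQL; `flattened_query` is hypothetical, `source` is assumed to be already quoted, and `where` is passed through as a raw SQL condition.

```python
def quote(identifier):
    """Double-quote an identifier, escaping embedded quotes (ANSI style)."""
    return '"' + identifier.replace('"', '""') + '"'


def flattened_query(source, columns, where=None, group_by=None,
                    aggregates=None):
    """Build a single flat SELECT for Connect In-DB + Select/Filter/Summarize.

    source: already-quoted table or view name, used verbatim.
    aggregates: list of (function, column, alias) tuples.
    """
    select_items = [quote(c) for c in columns]
    for func, column, alias in aggregates or []:
        select_items.append(f"{func}({quote(column)}) AS {quote(alias)}")
    sql = "SELECT " + ", ".join(select_items) + "\nFROM " + source + ' AS "a"'
    if where:
        sql += "\nWHERE " + where
    if group_by:
        sql += "\nGROUP BY " + ", ".join(quote(c) for c in group_by)
    return sql


if __name__ == "__main__":
    # Reproduces the fast, un-nested query from the example above.
    print(flattened_query(
        '"_SYS_BIC"."shell.app.gsap.FL000_LSC.FL002_CTS.INT.RPT/CA_CTS_RPT_MAIN_001"',
        ["ShipTo", "ShipTo_Name"],
        group_by=["ShipTo", "ShipTo_Name"],
        aggregates=[("SUM", "ECM_3PL_OVERHEADS_Unit", "Sum_ECM_3PL_OVERHEADS_Unit")],
    ))
```

Because the column list is explicit, the database never has to expand `SELECT *` over all ~90 columns, which is the part some engines reportedly cannot optimise away for views.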

During the design phase, we experiment and create tables with Alteryx.

But sometimes, after this phase or after a mistake, we need to drop those tables.

We know it's possible to write a DROP TABLE statement in Pre-SQL or Post-SQL, but that requires SQL skills and only works when the tool is writing to a table.

It would be great if we could drop a table directly from the Query Builder of the Input tool by right-clicking the table in the discovery tree.

Extension: it would also be great to have the same option in the HDFS browser.

When converting data types In-DB, it would be really helpful if I could change the data type with the "Select In-DB" tool, in the same way as with the "Select" tool. Currently, we have to use the "Formula In-DB" tool to create a "Cast" statement.

I would like to suggest 2 small changes to make working with Interface tools easier:

1) Let a user change the name of Question Constants from the workflow tab. For example, I would love the ability to change the names of my list boxes below. Currently, I can click in the name box and write new names in, but it doesn't stick.

2) Let the user add the value on the Interface tools under the Annotation tab. Currently, I drop an Interface tool on the canvas, change the name on the Annotation tab, and then have to go to the Workflow tab to set up a value. It would be easier if the value box were also on the Annotation tab:

Pushing data to Salesforce from Oracle would be much easier if we could perform an UPSERT (update if existing, insert if not existing) on any unique ID field in Salesforce, instead of having to use a filter to find the records that do or don't have an ID and then run an Update or Insert based on the filter.
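The UPSERT semantics described above (update if the unique ID exists, insert if not) can be illustrated against an in-memory table. This Python sketch does not call Salesforce; `upsert` and the `Ext_Id` key are hypothetical names used only to show why a single operation replaces the filter-plus-two-outputs workaround.

```python
def upsert(target, records, key):
    """Apply UPSERT semantics to an in-memory table keyed by a unique field:
    update the row if the key already exists, otherwise insert it.

    Returns (inserted, updated) counts.
    """
    inserted = updated = 0
    for record in records:
        record_key = record[key]
        if record_key in target:
            target[record_key].update(record)  # update existing fields
            updated += 1
        else:
            target[record_key] = dict(record)  # insert a new row
            inserted += 1
    return inserted, updated
```

With this behaviour in the connector, the workflow would send one stream and let the target decide per record, rather than splitting the stream with a Filter tool first.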

<Row ID="18606" Order_Priority="Not Specified" Discount="0.01" Unit_Price="2.88" Shipping_Cost="0.5"/>

As well as well-formatted output, like this:

<Property>
  <name>measured_depth</name>
  <dataType>FLOAT32</dataType>
  <numberOfDimensions>1</numberOfDimensions>
  <units>m</units>
  <data>1456.3453</data>
</Property>
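The two snippets above show attribute-style output (one element per record, fields as attributes) and element-style, indented output (one child element per field). As a sketch of both forms, here is Python's standard `xml.etree.ElementTree`; the helper names are hypothetical, the field names are taken from the snippets, and `ET.indent` requires Python 3.9+.

```python
import xml.etree.ElementTree as ET


def row_as_attributes(row):
    """One <Row> element per record, with each field as an attribute."""
    element = ET.Element("Row", {k: str(v) for k, v in row.items()})
    return ET.tostring(element, encoding="unicode")


def property_as_elements(prop):
    """One child element per field, indented for readability."""
    root = ET.Element("Property")
    for name, value in prop.items():
        ET.SubElement(root, name).text = str(value)
    ET.indent(root, space="  ")  # pretty-print in place (Python 3.9+)
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(row_as_attributes({"ID": "18606", "Order_Priority": "Not Specified",
                             "Discount": 0.01, "Unit_Price": 2.88,
                             "Shipping_Cost": 0.5}))
    print(property_as_elements({"name": "measured_depth", "dataType": "FLOAT32",
                                "numberOfDimensions": 1, "units": "m",
                                "data": 1456.3453}))
```

ElementTree writes empty elements as `<Row ... />`, with a space before the slash; that is still well-formed XML.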

One of the most common causes of admin trauma for our central Alteryx Gallery team is dealing with drivers that may be missing on the server, on a particular worker, or on a Designer machine.

What we're looking for is for the Alteryx team to maintain a packaged set of drivers as a single installer, which we could download from the same location as the Alteryx Designer/Server versions.

This would allow us to have one version of every driver across ALL Designer clients, as well as on our workers and servers.

CC: @rijuthav @jithinmony @HengHe @RajK @ydmuley @revathi @Deeksha @MPistone @Ari_Fuller @Arianna_Fuller @JoshKushner @samnelson @avinashbonu @Sunder_Sriram @Rahul_Thakur @Rahul_Singh

When building an Alteryx macro, one of the tough parts is that the data you put into the Macro Input tool is used for testing, but you cannot set its type.

For example, I want to test with the value 1, and Alteryx automatically assumes it is a Byte.

However, 1 is just a convenient test value; I need this field to be an Int64.

Can we provide the option to strongly type the macro inputs - this way, we can give advanced users the ability to control the type on Macro Inputs, and not run into this sort of issue with test data implicitly defining the type?
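The problem can be pictured as "smallest type that fits the test data" inference with no override. The Python sketch below assumes that inference rule (consistent with 1 becoming a Byte, but not confirmed as Alteryx's actual logic) and adds the requested explicit type, which wins whenever it can hold the test values. `infer_integer_type` and `declared` are hypothetical names.

```python
# Alteryx integer types and their ranges; Byte is unsigned (0-255),
# the others are signed.
INTEGER_TYPES = [
    ("Byte", 0, 255),
    ("Int16", -2**15, 2**15 - 1),
    ("Int32", -2**31, 2**31 - 1),
    ("Int64", -2**63, 2**63 - 1),
]
RANGES = {name: (low, high) for name, low, high in INTEGER_TYPES}


def infer_integer_type(values, declared=None):
    """Return the declared type if given (after checking the test values
    fit), otherwise the smallest integer type that holds every value."""
    if declared is not None:
        low, high = RANGES[declared]
        if not all(low <= v <= high for v in values):
            raise ValueError(f"test values do not fit {declared}")
        return declared
    for name, low, high in INTEGER_TYPES:
        if all(low <= v <= high for v in values):
            return name
    raise ValueError("test values do not fit Int64")
```

With a declared type, the test value 1 no longer narrows the macro input's type, which is exactly the control this idea asks for.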

Note: this is similar to the idea here:
