Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels),

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it needs RGB images).

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


Only csv is provided (and json etc) but not .xlsx

Let us know when this can be added to Alteryx

Thanks

I reported this to the support team but was told it was by design and to post here.

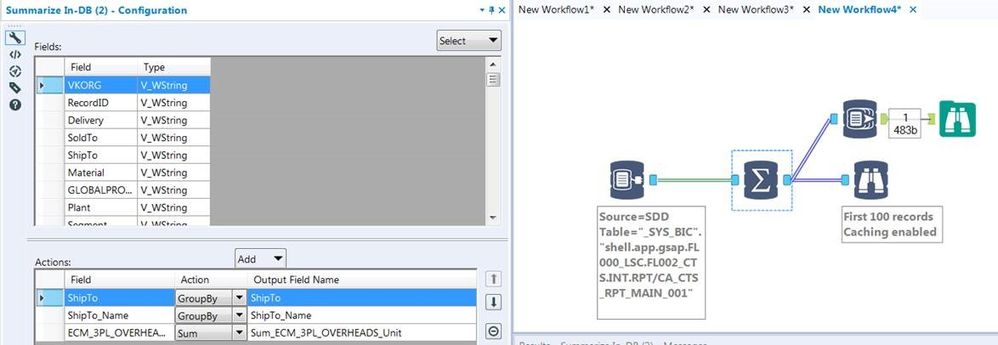

In-DB Inefficient SQL

I would like to report that the In-DB tools are generating horribly inefficient SQL code for simple operations. It seems that no matter which tools you use, every statement starts with a nested 'Select * From'.

Example Simple workflow:

This is a simple Select and Group by but the SQL Generated is:

SELECT "ShipTo", "ShipTo_Name", SUM("ECM_3PL_OVERHEADS_Unit") AS "Sum_ECM_3PL_OVERHEADS_Unit"

FROM (SELECT * FROM "_SYS_BIC"."shell.app.gsap.FL000_LSC.FL002_CTS.INT.RPT/CA_CTS_RPT_MAIN_001") AS "a"

GROUP BY "ShipTo", "ShipTo_Name"

This is taking a very long time to execute:

Statement 'SELECT "ShipTo", "ShipTo_Name", SUM("ECM_3PL_OVERHEADS_Unit") AS "Sum_ECM_3PL_OVERHEADS_Unit" FROM ...'

successfully executed in 15.752 seconds (server processing time: 15.699 seconds)

Whereas if I take the same query and remove the nested Select *:

SELECT "ShipTo", "ShipTo_Name", SUM("ECM_3PL_OVERHEADS_Unit") AS "Sum_ECM_3PL_OVERHEADS_Unit"

FROM "_SYS_BIC"."shell.app.gsap.FL000_LSC.FL002_CTS.INT.RPT/CA_CTS_RPT_MAIN_001" AS "a"

GROUP BY "ShipTo", "ShipTo_Name"

It is very quick:

Statement 'SELECT "ShipTo", "ShipTo_Name", SUM("ECM_3PL_OVERHEADS_Unit") AS "Sum_ECM_3PL_OVERHEADS_Unit" FROM ...'

successfully executed in 1.211 seconds (server processing time: 1.157 seconds)

So Alteryx is generating queries up to 13x slower than they should be (15.752 seconds versus 1.211 seconds here), thereby defeating the point of using In-DB. As you can imagine, in a workflow where we have multiple Connect In-DB tools this adds up to a really substantial amount of time. The example above is from SAP HANA; the source has 1.9m rows and ~90 columns, but we have much bigger tables/views than this.

If you look, you will see the same behaviour for all In-DB tools: each tool creates another nested Select with its particular operator.

MY SUGGESTION:

So my suggestion is that Alteryx should combine the SQL of the first few tools and avoid using SELECT * completely unless no Select tools have been used. So it should combine:

- Connect In-DB + Select

- Connect In-DB + Filter

- Connect In-DB + Summarise

Preferably it should combine/flatten everything up until the first join or union. But Select + Filter are a must!

Note: some DBs seem to cope OK with un-nesting these big nested queries in their query plans for some tables, but normally not for views. Others cannot cope at all, so the In-DB tools cannot even be used to Browse 100 records (due to the select *).
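To make the suggestion concrete, here is a minimal sketch of the two query shapes for a Connect In-DB + Summarise pair. The function names, the `main_view` table, and the sample rows are made up for illustration, and SQLite stands in for the real database, so this only checks that the flattened form returns the same rows; it says nothing about how Alteryx builds its SQL internally or about timing on SAP HANA.

```python
import sqlite3


def nested_sql(table, group_by, sum_column):
    """Shape reported in the post: the source is wrapped in SELECT *."""
    cols = ", ".join(f'"{c}"' for c in group_by)
    alias = f'"Sum_{sum_column}"'
    return (
        f'SELECT {cols}, SUM("{sum_column}") AS {alias} '
        f'FROM (SELECT * FROM "{table}") AS "a" '
        f"GROUP BY {cols}"
    )


def flat_sql(table, group_by, sum_column):
    """Suggested shape: the same aggregation directly on the source."""
    cols = ", ".join(f'"{c}"' for c in group_by)
    alias = f'"Sum_{sum_column}"'
    return (
        f'SELECT {cols}, SUM("{sum_column}") AS {alias} '
        f'FROM "{table}" AS "a" '
        f"GROUP BY {cols}"
    )


# Hypothetical stand-in for the HANA view, with three sample rows.
conn = sqlite3.connect(":memory:")
conn.execute(
    'CREATE TABLE "main_view" '
    '("ShipTo" TEXT, "ShipTo_Name" TEXT, "ECM_3PL_OVERHEADS_Unit" REAL)'
)
conn.executemany(
    'INSERT INTO "main_view" VALUES (?, ?, ?)',
    [("A", "Alpha", 1.5), ("A", "Alpha", 2.5), ("B", "Beta", 4.0)],
)

args = ("main_view", ["ShipTo", "ShipTo_Name"], "ECM_3PL_OVERHEADS_Unit")
nested_rows = sorted(conn.execute(nested_sql(*args)).fetchall())
flat_rows = sorted(conn.execute(flat_sql(*args)).fetchall())
print(nested_rows == flat_rows)
```

Both statements return identical rows; the only difference is whether the optimizer has to un-nest a `SELECT *` subquery first.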

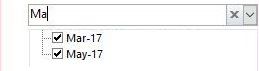

Hi there,

My idea came up when I built an application where the user selects a filter from a drop-down list. The list contains thousands of records, so it takes a lot of time to find the desired record.

In Excel and MS Access, when you use a filter you can type several letters and the filter shows the rows that match the input. In Alteryx the user can only type the first letter, which is a huge drawback for my users.

This is how it works in Excel:

Hope you like it!
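The behaviour requested above can be sketched in a few lines: keep every option that contains the typed text, ignoring case. This is only an illustration of the matching rule; `filter_options` and the sample list are hypothetical and not part of any Alteryx interface tool.

```python
def filter_options(options, typed):
    """Return the options that contain the typed text, case-insensitively."""
    needle = typed.casefold()
    return [option for option in options if needle in option.casefold()]


customers = ["Acme Ltd", "Northwind Traders", "Wind River", "Contoso"]
matches = filter_options(customers, "wind")
print(matches)
```

With thousands of entries, narrowing on any typed substring is what makes a long drop-down usable.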

A common problem with the R tool is that it outputs "False Errors" like the following: "The R.exe exit code (4294967295) indicated an error"

I call this a false error because data passes out of the R script the same as if there were no error. As such, this error can generally be ignored. In my use case, however, my R tool is embedded within an iterative macro, and the error causes the iterator to stop running.

I was able to create a workaround by moving the R tool to a separate workflow and calling it from the CReW runner macro within my iterator, effectively suppressing the error message, but this solution is a bit clumsy, requires unnecessary read/writes, and uses nonstandard macros.

I propose the solution suggested by @mbarone (https://community.alteryx.com/t5/Alteryx-Designer-Discussions/Boosted-Model-Error/td-p/5509) to only generate an error when the R return code is 1, indicating a true error, and to either ignore these false errors or pass them as warnings. This will allow R scripts and R-based tools to be embedded within iterative macros without breaking.
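As a rough sketch of the proposed rule (the function names are hypothetical, and the meaning of each R exit code is taken from the post rather than from R documentation): treat exit code 1 as a true error and downgrade any other non-zero code to a warning. Note that 4294967295 is simply -1 read as an unsigned 32-bit integer.

```python
def to_signed_32(code):
    """Reinterpret an unsigned 32-bit exit code as a signed integer."""
    return code - 2**32 if code >= 2**31 else code


def classify_exit_code(code):
    """Proposed handling: only exit code 1 stops the iterator."""
    if code == 0:
        return "ok"
    if code == 1:
        return "error"
    return "warning"  # e.g. the "false error" 4294967295


print(to_signed_32(4294967295), classify_exit_code(4294967295))
```

Under this rule the iterative macro would keep running on the false error and stop only on a return code of 1.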

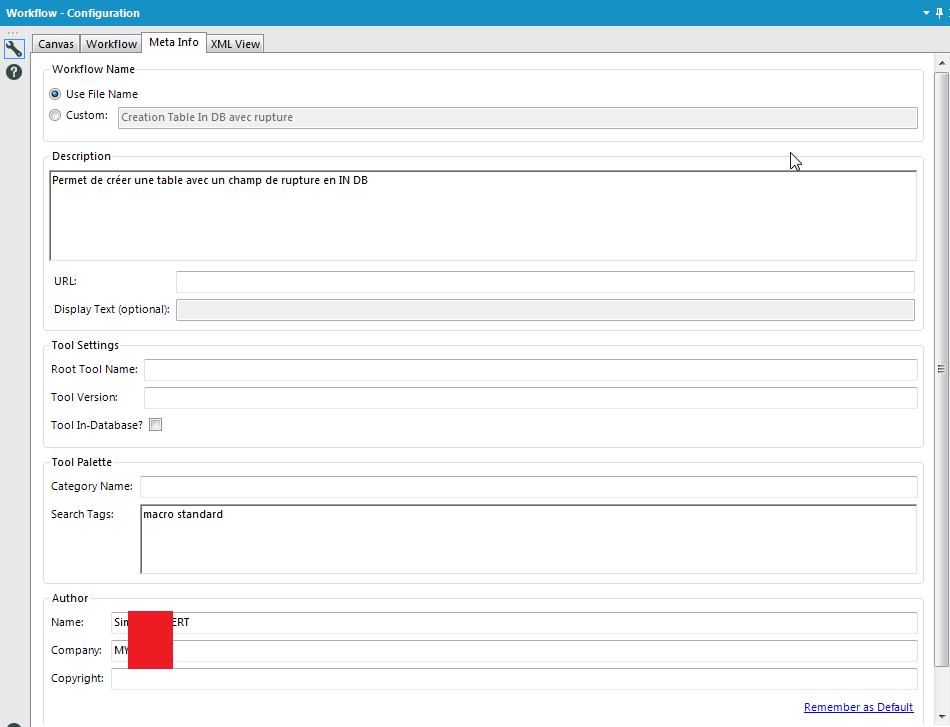

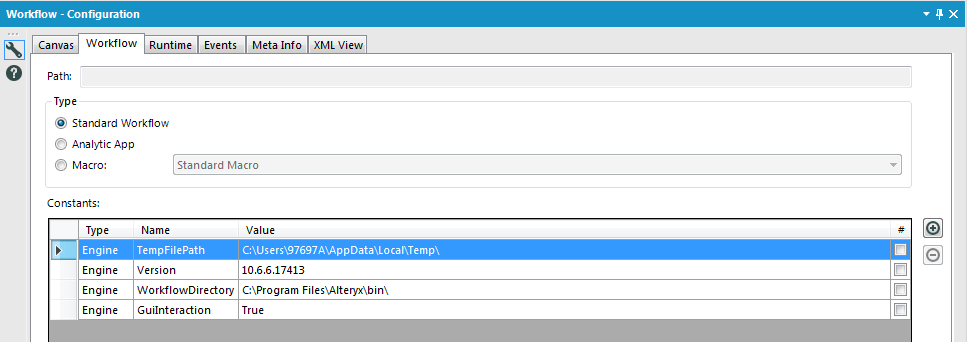

I love Workflow Meta Info, especially the ability to enter the author, the search tags, the version, the description, etc.

But why can't we use these values as Engine Constants? It doesn't seem very hard to implement, and it would make development much easier.

I would like to suggest a fix to allow the In-DB Connect tool's custom SQL to read Common Table Expressions. As of 2018.2, the SQL fails because In-DB tools wrap everything in a select * statement. Since CTEs need to start with WITH, this causes the SQL to error out. This would be a huge help instead of having to write nested sub-selects in long, complex SQL code!

Presently, when mapping an Excel file to an Input tool, the tool only recognizes sheets; it does not recognize named tables (ranges) as possible inputs. When using PowerBI to read Excel inputs I can select either sheets or named ranges as input. The Alteryx Input tool should do the same.

It would be wonderful for Alteryx to be able to connect to and query OData feeds natively, rather than using a 3rd-party driver or custom macro.

OData querying is supported by quite a few familiar products, including Excel and PowerBI, SSIS/SSRS, FME Safe, Tableau, and many others. The protocol is also used to publish feeds from Microsoft Dynamics and Sharepoint, as well as many of the 10,000 publicly available government datasets with APIs (esp. those hosted by Socrata).

I didn't see it in the Ideas section, but questions and workarounds have been discussed in the community a few times (11/15, 3/18, 4/18), and the suggestions seem to be just to buy the $400-600 ODBC driver from CDATA (or ZappySys), or to use a VBA script in Excel to trigger a refresh, or to create my own Alteryx connector macro (great series btw, though most was beyond my understanding!)

While I'm not opposed to paying, kludging, or learning to program, they're just one more thing to build/buy, install, maintain, and break at the most inconvenient time 🙂

Thanks,

Chadd

OData Overview:

OData (Open Data Protocol) is an ISO/IEC approved, OASIS standard that defines a set of best practices for building and consuming RESTful APIs. OData helps you focus on your business logic while building RESTful APIs without having to worry about the various approaches to define request and response headers, status codes, HTTP methods, URL conventions, media types, payload formats, query options, etc. OData also provides guidance for tracking changes, defining functions/actions for reusable procedures, and sending asynchronous/batch requests. OData RESTful APIs are easy to consume. The OData metadata, a machine-readable description of the data model of the APIs, enables the creation of powerful generic client proxies and tools.

More info at http://odata.org
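For readers unfamiliar with the URL conventions mentioned in the overview, here is a small sketch of how an OData query URL is put together from the standard `$select`, `$filter`, and `$top` query options. The service root `https://example.org/odata` and the `Products` entity set are placeholders, and `odata_url` is a hypothetical helper, not an Alteryx or OData library function.

```python
from urllib.parse import quote, urlencode


def odata_url(service_root, entity_set, select=None, filter_expr=None, top=None):
    """Build an OData query URL from a few common system query options."""
    options = {}
    if select:
        options["$select"] = ",".join(select)
    if filter_expr:
        options["$filter"] = filter_expr
    if top is not None:
        options["$top"] = str(top)
    url = service_root.rstrip("/") + "/" + entity_set
    if options:
        # Keep "$" and "," readable; encode spaces as %20 rather than "+".
        url += "?" + urlencode(options, safe="$,", quote_via=quote)
    return url


url = odata_url(
    "https://example.org/odata",  # placeholder service root
    "Products",                   # placeholder entity set
    select=["Name", "Price"],
    filter_expr="Price gt 20",
    top=5,
)
print(url)
```

A native connector would presumably expose these options in the tool configuration instead of requiring a hand-built URL.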

It would be awesome if there was a cross tab in DB option because right now I have to stream out millions of records to build a cross tab.
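One common way to express a cross tab in SQL is conditional aggregation, so the pivot runs in the database and only the small result is streamed out. The sketch below is an assumption about how such a tool could generate its SQL, not a description of any existing Alteryx feature; the `sales` table, its columns, and `crosstab_sql` are invented, and SQLite stands in for the real database.

```python
import sqlite3


def crosstab_sql(table, group_col, header_col, value_col, headers):
    """Build a pivot query with one SUM(CASE ...) column per header value."""
    parts = []
    for header in headers:
        literal = "'" + header.replace("'", "''") + "'"
        parts.append(
            f'SUM(CASE WHEN "{header_col}" = {literal} '
            f'THEN "{value_col}" END) AS "{header}"'
        )
    return (
        f'SELECT "{group_col}", ' + ", ".join(parts)
        + f' FROM "{table}" GROUP BY "{group_col}" ORDER BY "{group_col}"'
    )


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, quarter TEXT, amount INTEGER)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [("East", "Q1", 10), ("East", "Q2", 20), ("West", "Q1", 5), ("East", "Q1", 1)],
)

sql = crosstab_sql("sales", "region", "quarter", "amount", ["Q1", "Q2"])
pivot = conn.execute(sql).fetchall()
print(pivot)
```

The list of header values has to be known before the query is built, which is the main design question for an in-database version.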

From Wikipedia :

In a database, a view is the result set of a stored query on the data, which the database users can query just as they would in a persistent database collection object. This pre-established query command is kept in the database dictionary. Unlike ordinary base tables in a relational database, a view does not form part of the physical schema: as a result set, it is a virtual table computed or collated dynamically from data in the database when access to that view is requested. Changes applied to the data in a relevant underlying table are reflected in the data shown in subsequent invocations of the view. In some NoSQL databases, views are the only way to query data.

Views can provide advantages over tables:

Views can represent a subset of the data contained in a table. Consequently, a view can limit the degree of exposure of the underlying tables to the outer world: a given user may have permission to query the view, while denied access to the rest of the base table.

Views can join and simplify multiple tables into a single virtual table.

Views can act as aggregated tables, where the database engine aggregates data (sum, average, etc.) and presents the calculated results as part of the data.

Views can hide the complexity of data. For example, a view could appear as Sales2000 or Sales2001, transparently partitioning the actual underlying table.

Views take very little space to store; the database contains only the definition of a view, not a copy of all the data that it presents.

Depending on the SQL engine used, views can provide extra security.

I would like to create a view instead of a table.
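To illustrate the point that a view stores only its definition and reflects later changes to the base table, here is a minimal sketch using SQLite. The `orders` table and `customer_totals` view are made-up names; an In-DB write tool with a "create view" option would presumably issue a similar `CREATE VIEW` statement against the target database.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (customer TEXT, amount INTEGER)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)", [("a", 10), ("a", 5), ("b", 7)]
)

# The view stores only the query, not a copy of the data.
conn.execute(
    "CREATE VIEW customer_totals AS "
    "SELECT customer, SUM(amount) AS total FROM orders GROUP BY customer"
)

query = "SELECT customer, total FROM customer_totals ORDER BY customer"
before = conn.execute(query).fetchall()

# A later change to the base table shows up in the view automatically.
conn.execute("INSERT INTO orders VALUES ('b', 3)")
after = conn.execute(query).fetchall()
print(before, after)
```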

Statistics are used by a lot of DBs (Hive, Vertica, etc.) to improve query speed. It would be interesting to have an option on the write In-DB or Data Stream In tools to calculate the statistics (something like a check box).

Example on Hive: ANALYZE TABLE {table} COMPUTE STATISTICS; ANALYZE TABLE {table} COMPUTE STATISTICS FOR COLUMNS;

I noticed through the ODBC driver log that Alteryx ignores the kind of database I specify. It tests every single kind of database to find the right one and THEN applies the queries to get the metadata info.

Here is an example. I have chosen a Hive In-DB connection. If I read the Simba logs, I can find these lines:

Mar 01 11:37:21.318 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select USER(), APPLICATION_ID() from system.iota

Mar 01 11:37:22.863 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select USER as USER_NAME from SYSIBM.SYSDUMMY1

Mar 01 11:37:23.454 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select * from rdb$relations

Mar 01 11:37:23.546 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select first 1 dbinfo('version', 'full') from systables

Mar 01 11:37:23.707 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select #01/01/01# as AccessDate

Mar 01 11:37:23.868 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: exec sp_server_info 1

Mar 01 11:37:24.093 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select top (0) * from INFORMATION_SCHEMA.INDEXES

Mar 01 11:37:24.219 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: SELECT SERVERPROPERTY('edition')

Mar 01 11:37:24.423 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select DATABASE() as `database`, VERSION() as `version`

Mar 01 11:37:24.635 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select * from sys.V_$VERSION at where RowNum<2

Mar 01 11:37:25.230 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select cast(version() as char(10)), (select 1 from pg_catalog.pg_class) as t

Mar 01 11:37:25.415 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select NAME from sqlite_master

Mar 01 11:37:25.756 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select xp_msver('CompanyName')

Mar 01 11:37:26.156 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select @@version

Mar 01 11:37:26.376 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select * from dbc.dbcinfo

Mar 01 11:37:26.522 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: SELECT @@VERSION;

I can understand that when Alteryx doesn't know the kind of database it tries everything (e.g. the in-memory visual query builder), but here I have selected the Hive database and I lose more than 5 seconds for nothing.

Option to select start and end time per day

e.g. between 8:00 AM and 5:00 PM every 2 hours
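A short sketch of what such a schedule would expand to, assuming the end time is inclusive and runs are counted from the start time. `run_times` is a hypothetical helper, not part of the Alteryx Scheduler.

```python
from datetime import date, datetime, time, timedelta


def run_times(start, end, every):
    """List the run times between start and end (inclusive) at a fixed interval."""
    if every <= timedelta(0):
        raise ValueError("interval must be positive")
    day = date(2000, 1, 1)  # arbitrary date, only used for time arithmetic
    current = datetime.combine(day, start)
    last = datetime.combine(day, end)
    times = []
    while current <= last:
        times.append(current.time())
        current += every
    return times


schedule = run_times(time(8, 0), time(17, 0), timedelta(hours=2))
print([t.strftime("%H:%M") for t in schedule])
```

For the example in the post this yields runs at 08:00, 10:00, 12:00, 14:00, and 16:00; the next slot, 18:00, falls outside the window.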

It would be nice to group workflows and their schedules, because it gets confusing if you have a lot of schedules/workflows in the schedule view.

Especially if you have more than one schedule for a workflow.

One way could be to create a folder system, or to manage it through the meta info as with macros.

The error message is:

Error: Cross Validation (58): Tool #4: Error in tab + laplace : non-numeric argument to binary operator

This is odd, because I see that there is special code that handles naive bayes models. Seems that the model$laplace parameter is _not_ null by the time it hits `update`. I'm not sure yet what line is triggering the error.

Hi,

A lot of companies now are deploying on both AWS and Microsoft Azure.

Alteryx supports AWS S3 object storage out of the box. It would be important to support Microsoft Azure Blob storage as part of the native Alteryx product as well.

Cheers,

Adrian

One of the most common causes for Admin trauma for our central Alteryx Gallery team - is dealing with drivers that may not be on the server; or a particular worker; or on a designer.

What we're looking for, is for the Alteryx team to maintain a packaged set of drivers as a single installer - which we can download at the same location as the Alteryx designer / server versions.

This would allow us to have 1 version of all drivers across ALL designer clients; as well as on our workers and servers.

CC: @rijuthav @jithinmony @HengHe @RajK @ydmuley @revathi @Deeksha @MPistone @Ari_Fuller @Arianna_Fuller @JoshKushner @samnelson @avinashbonu @Sunder_Sriram @Rahul_Thakur @Rahul_Singh

Roughly, in all versions of Alteryx Designer, you can use the Annotations tab and rename a tool. This is awesome for execution in designer, because you can then easily search for certain tool names, better document your workflow, and see the custom tool name in the Workflow Results.

However, when log files are generated, either via email, the AlteryxGallery settings, or an AlteryxEngineCMD command, each tool is recorded using only its default name of "ToolId Toolnumber", which is not particularly descriptive and makes these log files harder to parse in the case of an error.

Having the custom names show in these log files would go a long way towards improving log readability for enterprise systems, and would be an amazing feature add/fix. For users who prefer that the default format be shown, this could be considered a request to ADD renames in addition to the existing format. E.g., "Input Data 1" that I have renamed to "Load business Excel File" could be shown in the log as:

00:00:0.003 - ToolId 1 - Load business Excel File: 1 record was read from File Finished in 00:00:0.004
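The requested format can be sketched as a small formatting rule: keep the existing "ToolId N" prefix and append the custom name only when one has been set. `format_log_line` and its parameters are hypothetical; the real log writer is internal to Alteryx.

```python
def format_log_line(elapsed, tool_id, message, custom_name=None):
    """Format one log line, adding the renamed tool label when present."""
    label = f"ToolId {tool_id}"
    if custom_name:
        label += f" - {custom_name}"
    return f"{elapsed} - {label}: {message}"


renamed = format_log_line(
    "00:00:0.003", 1, "1 record was read from File", "Load business Excel File"
)
default = format_log_line("00:00:0.003", 1, "1 record was read from File")
print(renamed)
print(default)
```

Because the default prefix is unchanged, existing log parsers that key on "ToolId N" would keep working.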

I would like to see a time interface tool similar to the Date and Numeric Up Down tools. I am working on some macros where the user can select the time they would like to use as a filter for the data.

Example: I want all data loaded after 5:00 PM because it's late and needs to be removed.

Example 2: I want to create an app where the user can select what time range they would like to see records for (business hours, during their shift, etc.)

Currently this requires 2-3 Numeric Up Downs, or a Text Box with directions for the user on how to format the field plus Error tools to prevent bad entries. It could even be UTC time.
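To show the validation burden a dedicated time tool would remove, here is a minimal sketch of what the Text Box workaround has to do: parse the typed value, reject bad entries, then filter on it. The accepted formats, `parse_time`, `loaded_after`, and the sample records are assumptions for illustration; parsing "PM" relies on an English locale.

```python
from datetime import datetime, time


def parse_time(text):
    """Parse '17:00' or '5:00 PM'; raise ValueError for anything else."""
    for fmt in ("%H:%M", "%I:%M %p"):
        try:
            return datetime.strptime(text.strip(), fmt).time()
        except ValueError:
            continue
    raise ValueError(f"not a valid time: {text!r}")


def loaded_after(records, cutoff):
    """Keep the records whose load timestamp is later than the cutoff time."""
    return [r for r in records if r["loaded_at"].time() > cutoff]


records = [
    {"id": 1, "loaded_at": datetime(2024, 1, 2, 16, 30)},
    {"id": 2, "loaded_at": datetime(2024, 1, 2, 17, 45)},
]
late = loaded_after(records, parse_time("5:00 PM"))
print([r["id"] for r in late])
```

A native time picker would make the parsing and error handling unnecessary, since the tool could only return a valid time.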

https://community.alteryx.com/t5/Data-Sources/Loading-MDF-File/m-p/55264#M3640

It would be great to have the possibility to load .mdf files.
