Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by displaying the dimensional constraints (input image size) next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so as to have an equal number of images for each label),

> and, at the very least, by allowing the tool to use black & white (grayscale) images (I wanted to test it on MNIST, but the tool tells me it requires RGB images).
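Until the tool accepts single-channel images, one possible workaround is to convert grayscale images such as MNIST digits to RGB before training by copying the gray value into all three channels. This sketch assumes images are held as nested lists of 8-bit values; the name `gray_to_rgb` is hypothetical, and a real pipeline would more likely use an imaging library.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]


def gray_to_rgb(image: List[List[int]]) -> List[List[Pixel]]:
    """Replicate each 8-bit grayscale value into an (R, G, B) triple.

    Hypothetical helper: assumes the image is a list of rows of ints in 0..255.
    """
    rgb: List[List[Pixel]] = []
    for row in image:
        new_row: List[Pixel] = []
        for value in row:
            if not 0 <= value <= 255:
                raise ValueError(f"pixel value out of range: {value}")
            # Equal R, G and B values render as the same shade of gray.
            new_row.append((value, value, value))
        rgb.append(new_row)
    return rgb
```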

Question: will you, in the future, allow the user to choose between CPU and GPU usage?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will help more people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))
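For the second suggestion, a balanced split can be sketched by downsampling every label to the size of the smallest one and then splitting each label with the same train fraction. This is only one option (oversampling or class weights are common alternatives); `balanced_split` and its `(path, label)` input format are assumptions for illustration, not part of the tool.

```python
import random
from collections import defaultdict
from typing import Dict, Hashable, List, Sequence, Tuple

Item = Tuple[str, Hashable]  # (image path, label) - hypothetical input format


def balanced_split(
    items: Sequence[Item], train_fraction: float = 0.8, seed: int = 42
) -> Tuple[List[Item], List[Item]]:
    """Split so that every label contributes the same number of images."""
    by_label: Dict[Hashable, List[Item]] = defaultdict(list)
    for item in items:
        by_label[item[1]].append(item)
    if not by_label:
        return [], []

    rng = random.Random(seed)  # seeded so the split is reproducible
    per_label = min(len(group) for group in by_label.values())
    n_train = int(per_label * train_fraction)

    train: List[Item] = []
    test: List[Item] = []
    for label in sorted(by_label, key=str):
        group = list(by_label[label])
        rng.shuffle(group)
        group = group[:per_label]  # downsample to the smallest label's size
        train.extend(group[:n_train])
        test.extend(group[n_train:])
    return train, test
```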


Our company needs to connect to a new data source in Athena. We have been able to establish a connection using the Input tool; however, the connection is so slow it is unusable. We need Alteryx to build an In-Database option for Athena so we can link our data lake to Alteryx.

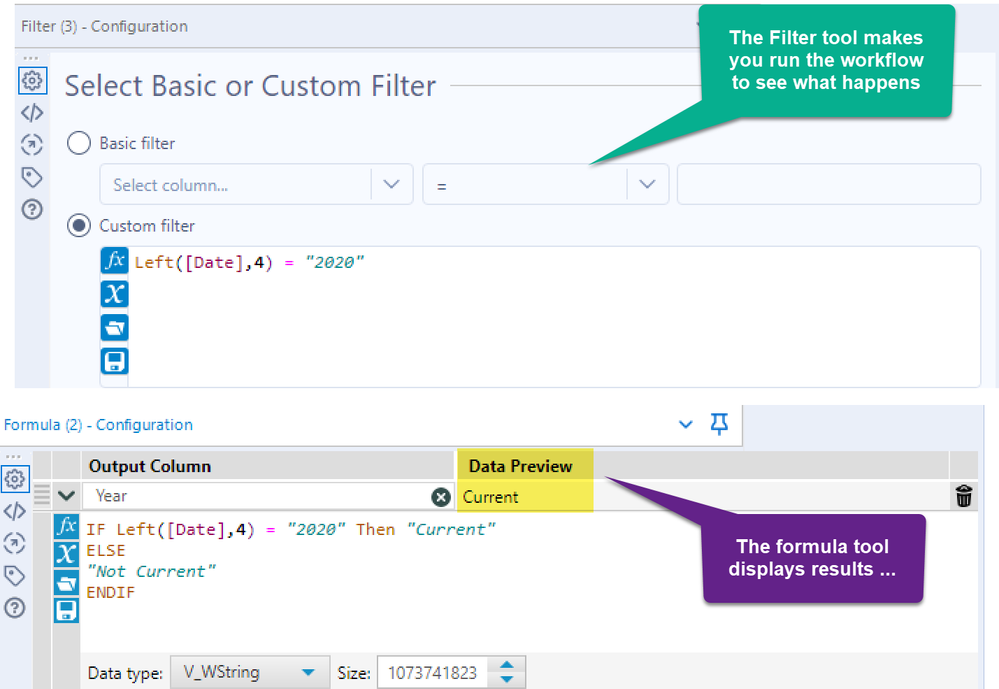

When configuring a FILTER tool, the results of your formula are uncertain until you RUN/PLAY the workflow. Compare that experience with configuring a FORMULA tool, where you see a "Data Preview" of the result for the first record.

A TRUE or FALSE preview could readily be added to the Filter tool, saving the time needed to run the workflow.

Once tools move to HTML versions, you could check many rows of data and potentially report counts of TRUE and FALSE results as well.

I'll put this on my x-mas list and see if Santa has me on the naughty or nice list.

Cheers,

Mark
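A minimal sketch of the preview Mark describes, assuming the Filter expression is available as a Python predicate over a record (a hypothetical stand-in for Alteryx's expression engine): evaluate it on the first record, and count TRUE/FALSE results over a small sample rather than the full data. The names `preview_filter` and `sample_size` are illustrative only.

```python
from typing import Any, Callable, Dict, Iterable, Optional, Tuple

Record = Dict[str, Any]


def preview_filter(
    records: Iterable[Record],
    predicate: Callable[[Record], bool],
    sample_size: int = 100,
) -> Tuple[Optional[bool], int, int]:
    """Return (result for the first record, TRUE count, FALSE count) over a sample."""
    first: Optional[bool] = None
    true_count = false_count = 0
    for i, record in enumerate(records):
        if i >= sample_size:  # only look at the first `sample_size` records
            break
        result = bool(predicate(record))
        if first is None:
            first = result
        if result:
            true_count += 1
        else:
            false_count += 1
    return first, true_count, false_count
```

For example, with rows `[{"Sales": 150}, {"Sales": 80}, {"Sales": 300}]` and the condition `Sales > 100`, the preview would show TRUE for the first record, with 2 TRUE and 1 FALSE overall.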

Hey all,

At present, if you have an existing canvas and you want to move to a DCM connection, you are asked something like "this will reset all of your connection details - are you sure?". If you have complex queries or pre/post SQL, you first have to copy all of this out into Notepad before you can convert to DCM, and then reconfigure it all again.

However, if you are not using DCM, you can change data sources in Workflow Dependencies without losing your queries etc.

Could we revisit the user experience of changing to or from a DCM connection to eliminate this "start from scratch" phenomenon? If you are converting from an existing SQL ODBC, ODB, or SSVB connection to a SQL connection via DCM, it should let you make this conversion without losing your current configuration; the same should apply to any other database type.

cc: @mbarone

I'm testing out the new Data Connection Manager (DCM) and think it needs one enhancement based on the way we'd use DCM. Whenever Alteryx opens, it should sync the Data Sources/Credentials from the Server. This is critically important when these are shared with more than one user and a password needs to be updated.

For example, DesignerA updates the password in the source system, and then updates the password in their Designer DCM settings. For this new password to get synced to DesignerB, two things have to happen manually: DesignerA needs to sync the new password to the server, and then DesignerB needs to sync the new password from the server. I can live with the first part, where DesignerA needs to sync to the server; it's just part of the password update process. The second step, though, seems perilous. DesignerB should get the new password without having to do anything; as things currently stand, DesignerB keeps the old password until they manually intervene and sync it. Imagine a scenario where it's not just DesignerB, but hundreds of people who would all have to sync their credentials.

I also think this idea makes sense in light of the way Data Connections currently work (pre-21.4), where a similar sync happens automatically every time Alteryx opens.
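One way the requested startup sync could work, sketched under the assumption that the server keeps a version number per credential (DCM's actual sync mechanism may differ): on launch, Designer pulls every credential that is missing locally or has a higher version on the server, so DesignerB never keeps a stale password. `Credential` and `sync_on_startup` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Credential:
    data_source: str
    secret: str
    version: int  # assumed: incremented by the server each time the credential changes


def sync_on_startup(local: Dict[str, Credential], server: Dict[str, Credential]) -> List[str]:
    """Pull any credential that is missing locally or newer on the server.

    Mutates `local` in place and returns the names of updated data sources.
    """
    updated: List[str] = []
    for name, remote in server.items():
        current = local.get(name)
        if current is None or remote.version > current.version:
            local[name] = remote
            updated.append(name)
    return updated
```

In this model, DesignerA's update bumps the server-side version, and DesignerB picks it up the next time Designer starts, with no manual step.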

Hello all,

As of today, an In-DB join can only be done with an equality operator.

Example: table1.customer_id = table2.customer_id

That's sufficient most of the time. However, sometimes you need to perform another kind of join (especially with a calendar, period table, etc.).

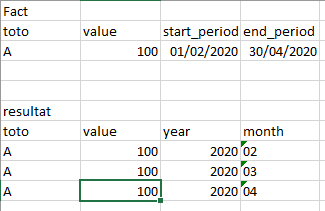

Here is an example of a clause you can find in existing SQL:

inner join calendar on calendar.id_year_month between fact.start_period and fact.end_period

This would help solve that case (the workaround I use today is a full Cartesian product with a join on 1=1, after which I filter the rows with the BETWEEN condition), as well as joins using <, >, and so on.

It can be very useful for solving the most difficult issues. Note that a product like Tableau already offers this feature.

Best regards,

Simon
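To illustrate the difference, this sketch runs both the direct range join and the 1=1 workaround on small made-up tables, using Python's built-in `sqlite3` module only as a convenient in-memory engine (not as a stand-in for any particular In-DB connector). Both return the same rows, but the workaround first builds every fact/calendar combination before filtering, whereas the direct join gives the database the chance to plan the range condition itself.

```python
import sqlite3
from typing import List, Tuple

Rows = List[Tuple[str, int]]


def between_join_vs_workaround() -> Tuple[Rows, Rows]:
    conn = sqlite3.connect(":memory:")
    try:
        # Made-up sample data for illustration.
        conn.executescript(
            """
            CREATE TABLE calendar (id_year_month INTEGER);
            INSERT INTO calendar VALUES (202301), (202302), (202303), (202304);
            CREATE TABLE fact (contract TEXT, start_period INTEGER, end_period INTEGER);
            INSERT INTO fact VALUES ('A', 202301, 202302), ('B', 202303, 202304);
            """
        )
        # Non-equi (range) join, as in the clause quoted in the post.
        direct = conn.execute(
            """
            SELECT fact.contract, calendar.id_year_month
            FROM fact
            INNER JOIN calendar
              ON calendar.id_year_month BETWEEN fact.start_period AND fact.end_period
            ORDER BY 1, 2
            """
        ).fetchall()
        # Workaround: Cartesian product via a join on 1=1, then filter.
        workaround = conn.execute(
            """
            SELECT fact.contract, calendar.id_year_month
            FROM fact
            INNER JOIN calendar ON 1 = 1
            WHERE calendar.id_year_month BETWEEN fact.start_period AND fact.end_period
            ORDER BY 1, 2
            """
        ).fetchall()
    finally:
        conn.close()
    return direct, workaround
```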

Hello!

Currently, when using the Formula tool, you can create a string in two ways: with double quotes (speech marks) or with single quotes (apostrophes).

I would expect both methods to behave exactly the same way. Interestingly, however, if you type anything within the apostrophes that would normally prompt a formula suggestion, the suggestion still appears.

This is not the case within the speech marks.

This can cause mistakes with autocompletion when typing within the field. I propose a small QoL change so that the Formula tool recognises that a string is being written when the cursor is between two apostrophes. I believe the logic for this already exists, given that single-quoted strings behave the same in every other way and are highlighted green too.

Cheers,

TheOC
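A minimal sketch of the proposed check, assuming autocomplete only needs to know whether the cursor sits inside a string literal opened by either quote character. Escape sequences are ignored here, which is a simplifying assumption; `inside_string_literal` is a hypothetical helper, not Alteryx code.

```python
def inside_string_literal(text: str, cursor: int) -> bool:
    """Return True if position `cursor` in `text` is inside a string literal
    delimited by either double quotes or single quotes."""
    open_quote = None  # the quote character that opened the current literal, if any
    for ch in text[:cursor]:
        if open_quote is None:
            if ch in ('"', "'"):
                open_quote = ch  # a literal starts with either quote type
        elif ch == open_quote:
            open_quote = None  # only the matching quote closes it
    return open_quote is not None
```

Treating both quote types identically means `'Left` suppresses suggestions just like `"Left`, while an apostrophe inside a double-quoted string (such as `"it's`) does not end the literal.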

It would be oh so nice to be able to copy a container's properties and paste those formatting options onto other containers. This could be done through a Paint Brush icon, or by copying with CTRL+C and right-clicking to paste the format. Either way, it would save setting the Color (a multi-step selection), Margin, and Transparency each time.

Cheers,

Mark

Data Cleansing Tool: There should be a sub-option for the "Punctuation" cleansing, ideally "Include Only" or, conversely, "Exclude these characters", which would allow you, for example, to remove all characters except "." from a dollar-formatted field. There are times when you need to clean almost all punctuation except a certain character.
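The two proposed modes can be sketched as follows, assuming "punctuation" means Python's `string.punctuation` set of ASCII characters (the Data Cleansing tool's own definition may differ). `strip_punctuation` removes all punctuation except the characters passed in `keep`, while `remove_only` drops just the listed characters; both names are hypothetical.

```python
import string


def strip_punctuation(value: str, keep: str = "") -> str:
    """Remove every ASCII punctuation character except those listed in `keep`."""
    remove = set(string.punctuation) - set(keep)
    return "".join(ch for ch in value if ch not in remove)


def remove_only(value: str, chars: str) -> str:
    """Remove only the characters listed in `chars`, leaving everything else."""
    drop = set(chars)
    return "".join(ch for ch in value if ch not in drop)
```

For the dollar-field case, `strip_punctuation("$1,234.56", keep=".")` keeps the decimal point and drops the `$` and `,`.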

Hello all,

OLEDB is usually way faster than ODBC, so it is the connection method we promote. However, the new DCM only works for ODBC connections, which is quite strange.

Best regards,

Simon

Hello all,

Change Data Capture (https://en.wikipedia.org/wiki/Change_data_capture) is an effective way to deal with changes in a database, allowing streaming or delta processing. Several techniques, more or less intrusive, can be applied (and combined), e.g. reading the transaction logs.

Qlik: https://www.qlik.com/us/streaming-data/data-streaming-cdc

Talend: https://www.talend.com/resources/change-data-capture/

Best regards,

Simon
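The simplest but most expensive form of CDC compares two keyed snapshots of a table to derive the delta; log-based CDC, as mentioned above, obtains the same inserts, updates, and deletes from the transaction log without rescanning the table. A minimal sketch of the snapshot approach, with hypothetical names and data:

```python
from typing import Any, Dict, List, Tuple

Row = Dict[str, Any]


def snapshot_diff(
    before: Dict[Any, Row], after: Dict[Any, Row]
) -> Tuple[List[Row], List[Row], List[Any]]:
    """Compare two snapshots keyed by primary key.

    Returns (inserted rows, updated rows, deleted keys).
    """
    inserts = [after[k] for k in after if k not in before]
    updates = [after[k] for k in after if k in before and after[k] != before[k]]
    deletes = [k for k in before if k not in after]
    return inserts, updates, deletes
```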

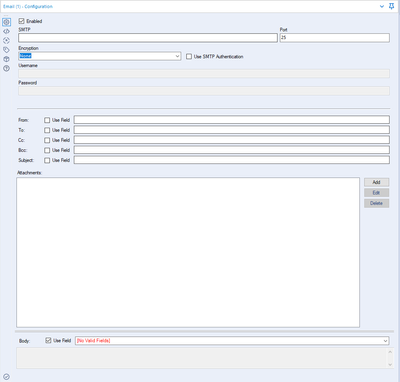

Hello there!

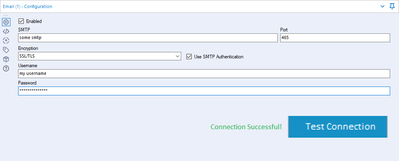

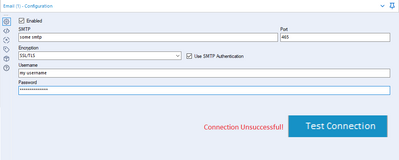

Currently the email tool has the following configuration:

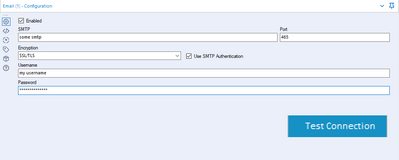

It is a fairly easy tool to use; however, one part I would like to see improved is testing the SMTP settings, similar to how it is done on Alteryx Server. It would be awesome to have a button on this page that sends a test email and confirms to the user that the email was sent successfully. This would remove the need to set up dummy data and a dummy output to test a connection before rolling the Email tool out into a live environment/use case.

I imagine something along these lines for this functionality:

Clicking test (and passing):

Clicking test (and failing):

Thanks,

TheOC
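A rough sketch of what such a Test button might do, using Python's standard `smtplib`: connect, optionally upgrade with STARTTLS and log in, and then either send a short test email (when a sender and recipient are given) or just issue a NOOP. The parameters mirror common SMTP settings and are assumptions, not the Email tool's actual configuration fields; `check_smtp_settings` is a hypothetical name. The result is a pass/fail flag plus a message to display.

```python
import smtplib
import ssl
from email.message import EmailMessage
from typing import Optional, Tuple


def check_smtp_settings(
    host: str,
    port: int = 25,
    use_starttls: bool = False,
    username: Optional[str] = None,
    password: Optional[str] = None,
    sender: Optional[str] = None,
    recipient: Optional[str] = None,
    timeout: float = 10.0,
) -> Tuple[bool, str]:
    """Check SMTP settings and return (passed, message) for display."""
    try:
        with smtplib.SMTP(host, port, timeout=timeout) as server:
            server.ehlo()
            if use_starttls:
                server.starttls(context=ssl.create_default_context())
                server.ehlo()  # re-identify over the encrypted channel
            if username is not None and password is not None:
                server.login(username, password)
            if sender is not None and recipient is not None:
                msg = EmailMessage()
                msg["From"] = sender
                msg["To"] = recipient
                msg["Subject"] = "SMTP settings test"
                msg.set_content("This is a test message to verify SMTP settings.")
                server.send_message(msg)
                return True, f"Test email sent to {recipient}"
            # No recipient given: a NOOP still proves the session works.
            code, _ = server.noop()
            return code == 250, f"Server replied {code} to NOOP"
    except (smtplib.SMTPException, OSError) as exc:
        # Connection refused, timeouts, TLS and auth errors all end up here.
        return False, f"SMTP test failed: {exc}"
```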

I would love a tool to be created for looking up a value in a table based on a condition. It could be called "Lookup." One input to the tool would be the lookup list, the other is the main database. Inside the tool you could enter functions that can query the lookup table and return the results either as an overwrite of an existing field in the main DB or as a new field in the main DB, similar to the options in the Multi-Row Formula tool.

Here is a link to my post in Community that explains the problem. The solution, in a nutshell, was to create a Join (which resulted in millions of additional rows), run the conditional formula, then filter to get rid of the millions of rows that were created by the Join so only those that met the condition remained (the original database rows).

Here is the text of my Community post describing my project (slightly modified for clarity):

Table 1: A list of Pay Dates (the lookup table)

Table 2: Daily timekeeper data with Week Start and Week End Date fields.

The goal: To find the Pay Date in Table 1 that is greater than the Week Start Date in Table 2 and no more than 13 days after the Week End Date in Table 2.

[Table 2: Week Start Date] < [Table 1: Pay Date]

and [Table 2: Week End Date] < [Table 1: Pay Date]

and DateTimeDiff([Table 1: Pay Date], [Table 2: Week End Date], 'Days') <= 13

There are many different flows I could use this type of tool for that would save time and simplify the flow.

Thanks!
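Because the conditions above reduce to "the first Pay Date after the Week End Date, if it falls within 13 days", they can be answered per row with a binary search over the sorted Pay Dates instead of a full Join. A minimal sketch, assuming the earliest qualifying Pay Date is the one wanted; `find_pay_date` is a hypothetical name.

```python
from bisect import bisect_right
from datetime import date
from typing import List, Optional


def find_pay_date(
    pay_dates: List[date], week_start: date, week_end: date, max_days: int = 13
) -> Optional[date]:
    """Return the earliest pay date that is after week_start and week_end and
    no more than max_days after week_end, or None. `pay_dates` must be sorted."""
    i = bisect_right(pay_dates, week_end)  # index of first pay date strictly after week_end
    if i == len(pay_dates):
        return None
    candidate = pay_dates[i]
    if candidate > week_start and (candidate - week_end).days <= max_days:
        return candidate
    return None
```

Each lookup is a logarithmic search over the Pay Date list, so no intermediate rows are created and nothing needs to be filtered away afterwards.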

Using the Output tool to send data to a formatted spreadsheet apparently doesn't preserve formatting if the entire column is formatted. I'd love this changed to keep the formatting when it's applied to an entire column. See this thread in Designer Discussions.

I'd like to see Alteryx allow a second install of your license on a second, personal machine. Tableau allows this, and IMO that is why there is such a robust online/blog community around that product.

For those of us who work at mid-size to large organizations, there are often strict rules governing internal data and the use of cloud-based data sources. If I discover some new trick I'd like to share with my fellow Alteryx analysts outside my company, I have no clear way to do that the way I can with Tableau, where I can work on it at home without using my company's data.

Being able to learn new features and test things out on commonly available public data (ever notice that Superstore data set everyone who gets Tableau has?) would accelerate what we're able to do with the community site here and the larger analytics blogging community.

Credit to @pgdelafuente in his post Export Variables from Assisted Modelling Feature I... - Alteryx Community

This came up in a call with a large client: there's no easy way to output the feature importance plot or the accuracy metrics of the selected model (e.g. root mean squared error, correlation, max error). I would assume this is an easy addition to the Assisted Modeling tools, and perhaps useful for some of the Predictive tools too!
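For reference, the metrics mentioned above can be computed from actual and predicted values as follows. This is a plain-Python sketch of the standard definitions (RMSE, Pearson correlation, maximum absolute error), not how Assisted Modeling computes them internally; `regression_metrics` is a hypothetical name.

```python
import math
from typing import Dict, Sequence


def regression_metrics(actual: Sequence[float], predicted: Sequence[float]) -> Dict[str, float]:
    """Return RMSE, Pearson correlation and max absolute error."""
    if len(actual) != len(predicted) or len(actual) < 2:
        raise ValueError("need two equal-length sequences with at least 2 values")
    n = len(actual)
    errors = [p - a for a, p in zip(actual, predicted)]
    rmse = math.sqrt(sum(e * e for e in errors) / n)
    max_error = max(abs(e) for e in errors)

    mean_a = sum(actual) / n
    mean_p = sum(predicted) / n
    cov = sum((a - mean_a) * (p - mean_p) for a, p in zip(actual, predicted))
    var_a = sum((a - mean_a) ** 2 for a in actual)
    var_p = sum((p - mean_p) ** 2 for p in predicted)
    # Correlation is undefined when either series is constant.
    correlation = cov / math.sqrt(var_a * var_p) if var_a and var_p else float("nan")

    return {"rmse": rmse, "correlation": correlation, "max_error": max_error}
```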

When you start using DCM, you may have existing canvases that use regular old connection strings which you want to migrate to DCM.

Currently (in 2023.1.1.123), when you select "Use Data Connection Manager", it shreds the configuration of your Input tool, which makes it difficult to simply convert an existing connection to a DCM connection.

The only way to make sure you don't lose any configuration on the tool is to use the tool's XML editing functionality and copy across your old configuration.

Could you please add the capability to keep my current tool configuration, but just change from using a regular old connection string to using DCM?

Many thanks

Sean

cc: @wesley-siu @_PavelP
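A sketch of the requested behavior: edit the tool's XML so that only the connection element changes, leaving the query and pre/post SQL untouched. The element names (`Connection`, `Query`, `PreSQL`) and the DCM connection value are hypothetical placeholders; the real Input tool XML is likely structured differently.

```python
import xml.etree.ElementTree as ET

# Hypothetical element name: the actual Input tool XML schema may differ.
CONNECTION_TAG = "Connection"


def migrate_to_dcm(old_config_xml: str, dcm_connection: str) -> str:
    """Return a copy of the tool configuration with only the connection
    element replaced; every other element (query, pre/post SQL) is kept."""
    root = ET.fromstring(old_config_xml)
    conn = root.find(CONNECTION_TAG)
    if conn is None:
        raise ValueError(f"no <{CONNECTION_TAG}> element found")
    conn.text = dcm_connection
    return ET.tostring(root, encoding="unicode")
```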

A simple quality-of-life improvement I would love is the ability to rename the output fields of the Transpose tool in its configuration, rather than only having 'Name' and 'Value'.

Would just let me drop the renaming of these fields afterwards :)
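The requested option amounts to two extra parameters on a transpose (unpivot) operation. A minimal sketch in which `name_field` and `value_field` default to 'Name' and 'Value' but can be renamed; the function and parameter names are hypothetical.

```python
from typing import Any, Dict, List, Sequence


def transpose(
    rows: List[Dict[str, Any]],
    key_fields: Sequence[str],
    name_field: str = "Name",
    value_field: str = "Value",
) -> List[Dict[str, Any]]:
    """Turn each non-key column into its own row, using caller-chosen
    names for the output name/value columns."""
    out: List[Dict[str, Any]] = []
    for row in rows:
        for column, value in row.items():
            if column in key_fields:
                continue  # key fields are carried onto every output row
            new_row = {k: row[k] for k in key_fields}
            new_row[name_field] = column
            new_row[value_field] = value
            out.append(new_row)
    return out
```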

It would be useful to be able to select a single container (containing a data input), or multiple containers using Shift, and run those and only those.

When building a new element of a larger workflow, I often add a new Input in a new container. Being able to run just that container, without having to turn off all my other containers, would really speed up the start of joining things together.

Hope that makes sense.

Thanks,

Doug

Hello,

SQLite is:

- free

- open source

- easy to use

- widely used

https://en.wikipedia.org/wiki/SQLite

It also works well with Alteryx input or output tool. 🙂

However, I think an In-DB SQLite would be great, especially for learning purposes: you don't have to install anything, so it's really easy to implement.

Best regards,

Simon

It would be wonderful for Alteryx to be able to connect to and query OData feeds natively, rather than using a 3rd-party driver or custom macro.

OData querying is supported by quite a few familiar products, including Excel and PowerBI, SSIS/SSRS, FME Safe, Tableau, and many others. The protocol is also used to publish feeds from Microsoft Dynamics and SharePoint, as well as many of the 10,000 publicly available government datasets with APIs (esp. those hosted by Socrata).

I didn't see it in the Ideas section, but questions and workarounds have been discussed in the community a few times (11/15, 3/18, 4/18), and the suggestions seem to be to buy the $400-600 ODBC driver from CDATA (or ZappySys), use a VBA script in Excel to trigger a refresh, or create my own Alteryx connector macro (great series btw, though most of it was beyond my understanding!)

While I'm not opposed to paying, kludging, or learning to program, they're just one more thing to build/buy, install, maintain, and break at the most inconvenient time 🙂

Thanks,

Chadd

OData Overview:

OData (Open Data Protocol) is an ISO/IEC approved, OASIS standard that defines a set of best practices for building and consuming RESTful APIs. OData helps you focus on your business logic while building RESTful APIs without having to worry about the various approaches to define request and response headers, status codes, HTTP methods, URL conventions, media types, payload formats, query options, etc. OData also provides guidance for tracking changes, defining functions/actions for reusable procedures, and sending asynchronous/batch requests. OData RESTful APIs are easy to consume. The OData metadata, a machine-readable description of the data model of the APIs, enables the creation of powerful generic client proxies and tools.

More info at http://odata.org
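A minimal sketch of native OData consumption, using the OData v4 system query options (`$select`, `$filter`, `$top`) and JSON conventions (results in `value`, further pages in `@odata.nextLink`). The service URL and entity set are hypothetical, and `fetch` is injected so the paging logic can be tested without a network; in practice it could wrap `urllib.request.urlopen`.

```python
import json
from typing import Any, Callable, Dict, Iterator, Optional, Sequence
from urllib.parse import quote, urlencode


def build_odata_url(
    base_url: str,
    entity_set: str,
    select: Optional[Sequence[str]] = None,
    filter_expr: Optional[str] = None,
    top: Optional[int] = None,
) -> str:
    """Build an OData query URL from common system query options."""
    params: Dict[str, str] = {}
    if select:
        params["$select"] = ",".join(select)
    if filter_expr:
        params["$filter"] = filter_expr
    if top is not None:
        params["$top"] = str(top)
    url = f"{base_url.rstrip('/')}/{entity_set}"
    if params:
        # Keep '$' and ',' readable; spaces become %20 rather than '+'.
        url += "?" + urlencode(params, safe="$,", quote_via=quote)
    return url


def iter_odata_rows(url: str, fetch: Callable[[str], str]) -> Iterator[Dict[str, Any]]:
    """Yield entities from an OData v4 JSON feed, following @odata.nextLink."""
    next_url: Optional[str] = url
    while next_url:
        page = json.loads(fetch(next_url))
        yield from page.get("value", [])
        next_url = page.get("@odata.nextLink")
```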
