Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea? Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
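To illustrate the second suggestion (an equal number of images for each label), here is a minimal sketch of a balanced train/test split in plain Python. It is not how the Image Recognition tool works internally; the function name `balanced_split`, the `(item, label)` input shape, and the 80/20 default are assumptions made for the example.

```python
import random
from collections import defaultdict


def balanced_split(samples, train_fraction=0.8, seed=0):
    """Split (item, label) pairs so every label contributes the same count.

    Hypothetical helper: each label is downsampled to the size of the
    smallest label, then cut into train and test parts.
    """
    if not samples:
        return [], []

    # Group items by their label.
    by_label = defaultdict(list)
    for item, label in samples:
        by_label[label].append(item)

    # The rarest label determines how many items every label may keep.
    per_label = min(len(items) for items in by_label.values())
    n_train = int(per_label * train_fraction)

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train, test = [], []
    for label, items in by_label.items():
        chosen = rng.sample(items, per_label)
        train += [(item, label) for item in chosen[:n_train]]
        test += [(item, label) for item in chosen[n_train:]]
    return train, test
```

With 10 "cat" images and 6 "dog" images, both labels are cut down to 6, giving 4 training and 2 test images per label.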

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It certainly has room for improvement, but it is very simple to use, and I sincerely think it will help more people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


I'd like to see Alteryx allow a second install of your license on a second, personal machine. Tableau allows this and IMO is why there is such a robust online / blog community around that product.

For those of us who work at mid-size to large organizations, there are often strict rules governing internal data and the use of cloud-based data sources. If I discover some new trick I'd like to share with my fellow Alteryx analysts outside of my company, I have no clear way to do that, the way I can with Tableau, where I can work at home without using my company's data.

Being able to learn new features and test things out on commonly available public data (ever notice that Superstore data set everyone who gets Tableau has?) would accelerate what we're able to do with the community site here and the larger analytics blogging community.

Using the Output tool to send data to a formatted spreadsheet apparently doesn't preserve formatting if the entire column is formatted. I'd love this changed to keep the formatting when it's applied to an entire column. See this thread in Designer Discussions.

Credit to @pgdelafuente in his post Export Variables from Assisted Modelling Feature I... - Alteryx Community

This came up in a call with a large client - basically there's no easy way to output the feature importance plot, the accuracy metrics of the selected model (i.e. root mean squared error, correlation, max error, etc.). I would assume this is an easy addition into the Assisted Modeling tools, and perhaps useful for some of the Predictive tools!

When you start using DCM - you may have existing canvasses which use regular old connection strings which you want to migrate to DCM.

Currently (in 2023.1.1.123), when you select "Use Data Connection Manager", it shreds the configuration of your input tool, which makes it difficult to convert an existing connection to a DCM connection.

The only way to make sure that you don't lose any configuration on the tool is then to use the XML editing functionality of the tools and copy across your old configuration.

Could you please add the capability to keep my current tool configuration, but just change from using a regular old connection string to using DCM?

Many thanks

Sean

cc: @wesley-siu @_PavelP

It would be useful to be able to select a single container (containing a data input) or multiple containers using Shift, and run those and only those.

When building a new element of a larger workflow, I often add a new Input in a new container. The ability to run just that container, without having to turn off all my other containers, would really speed up the start of joining things together.

Hope that makes sense.

Thanks,

Doug

It would be wonderful for Alteryx to be able to connect to and query OData feeds natively, rather than using a 3rd-party driver or custom macro.

OData querying is supported by quite a few familiar products, including Excel and PowerBI, SSIS/SSRS, FME Safe, Tableau, and many others. The protocol is also used to publish feeds from Microsoft Dynamics and SharePoint, as well as many of the 10,000 publicly available government datasets with APIs (esp. those hosted by Socrata).

I didn't see it in the Ideas section, but questions and workarounds have been discussed in the community a few times (11/15, 3/18, 4/18), and the suggestions seem to be to buy the $400-600 ODBC driver from CDATA (or ZappySys), to use a VBA script in Excel to trigger a refresh, or to create my own Alteryx connector macro (great series btw, though most of it was beyond my understanding!)

While I'm not opposed to paying, kludging, or learning to program, they're just one more thing to build/buy, install, maintain, and break at the most inconvenient time 🙂

Thanks,

Chadd

OData Overview:

OData (Open Data Protocol) is an ISO/IEC approved, OASIS standard that defines a set of best practices for building and consuming RESTful APIs. OData helps you focus on your business logic while building RESTful APIs without having to worry about the various approaches to define request and response headers, status codes, HTTP methods, URL conventions, media types, payload formats, query options, etc. OData also provides guidance for tracking changes, defining functions/actions for reusable procedures, and sending asynchronous/batch requests. OData RESTful APIs are easy to consume. The OData metadata, a machine-readable description of the data model of the APIs, enables the creation of powerful generic client proxies and tools.

More info at http://odata.org
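For readers who have not seen the URL conventions mentioned in the overview, here is a minimal sketch that builds an OData query URL from the standard system query options (`$select`, `$filter`, `$orderby`, `$top`). The helper name `build_odata_url` and the `https://example.com/odata` service root are hypothetical and only serve the illustration; a real feed publishes its own root and entity sets.

```python
from urllib.parse import quote, urlencode


def build_odata_url(service_root, entity_set, *, select=None,
                    filter_expr=None, order_by=None, top=None):
    """Assemble an OData request URL (hypothetical helper for illustration)."""
    options = {}
    if select:
        options["$select"] = ",".join(select)   # columns to return
    if filter_expr:
        options["$filter"] = filter_expr        # row-level condition
    if order_by:
        options["$orderby"] = order_by          # sort order
    if top is not None:
        options["$top"] = str(top)              # maximum number of rows

    url = f"{service_root.rstrip('/')}/{entity_set}"
    if options:
        # Keep "$", "," and "'" readable; percent-encode spaces and the rest.
        url += "?" + urlencode(options, quote_via=quote, safe="$,'")
    return url


print(build_odata_url(
    "https://example.com/odata", "Products",
    select=["Name", "Price"], filter_expr="Price gt 20", top=5,
))
```

The resulting URL can be fetched with any HTTP client, which is what a native connector would presumably do behind its configuration screen.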

Hello,

SQLite is:

- free

- open source

- easy to use

- widely used

https://en.wikipedia.org/wiki/SQLite

It also works well with Alteryx input or output tool. 🙂

However, I think In-DB support for SQLite would be great, especially for learning purposes: you don't have to install anything, so it's really easy to set up.

Best regards,

Simon

Would love to see an option to disable a specific Output tool (rather than the global "Disable All Tools that Write Output" option). I'm envisioning the inverse of the Email tool, where there is a checkbox to enable Email... rather, the Output tool could have a check box that would disable that output (and ONLY that output), similar/consistent with the "Disable All Tools" function. A "Disable This Output" check box. The benefits would be a quick way to make sure not to overwrite something in one output (but still getting all the good content in all the other outputs) rather than having to go through the multiple clicks of adding to a container and then disabling the container. Could have benefits for connecting with Action tools/interface toggles as well. It would likely need to contain the same/similar formatting in Designer to indicate it has been disabled, though maybe a slightly different color so you could tell it was disabled differently?

(In a similar vein, I would love to take this opportunity to bring up my favorite idea-that-has-not-been-implemented-yet-that-would-love-your-vote-and-attention: implementing a Warning that outputs are disabled when posting to Gallery...)

Cheers!

NJ

A simple quality-of-life improvement that I would love is the ability to rename the output fields of the Transpose tool in its configuration, rather than only having 'Name' and 'Value'.

Would just let me drop the renaming of these fields afterwards :)

I would love a tool to be created for looking up a value in a table based on a condition. It could be called "Lookup." One input to the tool would be the lookup list, the other is the main database. Inside the tool you could enter functions that can query the lookup table and return the results either as an overwrite of an existing field in the main DB or as a new field in the main DB, similar to the options in the Multi-Row Formula tool.

Here is a link to my post in Community that explains the problem. The solution, in a nutshell, was to create a Join (which resulted in millions of additional rows), run the conditional formula, then filter to get rid of the millions of rows that were created by the Join so only those that met the condition remained (the original database rows).

Here is the text of my Community post describing my project (slightly modified for clarity):

Table 1: A list of Pay Dates (the lookup table)

Table 2: Daily timekeeper data with Week Start and Week End Date fields.

The goal: To find the Pay Date in Table 1 that is greater than the Week Start Date in Table 2 and no more than 13 days after the Week End Date in Table 2.

[Table 2: Week Start Date] < [Table 1: Pay Date]

and [Table 2: Week End Date] < [Table 1: Pay Date]

and DateTimeDiff([Table 1: Pay Date], [Table 2: Week End Date], 'Days') <= 13
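As a rough illustration of what such a Lookup tool would do without the row explosion of a Join, the condition above can be evaluated with a binary search over the sorted Pay Dates. This is only a sketch in Python, not an Alteryx feature; the function name `find_pay_date` is hypothetical, and returning the earliest matching Pay Date when several qualify is an assumption.

```python
from bisect import bisect_right
from datetime import date


def find_pay_date(pay_dates_sorted, week_start, week_end, max_days=13):
    """Return the earliest pay date that satisfies all three conditions.

    pay_dates_sorted must be sorted ascending. Returns None if no pay
    date qualifies.
    """
    # The pay date must be strictly later than both week dates.
    lower_bound = max(week_start, week_end)
    index = bisect_right(pay_dates_sorted, lower_bound)
    if index == len(pay_dates_sorted):
        return None

    candidate = pay_dates_sorted[index]
    # Later pay dates are even further from week_end, so one check suffices.
    if (candidate - week_end).days <= max_days:
        return candidate
    return None


pay_dates = [date(2024, 1, 5), date(2024, 1, 19), date(2024, 2, 2)]
print(find_pay_date(pay_dates, date(2024, 1, 21), date(2024, 1, 27)))
```

Each timekeeper row costs one binary search instead of being multiplied by every Pay Date, so no extra rows have to be created and filtered away afterwards.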

There are many different flows I could use this type of tool for that would save time and simplify the flow.

Thanks!

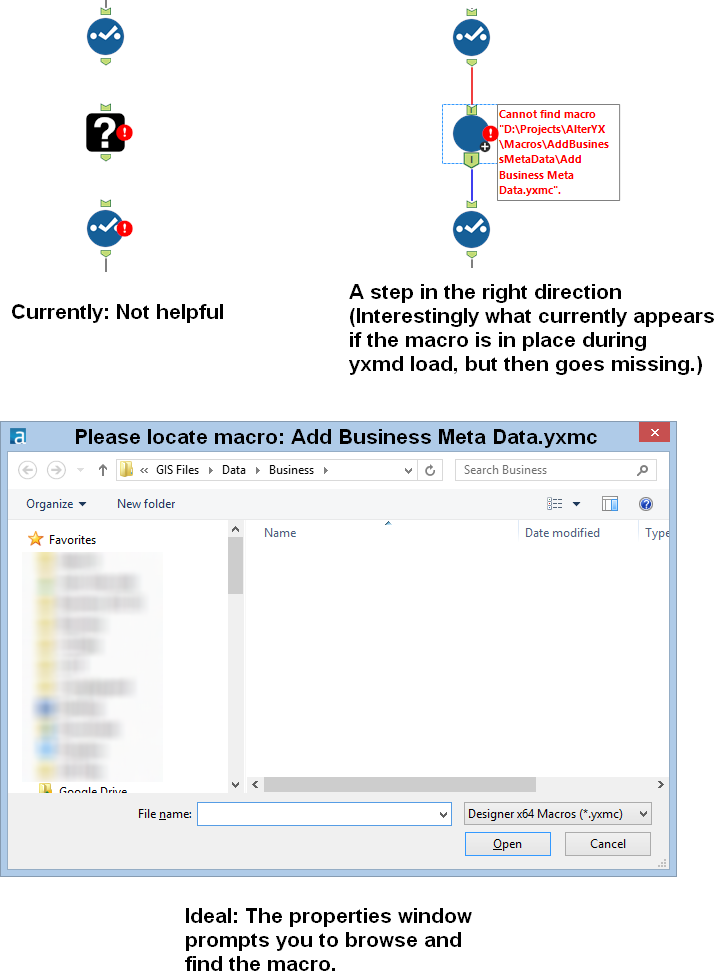

Idea: Prompt the user to find a missing macro instead of the current UX of a question mark icon.

Issue: When a macro referenced in a workflow is missing, then there is no way to a) know what the name of the macro was (assuming you were lazy like me and didn't document with a comment) and b) find the macro so you can get back to business.

When this happens to me now, I have to go to the XML view, search for macros, and cycle through them until I find the one that's missing. Then I have to either copy the macro back into that location or manually edit the workflow XML. Not cool, man.

Solution: When a macro is missing, the image below at the right should be shown. In the properties window, a file browse tool should allow the user to find the macro.

After multiple years of using Alteryx, I found that the tabbed document feature was left out of 2022.1. This feature allows for a much cleaner canvas for exploring workflows and output data. I view it as a basic function of Alteryx, and I was surprised to find out that the development team intentionally omitted it. I really don't want to revert to older versions, but it may be the only way to get a more comfortable feel for Alteryx.

Hello!

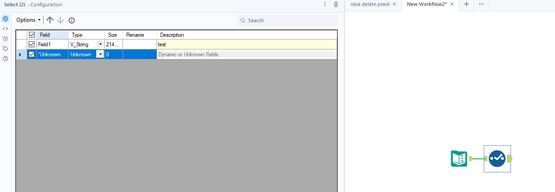

I appreciate this is a very underused element of Alteryx Functionality, however, I have noticed a few issues with the description of fields.

Firstly, if you set a description on a field within a select tool:

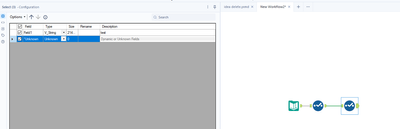

And then attempt to clear the description later in the workflow (in another select tool), you cannot. When you delete the description, it will clear back to the original value (in this case, 'test'):

This can be easily recreated, and can be more applicable to yxdb outputs that contain the description of fields. In that scenario, you cannot go back to the previous select tool and remove the description. The closest you can come to easily clearing the description is replacing it with a space ' '.

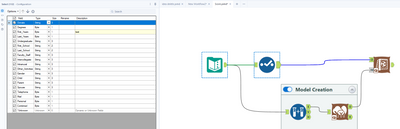

As a secondary issue, the Score tool currently removes field descriptions and overrides the source. For example, if I open the Score tool example workflow and add a Select tool with a description:

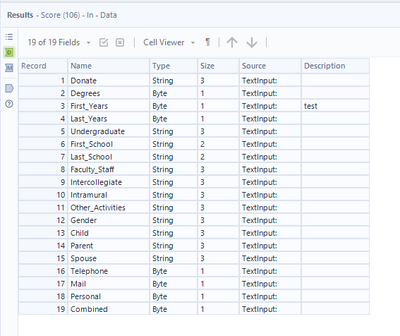

You can see the meta data going into the score tool:

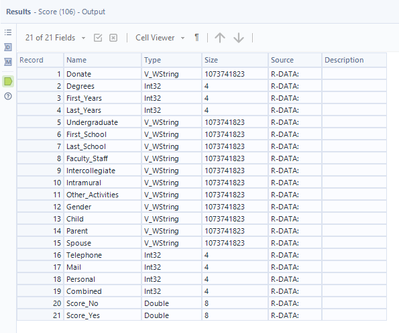

But unfortunately the output of the tool looks like:

This shows that it has completely removed the descriptions and also replaced all of the 'source' information. My suggestion would be that it should not replace the source information or descriptions.

Thirdly - and quite a niche issue, but an int64 field specifically will break when the description differs between the data and the model.

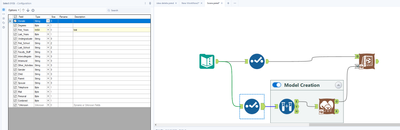

Again, this is easy to recreate within the Score tool example workflow. Apply a Select tool to both streams, setting 'First_Years' to an int64. Within the bottom stream (the model creation), set a description, in this case 'test':

Make sure to leave the top stream's description blank.

Run the workflow, observe the error:

Error: Score (106): Score: The variable testFirst_Years is missing from the input data stream.

Interestingly, it seems to be using the description as part of the name within the Score tool, which causes an issue when the descriptions differ. My suggestion would be that it should not use descriptions at all.

Kind Regards,

Owen

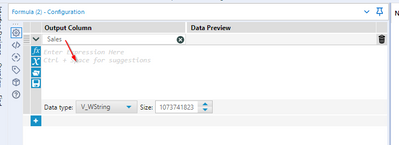

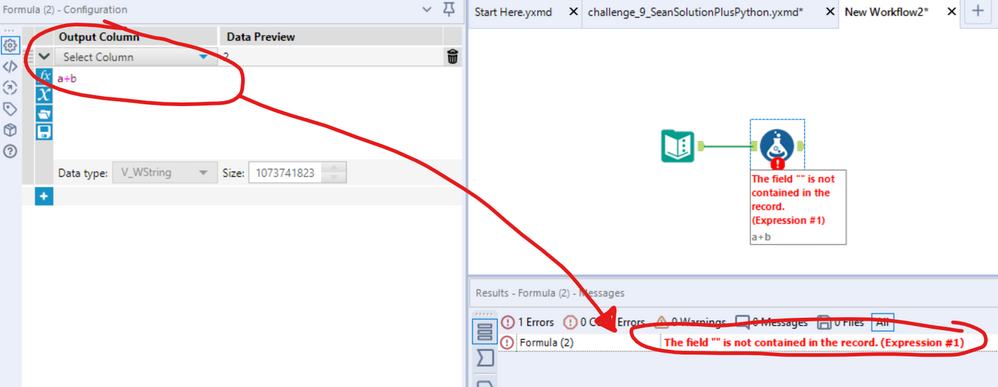

I personally think it would work better to tab from 'Select Column' to 'Enter Expression Here' and not the 'Functions' List as probably people who are tabbing would immediately like to start typing the formula rather than going through functions, fields, etc.

If you forget to put a name on a new column in the formula tool - the error message is

The field "" is not contained in the record. (Expression #1)

Please could you replace this with a user-friendly message which is self descriptive like:

"Please provide a name for the new column created in expression 1"?

This year, Microsoft updated its API (Graph API) for accessing the Office 365 environment.

Alteryx has launched the Dataverse, OneDrive & SharePoint connectors in the Microsoft district of the Public Gallery.

Alteryx should develop, as soon as possible, an email connector with the same authentication options as the connectors above, and improve the email settings.

It's also important to release documentation showing which Azure permissions are necessary to send emails.

References:

https://docs.microsoft.com/pt-br/lifecycle/announcements/exchange-online-basic-auth-deprecated

Azure Permissions:

https://docs.microsoft.com/en-us/graph/api/user-sendmail?view=graph-rest-1.0&tabs=csharp

Currently Alteryx does not support writing to SharePoint document libraries.

However, writes sometimes succeed and sometimes fail.

Please see attachment where we ran into an issue.

See this link for additional information.

We need official support for reading and writing to SharePoint document libraries.

It's an important Output target, and will become more so as Alteryx enhances its reporting capabilities.

From Wikipedia :

In a database, a view is the result set of a stored query on the data, which the database users can query just as they would in a persistent database collection object. This pre-established query command is kept in the database dictionary. Unlike ordinary base tables in a relational database, a view does not form part of the physical schema: as a result set, it is a virtual table computed or collated dynamically from data in the database when access to that view is requested. Changes applied to the data in a relevant underlying table are reflected in the data shown in subsequent invocations of the view. In some NoSQL databases, views are the only way to query data.

Views can provide advantages over tables:

Views can represent a subset of the data contained in a table. Consequently, a view can limit the degree of exposure of the underlying tables to the outer world: a given user may have permission to query the view, while denied access to the rest of the base table.

Views can join and simplify multiple tables into a single virtual table.

Views can act as aggregated tables, where the database engine aggregates data (sum, average, etc.) and presents the calculated results as part of the data.

Views can hide the complexity of data. For example, a view could appear as Sales2000 or Sales2001, transparently partitioning the actual underlying table.

Views take very little space to store; the database contains only the definition of a view, not a copy of all the data that it presents.

Depending on the SQL engine used, views can provide extra security.

I would like to create a view instead of a table.
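To make the difference concrete, here is a small sketch using Python's built-in `sqlite3` module and an in-memory database. The table `sales` and the view `sales_by_region` are made-up names for the example, and this only shows the SQL an output step would need to issue, not how Alteryx would implement it.

```python
import sqlite3

# In-memory database, so nothing is written to disk.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE sales (region TEXT, year INTEGER, amount REAL);
    INSERT INTO sales VALUES
        ('North', 2000, 100.0),
        ('North', 2001, 150.0),
        ('South', 2000, 80.0);

    -- The view stores only this query, not a copy of the data.
    CREATE VIEW sales_by_region AS
        SELECT region, SUM(amount) AS total
        FROM sales
        GROUP BY region;
""")

query = "SELECT region, total FROM sales_by_region ORDER BY region"
rows_before = conn.execute(query).fetchall()

# A change to the base table shows up the next time the view is queried.
conn.execute("INSERT INTO sales VALUES ('South', 2001, 20.0)")
rows_after = conn.execute(query).fetchall()

print(rows_before)
print(rows_after)
```

The second query picks up the new row without the view being rebuilt, which is the behaviour described in the Wikipedia excerpt.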

I constantly find myself using pre and post SQL Commands in the Output tool to run SQL when I don't actually have any data to output.

One example is when I load data into S3 and want to load it into Redshift. I have SQL code to run but no data to Output - I end up running a dummy row into a temp table.

So can we have an SQL tool that simply acts the same as a Pre-SQL command, without the associated data output? Once the command has run, we should be able to continue the workflow, so the tool should have an optional input and output, like the Run Command tool.

I would really love to have a tool called "Dynamic change type" or "Dynamic re-type" that works just like "Dynamic Rename".

- "Take Type from First Row of Data": By definition, all columns are of a string type initially. Sets the type of each column according to the string in the first row of data.

| Col 1 | Col 2 | Col 3 | Col 4 |
|---|---|---|---|
| Double | Int32 | V_String | Date |
| 123.456 | 17 | Hello | 2023-10-30 |
| 3.4e17 | 123 | Bye | 2024-01-01 |

- "Take Type from Right Input Metadata": Changes the types of the left input table to those of the right input.

- "Take Type from Right Input Rows": Changes the types based on a table with columns "Name" and "New Type".

| Name | New Type |
|---|---|
| Col 1 | Double |
| Col 2 | Int32 |
| Col 3 | V_String |
| Col 4 | Date |
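The "Take Type from First Row of Data" mode could behave roughly like the following Python sketch. It is not an existing Alteryx tool; the function name `retype_from_first_row` and the mapping of the four Alteryx type names to Python converters are assumptions made for the illustration, and only the types from the example table are covered.

```python
from datetime import date

# Assumed mapping from Alteryx type names to Python converters.
CONVERTERS = {
    "Double": float,
    "Int32": int,
    "V_String": str,
    "Date": date.fromisoformat,
}


def retype_from_first_row(header, rows):
    """Use the first data row as type names and convert the remaining rows.

    Returns (column name -> type name, list of converted rows).
    """
    type_names, data = rows[0], rows[1:]
    converters = [CONVERTERS[name] for name in type_names]
    typed_rows = [
        [convert(value) for convert, value in zip(converters, row)]
        for row in data
    ]
    return dict(zip(header, type_names)), typed_rows


header = ["Col 1", "Col 2", "Col 3", "Col 4"]
rows = [
    ["Double", "Int32", "V_String", "Date"],
    ["123.456", "17", "Hello", "2023-10-30"],
    ["3.4e17", "123", "Bye", "2024-01-01"],
]
schema, typed = retype_from_first_row(header, rows)
print(schema)
print(typed)
```

The "Take Type from Right Input Rows" mode would be the same conversion with the Name / New Type table supplying the type names instead of the first data row.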
