Alteryx Designer Desktop Ideas

Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> at least by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will help more people understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))
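The request above for a tool that divides the training data so each label has an equivalent number of images could be sketched roughly as follows. This is only an illustration in plain Python, not how the Image Recognition tool works; the function name `balance_by_label` and the `(path, label)` tuple layout are assumptions made for the example.

```python
import random
from collections import defaultdict


def balance_by_label(samples, seed=0):
    """Downsample so every label keeps the same number of images.

    `samples` is a list of (image_path, label) tuples (hypothetical layout).
    """
    if not samples:
        return []

    # Group image paths by their label.
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)

    # The smallest class decides how many images each label keeps.
    target = min(len(paths) for paths in by_label.values())

    rng = random.Random(seed)  # fixed seed so the split is repeatable
    balanced = []
    for label in sorted(by_label):
        for path in rng.sample(by_label[label], target):
            balanced.append((path, label))
    return balanced
```

Downsampling to the smallest class is the simplest choice; oversampling the smaller classes would be an alternative when images are scarce.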

Some of the workflows I use have multiple inputs that can take a long time to initially load. The new cache function itself has been amazing, but there is one big drawback for me: I can't cache multiple tools at the same time. Alteryx will allow me to eventually cache all of the tools I want cached, but it will take multiple times running the file. This still saves me time in the end, but it feels a bit cumbersome to set up.

I have recently added an Azure data lake v2. The Azure input/output connectors do not work with this version of the Azure data lake.

It appears that Alteryx appends ".azuredatalakestore.net" to the file path. This works for V1 but is not needed for V2.

Are there any plans to configure a connector for Azure data lake v2?
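To illustrate the mismatch described above, here is a minimal sketch of how the host name differs between the two generations. It assumes the V2 endpoint uses the `dfs.core.windows.net` suffix; the function name `data_lake_host` is hypothetical and this is not the connector's actual code.

```python
def data_lake_host(account: str, generation: int) -> str:
    """Build the storage host name for a given Data Lake generation."""
    # V1 suffix is the one quoted in the post; V2 suffix is an assumption
    # based on the usual Azure Data Lake Storage Gen2 endpoint.
    suffixes = {
        1: "azuredatalakestore.net",
        2: "dfs.core.windows.net",
    }
    if generation not in suffixes:
        raise ValueError(f"unsupported Data Lake generation: {generation}")
    return f"{account}.{suffixes[generation]}"
```

A connector that always appends the V1 suffix would produce a host that does not exist for a V2 account, which matches the behavior reported here.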

The idea is to store credentials, login/pw in a "credential alias".

Then, those credential aliases can be used in :

-traditional aliases/connection

-in database aliases/connection

-hdfs aliases/connection

-API

-on user aliases for connected controllers/gallery

...etc.

The idea is that I only have to change the credentials once for all connection types (on Hive, I have the in-db alias, the traditional alias and even an HDFS alias using exactly the same credentials, and I have to change all of them manually).

It would be helpful to be able to filter within the results window of a Browse tool for all "Not OK" records (records with leading/trailing spaces, embedded newlines, etc.) I can already filter for null and empty values, but this would be helpful for cleaning up data. I want to see the "dirty" data before taking out leading/trailing spaces or embedded new lines to see if there is something I'm missing in the data that needs to be further parsed or modified.
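As a rough illustration of the kind of "Not OK" filter being asked for, the sketch below flags string values with leading or trailing whitespace or embedded newlines. It is plain Python rather than anything in the Browse tool, and the function name `dirty_reasons` is made up for the example.

```python
def dirty_reasons(value: str) -> list[str]:
    """Return the reasons a string value would count as "Not OK"."""
    reasons = []
    if value != value.lstrip():
        reasons.append("leading whitespace")
    if value != value.rstrip():
        reasons.append("trailing whitespace")
    # Only newlines that survive trimming count as embedded.
    core = value.strip()
    if "\n" in core or "\r" in core:
        reasons.append("embedded newline")
    return reasons


records = ["clean", " lead", "trail ", "two\nlines"]
# Keep only the records that have at least one problem.
not_ok = [r for r in records if dirty_reasons(r)]
```

Returning the reasons rather than a plain flag makes it possible to see why a record is dirty before deciding how to clean it.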

Hi,

The current way to label or annotate a tool is that we need to double click the tool to bring up configuration window, then click on the annotation icon, then click on the annotation textbox.

My suggestion is when a tool is selected, simply press the Enter/Return key, then start typing the annotation right there (inline editing). Save a couple of clicks.

Thanks.

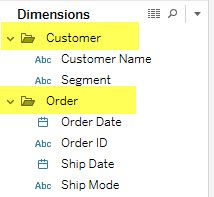

Tableau allows users to do three very useful things to make data more usable for end users, but this functionality is not available with the Publish To Tableau Server tool.

Foldering of dimensions/measures

Creating hierarchies out of dimensions

Adding custom comments to fields that are visible to users when they hover

This functionality allows subject matter experts to create data sources that can be easily understood by everyone within their organizations.

Please "star" this idea if you would like to see functionality in Alteryx that would enable you to create a metadata layer in the "Publish to Tableau Server" tool or in an accompanying tool.

As of Version 10.6, Alteryx supports connecting to ESRI File GeoDatabases from the input tool, but it doesn't support writing to a geodatabase. This is something we would really like to see implemented in a future version of Alteryx. Those of us working with ESRI products and/or any of the ESRI online mapping systems can do our processing in Alteryx and store large files as YXDBs, but ultimately need our outputs for display in ArcOnline to be in shapefile or geodatabase feature class format. Shapefiles have a size limit of 2 GB and limits on field name length. Many of the files we are working with are much larger than this and require geodatabases for storage, which are not limited by size (GDB size is unlimited, 1 TB max per feature class) and allow longer field names (160 chars). Right now, we have to write to one (or many) shapefile(s) from Alteryx, then import them into a GDB using ArcMap or ArcPy. This can be an arduous process when working with large amounts of data or multiple files.

The latest ESRI API allows both read and write access to GDBs -- is there a way we can add this to the list of valid output formats in Alteryx?

This idea is an extension of an older idea:

https://community.alteryx.com/t5/Alteryx-Product-Ideas/ESRI-File-Geodatabase/idi-p/1424

Hi All,

I was very happy to see the Bulk Loader introduced for Snowflake in the last release. This bulk loader is specifically available for Snowflake environments that are hosted on AWS, but does not provide functionality for environments using Azure. As Snowflake continues to build momentum, I imagine this will be a common request. Is there something in the pipeline to add this functionality?

For an interim solution, we will be working toward developing some generic scripts/snowsql to mimic that bulk load, but ultimately we'd love to have this as part of the tool.

Best,

devKev

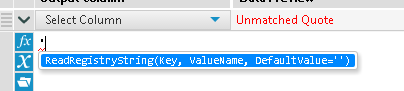

Ok Alteryx, we totally love your product. And I've got a super quick fix for you. Why on earth would you Autocomplete the ubiquitous tick mark as "ReadRegistryString(Key, ValueName, DefaultValue='')"?

I find myself in this situation constantly where, 'dummy' suddenly becomes 'dummyReadRegistryString('HKEY_LOCAL_MACHINE\SOFTWARE\SRC\Alteryx\4.1', 'InstallDir')' the moment I strike the enter key.

Pls help, I don't ask for much.

It would be really helpful to have a bulk load 'output' tool to Snowflake. This would be functionality similar to what is available with the Redshift bulk loader.

Currently it takes a reaaally long time to insert via ODBC or would require you to write a custom solution to get this to work.

This article explains the general steps but some of the manual steps outlined would have to be automated to arrive at a solution that is entirely encapsulated within a workflow.

http://insightsthroughdata.com/how-to-load-data-in-bulk-to-snowflake-with-alteryx/

I'd say that 95.437% of the Joins I do are straight Inner Joins.

So each of those times I have to remember to go down to the Select part of the Join tool and deselect all the fields I joined on the Right Side since they'll be duplicates.

I'd like a checkbox like below (defaulted to CHECKED) to deselect all the joined fields from the right hand side. In the rare cases where there's a need I could uncheck it.
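The behavior requested above can be sketched outside Alteryx as an inner join that drops the right-hand join field by default. This is a simplified illustration over lists of dictionaries; the function `inner_join`, the `drop_right_key` flag and the `Right_` prefix for clashing field names are assumptions for the example, not the Join tool's implementation.

```python
def inner_join(left, right, left_key, right_key, drop_right_key=True):
    """Inner-join two lists of dict records on one key field each."""
    # Index the right side by its join key for quick lookups.
    index = {}
    for row in right:
        index.setdefault(row[right_key], []).append(row)

    joined = []
    for l_row in left:
        for r_row in index.get(l_row[left_key], []):
            merged = dict(l_row)
            for name, value in r_row.items():
                if drop_right_key and name == right_key:
                    continue  # duplicate of the left join field, so skip it
                # Prefix clashing names so left-side values are not overwritten.
                out_name = f"Right_{name}" if name in l_row else name
                merged[out_name] = value
            joined.append(merged)
    return joined
```

Setting `drop_right_key=False` covers the rare case where the right-hand join field is still needed.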

How about a quick method of disabling a container.

Current state - Click on the container, pan the mouse all the way over to the tiny checkbox target in the configuration pane and click disable.

Future state - a little icon by the rollup icon that can be clicked to disable/enable, perhaps differentiated by a color change of the minimized pane?

I know what you're thinking, "talk about lazy, he's whining about moving the mouse (which his hand was already on) 2 cm along his desktop and clicking"... but still what an easy usability win and one less click to do a task I find myself repeating frequently.

I noticed that Tableau has a new connector to Anaplan in the upcoming release.

Does Alteryx have any plans to create an Anaplan connector?

Would love to see an option to disable a specific Output tool (rather than the global "Disable All Tools that Write Output" option). I'm envisioning the inverse of the Email tool, where there is a checkbox to enable Email... rather, the Output tool could have a check box that would disable that output (and ONLY that output), similar/consistent with the "Disable All Tools" function. A "Disable This Output" check box. The benefits would be a quick way to make sure not to overwrite something in one output (but still getting all the good content in all the other outputs) rather than having to go through the multiple clicks of adding to a container and then disabling the container. Could have benefits for connecting with Action tools/interface toggles as well. It would likely need to contain the same/similar formatting in Designer to indicate it has been disabled, though maybe a slightly different color so you could tell it was disabled differently?

(In a similar vein, would love to take this opportunity to bring up my favorite idea-that-has-not-been-implemented-yet-that-would-love-your-vote-and-attention, implementing a Warning that outputs are disabled when posting to Gallery...)

Cheers!

NJ

It would be great if there was an output option for Excel files where you could overwrite the data in the sheet, but keep the formatting in the sheet. Similar to how the Paste Values option works in Excel. This would allow me to create a template with data validation, conditional formatting, column widths, cell fill colors, etc., and set a workflow to run on a schedule and just paste the data into the existing template.

To get around this right now I have to output it to a separate tab and then paste the columns as values over the existing template. This is fine unless I am out of the office and need to bother someone else to do it. I know there have been many times where I wished this was an option outside of the report I am currently building. I am honestly surprised I couldn't find an idea already submitted about this!

Thanks,

Wes

Hello,

Tableau has a very useful "split" function that allows you to split a string on a delimiter and specify which of the resulting parts you want:

https://onlinehelp.tableau.com/current/pro/desktop/en-us/functions_functions_string.htm

Qlik has the same function, subfield : https://help.qlik.com/en-US/sense/February2019/Subsystems/Hub/Content/Sense_Hub/Scripting/StringFunc...

I think this is quite useful and a very standard feature.

Best regards,

Simon
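For readers unfamiliar with this kind of function, here is a small sketch of the idea: split a string on a delimiter and return only the requested part. The name `split_part` is made up, and the conventions used (1-based part numbers, negative numbers counting from the right, an empty string when the part does not exist) are assumptions for the example rather than a specification of the Tableau or Qlik functions.

```python
def split_part(text: str, delimiter: str, token_number: int) -> str:
    """Return the token_number-th piece of text split on delimiter."""
    if not delimiter or token_number == 0:
        return ""
    parts = text.split(delimiter)
    # Positive numbers are 1-based from the left, negative from the right.
    index = token_number - 1 if token_number > 0 else token_number
    if -len(parts) <= index < len(parts):
        return parts[index]
    return ""


second = split_part("a-b-c-d", "-", 2)   # second piece from the left
last = split_part("a-b-c-d", "-", -1)    # first piece from the right
```

Returning an empty string instead of raising an error keeps the function usable inside a formula that runs over every record.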

As a designer, I need to output data only when no data quality errors are encountered within a workflow. I suppose that I wouldn't want to see any errors, but if I am writing multiple output files and errors are encountered during the output processes (e.g. #3 of 4 fails), then I'm kind of out of luck. So let's focus on data quality. If Nulls are encountered in "Actual" data or unjoined records are found or dates are out of range, you name the issue, I don't want to output any data to specific output tools. Work-arounds exist. I can output to a staging file and conditionally schedule or use a conditional runner macro to output to the production data. But what I really want to do is to stop an output tool from receiving any data to output.

Today I handle this by counting error records that would be caught by a TEST tool and appending the count of these bad records to the data that would go to output(s). I filter for IsNull([Count]) and only when 0 ERRORS are found by the test tool, can data be output. Otherwise null records are received by the output tool and it quietly makes no changes.

My ask is to configure an output tool to be disabled if ERRORs exist. That means that the LAST thing to happen in the execution of a workflow will be the output processes. They will all be blocking tools and can't happen until there are no tools left to run except for the outputs (configured as blocked). Maybe this is a big ask.
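The "only write when zero errors are found" behavior described above can be sketched in plain Python as a final gated step. This is a simplified illustration, not Alteryx functionality; `count_errors` and `write_if_clean` are hypothetical names, and treating a null or empty required field as an error is an assumption standing in for whatever the Test tool would check.

```python
import csv
import io


def count_errors(rows, required_fields):
    """Count rows where any required field is null or empty."""
    return sum(
        1
        for row in rows
        if any(row.get(field) in (None, "") for field in required_fields)
    )


def write_if_clean(rows, required_fields, out):
    """Write rows as CSV only when no data quality errors exist.

    Returns True if data was written, False if the output was blocked.
    """
    if not rows or count_errors(rows, required_fields) > 0:
        return False  # leave the output untouched
    writer = csv.DictWriter(out, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)
    return True
```

Because every check runs before anything is written, the output either receives the full clean data set or is not touched at all, which is the blocking behavior the post asks for.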

Hi Everyone,

Many of the workflows my colleagues and I work with pull data from big databases. After a few steps downstream and some testing, we normally just add an output and then open that data in a new workflow to save time re-running the original workflow. Not that this is much of a burden, but I am used to copying and pasting tools from workflow A to workflow B, and you can't do that with the output, because in workflow B the output needs to be converted to an input. I just think it would be a cool added feature if possible. Anyone else agree?

Thank you,

Justin

With the number of users that use the Publish to Tableau Server macros to automate workflows into Tableau, I think it's about time we had a native tool that publishes to Tableau instead of the rather painful exercise of figuring out which version of the macro we are using and what version of Tableau Server we are publishing to. The current process is inefficient and frustrating when the server changes on either the Tableau or the Alteryx side.

I have had multiple instances of needing to parse a set of PDF files. While I realize that this has been discussed previously with workarounds here: https://community.alteryx.com/t5/Alteryx-Knowledge-Base/Can-Alteryx-Parse-A-Word-Doc-Or-PDF/ta-p/115...

having a native PDF input tool would help me significantly. I don't have admin rights to my computer (at work) so downloading a new app to then use the "Run Command" tool is inconvenient, requires approval from IT, etc. So, it would save me (and I'm sure others) time both from an Alteryx workflow standpoint each time I need it, but also from an initial use to get the PDFtoText program installed.
