Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by showing the dimensional constraints next to each of the pre-trained models;

> by adding a proper tool to split the training data correctly (so that each label has the same number of images);

> finally, by allowing the tool to use black-and-white images (I wanted to test it on MNIST, but the tool tells me it requires RGB images).
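The second suggestion (the same number of images per label) can be sketched in Python. This is a minimal illustration, not how the Image Recognition tool works: it assumes the training data is a list of hypothetical `(image_path, label)` pairs, downsamples every label to the size of the rarest one, and then splits each label into training and validation sets with the same ratio.

```python
import random
from collections import defaultdict

def balanced_split(samples, val_fraction=0.2, seed=0):
    """Balance labels by downsampling, then split each label with the same ratio.

    samples: list of (image_path, label) pairs (hypothetical input format).
    Returns (train, val) lists of (image_path, label) pairs.
    """
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)

    # Every label keeps as many images as the rarest label has.
    n_per_label = min(len(paths) for paths in by_label.values())
    n_val = int(n_per_label * val_fraction)

    rng = random.Random(seed)  # fixed seed so the split is repeatable
    train, val = [], []
    for label, paths in sorted(by_label.items()):
        chosen = rng.sample(paths, n_per_label)
        val.extend((p, label) for p in chosen[:n_val])
        train.extend((p, label) for p in chosen[n_val:])
    return train, val
```

With 10 "cat" images and 25 "dog" images, each label keeps 10 images, of which 8 go to training and 2 to validation.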

Question: do you plan to let users choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It certainly has room for improvement, but it is very simple to use, and I sincerely think it will help many more people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


Enable Alteryx to connect to Oracle databases that are configured to use External Authentication.

This should allow Alteryx workflows to connect to Oracle databases using different authentication mechanisms, e.g. Kerberos.

Please see this discussion for some analysis on what would be required in Alteryx to support Oracle Database External Authentication:

Essentially this would involve Alteryx allowing users to specify that a connection to an Oracle database will utilize external authentication.

Then when connecting to an Oracle database with external authentication, Alteryx would pass the relevant parameter to Oracle to indicate external authentication is required (and Alteryx would not pass user name and password info). Then authentication with the Oracle database would be controlled by the external authentication configuration on the computer running Alteryx.

For more information on Oracle Database External Authentication see:

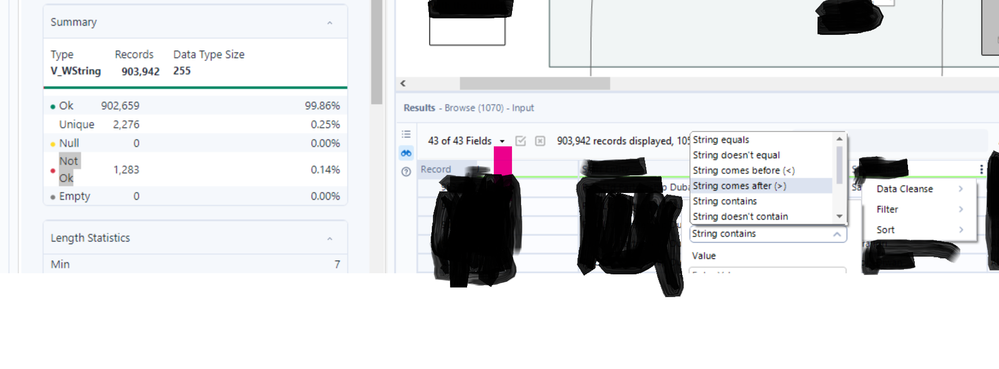

Embed the "Not ok" filter option in the Browse tool.

Hey there,

The performance profiling option on the "Runtime" tab is very helpful for identifying bottlenecks in a long-running workflow. However, this option (along with the entire "Runtime" tab) is missing if I convert the workflow to a macro.

Given that the only way to build relatively complex dependent chain jobs is to wrap them in dummy batch macros (using a macro like a sub-procedure, with flow of control on the master canvas), most of our work is done in macros, so it would be helpful to be able to profile their performance during testing.

When viewing the results of a workflow that has errors, could we add external error-resolution information when the user clicks the error message? Like Browse Everywhere, it could look up the error in the help and in Community posts.

cheers,

mark

The email tool, such a great tool! And such a minefield. Both of the problems below could and maybe should be remedied on the SMTP side, but that's applying a pretty broad brush for a budding Alteryx community at a big company. Read on!

"NOOOOOOOOOOOOOOOOOOO!"

What I said the first time I ran the email tool without testing it first.

1. Can I get a thumbs up if you've ever connected a data source directly to an Email tool thinking "this is how I attach my data to the email" and instead sent hundreds... or millions of emails? Oops. Alteryx, what if you added an expected limit, as is done with the Append tool: "Warn or Error if sending more than "n" emails." (Super cool if it could also detect more than "n" emails to the same address, but I'm not holding my breath.)

2. Make spoofing harder. Super useful, but... well, my company frowns on this kind of thing.
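The "Warn or Error if sending more than n emails" guard from point 1 could look roughly like the Python sketch below. The function and exception names are hypothetical; the check runs on the recipient list before anything would be sent, and it also covers the "more than n emails to the same address" case.

```python
import warnings
from collections import Counter

class TooManyEmailsError(Exception):
    """Raised when a batch would send more emails than the configured limit."""

def check_email_batch(recipients, max_emails=10, max_per_address=1, mode="error"):
    """Inspect the recipient list before sending.

    mode="error" stops the batch by raising; mode="warn" only emits a warning.
    Returns the list of problems found (empty if the batch looks fine).
    """
    problems = []
    if len(recipients) > max_emails:
        problems.append(f"{len(recipients)} emails exceeds the limit of {max_emails}")

    # Normalise addresses so "A@x.com" and "a@x.com " count as the same recipient.
    per_address = Counter(r.strip().lower() for r in recipients)
    repeated = sorted(a for a, n in per_address.items() if n > max_per_address)
    if repeated:
        problems.append(f"more than {max_per_address} email(s) to: {', '.join(repeated)}")

    if problems:
        message = "; ".join(problems)
        if mode == "error":
            raise TooManyEmailsError(message)
        warnings.warn(message)
    return problems
```

Defaulting to `mode="error"` mirrors the idea that an accidental mass send is worse than an interrupted workflow.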

There are quite a few reported instances in my organization of users terminating the installation because it takes more than one hour and they assume it has somehow "failed". They then cancel it and try to install again.

A friendly reminder message such as "This will take approximately one hour, so you can enjoy your coffee break" would definitely help.

Please kindly consider this.

It would be really helpful to have a bulk load 'output' tool to Snowflake. This would be functionality similar to what is available with the Redshift bulk loader.

Currently it takes a reaaally long time to insert via ODBC, and the only alternative is to write a custom solution.

This article explains the general steps, but some of the manual steps it outlines would have to be automated to arrive at a solution that is entirely encapsulated within a workflow.

http://insightsthroughdata.com/how-to-load-data-in-bulk-to-snowflake-with-alteryx/
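The general bulk-load pattern in Snowflake is to write the data to a local file, upload it to a stage with `PUT`, and then load it with `COPY INTO`. The Python sketch below only generates those statements; the stage name `@~/alteryx_bulk` and the helper name are assumptions, and running the SQL would need a Snowflake connection (for example through snowflake-connector-python), which is not shown.

```python
import csv
import os
import tempfile

def stage_and_copy_sql(header, rows, table, stage="@~/alteryx_bulk"):
    """Write rows to a local CSV file and return (path, put_sql, copy_sql).

    The stage name and this helper are hypothetical; the SQL still has to be
    executed over a Snowflake connection.
    """
    fd, path = tempfile.mkstemp(suffix=".csv")
    with os.fdopen(fd, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow(header)
        writer.writerows(rows)

    file_name = os.path.basename(path)
    # PUT uploads the local file to the stage; AUTO_COMPRESS gzips it on the way.
    put_sql = f"PUT file://{path} {stage} AUTO_COMPRESS=TRUE"
    # COPY INTO loads the staged (now .gz) file, skipping the header row.
    copy_sql = (
        f"COPY INTO {table} FROM {stage}/{file_name}.gz "
        "FILE_FORMAT = (TYPE = CSV SKIP_HEADER = 1 FIELD_OPTIONALLY_ENCLOSED_BY = '\"')"
    )
    return path, put_sql, copy_sql
```

Because `AUTO_COMPRESS=TRUE` gzips the uploaded file, the `COPY INTO` path ends in `.gz`. A local path containing spaces would need to be quoted in the `PUT` statement.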

Looking for a tool to replicate the Goal seek functionality built into Excel.

It seems this could be solved using R or iterative macros; however, a dedicated tool would make life much easier.
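For reference, a goal seek can be expressed as root finding: find x such that f(x) - target = 0. The Python sketch below uses bisection, which is swapped in for illustration (Excel's own Goal Seek algorithm may differ), and it requires an interval [low, high] over which f(x) - target changes sign.

```python
def goal_seek(f, target, low, high, tol=1e-9, max_iter=200):
    """Find x in [low, high] such that f(x) is (approximately) equal to target.

    Uses bisection, so f(low) - target and f(high) - target must have opposite signs.
    """
    g_low = f(low) - target
    g_high = f(high) - target
    if g_low == 0:
        return low
    if g_high == 0:
        return high
    if g_low * g_high > 0:
        raise ValueError("target is not bracketed by [low, high]")

    for _ in range(max_iter):
        mid = (low + high) / 2
        g_mid = f(mid) - target
        if abs(g_mid) < tol or (high - low) / 2 < tol:
            return mid
        # Keep the half of the interval where the sign change still happens.
        if (g_mid < 0) == (g_low < 0):
            low, g_low = mid, g_mid
        else:
            high = mid
    return (low + high) / 2
```

For example, `goal_seek(lambda price: price * 50, 1000, 0, 100)` returns a price of about 20.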

I use a mouse which has a horizontal scroll wheel. This allows me to quickly traverse the columns of excel documents, webpages, etc.

This interaction is not available in Alteryx Designer, and when working with wide data previews it would drastically improve my UX.

Hello,

I would like to suggest the ability to manage our virtual environment for Python modules within Alteryx. Some current workflows I am building would be far easier and more secure if I had access to the virtual environment that the Python code would run in.

Uses for modifying the virtual environment:

1) Setting environment variables in order to hide API Keys/DB credentials/etc.

2) Installing private GitHub repository packages into the environment.

3) Creating repeatable and easily maintainable ways to manage dependencies.

It would be important for these virtual environments to persist onto Alteryx Gallery, so that workflows behave identically on local machines and on the server. This could potentially be done through a requirements.txt file or some other environment initializer, but I'll leave the implementation to the experts. My preference would be for each workflow to contain its own virtual environment (as is best practice when developing Python scripts).
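As an illustration of use 1), a Python tool could read credentials from environment variables instead of hard-coding them in the workflow. The helper and the variable name `MY_API_KEY` are hypothetical; the environment itself would still have to be configured on whichever machine or server runs the workflow.

```python
import os

def get_secret(name):
    """Return a credential stored in an environment variable, failing loudly if it is missing."""
    value = os.environ.get(name)
    if not value:
        raise RuntimeError(f"Environment variable {name!r} is not set")
    return value

# Hypothetical usage inside a Python tool:
# api_key = get_secret("MY_API_KEY")
```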

Thank you,

It would be helpful to be able to embed a macro within my workflows so in the end I have one single file.

Similar to how an Excel file becomes a macro-enabled file, it would be great if the actual macro could be contained in the workflow. As it stands now, a macro that I insert into a workflow is similar to a linked cell in MS Excel that points to another file: if the macro is moved, the workflow breaks. I often work on a larger workflow that I save locally while developing. Once it's complete, I save the workflow to a network drive and have to delete the macros and reinsert them. It also makes it challenging to send a workflow to someone else, because I have to give them instructions on which macros to insert and where. Like a container, an embedded macro could be minimized, so to speak, to its normal icon, then expanded/opened if any edits were needed, and collapsed again when done.

Thanks for the consideration.

Getting simple information from a workflow, such as its name, run start date/time and run end date/time, is far more complex than it should be. Ideally the log would have, as separate, distinctly labelled line items, the workflow path and name, the start date/time, the end date/time and potentially the run time, to save having to do a calculation. An overall module status would also be useful: if there was an error in the run, the overall status is Error; if there was a warning, the overall status is Warning; otherwise it is Success.

Parsing out the workflow name and start date/time is challenge enough, but then trying to parse out the run time, convert that to a time and add it to the start date/time to get the end date/time makes retrieving basic monitoring information far more complex than it should be.
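To show the calculation that currently has to be done by hand, here is a minimal Python sketch that derives the end time and an overall status once the start time, run time and messages have been parsed out of the log. The start-time format and the "Level: message" convention are assumptions made for the example, not Alteryx's actual log layout.

```python
from datetime import datetime, timedelta

def summarize_run(start_text, run_seconds, messages):
    """Compute end time, run time and an overall status for one workflow run.

    start_text: start time as "YYYY-MM-DD HH:MM:SS" (assumed format).
    messages: strings shaped like "Level: text" (assumed convention).
    """
    start = datetime.strptime(start_text, "%Y-%m-%d %H:%M:%S")
    end = start + timedelta(seconds=run_seconds)

    # Overall status: Error beats Warning, which beats Success.
    levels = {m.split(":", 1)[0].strip().lower() for m in messages}
    if "error" in levels:
        status = "Error"
    elif "warning" in levels:
        status = "Warning"
    else:
        status = "Success"
    return {"start": start, "end": end, "run_time": end - start, "status": status}
```

For instance, a run starting at 2020-03-01 08:00:00 that takes 3725.5 seconds ends at 09:02:05.5, and a single warning message makes the overall status Warning.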

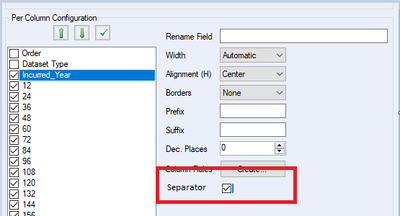

First off, let me say that I really love that the Render tool adds commas to your numbers when you output them to Excel. You can even control the number of decimals!

However, there are times when I wish I could turn the commas off. For example, I have a column that represents years. I want it to be a number, but I don't want commas. I can see this XML coming out of my Table tool:

.de41ddeb2857c4579b858debce63bfbec tbody .column0 { numeric:true; decimal-places:0; }

I would love an additional property, such as separator:false, that could be set in the Table tool to turn off the separator. I've mocked up the Table tool here:

With my limited knowledge, I'm guessing Alteryx would need to change or enhance the way its PCXML is structured.
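The effect of the proposed separator switch can be illustrated with Python's format specification, where the `,` option adds a thousands separator. This is only an analogy for the requested behaviour; the function name and its `separator` parameter are hypothetical and not part of the Table tool.

```python
def format_number(value, decimal_places=0, separator=True):
    """Render a number with a fixed number of decimals and an optional thousands separator."""
    spec = f"{',' if separator else ''}.{decimal_places}f"
    return format(value, spec)

print(format_number(2019))                   # 2,019  (current behaviour for a year column)
print(format_number(2019, separator=False))  # 2019   (what separator:false would give)
print(format_number(1234567.891, 2))         # 1,234,567.89
```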

For the Output tool, the Microsoft Excel (*.xlsx) file format (the non-Legacy one) doesn't have the "Delete Data & Append" option that the Legacy and 97-2003 Excel formats have.

Having Delete Data & Append for the most recent version of Excel would be very beneficial. Without it, there does not appear to be a way to update an existing Excel sheet using an Alteryx workflow while preserving the sheet's formatting. The Overwrite/Drop option removes all formatting.

I have this workflow refreshing an Excel sheet daily and then emailing it to a distribution list at the end of the workflow. Unfortunately, right now I have to use the 97-2003 format to preserve the sheet's formatting when it is automatically refreshed and emailed each day.

Can you please assess adding this option? Thanks!

With more and more enterprises moving to cloud infrastructure, and Azure being one of the most widely used platforms, there should be support for its authentication service, Azure Active Directory (AAD).

Currently, if you are using cloud services such as Azure SQL Server, the only way to connect is with a SQL login, which in a corporate environment is insecure and adds administrative overhead.

The only workaround I have found so far is to create an ODBC 17 connection (which supports AAD authentication) and connect to it from Alteryx.

Please see the post below covering that topic:
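For reference, a DSN-less connection string for Microsoft's ODBC Driver 17 for SQL Server with Azure AD authentication can be assembled as below, assuming that is the driver meant by "ODBC 17". The helper name, server and database are placeholders, and `ActiveDirectoryIntegrated` is only one of the driver's `Authentication` values; which one works depends on how the organisation's Azure AD is set up.

```python
def azure_sql_odbc_connection_string(server, database, auth="ActiveDirectoryIntegrated"):
    """Build an ODBC connection string that uses Azure AD instead of a SQL login.

    No user name or password is embedded; the driver handles authentication.
    """
    parts = {
        "Driver": "{ODBC Driver 17 for SQL Server}",
        "Server": f"tcp:{server},1433",
        "Database": database,
        "Authentication": auth,
        "Encrypt": "yes",
    }
    return ";".join(f"{key}={value}" for key, value in parts.items()) + ";"
```

The resulting string could then be passed to an ODBC client such as `pyodbc.connect`.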

In order to run a canvas using either AMP or E1, the user has to perform at least five operations that are not obvious:

a) click on the canvas whitespace to get to the workflow configuration. If this configuration pane is not docked, you first have to enable it

b) set focus in this window

c) change to the runtime tab

d) scroll down past all the confusing, technical settings that most end users are nervous to touch, like "Memory limits", temporary file location and code page settings, to click on the last option for the AMP engine.

e) and then hit the run button

A better way!

Could we instead simplify this and just put a drop-down on the run button so that you can run with the old engine, or run with the new engine? Or even better, have 2 run buttons - run with old engine, and run with super-fast cool new engine?

- This puts the choice where the user is looking at the time they are looking to run (If I want to run a canvas - I'm thinking about the run button, not a setting at the bottom of the third tab of a workflow configuration)

- It also makes it super easy for users to run with E1 and AMP without having to do 10 clicks to compare - this way they can very easily see the benefit of AMP

- It makes it less scary since you are not wading through configuration changes like Memory or Codepages

- and finally - it exposes the new engine to people who may not even know it exists 'cause it's buried on the bottom of the third tab of a workflow configuration panel, under a bunch of scary-sounding config options.

cc: @TonyaS

There are circumstances where the Python section of the Alteryx install fails, but it does not report the failure; in fact, it reports success. The only way to check whether the Jupyter install failed is to look for error messages in Jupyter.log.

Can we add two features to Alteryx install / platform to manage this:

- If the installer for Python fails - please can you make this explicit in feedback to the user

- Can we also add a way for the user to repair the Python install - either using standard MSI Repair functionality, or a utility or a menu system in Designer.
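Until the installer reports this itself, the manual check described above (looking for error messages in Jupyter.log) could be scripted. The Python sketch below assumes only that the log is a plain text file; its location and the keywords treated as errors are assumptions, not a documented log format.

```python
import re

# Keywords assumed to indicate a problem; adjust to what the real log contains.
ERROR_PATTERN = re.compile(r"\b(error|traceback|failed)\b", re.IGNORECASE)

def find_install_errors(log_path):
    """Return (line_number, line) pairs from a log file that look like errors."""
    hits = []
    with open(log_path, encoding="utf-8", errors="replace") as f:
        for number, line in enumerate(f, start=1):
            if ERROR_PATTERN.search(line):
                hits.append((number, line.rstrip("\n")))
    return hits
```

An empty result only means none of the assumed keywords appeared; it is not proof that the install succeeded.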

Thank you

Sean

As of 2019.4, Alteryx leverages the Tableau Hyper API to output .hyper files. Unfortunately, our hardware is not compatible with the Tableau Hyper API. It would be great if Alteryx could offer the best of both worlds: use the new Tableau Hyper API when possible, but fall back to the old (pre-2019.4) method when the machine's hardware doesn't support it. Thanks!

The autorecover feature should also back up macros. I was working on a macro when there was an issue with my code. I have autorecover set to save very frequently, so I went there to restore a previous version. To my great surprise, my macro wasn't being saved behind the scenes at all. My workflow had its expected backups, but my macro did not. Please let autorecover back up files of any extension.

Thanks!

When moving a tool container, all of the tools within it become misaligned with the canvas grid. Moving any single tool immediately re-aligns it to the grid, which puts it out of alignment with the rest of the tools in the container.

Example: Put 3 tools in a row in a tool container, all aligned horizontally. Next, move the container. Now, move the middle tool, then try to place it back in alignment with the other two. You won't be able to, because they are out of alignment with the canvas grid.

Please fix this.
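The effect described above can be reproduced in a few lines of Python. In this sketch the grid spacing of 12 and the helper names are hypothetical: moving a container by an arbitrary offset takes every tool off the grid, and snapping one tool afterwards breaks the row. Snapping the container's offset itself (one possible fix, not necessarily how Designer would do it) keeps every tool on the grid and aligned with the others.

```python
GRID = 12  # hypothetical grid spacing in pixels

def snap(x, grid=GRID):
    """Round a coordinate to the nearest grid line."""
    return round(x / grid) * grid

def move_container(tool_xs, dx, snap_offset=True):
    """Move every tool in a container by dx.

    With snap_offset=True the offset itself is snapped to the grid, so tools that
    started on the grid stay on it and keep their relative alignment.
    """
    offset = snap(dx) if snap_offset else dx
    return [x + offset for x in tool_xs]

row = [0, 60, 120]                                      # three aligned tools, all on the grid
unsnapped = move_container(row, 7, snap_offset=False)   # [7, 67, 127]: all off the grid
unsnapped[1] = snap(unsnapped[1])                       # moving the middle tool snaps it to 72
print(unsnapped)                                        # [7, 72, 127]: no longer aligned
print(move_container(row, 7))                           # [12, 72, 132]: still on the grid
```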
