Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by displaying the image-dimension constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

> and finally, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
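For the second point, here is a minimal plain-Python sketch of a label-balanced split, assuming the training set is a list of (image path, label) pairs. It undersamples every label down to the rarest one and then splits each label's images into train and test sets. The function name `balanced_split` and the 80/20 default are hypothetical, and undersampling is only one way to balance classes; this is not how the Image Recognition tool works internally.

```python
import random
from collections import defaultdict

def balanced_split(samples, train_fraction=0.8, seed=0):
    """Split (path, label) pairs into train and test sets so that every label
    contributes the same number of images (undersampling to the rarest label)."""
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)
    per_label = min(len(paths) for paths in by_label.values())
    n_train = int(per_label * train_fraction)
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train, test = [], []
    for label in sorted(by_label):
        paths = list(by_label[label])
        rng.shuffle(paths)
        chosen = paths[:per_label]  # drop surplus images of over-represented labels
        train += [(p, label) for p in chosen[:n_train]]
        test += [(p, label) for p in chosen[n_train:]]
    return train, test

# Example: 10 "cat" images but only 6 "dog" images.
samples = [(f"cat_{i}.png", "cat") for i in range(10)] + [(f"dog_{i}.png", "dog") for i in range(6)]
train, test = balanced_split(samples)
```

With these inputs each label ends up with 4 training and 2 test images; the 4 surplus cat images are simply not used.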

Question: do you plan to let the user choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will allow more people to understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


It would be great if there were an output option for Excel files that overwrites the data in the sheet but keeps the sheet's formatting, similar to how the Paste Values option works in Excel. This would allow me to create a template with data validation, conditional formatting, column widths, cell fill colors, etc., set a workflow to run on a schedule, and just paste the data into the existing template.

To get around this right now, I have to output the data to a separate tab and then paste the columns as values over the existing template. This is fine unless I am out of the office and need to bother someone else to do it. There have been many times, outside of the report I am currently building, when I wished this was an option. I am honestly surprised I couldn't find an idea already submitted about this!

Thanks,

Wes

Most of the finance industry uses HFM or Essbase, finance solutions that are flexible for storing data and generating reports. Alteryx should create a direct connection to Hyperion or Essbase so that it is easier to connect to the data in those systems and feed it directly into other systems' reports.

When packaging a workflow or uploading it to a Server environment, the ability to manage the assets that need to be included is critical, particularly in more complex solutions that may have numerous dependencies.

The asset management display should be modified to present two columns: the first showing just the file name and extension of the asset, and the second showing the full path of the asset. This easy change would remove the need to scroll left and right to see the file name when longer paths are used.

An alternative approach would be to allow the window to be resizable so the user could see everything without the need to scroll.

The ability to filter/sort the assets by type would also be useful with the following categories: Macros (.yxmc), Data Files (supported file types from file input screen), Other Assets.
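The proposed layout can be sketched in a few lines of Python: split each asset path into a file name and full path, and tag it with one of the three categories above. The set of data-file extensions below is a hypothetical stand-in for the file types the Input Data tool actually supports. `ntpath` is used so that Windows and UNC paths are parsed the same way on any OS.

```python
import ntpath

# Hypothetical list of data-file extensions; the real list would come from
# the file types supported on the file input screen.
DATA_EXTENSIONS = {".yxdb", ".csv", ".xlsx", ".xls", ".json"}

def asset_rows(paths):
    """Return (category, file name, full path) rows, sorted by category then
    name, so the file name is visible without scrolling through a long path."""
    rows = []
    for path in paths:
        name = ntpath.basename(path)            # file name + extension only
        ext = ntpath.splitext(name)[1].lower()
        if ext == ".yxmc":
            category = "Macros"
        elif ext in DATA_EXTENSIONS:
            category = "Data Files"
        else:
            category = "Other Assets"
        rows.append((category, name, path))
    return sorted(rows)

rows = asset_rows([
    r"\\server\share\very\long\folder\structure\Lookup.xlsx",
    r"C:\Macros\Clean.yxmc",
    r"C:\Docs\logo.png",
])
```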

Hello,

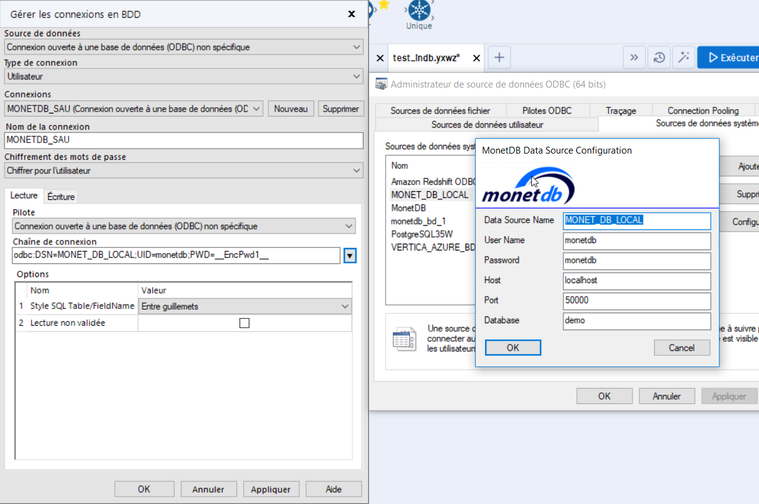

My issue should be very easy to solve. I want to use the generic ODBC In-Database connection for a specific database (MonetDB here, but that isn't important).

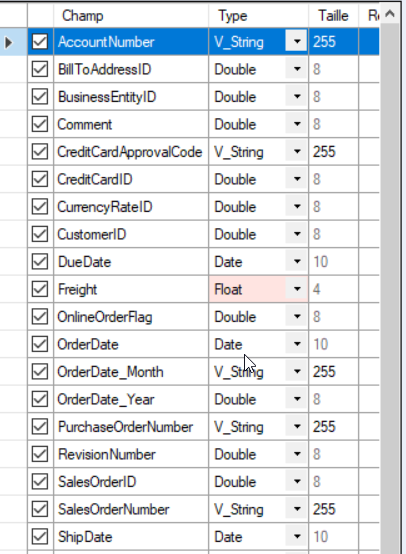

The connection works just fine. However, I cannot create a table because the data types are changed into types that do not even exist. Here is my data, with some Date fields:

And here is the very interesting error message that the Data Stream In tool gives me:

Error: Data Stream In (2): Error creating table "formation.temp1": [MonetDB][ODBC Driver 11.31.11]Type (datetime) unknown in: "create table "formation"."temp1" ("AccountNumber" varchar(255),"BillToAddressID"

syntax error, unexpected IDENT in: ""Freight""

CREATE TABLE "formation"."temp1" ("AccountNumber" varchar(255),"BillToAddressID" float,"BusinessEntityID" float,"Comment" float,"CreditCardApprovalCode" varchar(255),"CreditCardID" float,"CurrencyRateID" float,"CustomerID" float,"DueDate" datetime,"Freight" real,"OnlineOrderFlag" float,"OrderDate" datetime,"OrderDate_Month" varchar(255),"OrderDate_Year" float,"PurchaseOrderNumber" varchar(255),"RevisionNumber" float,"SalesOrderID" float,"SalesOrderNumber" varchar(255),"ShipDate" datetime,"ShipMethodID" float,"ShipToAddressID" float,"Status" float,"SubTotal" float,"TaxAmt" float,"TotalDue" float)

1/ My field is a Date; why do you want to convert it to Datetime?

2/ Datetime is not even a usual field type in SQL databases (at least it is not supported by MonetDB, Vertica, PostgreSQL, Oracle, etc.). It should obviously be TIMESTAMP.

Currently, this generic In-Database ODBC connection cannot be used at all!
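To make the request concrete, here is a small Python sketch of the kind of type mapping a generic ODBC writer could use, emitting standard SQL types (DATE, TIMESTAMP) instead of `datetime`. The Alteryx type names (`V_String`, `Int32`, `Double`, `Float`, `Date`, `DateTime`) are real field types, but the mapping dictionary and the `create_table_sql` helper are hypothetical illustrations, not what Alteryx does today.

```python
# Hypothetical mapping from Alteryx field types to standard SQL column types
# for a generic ODBC target; illustrative only.
STANDARD_SQL_TYPES = {
    "V_String": "VARCHAR(255)",
    "Int32": "INTEGER",
    "Double": "DOUBLE PRECISION",
    "Float": "REAL",
    "Date": "DATE",            # keep a Date as DATE instead of widening it
    "DateTime": "TIMESTAMP",   # standard SQL name; DATETIME is not portable
}

def create_table_sql(table, fields):
    """Build a CREATE TABLE statement from (field name, Alteryx type) pairs."""
    cols = ", ".join(f'"{name}" {STANDARD_SQL_TYPES[ftype]}' for name, ftype in fields)
    return f"CREATE TABLE {table} ({cols})"

sql = create_table_sql(
    '"formation"."temp1"',
    [("AccountNumber", "V_String"), ("DueDate", "Date"), ("Freight", "Float")],
)
```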

Hello,

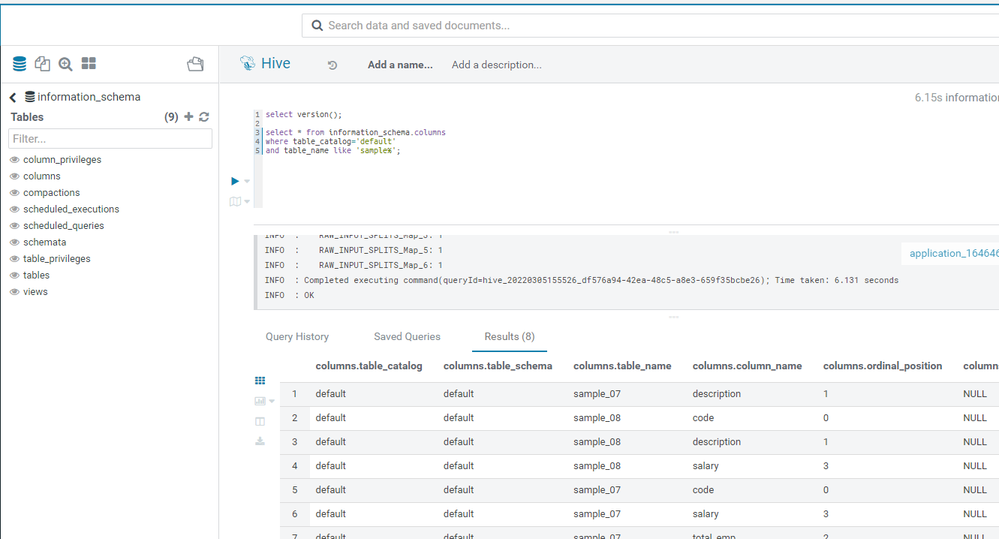

Currently, when you connect to a database, Alteryx runs a batch of queries to determine which database it is (cf. https://community.alteryx.com/t5/Alteryx-Designer-Ideas/Smart-Visual-Query-Builder-for-in-db-less-te... where I suggest a solution to speed up the process), and then it queries the metadata. To get the columns of each table, Alteryx runs SHOW TABLES and then loops over each table. This is really slow.

However, since Hive 3.0, an information_schema listing the columns of each table is available. I suggest using information_schema.columns instead of the time-consuming loop.
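As a rough illustration of the single-query approach: one query against information_schema.columns returns every (table, column, type) row for a schema, and a single pass groups them per table, replacing SHOW TABLES plus one lookup per table. The column names follow the SQL-standard information_schema; whether a given Hive 3.x deployment exposes them exactly like this is an assumption to verify. The `?` placeholder assumes a qmark-style ODBC driver such as pyodbc, and `group_columns` is a hypothetical helper.

```python
# One metadata query instead of SHOW TABLES + one lookup per table.
# Column names follow the SQL-standard information_schema (assumed for Hive 3.x).
COLUMNS_QUERY = (
    "SELECT table_name, column_name, data_type "
    "FROM information_schema.columns "
    "WHERE table_schema = ? "
    "ORDER BY table_name, ordinal_position"
)

def group_columns(rows):
    """Group (table_name, column_name, data_type) rows into
    {table_name: [(column_name, data_type), ...]} in a single pass."""
    tables = {}
    for table, column, data_type in rows:
        tables.setdefault(table, []).append((column, data_type))
    return tables

# e.g. rows = cursor.execute(COLUMNS_QUERY, [schema]).fetchall() with pyodbc
rows = [("customers", "id", "int"), ("customers", "name", "string"), ("orders", "id", "int")]
metadata = group_columns(rows)
```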

PS: I don't know whether this is linked to Active Query Builder, the third-party component behind the Visual Query Builder. If so, it would be a good idea to update it as suggested here https://community.alteryx.com/t5/Alteryx-Designer-Ideas/Update-Query-Builder-component/idi-p/799086

Best regards,

Simon

There are many circumstances in which you have to build an iterative macro where not just the iterating data set needs to change on every iteration, but also a second data set.

Think of this as a loop where two different variables are updated on every iteration, not just the control variable of a "For xxx" loop.

The way users work around this is to use a temporary yxdb file: instead of a macro input, you read from the yxdb and then write back to the same yxdb. This lets you pretend that you can adjust 2 different data sets on every cycle of the loop. There are 4 downsides to this:

a) User complexity - this breaks the conceptual simplicity of macro inputs since now the users have to understand that in situation X I use macro inputs; and in situation Y I have to use some other type of tool.

b) Speed penalty - writing to disk is between 1,000x and 1,000,000x slower than working with data in memory (especially if it's in cache), so forcing this through a yxdb file incurs a speed penalty that is just not needed.

c) Blocking penalty - because you can't write to a file that you're still reading from, you need to pepper this with Block Until Done tools, and you need to initialize the macro with a first write to the yxdb file outside the macro, which further hurts speed. Given the nuanced behaviour of Block Until Done, this also introduces user complexity issues.

d) Self-contained - because you have to initialize these files outside the macro, the macro is no longer self-contained and portable (which breaks the principle of Information Hiding, a key pillar of good modular, decoupled software design).

The other way users work around this is to serialize their entire second recordset into a field, which then gets tacked onto the iterating data set of the iterative macro. This is HIGHLY wasteful because you then have to build a serialize & deserialize process for this second recordset. It fixes the speed and blocking penalties from above, but introduces computational overhead that generates no value, and makes this even more complex for users - a further blocker to using macros.

Recommendation:

We could make this simpler by allowing users to create multiple pairs of macro input / macro outputs so that 2 or 3 or n different data sets can be updated with every iteration.

Below is a screenshot demonstrating this, from an Advent of Code challenge. The details of the problem are not important; the issue at hand is that there are 2 record sets which both need to be updated on every iteration.

cc: @NicoleJohnson @Samanthaj_hughes @stevea
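The proposed "multiple macro input/output pairs" behave like an ordinary loop that carries two data sets from one iteration to the next, with nothing written to disk in between. A minimal Python sketch of that idea, where `iterate_two` and the example `step` function are hypothetical and the iteration cap only loosely mirrors an iterative macro's maximum-iterations setting:

```python
def iterate_two(primary, secondary, step, max_iterations=100):
    """Feed both data sets back into `step` on every iteration (like two
    macro input/output pairs) until the primary set is empty or the cap is hit."""
    for _ in range(max_iterations):
        if not primary:
            break
        primary, secondary = step(primary, secondary)
    return primary, secondary

def move_and_double(pending, done):
    """Example step: take one record off the iterating set and append a
    derived record to the second set."""
    head, *rest = pending
    return rest, done + [head * 2]

remaining, results = iterate_two([1, 2, 3], [], move_and_double)
```

Both sets stay in memory across iterations, so there is no yxdb round trip, no Block Until Done, and no serialization of the second set into a field.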

Currently I find myself always wanting to replace the DateTime field with a string, or vice versa.

It would be nice to have a radio button to choose whether to append the parsed field or to replace the current field with the parsed field.

I understand that all you need is a Select tool afterwards, but this would be a nice quality-of-life change, especially where the field may be dynamically updated.
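The requested option boils down to one switch: write the parsed value back into the original field, or add it as a new field. A small Python sketch over rows represented as dicts; the `replace` flag and the `_parsed` suffix for the appended field are hypothetical names, not the DateTime tool's actual settings.

```python
from datetime import datetime

def parse_datetime_field(rows, field, fmt, replace=False, output_field=None):
    """Parse `field` in each row with `fmt`. With replace=True the parsed value
    overwrites the original field; otherwise it is appended under
    `output_field` (hypothetical default: field + '_parsed')."""
    target = field if replace else (output_field or f"{field}_parsed")
    out = []
    for row in rows:
        new_row = dict(row)  # leave the input rows untouched
        new_row[target] = datetime.strptime(row[field], fmt)
        out.append(new_row)
    return out

rows = [{"OrderDate": "2021-03-15"}]
appended = parse_datetime_field(rows, "OrderDate", "%Y-%m-%d")
replaced = parse_datetime_field(rows, "OrderDate", "%Y-%m-%d", replace=True)
```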

Hi,

It would be great if users had the option to display the number of records that go in and out of the different tools on the canvas. This would let users see very quickly how many records are in their datasets, and especially analyze the results of specific actions such as joins, filters, etc. without needing to open each individual tool. With joins in particular, this can be very useful to quickly see how many of your rows have been successfully joined. I think this would give users a feeling that they have more control over their data and a better understanding of what is happening in Alteryx. Also, if you want to quickly review a complex workflow (especially one that is not your own), this could be a huge timesaver: simply run the workflow and follow the numbers to see what is happening and identify tools that might cause issues.

Love to hear what you think!
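To show which numbers would be useful on a join, here is a Python sketch that computes the records in and out of an inner join on one key, split like the Join tool's J (joined), L (left unjoined) and R (right unjoined) outputs. The `join_counts` helper and its dictionary keys are hypothetical.

```python
from collections import Counter

def join_counts(left, right, key):
    """Record counts in and out of an inner join on `key`, plus the unmatched
    records on each side (like a join's J, L and R outputs)."""
    right_keys = Counter(r[key] for r in right)
    left_keys = Counter(l[key] for l in left)
    joined = sum(right_keys[l[key]] for l in left)  # duplicates multiply rows
    left_only = sum(1 for l in left if l[key] not in right_keys)
    right_only = sum(1 for r in right if r[key] not in left_keys)
    return {"left_in": len(left), "right_in": len(right),
            "joined": joined, "left_only": left_only, "right_only": right_only}

counts = join_counts([{"id": 1}, {"id": 2}, {"id": 3}],
                     [{"id": 2}, {"id": 2}, {"id": 4}], "id")
```

Note that a duplicated key on the right side produces two joined rows from one left record, which is exactly the kind of surprise that visible counts would make obvious.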

Presently, when mapping an Excel file to an Input tool, the tool only recognizes sheets; it does not recognize named tables (ranges) as possible inputs. When using Power BI to read Excel inputs, I can select either sheets or named ranges as input. The Alteryx Input tool should do the same.

Thank you to @LeahK for suggesting that this should be a separate idea (cc @JulieH, @TaraM, @LeahK, @JoeM).

The lack of home-use / learning editions of Alteryx is a serious mid-term competitive threat for Alteryx - and it's well worth both this Alteryx community and the Alteryx Community Management team making this a key priority for the Alteryx platform.

Rationale:

The BI/Data science space is very competitive (as evidenced by the recent Gartner 2016 Magic Quadrants), and although Alteryx was given very favourable reviews on some factors, there were serious concerns about licensing costs and time to productivity.

Clearly, Alteryx succeeds in direct proportion to the size of its active user base - so the question is "How do we increase the licensed / deployed user base?", and I believe that there are 3 factors, all related to the issue of learning-edition Alteryx licenses.

a) Time to productivity: Right now - I cannot ask one of my staff to learn Alteryx at home and prove their capability before paying several thousand dollars to give them a license. Once I give them a license; it then takes them 2-3 months to become productive, and we run the lottery of finding out whether this person was a good fit for this kind of role in the first place. Solution: Make Alteryx available in a learning edition at home with a robust certification program; and a learning progression built in - that way I can tell my staff "I will give you a license once you demonstrate your competence by learning to this level". How does this help Alteryx: lower time to productivity = more attractive product

b) Size of committed user base: As tech professionals, we take our tech bias with us to our new role (the "Affinity effect"), and often have some leverage in tool selection. The more people know Alteryx and are invested in their Alteryx skill set, the more this "Affinity effect" will start to show in sales figures. This also makes the tool stickier, reducing the defector population if users can see and use the latest features at home.

Solution: Give the learning edition away for free - Pentaho Spoon is currently free for learning, as is Tableau Public, and MS Power BI is incredibly cheap to learn. If Alteryx doesn't do this, others definitely will, and this "Affinity Effect" will be washed out.

c) Increase the skill pool in the market: The more widespread the skills are, the more likely I am to purchase a toolset. So if Alteryx skills are very broadly available in the market, it's easier to make the purchase decision for Alteryx rather than competitors. However, right now the skillset growth is severely constrained, so Alteryx is making the "Buy Alteryx" decision needlessly harder.

Solution: Make Alteryx available for training; learning; & Certification for free.

Note: Although this is directly contrary to my personal interests in the short term (the smaller the number of people with skillX, the higher the market value), it is also long-term selfish because I will be able to increase the impact within my firm by having access to a greater pool of skilled and instantly productive workers in the market.

Although price may be an issue - this is a separate matter. I believe that Alteryx can do a lot to create future sales pipeline, and a committed and very sticky user base by providing this home-learning & certification program. In my mind - this is probably one of the biggest competitive opportunities & threats facing Alteryx right now, given the velocity of this particular industry & segment.

I'd be very happy to talk this through in detail with the Alteryx team if that's helpful (just PM me)

Many thanks

Sean

It would be nice to be able to concatenate numeric values (integers, doubles, etc.) directly in the Summarize Tool.

I know this would involve converting the values to strings on the backend, but I don't believe there would be any data loss when going from numeric to string. I know this can be done by using other tools like Select or Formula to convert to string before the Summarize, but I don't see any reason why this couldn't be accomplished in a single tool.
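The operation itself is small, as this Python sketch shows: convert each number to text and join with a separator. In Python 3, `str()` of a float produces the shortest string that reads back as the same value, so the round trip is lossless; whether Alteryx's own numeric-to-string conversion behaves the same way (for example with its precision settings) is an assumption. `concat_numbers` is a hypothetical name.

```python
def concat_numbers(values, separator=","):
    """Concatenate numeric values into one string, converting each with str()."""
    return separator.join(str(v) for v in values)

joined = concat_numbers([3, 2.5, 0.1])
# Parsing the pieces back recovers the original values.
restored = [float(s) for s in joined.split(",")]
```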

Thanks,

Paul

Hello,

I work for a company with circa 250K employees. We are in the process of shifting all documents over to OneDrive and I've noticed that when I have an Alteryx workflow that uses inputs stored on OneDrive the connection can be very intermittent. I use the UNC file naming protocol for my input/directory tools, but more often than not I need to run a VBA script that accesses OneDrive before Alteryx tools will connect.

There are a couple of posts on this community about this, but nothing on the ideas board. I believe the SharePoint connector is being updated for v11, but nothing for OneDrive.

I'd like there to be better integration to OneDrive for business and SharePoint Online please.

Thanks,

John.

Is it possible to add a search feature to the Summarize Tool that is similar to the search feature in the Select Tool? Selecting specific fields to summarize in small datasets is fine, but if I am dealing with a table that has 200 fields, searching for a specific field can be cumbersome. Being able to type in a few key letters to filter the available fields would be helpful.

I have recently added an Azure Data Lake v2. The Azure input/output connectors do not work with this version of Azure Data Lake.

It appears that Alteryx adds ".azuredatalakestore.net" to the file path. This works for V1, but is not needed for V2.

Are there any plans to build a connector for Azure Data Lake v2?
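For reference, the two generations use different endpoints in public Azure: Gen1 accounts are addressed as `<account>.azuredatalakestore.net`, while Gen2 accounts go through the DFS endpoint `<account>.dfs.core.windows.net`. A connector would need to pick the suffix per generation, roughly as in this sketch (the `adls_host` helper is hypothetical, and sovereign clouds use other suffixes that are not covered here).

```python
def adls_host(account, generation):
    """Return the public-Azure endpoint host for an Azure Data Lake account."""
    if generation == 1:
        return f"{account}.azuredatalakestore.net"   # Data Lake Storage Gen1
    if generation == 2:
        return f"{account}.dfs.core.windows.net"     # Data Lake Storage Gen2 (DFS endpoint)
    raise ValueError("generation must be 1 or 2")

gen1 = adls_host("mydatalake", 1)
gen2 = adls_host("mydatalake", 2)
```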

As simple as the title: an In-Database Block Until Done tool would be a pretty nice feature for controlling the execution of a workflow.

We're currently using the RegEx and Text To Columns tools to parse raw HTML text into the appropriate format when web scraping, whereas a tool that could at least parse tables would be hugely beneficial.

This functionality exists within Qlik so it would be nice to have this replicated in Alteryx.

Obviously, we need to retain the ability to scrape raw HTML, but automatically parsing data using the <td>, <th> and <tr> tags would be nice.
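Parsing tables from the `<tr>`, `<th>` and `<td>` tags is fairly mechanical, as this sketch built on Python's standard `html.parser` shows. `TableParser` is a hypothetical class, not an Alteryx or Qlik API. As written it does not handle nested tables or HTML that omits closing `</td>`/`</tr>` tags, which a production tool would need to cope with.

```python
from html.parser import HTMLParser

class TableParser(HTMLParser):
    """Collect every <table> as a list of rows; each row holds the text of
    its <th>/<td> cells."""
    def __init__(self):
        super().__init__()
        self.tables = []   # one entry per <table>, each a list of rows
        self._row = None   # cells of the row currently being read
        self._cell = None  # text fragments of the cell currently being read

    def handle_starttag(self, tag, attrs):
        if tag == "table":
            self.tables.append([])
        elif tag == "tr":
            self._row = []
        elif tag in ("td", "th"):
            self._cell = []

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None and self._row is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None and self.tables:
            self.tables[-1].append(self._row)
            self._row = None

    def handle_data(self, data):
        if self._cell is not None:  # ignore text outside table cells
            self._cell.append(data)

parser = TableParser()
parser.feed("<table><tr><th>State</th><th>Capital</th></tr>"
            "<tr><td>Texas</td><td>Austin</td></tr></table>")
```

Each parsed table is then a list of rows that maps directly onto records, with the `<th>` row usable as field names.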

In the following page there is a table showing the states and territories of the US:

As this functionality exists elsewhere it would be nice to incorporate this into Alteryx.

See the discussion on this page:

Broadly, in all versions of Alteryx Designer, you can use the Annotation tab to rename a tool. This is awesome when running in Designer, because you can then easily search for certain tool names, better document your workflow, and see the custom tool name in the Workflow Results.

However, when log files are generated, whether via email, the AlteryxGallery settings, or an AlteryxEngineCMD command, each tool is recorded using only its default name of "ToolId Toolnumber", which is not particularly descriptive and makes these log files harder to parse in the case of an error.

Having the custom names show in these log files would go a long way towards improving log readability for enterprise systems, and would be an amazing feature addition/fix. For users who prefer the default format, this could be considered a request to ADD the custom names alongside the existing format. For example, "Input Data 1" that I have renamed to "Load business Excel File" could be shown in the log as:

00:00:0.003 - ToolId 1 - Load business Excel File: 1 record was read from File Finished in 00:00:0.004
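A sketch of the additive format proposed above, where the custom name is appended after "ToolId N" only when the tool was renamed. The format string is reverse-engineered from the example line in this post, not taken from Alteryx's actual logging code, and `format_log_line` is a hypothetical helper.

```python
def format_log_line(elapsed, tool_id, message, custom_name=None):
    """Keep 'ToolId N' and, when the tool was renamed, add the custom name."""
    label = f"ToolId {tool_id}"
    if custom_name:
        label += f" - {custom_name}"
    return f"{elapsed} - {label}: {message}"

renamed = format_log_line("00:00:0.003", 1,
                          "1 record was read from File Finished in 00:00:0.004",
                          "Load business Excel File")
default = format_log_line("00:00:0.003", 1,
                          "1 record was read from File Finished in 00:00:0.004")
```

Because the default "ToolId N" part is always kept, existing log parsers that key on it would keep working.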

Hi all,

In supporting our Alteryx users - we often have situations where users have had multiple different versions of Alteryx installed on their machines over time - and this leads to a situation where settings / configurations are carried forward from one install to another, and there doesn't appear to be an obvious way to force a full & clean reinstall and reset. This creates a problem when something like the Python settings are broken, since the reinstall does not fix this.

Along these lines, it would be very useful to have the ability to perform a full & clean uninstall, potentially in 2 phases:

- Initially - a script (e.g. PowerShell), available on the Community, which cleans out all files installed by Alteryx (any version), all registry entries, and any user settings

- Later - it would be valuable to build this into the uninstaller so that the user has the ability to uninstall and remove ALL traces of the software and user settings.

Many thanks

Sean




