Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by showing the dimensional constraints (required image sizes) next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

> and at least by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool says it requires RGB images).
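Until the tool does this itself, the two preparation steps above (balancing images per label and turning grayscale images such as MNIST into RGB) can be approximated before the data reaches the tool. Below is a minimal Python sketch, assuming images are already loaded as nested lists of pixel values and labels as strings. `balanced_split` and `gray_to_rgb` are hypothetical helpers, not part of Alteryx.

```python
import random
from collections import defaultdict


def balanced_split(samples, train_fraction=0.8, seed=0):
    """Split (item, label) pairs so every label contributes the same number of items.

    Each label is downsampled to the size of the rarest label, then divided
    into train and validation sets using the same fraction.
    """
    by_label = defaultdict(list)
    for item, label in samples:
        by_label[label].append(item)

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    per_label = min(len(items) for items in by_label.values())
    n_train = int(per_label * train_fraction)

    train, validation = [], []
    for label, items in sorted(by_label.items()):
        chosen = rng.sample(items, per_label)  # downsample without replacement
        train += [(item, label) for item in chosen[:n_train]]
        validation += [(item, label) for item in chosen[n_train:]]
    return train, validation


def gray_to_rgb(image):
    """Turn a 2-D grayscale image (rows of ints) into rows of (r, g, b) tuples."""
    return [[(p, p, p) for p in row] for row in image]
```

Downsampling to the rarest label is only one way to balance; oversampling the smaller labels would keep more data at the cost of repeated images.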

Question: will you, in the future, allow the user to choose between CPU and GPU usage?

In any case, thank you again for this new tool. There is certainly room for improvement, but it is very simple to use, and I sincerely think it will help many more people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


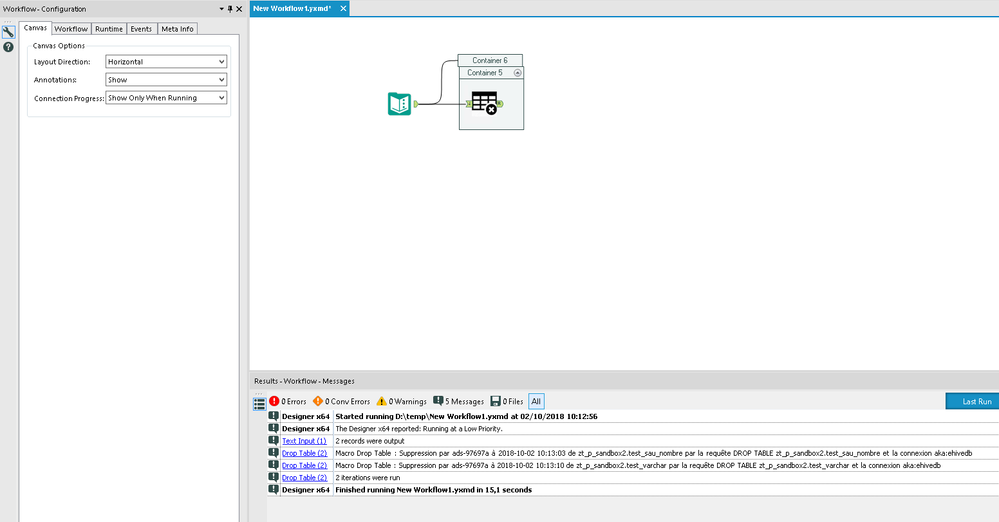

Today, the behaviour of batch macros can be strange.

If I refer to https://community.alteryx.com/t5/Alteryx-Designer-Discussions/Batch-Macro-not-looping-after-running-...

there can be big differences in behaviour between:

- a workflow and an app

- Designer and the Scheduler

Example here: a batch macro runs for all lines in Designer, but only for one line in the Scheduler.

I know the workaround (just use a message box), but it's not intuitive, and I think:

- at the very least, the behaviour should be the same in every use case

- if you want to do some optimization, fine, but make it an option!!

- TEXT TO COLUMN TOOL: Checkbox for “Output/No-Output” next to “OUTPUT ROOT NAME”

Most of the time I don't want or need the column that I parsed. Please provide a checkbox to choose whether the root column is output.

Currently, when you add an event to notify you of workflow failure or success, you have to enter the SMTP settings every time. It would be more efficient to set this up as a user setting that is used as the default across all canvases this user creates.

Hello All,

I'm using the Dynamic Input tool for SQL queries in my workflow (WF).

I'm using "Replace a Specific String" to replace elements in the SQL statement dynamically, depending on the results of previous tools, user input, etc.

So the statement looks like:

`select * from Schema_Name_xx where invoice_number = 'invoice_number_xx'`

Since Schema_Name_xx is not a valid schema in the database, the statement (and therefore validation) fails. It only works once Schema_Name_xx is replaced by, e.g., Invoice_Data_Current, and likewise once invoice_number_xx is replaced by, e.g., 4711.

Therefore, validation makes no sense and will never succeed: the correct schema is only inserted into the SQL statement by the "Replace a Specific String" function while the WF is running.
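To show why validating the template on its own cannot succeed, here is a minimal Python sketch of the run-time substitution described above. It is an illustration, not Alteryx's implementation, and `build_query` / `has_unresolved_placeholders` are hypothetical helpers. The template only becomes valid SQL once every placeholder has been replaced.

```python
TEMPLATE = "select * from Schema_Name_xx where invoice_number = 'invoice_number_xx'"


def build_query(template, replacements):
    """Mimic per-record string replacement: swap each placeholder for its value."""
    query = template
    for placeholder, value in replacements.items():
        query = query.replace(placeholder, value)
    return query


def has_unresolved_placeholders(query, placeholders):
    """True if any placeholder is still present, i.e. the SQL cannot be valid yet."""
    return any(p in query for p in placeholders)


# At save/validation time only TEMPLATE exists, so the schema name is bogus.
resolved = build_query(
    TEMPLATE, {"Schema_Name_xx": "Invoice_Data_Current", "invoice_number_xx": "4711"}
)
```

Here `resolved` becomes `select * from Invoice_Data_Current where invoice_number = '4711'`, while the unmodified `TEMPLATE` still references a schema that does not exist.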

It would be great to be able to disable validation in the user settings or anywhere else in Designer; changing a config file would also be fine :-)

Please note: I'm not thinking about changing or disabling anything in the Alteryx Server settings (I'm not allowed to anyway ;-)).

Reason:

1. Speed: validating a WF with SQL statements that don't work takes time (every time I save it); sometimes I even get a timeout...

2. WF error entries: each upload with a failed validation creates an entry in the WF results list, which makes it harder to separate them from the "real" WF errors...

Thanks & Best Regards,

Thomas

In order to debug a call to a REST API, it is often necessary to take the web call and paste it into a web browser. Could you add a RestAPI tool (a derivative of the Download tool) with a second output that provides the full web call that was made, including the fully parameterised URL? This would make it MUCH easier to debug REST API calls.
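As an illustration of what such a second output could contain, here is a minimal Python sketch that assembles the complete GET URL, query string included, from a base URL and its parameters. The endpoint and the helper name `full_request_url` are made up for the example; only `urllib.parse.urlencode` is a real standard-library call.

```python
from urllib.parse import urlencode


def full_request_url(base_url, params):
    """Return the complete GET URL, query string included, ready to paste into a browser."""
    if not params:
        return base_url
    # Append with "&" if the base URL already carries a query string.
    separator = "&" if "?" in base_url else "?"
    return base_url + separator + urlencode(params)


url = full_request_url("https://api.example.com/v1/items", {"q": "red shoes", "page": 2})
```

`urlencode` encodes spaces as `+` by default, so `url` here is `https://api.example.com/v1/items?q=red+shoes&page=2`.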

cc: @TashaA

Similar to this idea https://community.alteryx.com/t5/Alteryx-Designer-Ideas/Download-tool-Request-and-Response-details/i...

except that my preference would be to move REST API calls into a more specific tool and give it a second output for the responses.

When building Alteryx workflows, there may be a need to read different ranges from the same Excel spreadsheet. For example, bringing in a table from Sheet1, but also isolating a table name in a particular cell (in my example, cell C8).

When turning this into an analytic app with a File Browse tool, the standard approach is to add an Action tool with the default value of "Update Input Data Tool".

However, when specifying this option within the analytic app interface, you are only allowed to choose one of the following options:

i) Select a sheet

ii) Select a sheet and specify a range

iii) a named range or

iv) a list of sheet names.

The problem is that in the example above I need a sheet and a range, but I want to avoid adding two File Browse interface tools, as that shouldn't be needed. If the user selects (i), the reference to cell C8 is lost, and I imagine many users who are just getting started with apps don't realise this will happen.

There is, however, a way to solve this currently: it requires overriding the default behaviour and configuring the second Action tool (the one that updates the input for C8) to update the value with a formula, assuming the user selects a sheet name, and then using this formula:

``replace([#1],"$`","$C8:C8`")``
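The formula works because of how the selected sheet is written into the input path. Below is a minimal Python sketch of the same string rewrite, assuming (not confirmed in the post) that the File Browse value looks like `C:\Data\Report.xlsx|||`Sheet1$``. The helper `add_range_to_sheet` is hypothetical; passing the range as a parameter is one way to avoid hard-coding C8 in two places.

```python
def add_range_to_sheet(path, cell_range):
    """Limit the selected sheet in an (assumed) Alteryx Excel path to a cell range.

    Mirrors the Action tool formula replace([#1],"$`","$C8:C8`"):
    the trailing "$`" after the sheet name becomes "$<range>`".
    """
    return path.replace("$`", "$" + cell_range + "`")


selected = r"C:\Data\Report.xlsx|||`Sheet1$`"  # assumed File Browse output format
header_cell = add_range_to_sheet(selected, "C8:C8")
```

With the assumed input, `header_cell` becomes `C:\Data\Report.xlsx|||`Sheet1$C8:C8``; moving the header to D8 would only mean changing the `cell_range` argument.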

However, I would argue that this creates a lot of technical debt; plus, if the user needs to move the header, for example to D8, they need to change both the Input Data tool and the Action tool so that it works as both a workflow and an analytic app.

Solution

Just as the input file's configuration options are maintained (such as which row to start reading data from, or whether the first row contains data), modify the behaviour of the default option in the Action tool so that it also maintains references to ranges.

When using the *Unknown field in a Select tool, you can either select or deselect the fields that will appear afterwards.

I would love to have an option (or separate entries) to specify which fields should appear, for instance:

- *Unknown Text, where you could set the metadata type (for instance V_WString) and maximum length

- *Unknown Numeric, where you could set the type, Double or Fixed Decimal

and for dates too.

This would set a default behaviour for incoming text or numeric fields, allowing for more precise deselection too.

It would be great to have the following functionality in Alteryx.

Once a workflow is built in Alteryx, a button click could generate SQL code that can be run on a specific database platform such as SQL Server, for example in an external editor such as SQL Server Management Studio. Thanks.

When we edit a Formula tool, only the first expression is expanded. I would prefer all expressions to be expanded by default. When I want to collapse them, I would like an 'expand all' icon like the attached snapshot. This icon would toggle in the same way as each expression's expand icon ('expand all' <-> 'shrink all').

I would like to propose three feature enhancements for the Cross Tab tool under the Transform tool category.

1. Bringing Concat Unique functionality, which is an idea that is currently in Coming Soon status.

2. Adding Start and End in addition to Separator, similar to the Concatenate Properties found in the Summarize tool.

3. Changing the default size from 2048 to 1073741823 (the max V_WString size). It is common, especially for new users, to ignore truncation errors and potentially miss important data that may need to be processed downstream.
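To make points 1 and 2 concrete, here is a minimal Python sketch of a Cross Tab style pivot whose cells are built with a "Concat Unique" method plus Start, End and Separator options. It is only an illustration of the proposed behaviour; the function names and field names are hypothetical.

```python
from collections import defaultdict


def concat_unique(values, separator=",", start="", end=""):
    """Join distinct values in first-seen order, wrapped in start/end markers."""
    unique = list(dict.fromkeys(values))  # dict keys keep insertion order
    return start + separator.join(unique) + end


def cross_tab_concat_unique(rows, group_field, header_field, value_field,
                            separator=",", start="", end=""):
    """Pivot rows to {group: {header: concatenated unique values}}."""
    cells = defaultdict(lambda: defaultdict(list))
    for row in rows:
        cells[row[group_field]][row[header_field]].append(row[value_field])
    return {
        group: {header: concat_unique(vals, separator, start, end)
                for header, vals in headers.items()}
        for group, headers in cells.items()
    }


rows = [
    {"Customer": "C1", "Month": "Jan", "Product": "A"},
    {"Customer": "C1", "Month": "Jan", "Product": "B"},
    {"Customer": "C1", "Month": "Jan", "Product": "A"},
    {"Customer": "C2", "Month": "Feb", "Product": "C"},
]
table = cross_tab_concat_unique(rows, "Customer", "Month", "Product", ", ", "[", "]")
```

Here the duplicate "A" for C1 in January is emitted once, giving `[A, B]`.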

Hi all,

The Publish to Tableau Server tool is great, but it requires a username and password. If you are using AD, there is a chance that your users don't have a password. In that case, you probably have a technical user that you share across the team. This is not an ideal situation, and you lose governance over the data.

Fortunately, there is an easy workaround: you can use personal access token authentication: https://help.tableau.com/v2019.4/server/en-us/security_personal_access_tokens.htm

The advantage of this method is that it logs in as your user, so your data source is uploaded under your name. It still uses the Tableau REST API, so the changes needed in the current macro are MINOR.

Changes needed in the current macro:

1- Add an authentication method parameter with the choices: Username/Password; Personal Token

2- If Personal Token is selected, add two parameters: Token_Name and Token_Value

3 - In the TableauServer.Login supporting macro, improve formula (13) to change the payload based on the user's selection. If Username/Password is selected, keep it as is; otherwise, use the syntax here: https://help.tableau.com/current/api/rest_api/en-us/REST/rest_api_concepts_auth.htm#make-a-sign-in-r...
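Step 3 amounts to choosing between two credential shapes in the sign-in request body (sent to the REST API's `auth/signin` endpoint). Below is a minimal Python sketch of that branching using the JSON form of the request; the existing macro's formula may build the XML form instead, which uses the same attribute names. `sign_in_payload` is a hypothetical helper, and the site and token values are placeholders.

```python
import json


def sign_in_payload(site_content_url, username=None, password=None,
                    token_name=None, token_value=None):
    """Build the JSON body for a Tableau REST API sign-in request.

    Personal access token credentials use personalAccessTokenName /
    personalAccessTokenSecret instead of name / password.
    """
    if token_name and token_value:
        credentials = {
            "personalAccessTokenName": token_name,
            "personalAccessTokenSecret": token_value,
        }
    elif username and password:
        credentials = {"name": username, "password": password}
    else:
        raise ValueError("Provide either username/password or token name/value")
    credentials["site"] = {"contentUrl": site_content_url}
    return json.dumps({"credentials": credentials})


body = sign_in_payload("mysite", token_name="alteryx-publish", token_value="<secret>")
```

Keeping the username/password branch unchanged matches the request that existing configurations keep working as they do today.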

This is quite a straightforward change, but it could help a lot of companies using Alteryx.

Could you please implement these changes to strengthen this tool?

Thanks a lot,

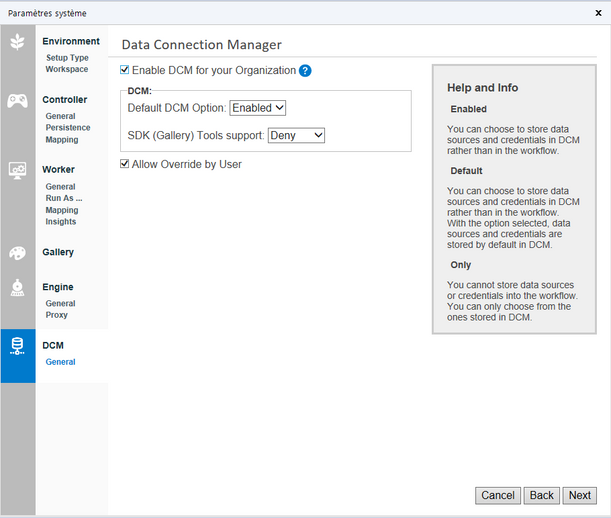

Hello all,

I just love the DCM feature. However, you have to enable it manually in System Settings after each install or update.

I don't think there is a good reason for that, and enabling it by default would save time.

Best regards,

Simon

Under the Runtime setting, there is an existing option to "Disable All Tools that Write Output". This is incredibly useful when developing workflows when you don't want to overwrite existing files.

But this option doesn't disable all outputs, like Publishing to Tableau!

I suggest adding the option to disable ALL kinds of outputs, uploads, and publishing (except possibly logging and caching).

A "Filter" that works like a "Formula" tool, where you can add multiple criteria in one place, and each criterion gets its own output anchor. I use Alteryx to manage master data from several factories, each needing a separate workstream. Stacking Filter tools works, but it would be much cleaner to manage this within a single tool.
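The behaviour being asked for can be sketched in a few lines of Python: an ordered list of named criteria, one output per criterion, plus a catch-all for records that match nothing. This is a hypothetical illustration (`route_records` and the factory names are made up). It uses first-match semantics, which is what stacked Filter tools give you, since each record only reaches the next Filter through the previous one's False anchor.

```python
def route_records(records, criteria):
    """Send each record to the first criterion it matches; the rest go to 'otherwise'.

    criteria: ordered list of (output_name, predicate) pairs, like stacked Filter tools.
    """
    outputs = {name: [] for name, _ in criteria}
    outputs["otherwise"] = []
    for record in records:
        for name, predicate in criteria:
            if predicate(record):
                outputs[name].append(record)
                break
        else:  # no criterion matched
            outputs["otherwise"].append(record)
    return outputs


records = [{"factory": "Lyon"}, {"factory": "Lille"}, {"factory": "Metz"}]
outputs = route_records(records, [
    ("lyon", lambda r: r["factory"] == "Lyon"),
    ("lille", lambda r: r["factory"] == "Lille"),
])
```

A tool could alternatively send a record to every output it matches; that is a design choice the single-tool version would need to expose.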

Hi to all,

I have seen one or two posts requesting the ability to total up rows and/or columns of numbers; however, this idea also requests the ability to subtotal data by a field and also produce an overall total.

This could be an extension to existing tools such as 'Summarize' and 'Cross Tab', or it could be a standalone tool. The desired output of a tool like this would look something like this:

This would be incredibly useful for building reports within Alteryx as well as for analysing data, and it would cut down the number of tools currently required to produce this. I have seen a third-party tool that does some of this, but this idea adds the ability to subtotal.

thanks - Roger

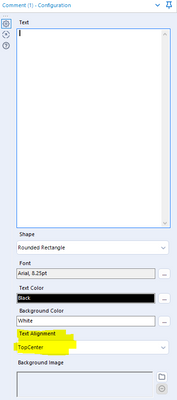
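Since the desired output is only shown as an image, here is a minimal Python sketch of the same idea: detail rows ordered by group, a subtotal row after each group, and a grand total at the end. `with_subtotals` and the field names are hypothetical; the labels used for subtotal rows are an arbitrary choice.

```python
from collections import defaultdict


def with_subtotals(rows, group_field, value_field):
    """Return rows ordered by group, with a subtotal after each group and a grand total last."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[group_field]].append(row)

    result = []
    grand_total = 0
    for key in sorted(groups):
        subtotal = 0
        for row in groups[key]:
            result.append(row)
            subtotal += row[value_field]
        result.append({group_field: f"{key} Subtotal", value_field: subtotal})
        grand_total += subtotal
    result.append({group_field: "Grand Total", value_field: grand_total})
    return result


rows = [
    {"Region": "North", "Sales": 10},
    {"Region": "South", "Sales": 5},
    {"Region": "North", "Sales": 7},
]
report = with_subtotals(rows, "Region", "Sales")
```

In Designer today this usually takes a Summarize for the subtotals, another for the grand total, and a Union plus Sort to interleave them, which is the tool count the idea wants to reduce.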

Can we set our own default preferences for the Comment tool? For me, I want to set the text alignment to "Center" by default; currently it's always "TopCenter" when I open it.

Experts -

During development it would be helpful to be able to do the following in both Formula and Filter tools (and perhaps any other tool that uses custom code):

1) Highlight a line or block of code

2) Right click

3) Comment/Uncomment

This would be easier than manually typing or deleting "//" at the start of every line.

Thanks in advance!

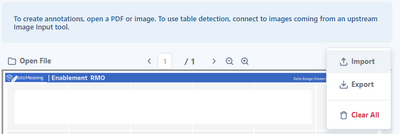
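The requested Comment/Uncomment action is essentially a toggle over the selected lines. Below is a minimal Python sketch of one common convention (comment everything, unless every non-blank line is already commented, in which case uncomment). `toggle_comment` is a hypothetical helper, and the formula lines are just sample text.

```python
def toggle_comment(lines, marker="//"):
    """Comment every selected line, or uncomment them all if each is already commented.

    Blank lines are left untouched and indentation is preserved.
    """
    code_lines = [line for line in lines if line.strip()]
    all_commented = bool(code_lines) and all(
        line.lstrip().startswith(marker) for line in code_lines
    )
    result = []
    for line in lines:
        if not line.strip():
            result.append(line)
            continue
        indent = line[: len(line) - len(line.lstrip())]
        body = line.lstrip()
        if all_commented:
            body = body[len(marker):]
            if body.startswith(" "):  # drop the space added when commenting
                body = body[1:]
        else:
            body = marker + " " + body
        result.append(indent + body)
    return result


selection = ['IF [Sales] > 100', '  THEN "High"', 'ENDIF']
commented = toggle_comment(selection)
```

Here `commented` is `['// IF [Sales] > 100', '  // THEN "High"', '// ENDIF']`, and running the toggle again restores the original lines.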

Hi, I was using the Image Template tool and I noticed that the icons for import and export are switched.

It would be useful to have the WorkflowName captured as one of the default Engine constants. The WorkflowDirectory is included so why not the WorkflowName as well?

I often use configuration files to pass values into workflows, which means the workflow name needs to be entered manually into the workflow, either as a Text Input or a User Constant. This feels like an unnecessary step, as Alteryx must know the name of the workflow once it has been saved.

Hi there,

We often get the following error message from the Download tool:

"00:00:23.555 - Error - ToolId 106: Error in libCURL: You have found a bug. Replicate, then let us know. We shall fix it soon."

Unfortunately, this seems to be a transient error, so we haven't been able to replicate it in a useful, repeatable way. However, we see it happen at least a few times per week on one of our servers, so this is an ongoing issue.

Could you please provide more detailed error messages in the Download tool so that this error can be debugged and/or replicated?

Many thanks

Sean




