Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by showing the dimensional (image size) constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

> and finally, by allowing the tool to use black-and-white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
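The balanced split asked for in the second point could look roughly like the Python sketch below. It is an illustration only: it assumes the training set is a list of `(image_path, label)` pairs and that "an equivalent number of images" means downsampling every label to the size of the smallest one. `balanced_split` is a hypothetical helper, not part of the Image Recognition tool.

```python
import random
from collections import defaultdict


def balanced_split(items, val_fraction=0.2, seed=0):
    """Hypothetical helper: split (image_path, label) pairs so that every label
    contributes the same number of images to training and to validation.

    Assumption: labels are balanced by downsampling each one to the size of
    the smallest label.
    """
    by_label = defaultdict(list)
    for path, label in items:
        by_label[label].append(path)

    per_label = min(len(paths) for paths in by_label.values())
    n_val = round(per_label * val_fraction)  # validation images per label
    rng = random.Random(seed)  # seeded so the split is reproducible

    train, val = [], []
    for label in sorted(by_label):
        chosen = rng.sample(by_label[label], per_label)
        val.extend((path, label) for path in chosen[:n_val])
        train.extend((path, label) for path in chosen[n_val:])
    return train, val
```

With 10 "cat" images and 6 "dog" images and `val_fraction=0.5`, each label ends up with 3 training and 3 validation images. Downsampling throws images away; oversampling the smaller labels would be the other common choice.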

Question: will you, in the future, allow the user to choose between CPU and GPU usage?

In any case, thank you again for this new tool. It certainly has room for improvement, but it is very simple to use, and I sincerely think it will help a greater number of people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


I like how the Multi-Row and Multi-Field tools have a variables tab that helps the user learn the proper syntax for building formulas, such as Row-1, Row+0, and Row+1. That said, it would be nice if the user could edit the expression manually to point to other rows. You don't necessarily need all of these variables listed, but if the user could write [Row-2:Fieldname], the formula could look two or more rows up or down. I have a report where I want to divide the value one row up by the value two rows up. Right now I have to use a workaround, whereas this would make it much easier.

Additionally, it would be nice if the Multi-Field tool could accept Multi-Row functions mixed with the Current Field function, such as [Row-1:_CurrentField_].

I understand these are two requests, but the ask is similar: simply that the syntax be understood in both tools and be more versatile.
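To make the requested [Row-2:Fieldname] behaviour concrete, here is a small Python sketch of "divide the value one row up by the value two rows up" over a single field. It only illustrates the row arithmetic; the helper names are hypothetical, and treating rows that don't exist (and division by zero) as Null (`None`) is an assumption.

```python
def row_value(values, i, offset):
    """Value `offset` rows away from row i, or None when that row doesn't exist."""
    j = i + offset
    return values[j] if 0 <= j < len(values) else None


def one_up_over_two_up(values):
    """For each row, divide the value one row up ([Row-1]) by the value two
    rows up ([Row-2]); rows missing either neighbour, or dividing by zero, get None."""
    result = []
    for i in range(len(values)):
        one_up = row_value(values, i, -1)
        two_up = row_value(values, i, -2)
        if one_up is None or two_up in (None, 0):
            result.append(None)
        else:
            result.append(one_up / two_up)
    return result
```

For example, `one_up_over_two_up([10, 20, 40, 80])` returns `[None, None, 2.0, 2.0]`: the first two rows have no row two above them.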

I am always checking views (Browse tools) while editing other tools. When I move on to edit another tool, the view disappears because the selection changes. It would help to have a feature that lets us keep multiple views (results) open as a reference and compare them side by side.

I use the Cleanse tool a lot to remove extra spaces before and after my data. Since most of my work is done In-DB, I have to Data Stream Out, cleanse, and then Data Stream In again. I would love to see an In-DB Cleanse tool added.
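One way an In-DB Cleanse could work is by pushing standard SQL `TRIM` into the generated query, so the data never leaves the database. The Python sketch below builds such a `SELECT`; `trimmed_select` is a hypothetical helper, and it assumes ANSI-style double-quoted identifiers and a trusted, already-valid table reference. Standard `TRIM` removes leading and trailing spaces only; tabs or line breaks would need database-specific handling.

```python
def trimmed_select(table, columns, text_columns):
    """Hypothetical helper: build a SELECT that trims leading and trailing
    spaces from text columns inside the database.

    Assumptions: ANSI-style double-quoted identifiers, and `table` is an
    already-valid, trusted table reference (it is not escaped here).
    """
    def quote(name):
        # Escape embedded double quotes by doubling them (ANSI SQL rule).
        return '"' + name.replace('"', '""') + '"'

    select_list = []
    for col in columns:
        if col in text_columns:
            select_list.append(f"TRIM({quote(col)}) AS {quote(col)}")
        else:
            select_list.append(quote(col))
    return f"SELECT {', '.join(select_list)} FROM {table}"
```

For example, `trimmed_select("customers", ["id", "name"], {"name"})` produces `SELECT "id", TRIM("name") AS "name" FROM customers`.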

Background:

Teradata is a high-performance database system. It is highly sensitive to indexes and to how records are balanced across index segments.

Teradata uses spool space to perform queries on the database. It is also very sensitive to the type of table (volatile, temporary, permanent) that is created.

Issue:

Alteryx "In-Database" nodes cannot be configured to meet Teradata's needs.

As a result, when executing workflows with "In-Database" nodes, there is a high probability of "out of spool" errors, especially when working on medium-sized databases (between 250 million and 1 billion records).

Impact:

This calls into question Alteryx's ability to handle medium to large databases.

KNIME and SSIS are currently preferred over Alteryx.

Action Requested

Please change the configuration of the "In-Database" nodes to allow fine-tuning of their behaviour.

Hi

Support was added in 2018.4 for conditional update and deletion of rows in a SQL Server table (ODBC) based on incoming records; however, Azure SQL Server is not supported.

Surely support for Azure should not be difficult to add given other SQL Server implementations are now supported?

See original idea here:

And 2018.4 release here:

https://help.alteryx.com/ReleaseNotes/Designer/Designer_2018.4.htm

Thanks

14 is a bit large to have as the default text size in the Report Text Tool. Can we make it a "normal" size like 11 or 12? I am always forgetting to change it when setting up automated emails, and have to go back and edit it.

This could either be a setting, or we could simply change the default to a normal size.

Text in this post is set to 14 (size 4 looked close) for effect.

Thanks!

Super simple request: Add Exasol to the list of databases that Alteryx can run in-database queries against.

We are using Exasol to store and query large volumes of data (1bn+ rows). This works great from Tableau because not very much data has to leave the database. But when we want to process that data in Alteryx we either have to pre-aggregate the data in Exasol, strip out loads of columns or sit back for hours while Alteryx sucks down the data. The first solution requires somebody to write SQL code in the database, but we are trying to avoid that (that's why we like Alteryx). The second and third are not always viable options.

Given that Exasol is partnered with Tableau, and Alteryx is partnered with Tableau, it feels like there will be lots of Alteryx customers looking for this functionality.

There are some minor tools like Twitter Search (http://www.alteryx.com/resources/blending-social-media-data-without-it) and Grazitti's Facebook Page crawler...

But we need tools for accessing and blending more of the existing semi-structured social data:

- no YouTube connector to search across videos and track impressions yet...

- no LinkedIn connector to crawl public and full profiles (with permission) to do HR analytics yet...

- no Instagram connector yet, to crawl marketing data and do trends and competition search

- no Flickr connector to grab pictures to do Image search and recognition...

Top social media sites (updated February 1, 2017):

| Rank | Site | Estimated unique monthly visitors |
|---|---|---|
| 1 | Facebook | 1,100,000,000 |
| 2 | YouTube | 1,000,000,000 |
| 3 | Twitter | 310,000,000 |
| 4 | LinkedIn | 255,000,000 |
| 5 | Pinterest | 250,000,000 |
| 6 | Google Plus+ | 120,000,000 |
| 7 | Tumblr | 110,000,000 |
| 8 | Instagram | 100,000,000 |
| 9 | Reddit | 85,000,000 |
| 10 | VK | 80,000,000 |
| 11 | Flickr | 65,000,000 |
| 12 | Vine | 42,000,000 |
| 13 | Meetup | 40,000,000 |
| 14 | Ask.fm | 37,000,000 |
| 15 | ClassMates | 15,000,000 |

Currently, when choosing the "<List Of Sheet Names>" option for Excel files, Alteryx always implicitly sorts the sheets when outputting the list. For some of the Excel files I need to read, the order of the sheets is important, but Alteryx always sorts them, so I cannot tell what the original order was. And yes, this is one of those fun situations where I don't control the Excel file.

When this option is selected, it would be nice to either preserve the order of the sheets or include an additional column that numbers each sheet's original position.
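The original order is recoverable from the file itself: in an .xlsx workbook, the `<sheet>` entries in the workbook part are listed in tab order. The Python sketch below reads them with the standard library only and returns each name with its position, which is the "additional column" being asked for. It assumes the conventional part name `xl/workbook.xml` and handles .xlsx only, not legacy .xls; `sheet_names_in_order` is a hypothetical helper, not an Alteryx feature.

```python
import zipfile
import xml.etree.ElementTree as ET

# Namespace of the SpreadsheetML elements inside xl/workbook.xml
NS = {"m": "http://schemas.openxmlformats.org/spreadsheetml/2006/main"}


def sheet_names_in_order(xlsx):
    """Return [(position, sheet_name), ...] in the workbook's tab order.

    `xlsx` can be a path or a binary file-like object.
    Assumption: the workbook part lives at the conventional xl/workbook.xml.
    """
    with zipfile.ZipFile(xlsx) as archive:
        root = ET.fromstring(archive.read("xl/workbook.xml"))
    sheets = root.findall("m:sheets/m:sheet", NS)
    return [(position, sheet.get("name")) for position, sheet in enumerate(sheets, start=1)]
```

The position comes from the order of the elements, not from `sheetId`, which does not necessarily follow tab order.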

Many legacy mainframe applications store certain data encoded in EBCDIC in DB2 tables (variable-length columns that can hold anywhere from zero to hundreds of iterations within a single EBCDIC-encoded, compressed value). When this data is downloaded to a platform like Hadoop that doesn't understand EBCDIC, it appears as junk characters.

I solved this issue in my project with an approach designed and implemented as a PySpark script (separate logic is needed for the COMP, COMP-3, and X (alphanumeric) data types). Having this functionality in a tool could help many organizations that use data from mainframe applications.
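For readers unfamiliar with the three data types mentioned, the Python sketch below decodes a single field of each kind. It is not the author's PySpark approach and does not handle the variable-length repeating groups or compression described above. Assumptions: text uses EBCDIC code page 037 (US/Canada, Python's `cp037` codec), COMP fields are big-endian two's-complement integers, COMP-3 uses the common C/D/F sign nibbles, and `scale` is the number of implied decimal places from the copybook.

```python
from decimal import Decimal


def decode_text(raw: bytes, codepage: str = "cp037") -> str:
    """PIC X (alphanumeric): EBCDIC bytes to str. Code page 037 is an assumption."""
    return raw.decode(codepage)


def decode_comp(raw: bytes, scale: int = 0) -> Decimal:
    """COMP (binary): big-endian two's-complement integer with `scale` implied decimals."""
    return Decimal(int.from_bytes(raw, "big", signed=True)).scaleb(-scale)


def decode_comp3(raw: bytes, scale: int = 0) -> Decimal:
    """COMP-3 (packed decimal): two digits per byte, sign in the last nibble."""
    nibbles = []
    for byte in raw:
        nibbles.extend((byte >> 4, byte & 0x0F))
    *digits, sign = nibbles
    if any(d > 9 for d in digits):
        raise ValueError(f"invalid packed-decimal digit in {raw.hex()}")
    value = int("".join(str(d) for d in digits))
    if sign == 0x0D:  # D = negative
        value = -value
    elif sign not in (0x0C, 0x0F):  # C = positive, F = unsigned
        raise ValueError(f"invalid packed-decimal sign nibble {sign:X}")
    return Decimal(value).scaleb(-scale)
```

For example, the packed bytes `12 34 5C` with two implied decimals decode to `123.45`, and `C8 C5 D3 D3 D6` in code page 037 decodes to `"HELLO"`.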

My employer just started with Alteryx, so I'm sure that after a few months this will seem very basic, but I'm also sure I'm not the only person who has made this mistake, so here goes...

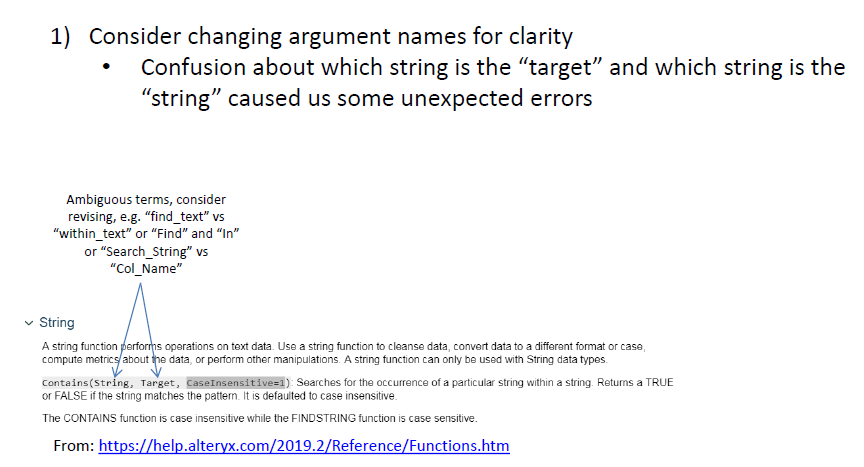

I was running a larger workflow that ends by outputting a table with about 80 columns. Everything was working fine except for one column, which kept coming up as all 0s. It took me a while to find, but it all traced back to a mistake I made with a "Contains" function in a Formula tool.

The Alteryx "Contains" function takes two arguments, 'string' and 'target'. I interpreted this as 'find this string in this target variable', and entered two arguments: the string of characters I was looking for first, and then the name of the column I wanted to search in second.

I think I had this backwards. You need to enter the column name first, then the substring you want to look for second. So the column name is the 'string' and the search term is the 'target'. Getting these reversed did not produce any errors or warnings, which I understand (e.g. you might want to do a pairwise search for the text of one column within the text of another).
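The silent failure is easy to reproduce outside Designer. The Python stand-in below mirrors the argument order described above (`contains(string, target)` is True when `target` occurs inside `string`); it is a hypothetical analogue, not Alteryx's implementation, and it ignores case sensitivity. With the arguments swapped, every row asks whether the whole column value occurs inside the search term, which is almost never true, so the output column becomes all 0s without any error.

```python
def contains(string: str, target: str) -> bool:
    """Hypothetical stand-in: True when `target` (the search term)
    occurs inside `string` (the column value)."""
    return target in string


rows = ["Order shipped late", "On time", "late delivery"]

# Column value first, search term second: the intended check.
correct = [int(contains(value, "late")) for value in rows]

# Arguments swapped: asks whether each whole value occurs inside "late".
swapped = [int(contains("late", value)) for value in rows]

print(correct)  # [1, 0, 1]
print(swapped)  # [0, 0, 0]
```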

I think the terms 'string' and 'target' are kind of vague labels in this case (in Excel, for example, the analogous "Search" function takes the arguments 'find_text' and 'within_text', which is a lot harder to misinterpret). So I would suggest changing the argument names in Designer and the online documentation, or at least adding text to the Alteryx Documentation (https://help.alteryx.com/2019.2/Reference/Functions.htm) that clarifies which is which.

Normally, I'd say this is a pretty minor thing, but since this is a potential 'silent error', I'd argue it's worthwhile.

(Also, the online documentation seems to imply there is a "CaseInsensitive" argument you can pass to "Contains"; however, I don't think there actually is one. You may want to edit that for clarity.)

Thanks!

I suggest an additional tool that would allow adding columns to the data, if and only if they do not exist already.

Currently, working with data that has a dynamic set of columns can be a bit tiresome, as the Select tool does not let you select columns that have not yet appeared in the data.

Ensuring that certain columns are available downstream can currently be achieved with:

- 'Append Fields' tool with a 'Text Input' tool which will always append the fields, renaming them on the fly if needed

- 'Union' tool with a 'Text Input' tool

Neither option seems straightforward, and I expect both have a performance impact.

A separate tool for this seems the more user-friendly and performance-oriented approach.
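The behaviour of the proposed tool is simple to state: add each required column only if it is missing, and leave existing columns and their values alone. The Python sketch below shows that logic on records represented as dictionaries; `ensure_columns` is a hypothetical name, and a real Designer tool would also need a type and size for each added field, which this sketch does not model.

```python
def ensure_columns(records, required, default=None):
    """Hypothetical 'add column if missing' step: every record gets each column
    in `required`; columns that already exist keep their values and position."""
    result = []
    for record in records:
        row = dict(record)  # copy so the input records are left untouched
        for column in required:
            row.setdefault(column, default)  # only adds when the key is absent
        result.append(row)
    return result
```

For example, `ensure_columns([{"Region": "North", "Sales": 10}], ["Sales", "Returns"])` returns `[{"Region": "North", "Sales": 10, "Returns": None}]`: `Sales` is untouched and only `Returns` is added.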

Transferring records from the Python SDK's RecordRef to the Alteryx Engine seems to be slow when sending large amounts of data (e.g. discussion here). Although the exact specifics are unclear, there seems to be a copy-and-convert process in play.

Apache Arrow appears to address this issue, and its roadmap and specs are impressive! It seems (again, I have no understanding of the Alteryx Engine specifics) that something like this would be excellent for expanding SDK use cases, as well as for other connectors such as the Apache Spark connector.

And it looks like it'd be fun to build into Alteryx! 🙂

The "Delete and connect around" option in the right-click menu is really nice, since I don't have to go find the connector and plug it back in. I would ask for essentially the same option when cutting a tool, so that the data path stays connected when the tool is cut. It would also be nice to be able to right-click (or left-click and press Ctrl+V) on a data path and paste the tool directly into it, instead of having to paste it and then reconnect everything. Lastly, I often find myself pulling out the wrong tool or wanting to replace one tool with another. It would be nice to have the option of dropping a tool directly onto another to replace it, again connecting it directly into the data path.
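The three requested actions (cut and connect around, paste onto a connection, drop one tool onto another to replace it) are small graph edits. The Python sketch below shows them under a simplifying assumption: a workflow is a set of `(from_tool, to_tool)` pairs, ignoring named anchors on tools with multiple inputs or outputs, so reconnecting every upstream tool to every downstream tool is a simplification. All function names are hypothetical.

```python
def cut_and_connect_around(connections, tool):
    """Remove `tool` and wire each of its upstream tools to each downstream tool."""
    upstream = {src for src, dst in connections if dst == tool}
    downstream = {dst for src, dst in connections if src == tool}
    kept = {(src, dst) for src, dst in connections if tool not in (src, dst)}
    return kept | {(src, dst) for src in upstream for dst in downstream}


def paste_onto_connection(connections, connection, tool):
    """Split an existing connection (src, dst) into src -> tool -> dst."""
    if connection not in connections:
        raise ValueError(f"no such connection: {connection}")
    src, dst = connection
    return (connections - {connection}) | {(src, tool), (tool, dst)}


def replace_tool(connections, old, new):
    """Drop `new` onto `old`: every connection to or from `old` now uses `new`."""
    def swap(name):
        return new if name == old else name

    return {(swap(src), swap(dst)) for src, dst in connections}
```

For example, cutting "Filter" out of Input → Filter → Output leaves Input → Output, and pasting "Sort" onto that connection gives Input → Sort → Output.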

Often in larger workflows, I copy data from partway down the stream into a new workflow to troubleshoot a small section, so that I don't have to rerun the whole workflow over and over, which can take a while. I'm aware of (and thankful for) caching, but sometimes, if there are many parallel streams, I'd rather just copy the data from the data preview built into the tool so I don't have to take the time to run the workflow again. I'm also aware I could output a .yxdb file and use that, but again that takes longer than I would like.

The issue I run into is that if I copy the data and paste it into a Text Input tool, all the field types change to their defaults. This is fine with new data, but for data that has specific field types throughout the workflow, it can be a hassle. It would be great if copying data also copied each field's type and size.

I would LOVE the quick win of selecting some cells in the Browse tool's results pane and having a label somewhere show me the sum, average, count, distinct count, etc. I hate to use the line, but... “a bit like Excel does”. Excel even lets the user choose which metrics to show in the label by right-clicking. 🙂

This improvement would save me from having to export to Excel, check that the numbers add up by selecting them, and jump back, or, even worse, get my calculator out and punch the numbers in.
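The label being asked for boils down to a handful of aggregates over whatever cells are selected. The Python sketch below computes them for a list of selected cell values; `selection_summary` is a hypothetical helper, and the choice that numbers feed sum and average while every non-empty cell counts toward count and distinct count is an assumption made in the spirit of a spreadsheet status bar.

```python
def selection_summary(cells):
    """Status-bar style summary of selected cells. Numeric cells feed sum and
    average; every non-empty cell counts toward count and distinct count."""
    non_empty = [c for c in cells if c is not None and c != ""]
    numbers = [c for c in non_empty if isinstance(c, (int, float)) and not isinstance(c, bool)]
    return {
        "count": len(non_empty),
        "numeric_count": len(numbers),
        "sum": sum(numbers),
        "average": sum(numbers) / len(numbers) if numbers else None,
        "distinct_count": len(set(non_empty)),
    }
```

Selecting `10, 20, 20, (Null), "", "East"` gives a count of 4, a numeric count of 3, a sum of 50, an average of about 16.67, and a distinct count of 3.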

Jay

Can there be a User or System setting for Designer Desktop to save the default SMTP server to be used for all email functionality?

I recently upgraded from version 2018.1 to 2019.1 and, with that, lost the "Auto detect SMTP server" option in the Email tool.

The existing workflows still seem to work. However, the Email tool errors out when I make any enhancements to those existing workflows. Having the Email tool use a default SMTP server preconfigured by the user in the User/System settings would be extremely helpful.

I spend too much time repositioning tools by hand to minimize space between tools (especially when trying to parse messy XML documents). It would be great to have a feature that automatically reduces the space between the set of selected tools, such as "Distribute Horizontally and Compact". This would help free up canvas space.
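A "Distribute Horizontally and Compact" action could keep the left-to-right order and the leftmost tool's position, then pack every selected tool with a fixed gap. The Python sketch below shows that layout step; `ToolPosition`, `compact_horizontally`, and the default `gap` of 20 canvas units are hypothetical, and only the x axis is handled.

```python
from dataclasses import dataclass


@dataclass
class ToolPosition:
    name: str
    x: float  # left edge on the canvas
    width: float


def compact_horizontally(tools, gap=20.0):
    """Keep the left-to-right order and the leftmost position, but close every
    horizontal gap between the selected tools down to `gap`."""
    ordered = sorted(tools, key=lambda t: t.x)
    if not ordered:
        return []
    cursor = ordered[0].x
    compacted = []
    for tool in ordered:
        compacted.append(ToolPosition(tool.name, cursor, tool.width))
        cursor += tool.width + gap
    return compacted
```

For three 60-unit-wide tools at x = 0, 150, and 300, the result places them at 0, 80, and 160.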

Dynamic Input is a fantastic tool when it works. Today I tried to use it to bring in 200 Excel files. The files were all of the same report and they all have the same fields. Still, I got back many errors saying that certain files have "a different schema than the 1st file." I got this error because in some of my files, a whole column was filled with null data. So instead of seeing these columns as V_Strings, Alteryx interpreted these blank columns as having a Double datatype.

It would be nice if Alteryx could detect this case and simply cast the empty column as a V_String to match the previous files. Maybe make it an option and have Alteryx give a warning when it has to do this.

An even simpler option would be to add the ability to bring in all columns as strings.

Instead, the current solution (without relying on outside macros) is to tick the checkbox in the Dynamic Input tool that says "First Row Contains Data." This then puts all of the field titles into the data 200 times. This makes it work because all of the columns are now interpreted as strings. Then a Dynamic Rename is used to bring the first row up to rename the columns. A Filter is used to remove the other 199 rows that just contained copies of the field names. Then it's time to clean up all of the fields' datatypes. (And this workaround assumes that all of the field names contain at least one non-numeric character. Otherwise the field gets read as Double and you're back at square one.)
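The check proposed above can be expressed as a small schema-reconciliation rule: a type mismatch against the first file is tolerated, with a warning, only when the mismatching field is entirely Null in the later file. The Python sketch below is a hypothetical illustration of that rule, not Alteryx's behaviour; schemas are plain `{field_name: type_name}` dictionaries using the type names from the post.

```python
import warnings


def reconcile_schema(reference, candidate, all_null_columns):
    """Align one file's inferred field types with the first file's.

    reference / candidate: {field_name: type_name}, e.g. {"Amount": "Double"}.
    all_null_columns: fields that are entirely Null in the candidate file.
    A type mismatch is tolerated (with a warning) only for all-Null fields.
    """
    if set(candidate) != set(reference):
        raise ValueError(f"different field names: {sorted(set(candidate) ^ set(reference))}")
    aligned = {}
    for name, ref_type in reference.items():
        cand_type = candidate[name]
        if cand_type != ref_type and name not in all_null_columns:
            raise ValueError(f"{name}: {cand_type} does not match {ref_type}")
        if cand_type != ref_type:
            warnings.warn(f"{name} is entirely Null; casting {cand_type} to {ref_type}")
        aligned[name] = ref_type
    return aligned
```

A file whose empty "Comment" column was read as Double would be aligned to V_String with one warning, while a genuine mismatch in a populated column still raises an error.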
