Alteryx Designer Desktop Ideas


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> at least, allow the tool to use black & white images (I wanted to test it on the MNIST, but the tool tells me that it necessarily needs RGB images) ?

Question: will you, in the future, allow the user to choose between CPU and GPU usage?

In any case, thank you again for this new tool, it is certainly perfectible, but very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible thanks to image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


The more Python and R development I do, the more I want to use the shortcut [CTRL] + [ENTER] to run my workflow.

Is it possible to add this as a second way to run the workflow?

I'm thinking it's going to need a new shortcut anyway with cloud, as [CTRL] + [R] would refresh the page! :D

Asking for a friend :D

We aren't getting a huge amount of help from support on this, so I'm posting this idea to raise awareness for the product teams responsible for the Salesforce connectors and the embedded Python environment.

This post from user Dubya describes the issue in detail:

I have a workflow with several salesforce tools in it, which works fine on my machine. But we need another alteryx user in our office to be able to access, run and maintain the workflow too, via their machine and copy of alteryx designer.

However we're finding that the salesforce inputs and outputs can only be authenticated on one machine at a time.

When the other new user opens the original workflow from the shared network location, the salesforce tools display an error "Salesforce Input (1): {'error': 'invalid_grant', 'error_description': 'authentication failure'}" and the tools fail to load any data. But we can see the full query in the tool and we can even set the custom query option and validate the query successfully, which suggests the source is being correctly connected to and queried, but we just can't run the tool.

The only way to run the tool successfully is to change the credentials and re-authenticate the tool. However, this then de-authenticates the original machine, and when we open the workflow there and try to run it, the workflow brings back the same error.

We've both tried this authentication back and forth on our own machines and each time one of us re-authenticates, it de-authenticates the other, leading to it triggering the error.

Can someone help explain what's going on and how to fix it, as this doesn't bode well for our collaboration.

We're both running:

The latest build of Designer 2021.2 (original machine also running desktop automation)

Salesforce Input Tool v4.1.0

Salesforce Output Tool v1.3.0

My response here identifies that this is a problem for our organization as well:

We're experiencing the same issue. It appears to be related to how the tool handles password and security token decryption. I've found that when you modify the related registry entry from "true" to "false", you can see in the tool's xml that the encrypted password and security token are still in there. I'm not sure what else is going on behind the scenes beyond that, but that ought to be addressable by the product teams handling the Salesforce connectors and the Python installation embedded in Designer.

The only differences in our environment compared to u/Dubya's are that we're running on 2020.4 and attempting to use Salesforce Input Tool v4.2.4.

This is a must have for anyone who needs the ability to share workflows among multiple users. This is part of a series of problems that these updated connectors have been plagued with since introducing them years ago, and no one at Alteryx seems to care enough to truly fix the problems. Salesforce is a core system for our organization, so having tools that utilize the latest version of Salesforce's APIs is very important to us. The additional features that the Input tool provides are welcome, but these bugs have to be sorted out in order for us to extract any kind of value out of them. If the "deprecated" Salesforce tools were ever to be removed from Designer while there are issues with the "new" connectors, we would have no choice other than to never upgrade Designer/Server again and be forced to look for another product to serve as our ETL platform.

Please, please, please address this.

When building custom tools for Alteryx using the Python SDK, there is no current way to test these outside of the Alteryx Designer.

This means that your development process is:

- write some code (no code-sense; intellisense; auto-complete because Jupyter; VSCode; Visual Studio; etc cannot access AlteryxEngine or any of the other imports)

- hope

- copy that .py module into your C:\Users\<username>\AppData\roaming\Alteryx\Tools\<toolname>

- fire up Alteryx

- drop this new custom tool on a canvas

- run it to see if you get any errors

- then copy these errors out of Alteryx result window into Notepad to be able to read them

- then go back into your development environment to make changes

- repeat.

This is very painful, and this will directly scare most people away from learning how to create custom tools since it's not only inefficient - but also scary and frustrating for beginners.

Proposal:

Could we instead create mock python libraries; and a development harness (like Google does with Android development in Eclipse) in this SDK where:

- you have full code intelligence (intellisense, autocomplete)

- you can simulate engine events in a test harness (for example in the Android SDK; you can simulate the user rotating their phone, turning off GPS, hitting a volume button, etc).

- you can also write test cases which can run automatically

- then once you know that your tool will work - only then you drop it into the Alteryx Designer environment.

NOTE: This IDE way of thinking also allows you to bring the configuration pieces (like number of inputs; etc) out of raw code and into configuration options.

Although you may be able to do remote debugging by using platforms like PyCharm - that really does not give you the full ability to check in the code of your tool; along with all the test cases; in a harness that allows you to automatically check different events; or to make sure that your tool works in the test harness before deploying.
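As a rough illustration of the proposal above, here is a minimal sketch of what a mock library plus automated test cases could look like using only the Python standard library. The stub module name `AlteryxPythonSDK`, the `AlteryxEngine` attribute, the `output_message` call, and the `UppercaseTool` class are all assumptions made for this example; they are not the real SDK interface.

```python
import sys
import types
import unittest
from unittest import mock

# Hypothetical stand-in for the engine module that only exists inside Designer.
# The module name and its contents are assumptions for illustration.
fake_sdk = types.ModuleType("AlteryxPythonSDK")
fake_sdk.AlteryxEngine = mock.MagicMock  # each call builds a fresh mock engine
sys.modules.setdefault("AlteryxPythonSDK", fake_sdk)

import AlteryxPythonSDK  # resolves to the stub above when run outside Designer


class UppercaseTool:
    """Hypothetical custom tool: upper-cases one field of each record."""

    def __init__(self, engine, field_name):
        self.engine = engine
        self.field_name = field_name
        self.output = []

    def push_record(self, record):
        value = record.get(self.field_name)
        if value is None:
            # Report the problem through the (mocked) engine instead of crashing.
            self.engine.output_message("error", f"Missing field: {self.field_name}")
            return False
        self.output.append({**record, self.field_name: value.upper()})
        return True


class UppercaseToolTest(unittest.TestCase):
    def setUp(self):
        self.engine = AlteryxPythonSDK.AlteryxEngine()
        self.tool = UppercaseTool(self.engine, "City")

    def test_uppercases_field(self):
        self.assertTrue(self.tool.push_record({"City": "lille"}))
        self.assertEqual(self.tool.output, [{"City": "LILLE"}])

    def test_missing_field_reports_error(self):
        self.assertFalse(self.tool.push_record({"Town": "lille"}))
        self.engine.output_message.assert_called_once()
```

Saved as a file, this can be run with `python -m unittest` from any editor, so the tool logic and its error reporting are checked before anything is copied into the Designer tools folder.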

Thank you

cc: @BlytheE @stevea @Ozzie @tlarsen7572 @cam_w @jdunkerley79

The Multi-Field formula tool has three really powerful features that it supports:

[_CurrentField_]

[_CurrentFieldName_]

[_CurrentFieldType_]

These are really powerful within Multi-Field formulas because they allow for a dynamic process to apply across multiple fields.

However, they would also be very helpful in regular formulas and Multi-Row formulas, for code transportability.

A basic example: I have a Longitude field that is a string. I need to set it to a value of 0 if there is a null value.

My formula today:

IF ISNULL([Longitude]) THEN 0 ELSE [Longitude] ENDIF

Now let's say I want to use the same formula somewhere else, but for Latitude instead.

That formula looks like:

IF ISNULL([Latitude]) THEN 0 ELSE [Latitude] ENDIF

If I could use [_CurrentField_] instead, that would allow me to instead write both formulas as:

IF ISNULL([_CurrentField_]) THEN 0 ELSE [_CurrentField_] ENDIF

This code can easily be copied for any field that requires replacing Nulls with 0s, and doesn't require refactoring to use a Multi-Field formula instead.

This also means that if I later change my field name, the code will remain consistent. This not only speeds up development time and flexibility, but more readily allows for validation that the existing code has not changed.
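To make the portability argument concrete, here is a small Python sketch that emulates the requested behavior outside Designer by expanding one field-agnostic template into per-field formulas. The `expand_formula` helper is hypothetical and only does text substitution; it is not how Designer evaluates expressions.

```python
# One field-agnostic template, written once.
TEMPLATE = "IF ISNULL([_CurrentField_]) THEN 0 ELSE [_CurrentField_] ENDIF"


def expand_formula(template: str, field_name: str) -> str:
    """Substitute a concrete field name for the [_CurrentField_] placeholder."""
    return template.replace("[_CurrentField_]", f"[{field_name}]")


for name in ("Longitude", "Latitude"):
    print(expand_formula(TEMPLATE, name))
```

Both generated expressions come from the same source text, which is what makes it easy to verify that the logic has not drifted between fields.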

When a custom (bespoke for @Chrislove) macro is created, I would like the option to create an annotation that goes along with the tool. This is entirely cosmetic, but might help users to recognize the macro.

Thanks,

Mark

Every time we create a file output - you first have to check if the folder exists - and if not then create it.

Currently it's quite onerous to do a directory create - especially with all the error trapping to make this production safe - and everyone is reinventing the wheel in their own companies.

Given the commonality of this need - could we add a tool that allows you to check for the existence of a directory and attempt to create it (with nested directories and useful status / error descriptions to act upon)?
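For reference, the behavior being asked for is roughly what the sketch below does in Python (for example inside a Python tool). The `ensure_directory` name and the status strings are assumptions for illustration, not an existing Alteryx feature.

```python
from pathlib import Path


def ensure_directory(path):
    """Return (status, detail), where status is 'exists', 'created', or 'error'."""
    target = Path(path)
    if target.is_dir():
        return ("exists", "")
    try:
        # parents=True creates any missing nested directories in one call.
        target.mkdir(parents=True, exist_ok=True)
    except OSError as exc:
        # Covers permission problems, invalid paths, and a file already using the name.
        return ("error", f"{type(exc).__name__}: {exc}")
    return ("created", "")
```

Returning a status and a description, instead of raising, mirrors the request for output that a downstream tool can act upon.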

Hi Alteryx Devs -

It would be *really tight* to have a drop-down interface tool that would support auto completion based on an ODBC connection to a table/column or an AJAX call. I recently had a situation wherein we needed to give the users the ability to select an address, then run a workflow. But the truth is, our address data is terrible, and what I really needed was to be able to let the users start typing the address, then give them a list of choices to pick from, they pick the correct (but usually wrongly formatted) address, and then I send that value into the workflow.

I could not find a decent way to give a gallery user a reliable way to pick an address from our list, so I eventually wound up having to write an AJAX piece to handle the auto completion, capture the user input, then post to a service that would in turn interact with gallery through the API, get the response, and send it back to the calling page, and back to the user. That is a significant amount of work to put into something as exceedingly common on the web as auto completion.
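The matching step itself is small. A minimal sketch of the server-side lookup, assuming the candidate addresses have already been loaded into a list (the `suggest` function and its ranking rule are hypothetical):

```python
def suggest(query, choices, limit=5):
    """Return up to `limit` choices matching what the user has typed so far."""
    # Normalize case and collapse repeated whitespace in the typed text.
    needle = " ".join(query.lower().split())
    if not needle:
        return []
    # Prefix matches are listed first, then any other substring matches.
    prefix = [c for c in choices if c.lower().startswith(needle)]
    contains = [c for c in choices if needle in c.lower() and c not in prefix]
    return (prefix + contains)[:limit]
```

The selected string is returned exactly as stored, so a badly formatted but correct address still flows into the workflow unchanged.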

This would make a lot of gallery operations flow so much more naturally.

Thanks for listening!

brian

We will not be enabling DCM for the time being (see https://community.alteryx.com/t5/Alteryx-Designer-Desktop-Ideas/Enable-auto-complete-predictive-typi...).

But, when you do not enable DCM, you get an annoying pop up every time you open Designer that says "DCM toggle is not enabled".

Please give us the ability to turn this pop up off.

Problem statement -

Currently we store our Alteryx data in the .yxdb file format. Whenever we want to fetch data, the whole dataset is first loaded into memory, and only afterwards can we apply a Filter tool to get the required subset. This is a complete waste of time and resources.

Solution -

My idea is to introduce a YXDB SQL statement tool that can be applied directly in a workflow to get the required dataset from a .yxdb file. I hope this will reduce the overall runtime of the workflow, so the user gets the desired data faster, with better performance and lower memory consumption.
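The general technique behind this request is filtering while reading instead of after loading. The sketch below shows the idea in Python, using a CSV stream as a stand-in because the standard library has no .yxdb reader; the `read_filtered` helper is hypothetical.

```python
import csv
import io


def read_filtered(handle, predicate):
    """Yield only matching rows, one at a time, without holding the full file in memory."""
    for row in csv.DictReader(handle):
        if predicate(row):
            yield row


# Stand-in data source; a real file handle would be used in practice.
data = io.StringIO("id,region\n1,EU\n2,US\n3,EU\n")
for row in read_filtered(data, lambda r: r["region"] == "EU"):
    print(row["id"], row["region"])
```

Only the rows that pass the predicate are ever kept, which is the memory saving the idea is after.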

The Source field of the field metadata is very useful, but has some problems.

- It is repetitious. A long connection string repeated for many fields from the same source can bloat the size of the workflow above 10 MB, and when removed is around 0.5 MB.

- It exposes sensitive information about a company's infrastructure, such as server names, ports, user ids, and proprietary data structures.

I first started paying attention when we found a user's password in the metadata because they had passed it as a string to the Dynamic Input Tool (separate Idea submitted for that - LINK). Then when I had to share an App with the Alteryx Support team for support with an issue, I thought to check the metadata, and I noticed that the file was too big and was exposing information that I would not normally share with another company.

I'm not sure how you want to handle this, but here are some thoughts:

- Default the Source field to 'off' and provide users the option to turn it 'on' in the workflow/app settings.

- Provide a mechanism to strip the 'Source' field at time of saving or exporting the workflow.

- If nothing else, provide education to users on the implications of including this information in the file.
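As a stopgap, the second option above can be approximated with a small script run on a copy of the workflow before sharing it. This sketch assumes the metadata is stored as `Field` elements carrying a `source` attribute; check your own workflow XML before relying on it, since the element and attribute names here are assumptions.

```python
import xml.etree.ElementTree as ET


def strip_source(workflow_xml: str) -> str:
    """Remove the `source` attribute from every Field element in the XML text."""
    root = ET.fromstring(workflow_xml)
    for field in root.iter("Field"):
        # pop with a default so fields without the attribute are left alone.
        field.attrib.pop("source", None)
    return ET.tostring(root, encoding="unicode")


# Illustrative fragment only, not copied from a real workflow.
sample = (
    '<RecordInfo>'
    '<Field name="id" source="odbc:DSN=prod;UID=me" type="Int32" />'
    '</RecordInfo>'
)
print(strip_source(sample))
```

Note that this does not touch connection strings stored elsewhere in the file, such as in tool configuration.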

Thanks for listening!

Cameron

With the 2019.3 release the summarize tool now includes prefixes for grouped fields. While a nice addition, in application it makes using this data downstream (like joining to other tables) more involved because of needing to remove this prefix.

It would be nice to have this as an option (a checkbox to add/remove prefixes maybe) or just revert back to pre-2019.3 behavior...thanks!

Currently the cross tab tool automatically sorts alphabetically by the "New Column Headers" field. Often times I have to output data with dates across the columns and therefore have to do a cross tab to achieve this. The problem is when I have the dates formatted with month names, the crosstab automatically sorts it in alphabetical order instead of date order (i.e. Apr, Aug, Dec, etc vs Jan, Feb, Mar). To get around this issue, I have to use a dynamic rename tool. It would be great if there was a way to choose the order of the crosstab (i.e. in the order of the data, crosstab, another field, etc.).
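The difference between the orderings is easy to see in a short Python sketch. The helper names are hypothetical; the point is only to contrast alphabetical order with the two orders requested above (order of the data, and calendar order).

```python
MONTHS = ["Jan", "Feb", "Mar", "Apr", "May", "Jun",
          "Jul", "Aug", "Sep", "Oct", "Nov", "Dec"]
MONTH_INDEX = {name: i for i, name in enumerate(MONTHS)}


def headers_in_data_order(values):
    """Unique header values in the order they first appear in the data."""
    return list(dict.fromkeys(values))


def headers_in_calendar_order(values):
    """Unique month-name headers sorted Jan..Dec instead of alphabetically."""
    return sorted(set(values), key=MONTH_INDEX.__getitem__)


column_values = ["Jan", "Feb", "Jan", "Mar", "Apr"]
print(sorted(set(column_values)))             # alphabetical order
print(headers_in_data_order(column_values))   # order of the data
```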

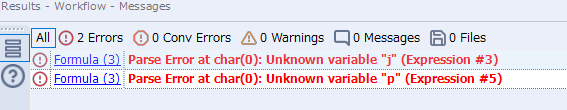

I want to jump to expression #3 of formula (3) when I see the following error message. Right now I can jump to formula (3), but only expression #1 is opened, not #3. If I have 30 expressions, it is hard to find #20 in 30s.

I decided to get real fancy when building a standard macro the other day. I checked the box on my macro input that made the connection optional:

It worked really well. My macro then became more complex, so I changed it to a batch macro. To my great surprise/astonishment/shock, the optional incoming connection is no longer optional:

The standard macro is working as expected on the left, but the batch macro is producing an error because my optional connection is requiring that something be connected to it.

I've been told that the code to make it optional is not there for batch macros and that this would be a product feature/improvement.

In the Gallery, the File Browse tool returns the file location on the server where the file was uploaded. This allows the file to then be read in as input to a workflow.

If you need the file path of the original file location, you have to add a Text Input for the user to manually add it.

In my case (#00293302), I used a chained app to populate a list box for the user to select the Sheet Names they would like to process through the application. Unfortunately, since I was not able to capture the original file location the application errored out. This is due to the second app using the file location on the server where the file was uploaded, which is provided by the first workflow. This file location (from the Browse tool) is a temporary file location, where inputs are immediately deleted after processing.

Want to test this out? Create an application where you Output the file path from a File Browse tool.

I know... grrr, this doesn't match your original file location!

Thank you,

Mark

Where it stands now, only a file input tool can be used to pull data from Google BigQuery tables. The issue here is that the data is streamed and processed locally, meaning the power of BigQuery processing isn't actually being leveraged.

Adding BigQuery In-Database as a connection option would appeal to a wide audience. BigQuery's standard SQL is also compliant with the SQL 2011 standard, so this may make for an even easier integration.

Could we please change the Interactive Chart tool, to:

- recognize when upstream types have changed and reconfigure (in the case of numerical types marked as string)

- For line charts - sort the values in order of the X value

Sample Flow - derivation of challenge 201:

Issue 1:

- The first interactive chart on this flow has no sorting at all performed by the charting tool - this may be due to the fact that the X & Y axes are in string fields. Generally line charts would attempt to sort both the axes and the values (where the values should order according to the X axis). Please can you add a default sort anyway?

Issue 2:

- If you then change the data types on these fields to be numeric - the charting tool still does not sort them until you reconfigure the tool manually

- Request: please can you get the tool to remember the data types, so that it can prompt you; or even better just reconfigure?

(image looks identical after retyping the fields)

Issue 3:

- When you do a manual reconfigure of the tool after changing the types - the axes are sorted, but the values are not - so you end up with a chart that crosses back and forth. Generally line charts are ordered in the order of the X Axis for the values

- Request: Please sort values on the line chart automatically in order of the X value?

NOTE: Finally got the outcome needed by forcing the sort before the interactive chart tool
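The three issues above come down to two steps: convert string values to numbers, then order the points by X before drawing the line. A minimal Python sketch of that logic (the function name is hypothetical):

```python
def sort_points_for_line_chart(xs, ys):
    """Convert string values to numbers and order the points by X."""
    pairs = [(float(x), float(y)) for x, y in zip(xs, ys)]
    pairs.sort(key=lambda point: point[0])
    return [p[0] for p in pairs], [p[1] for p in pairs]


xs = ["10", "2", "1"]
ys = ["100", "20", "10"]
print(sorted(xs))                          # string sort: '10' lands before '2'
print(sort_points_for_line_chart(xs, ys))  # numeric sort keeps X and Y paired
```

Sorting the pairs together, not each axis separately, is what prevents the line from crossing back and forth.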

Dear Alteryx GUI Gang,

I'll create a container and then customize the colours, margins, transparency, border and then want consistency for other containers. It would be nice to have a format painter function (brush) to apply the format of one container to another. This of course could be extended to other tools like comments. There might be a desire to apply this to more tools too, but the comments and containers would be my focus as they are almost always custom configured.

Cheers,

Mark

I recently came to know that Alteryx doesn't support Denodo Data sources. We at our company are using Denodo as a data virtualization tool and also Alteryx is used for data blending. The request is for Alteryx to start supporting Denodo as a data source so that our company can reach out to Alteryx for any support related issues with Denodo.

Can we have a User Setting that lets users choose whether Alteryx should prevent the computer from going into Sleep or Hibernate mode while a workflow is running?
