Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by showing the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels),

> and, at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
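As an illustration of the second suggestion, here is a minimal Python sketch of a label-balanced train/test split, done outside Alteryx. The function name `balanced_split`, the `(path, label)` input format, and the strategy of down-sampling every label to the size of the smallest one are assumptions for illustration, not how the Image Recognition tool works internally.

```python
import random
from collections import defaultdict


def balanced_split(samples, test_fraction=0.2, seed=42):
    """Split (path, label) pairs so every label contributes the same number of images.

    Each label is down-sampled to the size of the smallest label, then divided
    into train and test portions using the same fraction. Raises ValueError
    if samples is empty (min() of an empty sequence).
    """
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)

    rng = random.Random(seed)  # fixed seed for a reproducible split
    per_label = min(len(paths) for paths in by_label.values())
    n_test = int(per_label * test_fraction)

    train, test = [], []
    for label, paths in sorted(by_label.items()):
        chosen = rng.sample(paths, per_label)  # down-sample to the smallest label
        test.extend((p, label) for p in chosen[:n_test])
        train.extend((p, label) for p in chosen[n_test:])
    return train, test
```

With 10 "cat" images and 5 "dog" images and `test_fraction=0.2`, each label keeps 5 images: 4 go to training and 1 to testing. Down-sampling discards data; weighting or augmentation are alternatives when images are scarce.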

Question: will you, in the future, allow the user to choose between CPU and GPU usage?

In any case, thank you again for this new tool. It certainly has room for improvement, but it is very simple to use, and I sincerely think that it will help a greater number of people understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


I was setting up a rather large set of repetitive filters and formulas, and when I was done, I wanted to select the outputs of all those tools at once and drag them into a Union tool. I think it would be great if you could hold the Ctrl key to select multiple outputs and drag them to the next tool at the same time.

Is it possible to add sort functionality to the Sample tool in Designer, similar to the 'Sample Based on Order' functionality in the Sample tool in Designer Cloud? This would cut down on the Sort + Sample tool combo in Designer!
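For reference, the Sort + Sample combination this idea wants to collapse amounts to "order the rows, then keep the first N (optionally per group)". A minimal Python sketch of that logic follows; `first_n_by_order` and its parameters are hypothetical names, and the exact options of the Designer Cloud "Sample Based on Order" feature are not reproduced here.

```python
from itertools import groupby, islice
from operator import itemgetter


def first_n_by_order(rows, sort_key, n, group_key=None, descending=False):
    """Return the first n rows by sort_key, optionally within each group.

    Roughly equivalent to a Sort tool followed by a Sample tool set to First N.
    """
    ordered = sorted(rows, key=itemgetter(sort_key), reverse=descending)
    if group_key is None:
        return ordered[:n]
    # sorted() is stable, so re-sorting by group keeps the sort_key order
    # inside each group while making each group contiguous for groupby.
    ordered = sorted(ordered, key=itemgetter(group_key))
    result = []
    for _, group in groupby(ordered, key=itemgetter(group_key)):
        result.extend(islice(group, n))
    return result
```

For example, `first_n_by_order(rows, "sales", 1, group_key="region", descending=True)` keeps the top-selling row in each region.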

Thanks!

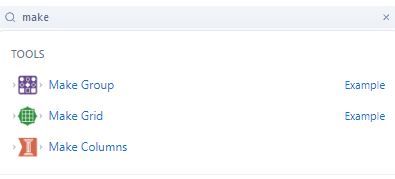

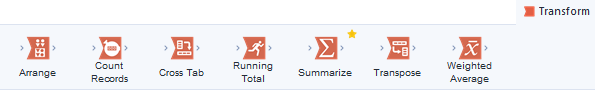

I was just responding to a post about the Make Columns tool, and I noticed that there is no example workflow for this tool built into Designer. It is also missing from the Transform category, so I never think of it.

Hi all,

I think it would be great if Alteryx could send calendar invites in Outlook (and perhaps other calendaring systems) like it sends emails.

Currently the only way to accomplish this is to send it as an attached ICS file on a regular email.

In my use case, rather than the event auto-populating a shared mailbox/calendar, someone has to go into the inbox, open the email, and then click the ICS file to interact with it.

There are ways in Outlook to send an item like this and have it appear automatically without the end user ever seeing the actual invite. (Our company adds holidays and other important dates in this manner.)

So basically I want this functionality available in Alteryx to do the same. I have posted about it before in the discussion threads, but basically, right now we enter our time in our HR system, then have to manually enter the same info on our personal calendar in Outlook and on any team calendar, whether it is a SharePoint calendar, a group calendar in Outlook, etc.

If Alteryx had the ability to send these types of invites, employees could enter the info in our HR system, and Alteryx could then pick up the data feed and automatically populate the other calendars (whichever type they may be).
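The current workaround described above boils down to generating an ICS file. As a sketch of that payload only (it does not deliver the invite, and it does not make Outlook add the event silently), here is a minimal RFC 5545 all-day event built in Python. The PRODID value, the UID domain, the `METHOD:PUBLISH` choice, and the function names are assumptions for illustration.

```python
import uuid
from datetime import date, datetime, timedelta, timezone


def escape_text(value):
    """Escape characters that RFC 5545 treats specially in TEXT values."""
    return (value.replace("\\", "\\\\").replace(";", "\\;")
                 .replace(",", "\\,").replace("\n", "\\n"))


def build_all_day_event(summary, start, days=1):
    """Return a minimal iCalendar document for an all-day event."""
    end = start + timedelta(days=days)  # DTEND is exclusive for all-day events
    stamp = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%SZ")
    lines = [
        "BEGIN:VCALENDAR",
        "VERSION:2.0",
        "PRODID:-//Example//HR Time Off Feed//EN",  # hypothetical identifier
        "METHOD:PUBLISH",
        "BEGIN:VEVENT",
        f"UID:{uuid.uuid4()}@example.com",          # hypothetical domain
        f"DTSTAMP:{stamp}",
        f"DTSTART;VALUE=DATE:{start:%Y%m%d}",
        f"DTEND;VALUE=DATE:{end:%Y%m%d}",
        f"SUMMARY:{escape_text(summary)}",
        "END:VEVENT",
        "END:VCALENDAR",
    ]
    # RFC 5545 uses CRLF line endings. Lines longer than 75 octets should also
    # be folded; this sketch skips folding, so keep summaries short.
    return "\r\n".join(lines) + "\r\n"
```

For example, `build_all_day_event("PTO, Jane", date(2024, 7, 4))` produces a one-day event with `DTSTART;VALUE=DATE:20240704` and `DTEND;VALUE=DATE:20240705`.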

Hopefully this gets some likes.

TIBCO Data Virtualization is a Data Virtualization product focused on creating a virtual data store consolidating data from throughout the enterprise. It can be accessed via a SQL query engine, and has a variety of supported connectors, including an ODBC driver.

This data source can be connected to via ODBC in Alteryx today, but error messaging is unclear/unhelpful, and attempting to use the Visual Query Builder causes Alteryx to crash.

Adding TIBCO Data Virtualization as a supported ODBC connection would empower business users to leverage this product and easily utilize this enterprise data store, enhancing the value of the Alteryx platform as a consumer of this data.

Hello,

Tableau has a very useful "split" function that allows you to split a string on a delimiter and specify which token of the result you want:

https://onlinehelp.tableau.com/current/pro/desktop/en-us/functions_functions_string.htm

Qlik has the same function, SubField: https://help.qlik.com/en-US/sense/February2019/Subsystems/Hub/Content/Sense_Hub/Scripting/StringFunc...

I think this is quite useful and a very standard feature.
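To make the requested behavior concrete, here is a minimal Python sketch modeled loosely on Tableau's SPLIT and Qlik's SubField: 1-based token numbers, with negative numbers counting from the end. The name `split_part` and the empty-string result for out-of-range tokens are assumptions; check each product's documentation for its exact edge cases.

```python
def split_part(text, delimiter, token):
    """Return the token-th piece of text split on delimiter (1-based).

    Negative token numbers count from the end (-1 is the last piece).
    Out-of-range tokens return an empty string in this sketch.
    """
    if token == 0:
        raise ValueError("token numbers start at 1 (or -1 from the end)")
    parts = text.split(delimiter)
    index = token - 1 if token > 0 else token
    if -len(parts) <= index < len(parts):
        return parts[index]
    return ""
```

For example, `split_part("a-b-c", "-", 2)` returns `"b"` and `split_part("a-b-c", "-", -1)` returns `"c"`.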

Best regards,

Simon

Hello Alteryx Devs -

When I go to write some scripting in the Formula tool, the fields in my data stream should be the first suggestions once I start typing a letter, not the last.

uppercase(Ad -> gives me:

DateTimeAdd

FileAddPaths

PadLeft

PadRight

ReadRegistryString

[Address]

I think we would need a dedicated R macro to estimate the chances that anyone is going to need [ReadRegistryString] before they need a column of their own data that starts with [Ad...]
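A minimal Python sketch of the proposed ordering: data-stream fields first, then prefix matches before substring matches, then alphabetical. The function name and inputs are hypothetical; the case-insensitive substring matching mirrors the example list above, where `Ad` also matches `PadLeft` and `ReadRegistryString`.

```python
def rank_suggestions(typed, fields, functions):
    """Order autocomplete suggestions with the user's own fields first."""
    needle = typed.lower()
    # Each candidate: (display label, name used for matching, is_field flag)
    candidates = [(f"[{name}]", name, True) for name in fields]
    candidates += [(name, name, False) for name in functions]
    matches = [c for c in candidates if needle in c[1].lower()]
    # Sort key: fields before functions, prefix matches before substring
    # matches, then case-insensitive alphabetical order.
    matches.sort(key=lambda c: (not c[2], not c[1].lower().startswith(needle), c[1].lower()))
    return [label for label, _, _ in matches]
```

With the fields `Address` and `City` and the functions from the example, typing `Ad` would list `[Address]` first, followed by `DateTimeAdd`, `FileAddPaths`, `PadLeft`, `PadRight`, and `ReadRegistryString`.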

Easy fix. Makes a big difference.

Thanks.

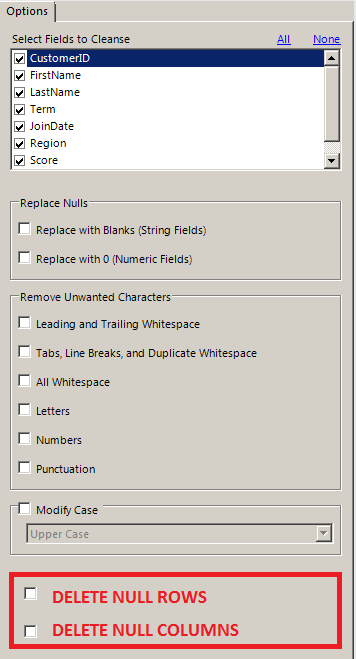

There are a few workarounds for this task, but it would be really easy if the Data Cleansing tool could delete null rows and null columns. After all, it's just a macro that can be modified and re-packaged into Alteryx Designer.

Currently, deleting a null row requires checking multiple columns for common null attributes. Similarly, deleting a null column requires checking every column across all rows and flagging it for removal. Both of these approaches are clumsy.

Wouldn't it be so simple if Data Cleansing Tool gave such check boxes !!!
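The two checkboxes requested here come down to two passes over the data. A minimal Python sketch, assuming rows are dictionaries and that "null" means `None` or a whitespace-only string (adjust that definition to match your own data); the function name is hypothetical.

```python
def drop_null_rows_and_columns(rows, columns):
    """Remove rows whose values are all empty, then columns that are all empty."""
    def is_empty(value):
        # Assumption: None and whitespace-only strings count as null.
        return value is None or (isinstance(value, str) and value.strip() == "")

    # Pass 1: drop rows where every column is empty.
    kept_rows = [r for r in rows if not all(is_empty(r.get(c)) for c in columns)]
    # Pass 2: drop columns that are empty in every remaining row.
    kept_cols = [c for c in columns if not all(is_empty(r.get(c)) for r in kept_rows)]
    return [{c: r.get(c) for c in kept_cols} for r in kept_rows], kept_cols
```

Removing null rows first means a column that only had values in otherwise-empty rows is still judged correctly in the second pass.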

I know it sounds trivial, but I hate having to do the extra click to get the browse tool to pop out. Just upgraded from 2020.2 to 2021.3. Before, you could pop out a browse window in 2 clicks:

Now you need 3 clicks:

Like I said, I know it sounds trivial, but when you do this dozens of times a day, it adds up to a big annoyance.

Anyway, I was just wondering whether enough others feel the same, and if so, hopefully the Browse behavior could be reverted to a 2-click pop-out.

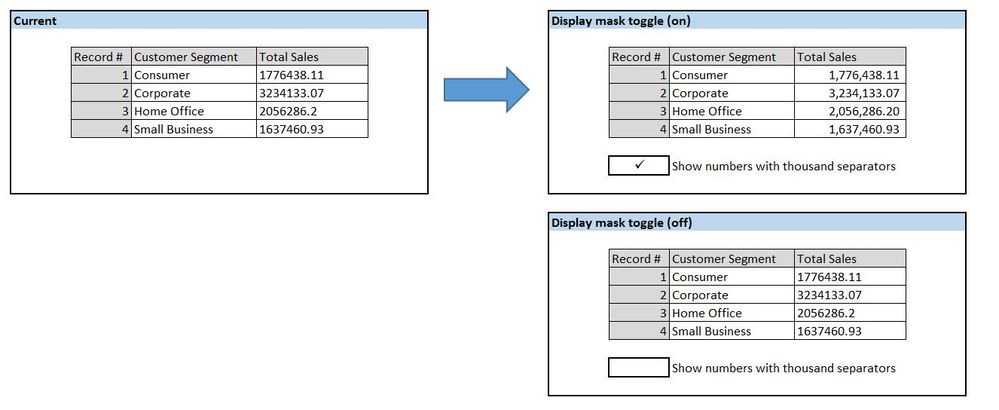

Although I must say that I just LOVE the comma inserter.

Hello all,

When looking at the Results window, I often find it a headache to read the numeric results because of the lack of commas. I understand that incorporating commas into the data itself could make for some weird errors; however, would it be possible to toggle an option that displays all numeric fields with proper commas and right-aligned in the Results window? I am referring to using a display mask to make numeric fields look like they have the thousands separator while retaining numeric functionality (as opposed to converting the fields to strings).
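The display-mask idea can be illustrated with Python's format specification: the number stays numeric, and only the string shown to the user gets thousands separators and right alignment. The function name and the default width and precision are assumptions; a real implementation would also respect the user's locale (for example, periods as thousands separators).

```python
def display_number(value, decimals=2, width=15):
    """Format a number with thousands separators for display only.

    The underlying value is not modified; only the returned string contains
    commas and is right-aligned to a fixed width.
    """
    return f"{value:>{width},.{decimals}f}"
```

For example, `display_number(1234567.891)` returns `"   1,234,567.89"`, while the value passed in remains a float for sorting and arithmetic.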

What do you think?

While Alteryx allows for a proxy username and password in the settings, these are not passed properly to an NTLM proxy. Support for NTLM authentication would be incredibly useful for a number of corporations who utilize this firewall setup.

We currently have to either download via Python or cURL through batch commands called by Alteryx. Since Alteryx uses a cURL back-end, this should be a fairly simple addition to the existing download tool by allowing a selection of proxy server, port, and authentication method in addition to the proxy username and password. This could be done either in the tool itself or in User Settings.
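The batch-command workaround mentioned above can be sketched in Python as a function that builds the curl call. `--proxy`, `--proxy-ntlm`, `--proxy-user`, `--fail`, and `--output` are standard curl options; the function name and its parameters are hypothetical.

```python
def build_ntlm_download(url, proxy_host, proxy_port, user, password, output_path):
    """Build a curl argument list that authenticates to an NTLM proxy."""
    return [
        "curl",
        "--proxy", f"{proxy_host}:{proxy_port}",
        "--proxy-ntlm",                          # NTLM authentication with the proxy
        "--proxy-user", f"{user}:{password}",
        "--fail",                                # non-zero exit status on HTTP errors
        "--output", output_path,
        url,
    ]
```

The list can be run with `subprocess.run(cmd, check=True)`. Note that credentials passed on the command line may be visible to other users on the same machine through process listings, which is one more reason to have this handled inside the Download tool.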

Hello,

I had a business case requiring a cost-effective, quick storage solution for real-time, online-sourced survey data from customers. A MongoDB instance would fit the need, so I quickly spun up a cluster on MongoDB Atlas. Atlas was launched by MongoDB in 2016 as a database-as-a-service deployed on AWS. All Atlas instances require TLS/SSL to connect. Currently, the Alteryx MongoDB connector does not support TLS/SSL connections and doesn't work against Atlas. So I was left with a gap in my plan that would require manual intervention before ingesting data into Alteryx (not ideal).

Please consider expanding this functionality to all connectors. I am building Alteryx out in my agency as a data platform that handles sensitive customer information (name, address, email, etc.). Most tools I use to connect to secure servers today support this type of connection, so it should be a priority for Alteryx to resolve.

Thanks,

Mike Schock

Need ability to call Stored Procedures in Snowflake Database.

Limiting conversion warnings allows a minimum of 1 message. Can we set the minimum to 0 to completely ignore these messages?

Perhaps warning messages could get the same options as ERROR messages, letting the designer choose Ignore, Warn, or Cancel?

ConvError: Imputation (441): Tool #104: No demand: 0.200000000000031 had more precision than a double. Some precision was lost.

ConvError: Summarize (456): Data: 0.360000000004675 had more precision than a double. Some precision was lost.

End: Designer x64: Finished running FP Model - Marquee Crew v3.yxmd in 32.3 seconds with 16 field conversion errors and 4 warnings

Thanks,

Mark

When creating a workflow, I generally open a "TEMPLATE" first and then immediately save it as the "NEW WORKFLOW NAME". My template includes all my preferences that aren't set within the user settings and won't get RESET by them either. It has a comment box and containers as well as logos and copyrights. It would be nice to have built-in support for starting a workflow from a template like this. Maybe others have standards that they want applied to all users and their workflows too.

Thanks,

Mark

Hey all,

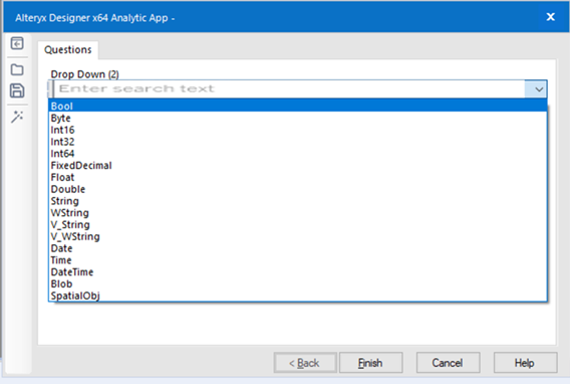

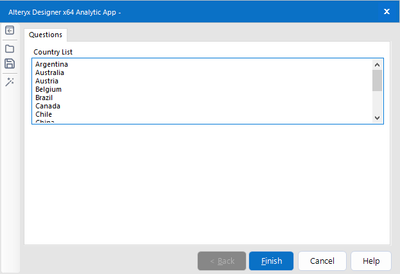

I would love to be able to have an interface tool that allows a user to search through drop down values (when there are more than 100 or so) similar to autocomplete. It would be helpful as a multiselect or single select drop down. I have inserted a very poorly mocked up picture below. It would essentially be a modified version of the drop down as all the values would be in the tool, but the user could type to find what they are looking for.

Here's a reason to get excited about AMP! Create a runtime setting that gets Alteryx working even faster.

When you configure a file input, you see 100 records. Imagine the delight if, after you run your workflows, all input tools were automatically cached. You would run so much faster.

Now think of the absolute delight if, even before you run the workflow, a configured input tool triggered a background read of the input data. Whether it is a new workflow or an existing one you just opened, that reading could start ahead of time, before you click Run.
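The "cache after the first run" half of this idea can be sketched in Python as a read cache that re-reads a file only when its modification time or size changes. The class name, the `reader` callback, and the fingerprint choice are assumptions for illustration. This is not how AMP caches data, and it leaves out the background pre-read.

```python
import os


class InputCache:
    """Cache parsed file contents, re-reading only when the file changes on disk."""

    def __init__(self, reader):
        self._reader = reader   # function that parses a file path into records
        self._entries = {}      # path -> (fingerprint, records)

    def read(self, path):
        stat = os.stat(path)
        fingerprint = (stat.st_mtime_ns, stat.st_size)
        cached = self._entries.get(path)
        if cached is not None and cached[0] == fingerprint:
            return cached[1]                # unchanged since the last read
        records = self._reader(path)        # first read, or the file was modified
        self._entries[path] = (fingerprint, records)
        return records
```

Keying on modification time plus size is cheap, but it can miss an edit that keeps both unchanged; hashing the file contents is safer but costs a full read.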

What do you think 🤔?

From Wikipedia

Druid is a column-oriented, open-source, distributed data store written in Java. Druid is designed to quickly ingest massive quantities of event data, and provide low-latency queries on top of the data.[1] The name Druid comes from the shapeshifting Druid class in many role-playing games, to reflect the fact that the architecture of the system can shift to solve different types of data problems. Druid is commonly used in business intelligence/OLAP applications to analyze high volumes of real-time and historical data.[2] Druid is used in production by technology companies such as Alibaba,[2] Airbnb,[2] Cisco,[3] eBay,[4] Netflix,[5] PayPal,[2] Yahoo,[6] and Wikimedia Foundation.[7]

More and more companies are moving from Hive to Druid for data visualization needs; maybe it's time to look at Druid integration with Alteryx?

I have developed many workflows, macros, and apps, and I have always had to find a workaround for displaying information on the user config page or user interface.

For example, I want to input 'Default text' into the Text Box interface tool, but the problem is that it does not accept any external connection.

It would be great if this tool had a Q input anchor that could accept data from a connected tool (in both single or multi-line mode) or from external input (such as a file for DropDown list or List Box tools).

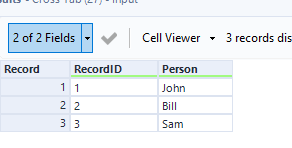

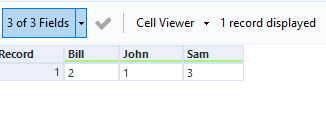

The Cross Tab tool automatically alphabetizes the column headers, which can be a little awkward when unioning on column position later on. It would be nice to make this optional through a checkbox on the tool.
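A minimal Python sketch of the requested option. With `sort_headers=False`, new columns keep the order in which their header values first appear; with `True`, it mimics the current alphabetical behavior. The function and parameter names are hypothetical, and unlike the Cross Tab tool this sketch does not aggregate duplicate cells (the last value wins).

```python
def cross_tab(rows, group_field, header_field, value_field, sort_headers=False):
    """Pivot header_field values into columns, one output row per group."""
    headers = {}   # dict keys keep first-appearance order (Python 3.7+)
    table = {}     # group value -> {header: value}
    for row in rows:
        headers.setdefault(row[header_field], None)
        # Later duplicates overwrite earlier ones; no aggregation is done here.
        table.setdefault(row[group_field], {})[row[header_field]] = row[value_field]
    columns = sorted(headers) if sort_headers else list(headers)
    return [
        {group_field: group, **{col: values.get(col) for col in columns}}
        for group, values in table.items()
    ]
```

Keeping first-appearance order lets the upstream sort of the data control the column layout, which is what makes a later union by position predictable.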

- New Idea 396

- Accepting Votes 1,783

- Comments Requested 20

- Under Review 181

- Accepted 47

- Ongoing 7

- Coming Soon 13

- Implemented 550

- Not Planned 106

- Revisit 56

- Partner Dependent 3

- Inactive 674

- Admin Settings 22

- AMP Engine 27

- API 11

- API SDK 230

- Bug 1

- Category Address 13

- Category Apps 114

- Category Behavior Analysis 5

- Category Calgary 21

- Category Connectors 252

- Category Data Investigation 79

- Category Demographic Analysis 3

- Category Developer 220

- Category Documentation 82

- Category In Database 215

- Category Input Output 658

- Category Interface 246

- Category Join 109

- Category Machine Learning 3

- Category Macros 156

- Category Parse 78

- Category Predictive 79

- Category Preparation 406

- Category Prescriptive 2

- Category Reporting 205

- Category Spatial 83

- Category Text Mining 23

- Category Time Series 24

- Category Transform 93

- Configuration 1

- Content 2

- Data Connectors 985

- Data Products 4

- Desktop Experience 1,616

- Documentation 64

- Engine 136

- Enhancement 422

- Event 1

- Feature Request 219

- General 307

- General Suggestion 8

- Insights Dataset 2

- Installation 26

- Licenses and Activation 15

- Licensing 16

- Localization 8

- Location Intelligence 82

- Machine Learning 13

- My Alteryx 1

- New Request 229

- New Tool 32

- Permissions 1

- Runtime 28

- Scheduler 26

- SDK 10

- Setup & Configuration 58

- Tool Improvement 210

- User Experience Design 165

- User Settings 87

- UX 228

- XML 7


- Carolyn on: Blob output to be turned off with 'Disable all too...

- MJ on: Add Tool Name Column to Control Container metadata...

- fmvizcaino on: Show dialogue when workflow validation fails

- ANNE_LEROY on: Create a SharePoint Render tool

- jrlindem on: Non-Equi Relationships in the Join Tool

- AncientPandaman on: Continue support for .xls files

- EKasminsky on: Auto Cache Input Data on Run

- jrlindem on: Global Field Rename: Automatically Update Column N...

- simonaubert_bd on: Workflow to SQL/Python code translator

- abacon on: DateTimeNow and Data Cleansing tools to be conside...
