Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> at least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: will you, in the future, allow the user to choose between CPU and GPU usage?

In any case, thank you again for this new tool, it is certainly perfectible, but very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible thanks to image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


These tools seem to be volatile: if you click on them before you run the workflow, they lose their configuration. This is infuriating. Can we change this so they behave like every other tool, where you can copy, paste or click into it at any time and it remembers its config?

Nick

- Category Transform

- Desktop Experience

A simple quality-of-life improvement that I would love is the ability to rename the output fields of the Transpose tool in its configuration, rather than only having 'Name' and 'Value'.

It would just let me drop the renaming of these fields afterwards :)

- Category Transform

- Desktop Experience

Hope this is fairly self-explanatory.

I'd like to be able to create presets for Summarize tool. Instead of having Group By, Sum, Count, Count Non Null, etc on top of the libraries of functions, put them into their own category. Users could then create a Favorites and the functions that they use the most would be stored in that section (editable by user).

- Category Transform

- Desktop Experience

I am on a forecasting project where we convert one vector of forecasts into another vector of forecasts by multiplying by a conversion matrix. This is very clumsy and fragile to do in Alteryx meaning we have to drop out to Excel. The ability to do very simple matrix multiplication in Alteryx would be very useful here and in other use cases. I realise you can probably exit to R and do the job, but for something so basic that shouldn't be required.

The relational representation of an mxp matrix is a three column table of cardinality mxp with columns { I , J , A }, where I labels the first index set with index i, J labels the second index set with index j, and A labels the numeric values with value a(i,j). Given a second pxn matrix { J, K, B } in relational form we should be able to multiply them to get a mxn matrix { I, K, C} in relational form where of course c(i,k) = sum over j in J of a(i,j)*b(j,k).

Vectors can of course be represented as 1xn and nx1 matrices. If you really wanted to go to town, this could be generalised to array processing à la APL2.
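The relational multiplication described above amounts to a join on J followed by a sum grouped by (I, K). A minimal Python sketch of that idea, assuming each matrix arrives as a list of (row label, column label, value) triples; the function name and the sample labels are hypothetical and not part of any Alteryx tool:

```python
from collections import defaultdict


def relational_matmul(a_rows, b_rows):
    """Multiply two matrices given as (row, col, value) triples.

    a_rows holds (i, j, a) triples and b_rows holds (j, k, b) triples.
    The result holds (i, k, c) triples with c(i,k) = sum over j of a(i,j)*b(j,k).
    """
    # Index B by its row label j so each A triple can find its join partners.
    b_by_j = defaultdict(list)
    for j, k, b in b_rows:
        b_by_j[j].append((k, b))

    # Join on j, multiply, then sum per (i, k): a join followed by a group-by.
    totals = defaultdict(float)
    for i, j, a in a_rows:
        for k, b in b_by_j.get(j, []):
            totals[(i, k)] += a * b

    return sorted((i, k, c) for (i, k), c in totals.items())


# Hypothetical 2x2 conversion matrix applied to a 2x1 forecast vector.
conversion = [("x", "p", 1.0), ("x", "q", 2.0), ("y", "p", 0.0), ("y", "q", 3.0)]
forecast = [("p", "f", 10.0), ("q", "f", 20.0)]
result = relational_matmul(conversion, forecast)
print(result)
```

Missing (i, j) pairs are simply treated as zeros, which is what makes the three-column form convenient for sparse conversion matrices.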

- Category Input Output

- Category Transform

- Data Connectors

- Desktop Experience

Please create the ability to Concat a field in the In-DB Summarize Tool similar to the regular Summarize tool. This would enable much faster processing on concatenating fields using the database's processing power vs. the local machine.

- Category In Database

- Category Transform

- Data Connectors

- Desktop Experience

The Summarize tool should have an option to ignore warnings like this:

Group Bys on Double or Float are not recommended due to rounding error.

- Category Transform

- Desktop Experience


Access to only MD5 hashes via MD5_ASCII(String) and MD5_UNICODE(String) found under string functions is limiting. Is there a way to access other hashing algorithms, ideally via the crypto algorithms from OpenSSL or the .NET framework?

- https://msdn.microsoft.com/en-us/library/system.security.cryptography.hashalgorithm(v=vs.110).aspx

- https://wiki.openssl.org/index.php/Command_Line_Utilities#Signing_.2F_Digest

Hashing functions are a very useful tool to have. There are many different types of hashes and each one has tradeoffs for different uses. This can range from error checking, privacy shielding, password protection, forensic analysis, message authentication (HMAC) and much more. See: http://stackoverflow.com/questions/800685/which-cryptographic-hash-function-should-i-choose

- For workflows with data containing existing hashes, being able to consistently create hashes from non-hashed data for comparison is useful.

- Hashes are also useful because they are the same outside the Alteryx environment. They can be used to confirm correct operation of a production system or a third party's external process.

Access to only MD5 hashes via MD5_ASCII(String) and MD5_UNICODE(String) found under string functions in the formula tool is a start, but quite limiting.

Further, the ability to use non-cryptographic hashes and checksums would be useful, such as MurmurHash or CRC. https://en.wikipedia.org/wiki/List_of_hash_functions

Having the implementation benefit from hardware acceleration (AES-NI / CUDA) would be a great plus for high volume applications.

For reference, these are some hash algorithms that could be useful in workflows:

SHA-1

SHA-256

Whirlpool

xxHash

MurmurHash

SpookyHash

CityHash

Checksum

CRC-16

CRC-32

CRC-32 MPEG-2

CRC-64

BLAKE-256

BLAKE-512

BLAKE2s

BLAKE2b

ECOH

FSB

GOST

Grøstl

HAS-160

HAVAL

JH

MD2

MD4

MD6

RadioGatún

RIPEMD

RIPEMD-128

RIPEMD-160

RIPEMD-320

SHA-224

SHA-256

SHA-384

SHA-512

SHA-3 (originally known as Keccak)

Skein

Snefru

Spectral Hash

Streebog

SWIFFT

Tiger
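For comparison outside Alteryx, several of the algorithms listed above ship with Python's standard library (`hashlib` and `zlib`). The sketch below is only an illustration of producing digests that can be checked against another system; the helper name `digests` is hypothetical, and the choice of text encoding is an assumption that has to match whatever the other system hashes:

```python
import hashlib
import zlib


def digests(text, encoding="utf-8"):
    """Return hex digests of `text` for a few common algorithms."""
    # Hashes operate on bytes, so the encoding is part of the contract.
    data = text.encode(encoding)
    return {
        "MD5": hashlib.md5(data).hexdigest(),
        "SHA-1": hashlib.sha1(data).hexdigest(),
        "SHA-256": hashlib.sha256(data).hexdigest(),
        "SHA-512": hashlib.sha512(data).hexdigest(),
        "SHA-3 (256-bit)": hashlib.sha3_256(data).hexdigest(),
        "BLAKE2b": hashlib.blake2b(data).hexdigest(),
        # CRC-32 is a checksum, not a cryptographic hash.
        "CRC-32": format(zlib.crc32(data), "08x"),
    }


for name, value in digests("abc").items():
    print(f"{name}: {value}")
```

The same string hashed under a different encoding (for example UTF-16) gives a different digest, so two systems only agree when they agree on the byte representation first.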

- Category Data Investigation

- Category Parse

- Category Transform

- Desktop Experience

While In-DB tools are very helpful and cut down the time needed to write complex SQL, some steps are faster to write directly in SQL, such as window functions: OVER (PARTITION BY .....). In Alteryx, we need to create multiple joins and summaries to perform a window function. It would be immensely helpful if there were a SQL editor tool for In-DB workflows where we could edit the SQL code at any point in the workflow or, even better, an "edit" function on every In-DB tool where we could customize the generated SQL code and then send it to the next tool.

This will cut down the time immensely and streamline the workflow to make Alteryx a true contender for the ETL solution space.
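To illustrate the kind of statement meant here, the sketch below runs a single window function that attaches a per-partition total to every row, which would otherwise take a summary plus a join back. It uses Python's built-in `sqlite3` purely as a stand-in database (window functions need SQLite 3.25 or later); the `sales` table and its columns are hypothetical:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, store TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [
        ("East", "E1", 100.0),
        ("East", "E2", 300.0),
        ("West", "W1", 50.0),
        ("West", "W2", 150.0),
    ],
)

# One statement replaces "summarize by region, then join back to the detail rows".
query = """
SELECT region,
       store,
       amount,
       SUM(amount) OVER (PARTITION BY region) AS region_total
FROM sales
ORDER BY region, store
"""
rows = conn.execute(query).fetchall()
for row in rows:
    print(row)
conn.close()
```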

- API SDK

- Category Developer

- Category In Database

- Category Transform

It would be nice to be able to concatenate numeric values (integers, doubles, etc.) directly in the Summarize Tool.

I know this would involve converting it to a string on the backend, but I don't believe there would be any data loss when going from numeric to string. I know this can be done by using other tools like Select or Formula to convert to string before the Summarize, but I don't see any reason why this couldn't be accomplished in a single tool.

Thanks,

Paul

- Category Transform

- Desktop Experience

Can you add the flexibility to access fields based on their position or index, like a[1], a[2], ... a[n], with a[1] being the first column? An option such as max[a] could give the last column and min[a] the first column. In this way, we could easily subset the dataset. In most cases we are handling survey data that has thousands of columns, and when we need to select certain columns we have to manually tick each column checkbox; it's painful to select hundreds of columns. It would be nice to have an option to select based on ID or index. It would also be useful in the Multi-Field Formula tool with a large number of fields, because currently there is no option to write formulas based on the field name column in it.
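As a rough picture of what position-based selection could look like, here is a small Python sketch. It assumes 1-based positions as in the a[1] notation above, plus inclusive (start, end) ranges; the function names and the sample survey columns are hypothetical:

```python
def resolve_positions(n_columns, spec):
    """Turn 1-based positions and inclusive (start, end) ranges into 0-based indices."""
    indices = []
    for item in spec:
        start, end = item if isinstance(item, tuple) else (item, item)
        if not (1 <= start <= end <= n_columns):
            raise IndexError(f"position {item!r} is outside 1..{n_columns}")
        indices.extend(range(start - 1, end))
    return indices


def select_columns(header, rows, spec):
    """Keep only the columns named by position in `spec`, in the order given."""
    idx = resolve_positions(len(header), spec)
    new_header = [header[i] for i in idx]
    new_rows = [[row[i] for i in idx] for row in rows]
    return new_header, new_rows


header = ["id", "q1", "q2", "q3", "q4"]
rows = [[1, "a", "b", "c", "d"], [2, "e", "f", "g", "h"]]

# First column, then column 3 through the last column (the "max[a]" case).
selected_header, selected_rows = select_columns(header, rows, [1, (3, len(header))])
print(selected_header)
print(selected_rows)
```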

Regards,

Jeeva.

- Category Transform

- Desktop Experience

It was discovered that 'Select' transformation is not throwing warning messages for cases where data truncation is happening but relevant warning is being reflected from the 'Formula' transformation. I think it would be good if we can have a consistent logging of warnings/errors for all transformations (at least consistent across the ones based on same use cases - for e.g. when using Alteryx as an ETL tool, 'Select' and 'Formula' tool usage should be common place).

Without this, it is difficult to rely on Alteryx to highlight truncation-related errors and warnings consistently in a workflow that moves data from source to target. It may add the overhead of building custom logic to capture such data issues, and that logic differs from transformation to transformation: for example, when data passes through the 'Formula' tool no custom logging for truncation is needed, but when the same data passes through the 'Select' transformation in the workflow, truncation has to be captured by custom logic.

- Category Data Investigation

- Category Preparation

- Category Transform

- Desktop Experience

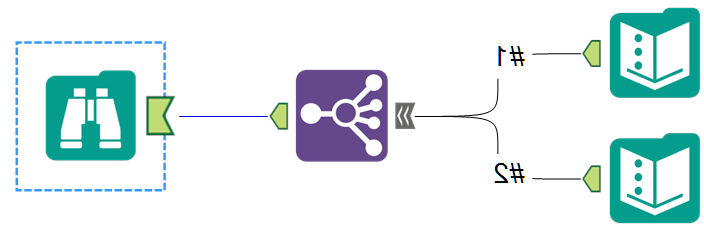

I'd like to see a tool that can take an input, then send it in different directions (similar to formula tool), but with many options... based on filters and/or formulas and/or fields.

Sometimes I need to perform actions on parts of my data or perform different actions depending on whether the data matches certain criteria and then re-union it later.

Right now, the filter tool only allows true or false. If we could customize further we could optimize our workflows rather than stringing filter tools together as if they are nested if/then.

So either the filter tool could have more options than true/false, and infinite outputs, or the join multiple tool could be flipped, as shown below.

I envision something that says:

Split workflow:

- By Field: Field Name (perhaps with summarize functions such as min/max, etc.)

- By Formula (same configuration as current)

- By Filter

  - Field

  - Operator

  - Variable
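The behaviour envisioned above can be sketched as an ordered list of named conditions, with each row routed to the first one it satisfies. This is only a Python illustration of the routing logic, assuming first-match-wins semantics and an extra output for rows that match nothing; the function, branch names, and sample data are hypothetical:

```python
def split_rows(rows, branches):
    """Route each row to the first branch whose predicate matches.

    `branches` is an ordered list of (name, predicate) pairs. Rows that
    match no predicate go to a final "unmatched" output.
    """
    outputs = {name: [] for name, _ in branches}
    outputs["unmatched"] = []
    for row in rows:
        for name, predicate in branches:
            if predicate(row):
                outputs[name].append(row)
                break
        else:
            # The inner loop finished without a break: nothing matched.
            outputs["unmatched"].append(row)
    return outputs


orders = [
    {"id": 1, "amount": 5},
    {"id": 2, "amount": 50},
    {"id": 3, "amount": 500},
    {"id": 4, "amount": None},
]
branches = [
    ("small", lambda r: r["amount"] is not None and r["amount"] < 10),
    ("medium", lambda r: r["amount"] is not None and r["amount"] < 100),
    ("large", lambda r: r["amount"] is not None),
]
outputs = split_rows(orders, branches)
for name, matched in outputs.items():
    print(name, [r["id"] for r in matched])
```

Because every row lands in exactly one output, the branches can be unioned back together later without duplicates, which is the nested if/then pattern that chained Filter tools reproduce today.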

- Category Interface

- Category Transform

- Desktop Experience

Another seemingly minor one that would just make life a little easier and clean up workflows. I often find myself renaming the Name/Value fields from a Transpose to be more descriptive. Currently this requires a Select tool after each transpose, and it would be nice to put a couple of text boxes at the bottom of the transpose to rename Name/Value directly in the tool itself.

- Category Transform

- Desktop Experience

Hello, it would be helpful to be able to have multiple levels of detail in a summarize tool. So, rather than aggregating on the lowest level of the group-bys, being able to select the level or partition for the aggregate. The current workaround for this is having multiple summarize tools and joining back to get all the data in one table.

The configuration would look something like this:

| Field | Action | Aggregation | Output Field Name |
| --- | --- | --- | --- |
| Region | Group by | Region | |
| Metro | Group by | Metro | |
| Store | Group by | Store | |
| UPC | Group by | UPC | |
| Store Sales | Sum | Store; UPC | Item Store Sales |
| Store Sales | Sum | Metro; UPC | Item Metro Sales |
| Store Sales | Sum | Region; UPC | Item Region Sales |
| Store Sales | Sum | Store | Total Store Sales |

With the aggregation field maybe being a pick-list of available "group by" columns. It should default to all the group by columns, but you could un-select some if you wanted a higher level of detail.
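One way to read the configuration above: each Sum row names its own subset of the group-by columns, and the output has one row per full group-by key carrying every total. The Python sketch below follows that reading; the function name and the sample rows are hypothetical, and it assumes store labels are unique across metros:

```python
from collections import defaultdict


def summarize_levels(rows, group_by, value_field, levels):
    """Sum `value_field` at several levels of detail in one pass.

    `levels` maps an output field name to the group-by columns used for
    that particular sum. Returns one row per distinct `group_by` key.
    """
    totals = {name: defaultdict(float) for name in levels}
    for row in rows:
        for name, fields in levels.items():
            totals[name][tuple(row[f] for f in fields)] += row[value_field]

    summary = {}
    for row in rows:
        key = tuple(row[f] for f in group_by)
        if key not in summary:
            out = {f: row[f] for f in group_by}
            for name, fields in levels.items():
                out[name] = totals[name][tuple(row[f] for f in fields)]
            summary[key] = out
    return list(summary.values())


sales = [
    {"Region": "R1", "Metro": "M1", "Store": "S1", "UPC": "U1", "Store Sales": 10.0},
    {"Region": "R1", "Metro": "M1", "Store": "S1", "UPC": "U2", "Store Sales": 5.0},
    {"Region": "R1", "Metro": "M1", "Store": "S2", "UPC": "U1", "Store Sales": 20.0},
    {"Region": "R1", "Metro": "M2", "Store": "S3", "UPC": "U1", "Store Sales": 40.0},
]
levels = {
    "Item Store Sales": ("Store", "UPC"),
    "Item Metro Sales": ("Metro", "UPC"),
    "Item Region Sales": ("Region", "UPC"),
    "Total Store Sales": ("Store",),
}
summary = summarize_levels(sales, ["Region", "Metro", "Store", "UPC"], "Store Sales", levels)
for line in summary:
    print(line)
```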

- Category Transform

- Desktop Experience

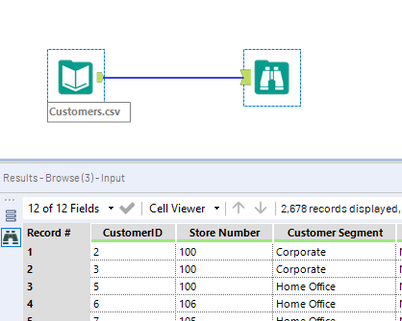

OK, this one will only save a single click per addition of a tool, but with Alteryx's general awesomeness small annoyances become like a stone in the shoe.

Consider my highly complex workflow below ; having inspected the dataset I now realise that I don't want some of the fields in my dataset...

...so I drag a Select tool in to deselect the columns, but I can't remember what the dataset looks like and I can't see the data...

...so I click on the Input tab and there it is, I can deselect away !

It would be particularly useful with something like a Summarize tool where I like to see the data to decide which aggregation to use.

Thanks !

- Category Preparation

- Category Transform

- Desktop Experience

PLEASE add a count function to Formula/Multi-Row Formula/Multi-Field Formula!

I have searched for alternatives but am just confused about how to store the result for the total number of rows from Summarise or Count Records in a variable that can then be used within a Formula tab. It should not be that difficult to just add equivalents to R's nrow() and ncol().

- Category Input Output

- Category Transform

- Data Connectors

- Desktop Experience

Why does Alteryx not have an easier way (drag, drop, click and run) to calculate moving averages with a specified lookback? There are so many things that one has to adjust before calculating moving averages for a simple numeric column.

I understand that there is a CReW macro called "Moving Summarize" that does this, but it is limited to a lookback period of 100. If you have data with millions of rows and need a lookback in the thousands, there is no easy solution.
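For reference, a trailing moving average with an arbitrary lookback needs only a running sum, so its cost does not grow with the window size. A minimal Python sketch, assuming "lookback" means the current row plus the preceding rows up to that count, and that early rows average over whatever is available; the function name is hypothetical:

```python
def moving_average(values, lookback):
    """Trailing mean over the current value and up to lookback - 1 preceding values."""
    if lookback < 1:
        raise ValueError("lookback must be at least 1")
    averages = []
    running = 0.0
    for i, value in enumerate(values):
        running += value
        if i >= lookback:
            # Drop the value that has just fallen out of the window.
            running -= values[i - lookback]
        averages.append(running / min(i + 1, lookback))
    return averages


print(moving_average([1, 2, 3, 4, 5], lookback=3))
```

On very long floating-point series the running sum can accumulate small rounding drift, so recomputing the sum from the window every so often is a common safeguard.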

Does anyone know whether this is in the making? Moving averages are bread and butter for analysts like me. I am urging Alteryx developers to build this tool ASAP; it would bring a lot of comfort to my troubled soul.

Maybe I am missing something here; please enlighten me!

Thank you!

- Category Transform

- Desktop Experience

Idea:

Some well known scoring methods use optimal binned variables for added robustness. Let's add this capability to Alteryx.

Rationale:

Here's a basic link on why to do that: http://documents.software.dell.com/statistics/textbook/optimal-binning

Current status in Alteryx, as far as I'm aware:

The Tile tool, or the Multi-Field Binning tool (which performs the same task as the Tile tool on multiple fields), splits the variables by 5 methods:

Equal Records or Intervals or Sums

Smart Tile

Unique Value

Manual

Unfortunately, "equal something" binnings are a bad idea, as the values are categorized "blindly", irrespective of the effects on the predictive power of the models.

What to do:

What's needed is to bin both numerical and categorical variables optimally, such that the Weights of Evidence (WoE) present a monotone increasing or decreasing pattern, or at most a V- or U-shaped "convex" structure.
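To make the monotonicity requirement concrete, the sketch below computes WoE per bin and checks whether the sequence is monotone. It assumes the common convention WoE = ln(share of goods in the bin / share of bads in the bin); some references use the inverse ratio, which flips the sign but not the monotonicity. The function names and the bin counts are hypothetical:

```python
import math


def weight_of_evidence(bins):
    """Compute WoE for ordered bins given as (label, n_good, n_bad) tuples."""
    total_good = sum(good for _, good, _ in bins)
    total_bad = sum(bad for _, _, bad in bins)
    result = []
    for label, good, bad in bins:
        if good == 0 or bad == 0:
            # WoE is undefined for an empty class; such bins need merging first.
            raise ValueError(f"bin {label!r} has no goods or no bads")
        woe = math.log((good / total_good) / (bad / total_bad))
        result.append((label, woe))
    return result


def is_monotone(values):
    """True if the sequence never decreases or never increases."""
    pairs = list(zip(values, values[1:]))
    return all(a <= b for a, b in pairs) or all(a >= b for a, b in pairs)


# Hypothetical counts of good and bad outcomes per bin, in bin order.
bins = [("low", 100, 40), ("mid", 200, 40), ("high", 300, 20)]
woe = weight_of_evidence(bins)
for label, value in woe:
    print(f"{label}: {value:+.3f}")
print("monotone:", is_monotone([value for _, value in woe]))
```

A binning search would repeat this check while merging adjacent bins until the pattern is monotone, or until it meets the V- or U-shaped relaxation mentioned above.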

Quick win:

Without constraining ourselves with monotonicity or convex cases, the easiest practice would be running a C4.5 or CHAID tree algorithm (produces multiple splits rather than binary splits in CART) for a single variable and select the target as the dependent variable and all the resulting nodes will be the bins we are looking for. Doing this for multiple variables at once is the key to the tool to be generated.

Clients:

This capability is sought by risk management departments building robust, stable, Basel-compliant models in the financial industry, especially by banks.

- Category Predictive

- Category Preparation

- Category Transform

- Desktop Experience

Would it be possible to add some additional options to the running total, in particular average (max and st. dev. may also be useful), to the running sum tool, or to create a rolling average tool where you can group by multiple fields and have a rolling window over the last x rows, which you select? I know this can be done in the Multi-Row Formula tool and with the Moving Summarize tool (http://www.chaosreignswithin.com/2014/12/moving-summarize.html); however, the former is capped at 10,000 rows, and Alteryx crashes on my Mac when I try to click on the Multi-Row Formula tool with 6,000 rows. It also takes several hours to analyse one file, and I would like a solution that can do this on a daily basis. I also need to be able to do a rolling average over the last 11,999 rows, as this would be the last 20 minutes of data from a 10Hz GPS unit. This would be a great tool, I think.
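A grouped rolling window of this kind can be sketched with one fixed-length buffer per group. At 10 Hz, 20 minutes is 20 x 60 x 10 = 12,000 samples, i.e. the current row plus the previous 11,999. The Python below is a minimal illustration under those assumptions; the function name and the `player` and `speed` fields are hypothetical:

```python
from collections import defaultdict, deque


def grouped_rolling(rows, group_fields, value_field, window):
    """Add a rolling mean and max over the last `window` rows of each group."""
    buffers = defaultdict(lambda: deque(maxlen=window))
    sums = defaultdict(float)
    output = []
    for row in rows:
        key = tuple(row[f] for f in group_fields)
        buf = buffers[key]
        if len(buf) == window:
            # The oldest value is about to be evicted by the append below.
            sums[key] -= buf[0]
        buf.append(row[value_field])
        sums[key] += row[value_field]
        output.append({
            **row,
            "rolling_mean": sums[key] / len(buf),
            # max() rescans the buffer, so it costs O(window) per row.
            "rolling_max": max(buf),
        })
    return output


samples = [
    {"player": "A", "speed": 1.0},
    {"player": "B", "speed": 10.0},
    {"player": "A", "speed": 3.0},
    {"player": "A", "speed": 5.0},
]
rolled = grouped_rolling(samples, ["player"], "speed", window=2)
for line in rolled:
    print(line)
```

For the 20-minute case the call would use `window=12000`; the mean stays cheap at that size, while the max would benefit from a monotonic-queue variant if speed matters.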

- Category Transform

- Desktop Experience
