Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by displaying the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

> and lastly, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
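As a rough illustration of the balanced split requested above, the Python sketch below undersamples every label to the size of the smallest one and then splits each label by the same ratio. The function name `balanced_split`, the `(path, label)` input format and the 80/20 default are assumptions for illustration only; they are not part of the Image Recognition tool.

```python
import random
from collections import defaultdict


def balanced_split(samples, train_fraction=0.8, seed=0):
    """Split (path, label) pairs so every label contributes the same number of images.

    Hypothetical helper: each label is undersampled to the size of the smallest
    label, then divided into training and validation sets by train_fraction.
    """
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)

    per_label = min(len(paths) for paths in by_label.values())
    n_train = int(per_label * train_fraction)
    rng = random.Random(seed)  # fixed seed so the split is reproducible

    train, validation = [], []
    for label in sorted(by_label):
        chosen = rng.sample(by_label[label], per_label)  # random subset of equal size
        train.extend((path, label) for path in chosen[:n_train])
        validation.extend((path, label) for path in chosen[n_train:])
    return train, validation
```

With 10 cat images and 6 dog images, each label is cut down to 6 images, giving 4 + 4 training images and 2 + 2 validation images.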

Question: will you, in the future, allow the user to choose between CPU and GPU usage?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will allow a greater number of people to understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


Please add the Parquet data format (https://parquet.apache.org/) as a read-write option for Alteryx.

Apache Parquet is a columnar storage format available to any project in the Hadoop ecosystem, regardless of the choice of data processing framework, data model or programming language.

Thank you.

Regards,

Cristian.


Could Alteryx create a solution or workaround so that its tools retry queries during Azure DB connectivity outages?

If there are intermittent, transient (short-lived) connection outages with cloud Azure DB, what action can we take in Alteryx to retry the queries?

Example of Azure SQL retry logic guidance:

“2. Applications that connect to a cloud service such as Azure SQL Database should expect periodic reconfiguration events and implement retry logic to handle these errors instead of surfacing these as application errors to users”.

SQL retry logic is a feature that is not currently supported by Alteryx.
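The retry logic described in Microsoft's guidance is commonly implemented as retries with exponential backoff. The Python sketch below shows that general pattern only; it is not an Alteryx feature. `TransientError` is a hypothetical stand-in for whatever driver error signals a short-lived fault (such as a login timeout), and the attempt count and delays are arbitrary assumptions.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical stand-in for a short-lived fault such as a login timeout."""


def retry_with_backoff(
    operation: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call operation(), retrying on TransientError with exponential backoff."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except TransientError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the error to the caller
            # Delay doubles each time (1 s, 2 s, 4 s, ...) up to max_delay; random
            # jitter keeps many clients from retrying at exactly the same moment.
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(delay * random.uniform(0.5, 1.0))
    raise AssertionError("unreachable")
```

Non-transient errors (for example, a wrong password) are deliberately not caught, so they fail immediately instead of being retried.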

For further information please see [ ref:_00DE0JJZ4._5004412Star:ref ]:

Hi Alteryx Support,

We are experiencing intermittent errors with our Alteryx workflows connecting to our Azure production database with Alteryx Designer v2018.4.3.54046.

Is there anything we can do to avoid or work around these intermittent/transient (short-lived) connection errors, such as changing the execution timing or the SQL driver settings?

Or can we incorporate retry logic along the lines of Microsoft's Azure SQL guidance:

“2. Applications that connect to a cloud service such as Azure SQL Database should expect periodic reconfiguration events and implement retry logic to handle these errors instead of surfacing these as application errors to users”.

https://docs.microsoft.com/en-us/azure/sql-database/sql-database-develop-error-messages

https://docs.microsoft.com/en-us/azure/sql-database/sql-database-connectivity-issues

Salesforce Import process, which contains 25 Workflow modules, completed with errors on:

Mon 25/02/2019 23:27

Error 1

2019-02-25 23:11:23:

2.1.18_SF_MailJobDocument_Import.yxmd:

Tool #245: Error opening connect string: Microsoft OLE DB Provider for SQL Server: Login timeout expired\HYT00 = 0; Microsoft OLE DB Provider for SQL Server: Invalid connection string attribute\01S00 = 0.

Error 2

2019-02-25 23:26:31:

2.1.25_SF_ClientActivityParticipant_Import.yxmd:

Tool #258: Error opening connect string: Microsoft OLE DB Provider for SQL Server: Login timeout expired\HYT00 = 0; Microsoft OLE DB Provider for SQL Server: Invalid connection string attribute\01S00 = 0.

Salesforce Import Workflow completed with errors on:

Wed 27/02/2019 23:24

Error 3

2019-02-27 23:06:47:

2.1.17_SF_MailJobs_Import.yxmd:

DataWrap2ODBC::SendBatch: [Microsoft][SQL Server Native Client 11.0]TCP Provider: The specified network name is no longer available.

Regards,

Nigel


Create new connector to pull Salesforce Reports

We are a large company with tens of thousands of employees using Salesforce daily. Over the years, we have worked with Salesforce to make many customizations and create many reports to provide data for various reporting needs. However, we have increasingly found downloading the reports manually to be inefficient and error-prone. Many of our teams use the Salesforce reports as a base for creating additional business insights.

Alteryx is a great tool for managing data ETL and workflows, but it does not support pulling data from Salesforce reports directly; its connectors only pull data from base Salesforce objects. Data from Salesforce objects such as tables can be useful, but it does not necessarily offer the logical view of Salesforce reports, and reconciling it with the reports our users are used to may require a lot of effort. Sometimes it may be impossible to reproduce from Salesforce tables the same data that the Salesforce reports provide. That in turn forces the reporting teams, their audience, and the users of the Salesforce reports to spend a lot of effort matching things up.

Salesforce does not have an out-of-the-box solution for scheduling report downloads. At our request, their support team did some research and did not find a good third-party solution in the Salesforce AppExchange ecosystem that supports this need.

I strongly believe this is a great opportunity for Alteryx. Salesforce already has an API for building custom applications that pull Salesforce reports. However, most Salesforce users are business-oriented and do not necessarily have the appetite to engage their IT staff or external resources to develop such apps and bear the burden of maintaining them.

I have attached the Salesforce Reports and Dashboards API Developer Guide for your reference.

Sincerely,

Vincent Wang


The option to open Hyper files in 2019.4 is great! For some of our use cases it would be even better if we were able to directly open Hyper files that have been published to Tableau Server.

It should be possible to achieve this with the Tableau REST API method Download Data Source, which returns a Tableau Packaged Data Source (.tdsx); that package would then need to be opened as a Zip file to navigate to the contained Hyper file.
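A .tdsx package is a Zip archive, so once the data source has been downloaded (the REST API call itself is not shown here), the Hyper file can be read out without renaming anything. A minimal Python sketch, assuming the package is already on disk; the function name and destination folder are hypothetical:

```python
import zipfile
from pathlib import Path


def extract_hyper_files(tdsx_path, dest_dir):
    """Extract every .hyper member of a .tdsx package into dest_dir.

    Hypothetical helper: a .tdsx is a Zip archive, so zipfile can open it
    directly; member paths inside the package are kept as-is.
    """
    extracted = []
    with zipfile.ZipFile(tdsx_path) as package:
        for member in package.namelist():
            if member.lower().endswith(".hyper"):
                # extract() creates any missing folders and returns the new path
                extracted.append(Path(package.extract(member, dest_dir)))
    return extracted
```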


Enable Alteryx to connect to Oracle databases that are configured to use External Authentication.

This should allow Alteryx workflows to connect to Oracle databases using different authentication mechanisms, e.g. Kerberos.

Please see this discussion for some analysis on what would be required in Alteryx to support Oracle Database External Authentication:

Essentially this would involve Alteryx allowing users to specify that a connection to an Oracle database will utilize external authentication.

Then when connecting to an Oracle database with external authentication, Alteryx would pass the relevant parameter to Oracle to indicate external authentication is required (and Alteryx would not pass user name and password info). Then authentication with the Oracle database would be controlled by the external authentication configuration on the computer running Alteryx.

For more information on Oracle Database External Authentication see:


Hi there,

When connecting to data sources using DCM - could we please add the ability to make JDBC connections?

see:

https://community.alteryx.com/t5/Engine-Works/JDBC-Connections-in-Alteryx/ba-p/968782

As mentioned in these threads, JDBC is very common in large enterprises and in many cases is better supported by the technology teams and developer community, so it is much easier to make a connection. In addition, there are many databases (e.g. DB2) where JDBC connections are simply much easier.

Please could you add JDBC connections to the DCM tooling?

Thank you

Sean

cc: @wesley-siu @_PavelP


With more and more enterprises moving to cloud infrastructure, and Azure being one of the most used platforms, there should be support for its authentication service, Azure Active Directory (AAD).

Currently, if you are using cloud services like Azure SQL Server, the only way to connect is with a SQL login, which in a corporate environment is insecure and creates administrative overhead.

The only workaround I have found so far is to create an ODBC Driver 17 connection that supports AAD authentication and connect to it from Alteryx.
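For reference, a DSN-less connection string for that kind of ODBC Driver 17 workaround might look like the one built below. The server and database names are placeholders, and `ActiveDirectoryIntegrated` is only one of the `Authentication` values the Microsoft ODBC driver accepts (others include `ActiveDirectoryPassword` and `ActiveDirectoryInteractive`); which one applies depends on how your tenant is set up. The helper function is hypothetical.

```python
def azure_sql_odbc_connection_string(server, database, authentication="ActiveDirectoryIntegrated"):
    """Build an ODBC connection string for Azure SQL Database using Azure AD auth.

    Sketch only: server and database are placeholders supplied by the caller.
    """
    parts = {
        "Driver": "{ODBC Driver 17 for SQL Server}",
        "Server": f"tcp:{server},1433",  # Azure SQL Database listens on port 1433
        "Database": database,
        "Authentication": authentication,  # selects the Azure AD sign-in mode
        "Encrypt": "yes",  # Azure SQL Database always uses encrypted connections
    }
    return ";".join(f"{key}={value}" for key, value in parts.items()) + ";"
```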

Please see the post below covering that topic:


Please add a configuration option to the Redshift bulk load to use EITHER access keys OR an IAM EC2 role for access.

We should not have to specify access keys when we are in an IAM enabled environment.

Thanks


With the number of users who use the Publish to Tableau Server macros to automate workflows into Tableau, I think it's about time we had a native tool that publishes to Tableau, instead of the rather painful exercise of figuring out which version of the macro we are using and which version of Tableau Server we are publishing to. The current process is inefficient and frustrating whenever the server changes on either the Tableau or the Alteryx side.


It would be useful if the SharePoint Input tool could be enhanced to support SSO. In my organisation we host a lot of collaborative work on SharePoint sites protected by ADFS authentication, and pulling data from them directly with the SharePoint Input tool is not supported; it is blocked. Adding a feature that lets the tool recognise logins would be very useful.


My specific use case relates to writing to AWS, but I am sure there are many other use cases for federated user session token support.

Specifically, when using the S3 Upload tool or Athena Bulk Write (via Simba and the Athena ODBC driver), the configuration works with an IAM user, access key, and secret access key, but with a federated user via Okta there is no option to enter the session token, and authentication fails.

Alteryx desktop should support federated users' session tokens.


As an Alteryx Designer user, I would like the ability to write .hyper files to a subdirectory on Tableau Server to make my Tableau site easier to manage.


Hi there,

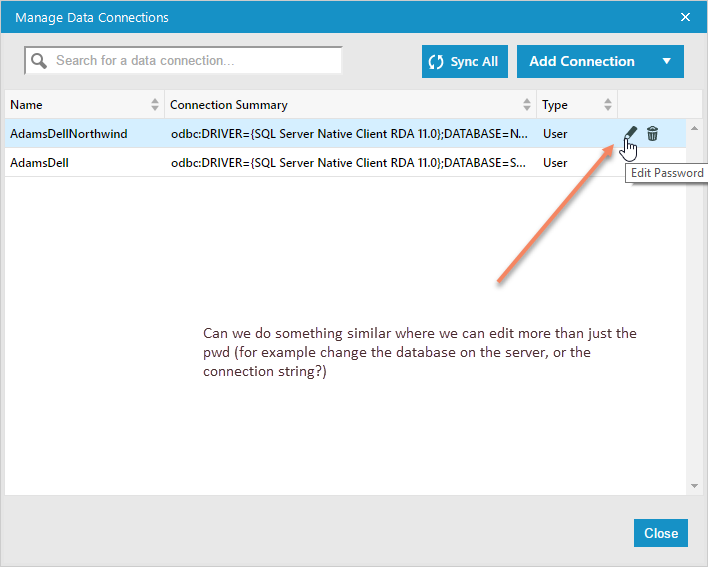

When you use DB connection aliases saved in Alteryx, it's currently not easy to edit them when you move a database to a different location.

Can we have something similar to the "Edit Password" function, but which also allows the user to edit the database or server, so that the change applies to all workflows using this alias?

-

Category Connectors

-

Data Connectors

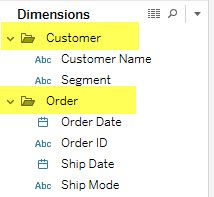

Tableau allows users to do three very useful things to make data more usable for end users, but this functionality is not available with the Publish To Tableau Server tool.

- Foldering of dimensions/measures

- Creating hierarchies out of dimensions

- Adding custom comments to fields that are visible to users when they hover

This functionality allows subject matter experts to create data sources that can be easily understood by everyone within their organizations.

Please "star" this idea if you would like to see functionality in Alteryx that would enable you to create a metadata layer in the "Publish to Tableau Server" tool or in an accompanying tool.


I would like to see the Publish to Tableau Server tool updated to include the option of authenticating with a Personal Access Token in addition to Username/Password. The user would be able to toggle the login method and provide the necessary credentials for that method.
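The Tableau REST API Sign In request already accepts either credential type, so the toggle could map onto two request bodies like the ones below. This Python sketch only builds the JSON payload (sending it to the `auth/signin` endpoint is not shown); the function name and example values are hypothetical.

```python
import json


def tableau_signin_body(site_content_url, *, username=None, password=None,
                        token_name=None, token_secret=None):
    """Build a Tableau REST API sign-in body for either login method.

    Hypothetical helper: pass username/password OR a personal access token
    name/secret, mirroring the login-method toggle requested above.
    """
    if token_name and token_secret:
        credentials = {"personalAccessTokenName": token_name,
                       "personalAccessTokenSecret": token_secret}
    elif username and password:
        credentials = {"name": username, "password": password}
    else:
        raise ValueError("provide username/password or a token name/secret")
    credentials["site"] = {"contentUrl": site_content_url}  # "" means the default site
    return json.dumps({"credentials": credentials})
```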


Request: Google Drive Output Tool to be able to set the maximum records per file and create multiple files

For the regular Alteryx Output Tool, we're able to set a maximum number of records per file. This is helpful in a variety of ways: we use it as part of a workflow where the output gets uploaded into Salesforce, and we can only load 5,000 records at a time. I also use it to split large CSV files so they stay under Excel's ~1M row limit, so that my teammates without Alteryx can open their reports without losing data.

The Google Drive Output tool does not have this ability to split files by number of records. If I use the RecordID Tool plus a Filter, Alteryx crashes due to a bug with RecordID + GDrive Output (it is currently at the Accepted Defect stage).

It would be very helpful to have the same functionality that we have with the regular Output Tool.
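The splitting behaviour itself is simple to describe. The Python sketch below writes local CSV files with at most `max_records` data rows each, repeating the header in every file so each part stands alone. The function name, the `part_001.csv` naming scheme and the 5,000 default (taken from the Salesforce limit mentioned above) are assumptions, and uploading to Google Drive is not shown.

```python
import csv
from pathlib import Path


def write_in_chunks(header, rows, out_dir, max_records=5000, stem="part"):
    """Write rows to numbered CSV files holding at most max_records data rows each.

    Hypothetical helper: files are named <stem>_001.csv, <stem>_002.csv, ...
    """
    if max_records < 1:
        raise ValueError("max_records must be at least 1")
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    paths = []
    for start in range(0, len(rows), max_records):
        path = out_dir / f"{stem}_{len(paths) + 1:03d}.csv"
        with path.open("w", newline="") as f:
            writer = csv.writer(f)
            writer.writerow(header)  # repeat the header so each file stands alone
            writer.writerows(rows[start:start + max_records])
        paths.append(path)
    return paths
```

For example, 12 rows with `max_records=5` produce three files holding 5, 5 and 2 data rows.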


In the normal Output tool, when the file type is CSV, it is possible to select a custom delimiter. It would be great to have the same option in the Azure Data Lake Output tool so that, for example, you can write a pipe-delimited file to your ADLS storage account.


The ‘Existing File Action’ configuration setting needs to address the situation where columns change.

Currently, the available options and their instructions are documented at https://help.alteryx.com/20213/designer/microsoft-power-bi-output-tool.

There are two expectations when using this tool:

1. Reloads are built to completely replace the contents of a dataset, i.e. an Append is not being performed

2. Columns will change over time with continued development

There is therefore a need for an ‘Overwrite (update columns)’ option. However, when this Existing File Action is used, it updates the column names but does not delete the existing contents before the upload. The result is an append onto the existing data, but with the new column names.

I acknowledge that the instructions do not say that existing rows are deleted.

This leaves the need to perform a workaround:

1. Publish with ‘Overwrite (update columns)’.

2. Publish again immediately afterwards with ‘Overwrite (keep existing columns)’.

If step 2 is not done, the data will be appended, which leads to duplicate rows.

Either these Existing File Actions need to be renamed to be clearer as to their operation, or preferably an option that updates the columns and sets up new (non-appended) data is required.


Is there a way to turn individual tools in a workflow on and off? When a tool is turned off, it would not be executed when the workflow runs. This would let us test the workflow and check different outputs without deleting the existing tools; we could simply turn them on or off.


Connect to a PowerPivot data model or an .atomsvc file
