Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea? Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a proper tool to split the training data evenly (so that each label has the same number of images),

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: will users be able to choose between CPU and GPU usage in the future?
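The even split of training data requested above could, for example, be obtained by downsampling every label to the size of the smallest one. The following is only a minimal sketch in Python: the function name `balance_by_label` and the `(image_path, label)` input shape are assumptions made for illustration and are not part of the Image Recognition tool.

```python
import random
from collections import defaultdict


def balance_by_label(samples, seed=0):
    """Downsample so that every label keeps the same number of items.

    `samples` is a list of (image_path, label) pairs. The paths are
    placeholders; no image file is opened here.
    """
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)
    if not by_label:
        return []

    # The smallest class sets the per-label quota.
    quota = min(len(paths) for paths in by_label.values())

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    balanced = []
    for label in sorted(by_label):
        for path in rng.sample(by_label[label], quota):
            balanced.append((path, label))
    return balanced
```

Downsampling discards images from the larger classes; oversampling the smaller classes would be the alternative when data is scarce.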

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will help more people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


Hi all,

The Salesforce Input tool is great, but it has some serious limitations when it comes to reports.

I think there are 2 main limitations:

A - It can only consume 2000 rows due to the REST API limitation. There are plenty of articles about it in the community.

B - Long strings, such as text comments, are cut off after a certain number of characters.

Thanks to this great article: https://community.alteryx.com/t5/Alteryx-Designer-Discussions/Salesforce-Input-Tool-amp-Going-Beyond... , I had the idea of exporting the report to a CSV file and then importing the data into Alteryx.

I've done it using two consecutive Download tools. The first download gets the session ID and the second exports a report as a CSV file into the temp folder. This temp file can then be read using a Dynamic Input workflow.
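For readers outside Alteryx, the same two-step idea can be sketched in Python. This is a hedged illustration, not the exact requests used in the workflow above: the SOAP login endpoint version (`47.0`) and the `?export=1&enc=UTF-8&xf=csv` report URL pattern are assumptions taken from commonly shared workarounds, and the helper names are hypothetical. The functions only build the requests; nothing is sent.

```python
import urllib.request
import xml.etree.ElementTree as ET
from urllib.parse import urlencode
from xml.sax.saxutils import escape

# Assumed API version; adjust to whatever your org supports.
LOGIN_URL = "https://login.salesforce.com/services/Soap/u/47.0"


def build_login_request(username, password_and_token):
    """Step 1: SOAP login request whose response carries the session ID."""
    envelope = (
        '<soapenv:Envelope '
        'xmlns:soapenv="http://schemas.xmlsoap.org/soap/envelope/" '
        'xmlns:urn="urn:partner.soap.sforce.com">'
        "<soapenv:Body><urn:login>"
        f"<urn:username>{escape(username)}</urn:username>"
        f"<urn:password>{escape(password_and_token)}</urn:password>"
        "</urn:login></soapenv:Body></soapenv:Envelope>"
    )
    return urllib.request.Request(
        LOGIN_URL,
        data=envelope.encode("utf-8"),
        headers={"Content-Type": "text/xml; charset=UTF-8", "SOAPAction": "login"},
        method="POST",
    )


def extract_session_id(soap_response_text):
    """Pull the <sessionId> value out of the login response, if present."""
    root = ET.fromstring(soap_response_text)
    for element in root.iter():
        if element.tag == "sessionId" or element.tag.endswith("}sessionId"):
            return element.text
    return None


def build_report_export_request(instance_host, report_id, session_id):
    """Step 2: request the report as CSV, passing the session ID as a cookie."""
    query = urlencode({"export": "1", "enc": "UTF-8", "xf": "csv"})
    url = f"https://{instance_host}/{report_id}?{query}"
    return urllib.request.Request(url, headers={"Cookie": f"sid={session_id}"})
```

Each request would then be sent with `urllib.request.urlopen(...)`, and the body of the second response written to a temporary `.csv` file.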

Long story short, I think Alteryx should upgrade the Salesforce connector to make it more robust and usable. Using the export-to-CSV feature should make Alteryx fully compatible with Salesforce reports.

Regards,

- Category Connectors

- Data Connectors

Single point of maintenance for Salesforce Input tool connection to Salesforce

This would spare users the maintenance needed every time their password (and token) changes, which currently requires them to update every tool with the new credentials.

Also logged as issue under Alteryx, Inc Case # 00252975: Connection to Salesforce Issue

- Category Connectors

- Data Connectors

Hi there,

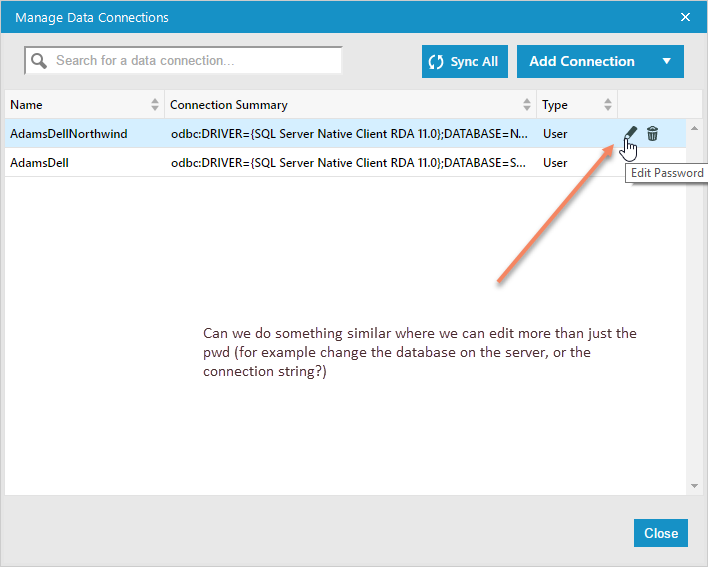

When you use DB connection aliases that are saved in Alteryx, it's currently not easy to edit them when you move a database to a different location.

Can we do something similar to the "Edit Password" function, but which also allows the user to edit the database or server, so that the change applies to all workflows using this alias?

- Category Connectors

- Data Connectors

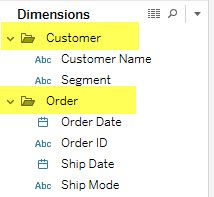

Tableau allows users to do three very useful things to make data more usable for end users, but this functionality is not available with the Publish To Tableau Server tool.

- Foldering of dimensions/measures

- Creating hierarchies out of dimensions

- Adding custom comments to fields that are visible to users when they hover

This functionality allows subject matter experts to create data sources that can be easily understood by everyone within their organizations.

Please "star" this idea if you would like to see functionality in Alteryx that would enable you to create a metadata layer in the "Publish to Tableau Server" tool or in an accompanying tool.

- Category Connectors

- Data Connectors

Hi All,

I was very happy to see the Bulk Loader introduced for Snowflake in the last release. This bulk loader is specifically available for Snowflake environments that are hosted on AWS, but it does not provide functionality for environments using Azure. As Snowflake continues to build momentum, I imagine this will be a common request. Is there something in the pipeline to add this functionality?

For an interim solution, we will be working toward developing some generic scripts/snowsql to mimic that bulk load, but ultimately we'd love to have this as part of the tool.

Best,

devKev

- API SDK

- Category Connectors

- Category Developer

- Category Input Output

I noticed that Tableau has a new connector to Anaplan in the upcoming release.

Does Alteryx have any plans to create an Anaplan connector?

- Category Connectors

- Data Connectors

With the number of users who rely on the Publish to Tableau Server macros to automate workflows into Tableau, I think it's about time we had a native tool that publishes to Tableau, instead of the rather painful exercise of figuring out which version of the macro we are using and which version of Tableau Server we are publishing to. The current process is inefficient, and it is frustrating when the server changes on either the Tableau or the Alteryx side.

- Category Connectors

- Category Input Output

- Data Connectors

For companies that have migrated to OneDrive/Teams for data storage, employees need to be able to dynamically input and output data within their workflows in order to schedule a workflow on Alteryx Server and avoid building batch macros.

With many organizations migrating to OneDrive, a Dynamic Input/Output tool for OneDrive and SharePoint is needed.

- The existing Directory and Dynamic Input tools only work with UNC paths and cannot be leveraged for OneDrive or SharePoint.

- The existing OneDrive and SharePoint tools do not have a dynamic input or output component.

- Users have to build workarounds and custom macros for a common problem/challenge.

- Users have to map the OneDrive folders to their machine (and server if published to the Gallery)

- This option generates a lot of maintenance, especially on Server, to free up space consumed by the local version when outputting the data.

The enhancement should have the following components:

OneDrive/SharePoint Directory Tool

- Ability to read a folder, with the option to include/exclude its subfolders within OneDrive

- Ability to retrieve Creation Date

- Ability to retrieve Last Modified Date

- Ability to identify file type (e.g. .xlsx)

- Ability to read Author

- Ability to read last modified by

- Ability to generate the specific web path for the files
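As an illustration of what one output row of such a Directory tool could look like, the sketch below flattens a single file-metadata record into the fields listed above. It is a hedged example only: the input shape is modeled on the Microsoft Graph `driveItem` JSON resource (`name`, `createdDateTime`, `lastModifiedDateTime`, `createdBy`, `lastModifiedBy`, `webUrl`), and the output column names are hypothetical.

```python
import os


def flatten_drive_item(item):
    """Map one driveItem-style dict to a flat, Directory-tool-like record."""

    def display_name(identity_set):
        # createdBy / lastModifiedBy are nested as {"user": {"displayName": ...}}
        return ((identity_set or {}).get("user") or {}).get("displayName")

    name = item.get("name", "")
    return {
        "FileName": name,
        "FileType": os.path.splitext(name)[1].lower(),  # e.g. ".xlsx"
        "CreationDate": item.get("createdDateTime"),
        "LastModifiedDate": item.get("lastModifiedDateTime"),
        "Author": display_name(item.get("createdBy")),
        "LastModifiedBy": display_name(item.get("lastModifiedBy")),
        "WebPath": item.get("webUrl"),
    }
```

Missing fields come back as `None` rather than raising, so a partially populated listing still produces one row per file.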

OneDrive/SharePoint Dynamic Input Tool

- Receive the input from the OneDrive/SharePoint Directory Tool and retrieve the data.

Dynamic OneDrive/SharePoint Output Tool

- Dynamically write the output from the workflow to a specific directory, as individual files in the same location

- Dynamically write the output to multiple tabs on the same file within the directory.

- Dynamically write the output to a new folder within the directory

- Category Connectors

- Category Input Output

- Data Connectors

I have had multiple instances of needing to parse a set of PDF files. I realize that this has been discussed previously, with workarounds, here: https://community.alteryx.com/t5/Alteryx-Knowledge-Base/Can-Alteryx-Parse-A-Word-Doc-Or-PDF/ta-p/115...

Still, having a native PDF input tool would help me significantly. I don't have admin rights to my computer (at work), so downloading a new app to then use the "Run Command" tool is inconvenient, requires approval from IT, etc. It would save me (and I'm sure others) time, both in the Alteryx workflow each time I need it and in the initial setup to get the PDFtoText program installed.

- Category Connectors

- Category Input Output

- Data Connectors

Please update the Publish to Tableau Server connector tool to support Tableau's Ask Data feature. The data source must be recognized as an extract on Tableau Server in order for the Ask Data feature to work. Currently, all data sources published using version 2.0 of the connector tool are recognized as live data sources. The workaround is cumbersome and requires multiple copies of data sources to be created and managed.

- Category Connectors

- Data Connectors

Pushing data to Salesforce from Oracle would be much easier if we were able to perform an UPSERT (update if existing, insert if not existing) on any unique ID field in Salesforce, instead of having to filter for the records that do or don't have an ID and then run an Update or an Insert based on the filter.
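The upsert semantics described above can be sketched in a few lines of Python. This is an in-memory illustration only, with a plain dict standing in for the Salesforce object; the function name and record shape are assumptions for the example and say nothing about how the connector would implement it.

```python
def upsert(target, records, key):
    """Update rows whose unique ID already exists, insert the others.

    `target` maps unique ID -> record dict and is modified in place.
    Returns the number of inserted and updated rows.
    """
    inserted = 0
    updated = 0
    for record in records:
        record_id = record[key]
        if record_id in target:
            target[record_id].update(record)  # update if existing
            updated += 1
        else:
            target[record_id] = dict(record)  # insert if not existing
            inserted += 1
    return inserted, updated
```

A single pass replaces the filter-then-branch pattern: the caller no longer has to split the data into "has an ID" and "has no ID" streams first.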

- Category Connectors

- Data Connectors

TIBCO Data Virtualization is a Data Virtualization product focused on creating a virtual data store consolidating data from throughout the enterprise. It can be accessed via a SQL query engine, and has a variety of supported connectors, including an ODBC driver.

This data source can be connected to via ODBC in Alteryx today, but error messaging is unclear/unhelpful, and attempting to use the Visual Query Builder causes Alteryx to crash.

Adding TIBCO Data Virtualization as a supported ODBC connection would empower business users to leverage this product and easily utilize this enterprise data store, enhancing the value of the Alteryx platform as a consumer of this data.

- Category Connectors

- Category In Database

- Category Input Output

- Data Connectors

While Alteryx allows for a proxy username and password in the settings, these are not passed properly to an NTLM proxy. Support for NTLM authentication would be incredibly useful for a number of corporations who utilize this firewall setup.

We currently have to either download via Python or cURL through batch commands called by Alteryx. Since Alteryx uses a cURL back-end, this should be a fairly simple addition to the existing download tool by allowing a selection of proxy server, port, and authentication method in addition to the proxy username and password. This could be done either in the tool itself or in User Settings.
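A minimal sketch of the cURL workaround mentioned above, written in Python, might look like the following. The host, port, and credential values are placeholders, and the function names are hypothetical; `--proxy`, `--proxy-ntlm`, and `--proxy-user` are standard curl command-line options.

```python
import subprocess


def build_curl_command(url, proxy_host, proxy_port, username, password, output_path):
    """Build the argument list for a curl download through an NTLM proxy."""
    return [
        "curl",
        "--silent", "--show-error", "--fail",
        "--proxy", f"http://{proxy_host}:{proxy_port}",
        "--proxy-ntlm",                               # use NTLM for proxy auth
        "--proxy-user", f"{username}:{password}",
        "--output", output_path,
        url,
    ]


def download(url, proxy_host, proxy_port, username, password, output_path):
    """Run curl; raises CalledProcessError if the download fails."""
    command = build_curl_command(
        url, proxy_host, proxy_port, username, password, output_path
    )
    subprocess.run(command, check=True)
```

Passing the arguments as a list avoids shell quoting problems, although the password is still visible in the process list while curl runs.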

- API SDK

- Category Connectors

- Category Developer

- Data Connectors

Could Alteryx create a solution or workaround so that its tools retry queries during Azure DB connectivity outages?

If there are intermittent, transient (short-lived) connection outages with a cloud Azure DB, what action can we take in Alteryx to retry the queries?

Examples of retry Azure SQL logic:

“2. Applications that connect to a cloud service such as Azure SQL Database should expect periodic reconfiguration events and implement retry logic to handle these errors instead of surfacing these as application errors to users”.

SQL retry logic is a feature that is not currently supported by Alteryx.
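To make the request concrete, retry logic of this kind is commonly written as a loop with exponential backoff. The Python sketch below is only an illustration under stated assumptions: the helper names are hypothetical, and detecting a transient failure by matching message text (the two messages are taken from the error log further down) is a simplification of what a real driver integration would do.

```python
import time

# Message fragments treated as transient; taken from the errors in this post.
TRANSIENT_MARKERS = (
    "Login timeout expired",
    "The specified network name is no longer available",
)


def looks_transient(error):
    """Heuristic: does the error message match a known transient failure?"""
    message = str(error)
    return any(marker in message for marker in TRANSIENT_MARKERS)


def run_with_retry(operation, is_transient=looks_transient,
                   max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call `operation`, retrying transient failures with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except Exception as error:
            # Give up on the last attempt or on a non-transient error.
            if attempt == max_attempts or not is_transient(error):
                raise
            sleep(base_delay * 2 ** (attempt - 1))  # 1s, 2s, 4s, ...
```

Here `operation` would wrap the query execution; non-transient errors such as a bad column name are re-raised immediately instead of being retried.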

For further information please see [ ref:_00DE0JJZ4._5004412Star:ref ]:

Hi Alteryx Support,

We are experiencing intermittent errors with our Alteryx workflows connecting to our Azure production database with Alteryx Designer v2018.4.3.54046.

Is there anything we can do to avoid or work around these intermittent / transient (short-lived) connection errors, such as changing the execution timing or the SQL driver settings?

Or can we incorporate examples of retry Azure SQL logic:

“2. Applications that connect to a cloud service such as Azure SQL Database should expect periodic reconfiguration events and implement retry logic to handle these errors instead of surfacing these as application errors to users”.

https://docs.microsoft.com/en-us/azure/sql-database/sql-database-develop-error-messages

https://docs.microsoft.com/en-us/azure/sql-database/sql-database-connectivity-issues

Salesforce Import process, which contains 25 Workflow modules, completed with errors on:

Mon 25/02/2019 23:27

Error 1

2019-02-25 23:11:23:

2.1.18_SF_MailJobDocument_Import.yxmd:

Tool #245: Error opening connect string: Microsoft OLE DB Provider for SQL Server: Login timeout expired\HYT00 = 0; Microsoft OLE DB Provider for SQL Server: Invalid connection string attribute\01S00 = 0.

Error 2:

2019-02-25 23:26:31:

2.1.25_SF_ClientActivityParticipant_Import.yxmd:

Tool #258: Error opening connect string: Microsoft OLE DB Provider for SQL Server: Login timeout expired\HYT00 = 0; Microsoft OLE DB Provider for SQL Server: Invalid connection string attribute\01S00 = 0.

Salesforce Import Workflow completed with errors on:

Wed 27/02/2019 23:24

Error 3

2019-02-27 23:06:47:

2.1.17_SF_MailJobs_Import.yxmd:

DataWrap2ODBC::SendBatch: [Microsoft][SQL Server Native Client 11.0]TCP Provider: The specified network name is no longer available.

Regards,

Nigel

- Category Connectors

- Data Connectors

Is there a way we can turn any tool in the workflow on or off? That way, when we run the workflow, a tool that is marked as off is not executed. We could then test the workflow and check different outputs without deleting existing tools; we would just turn them on or off.

- Category Connectors

- Category Transform

- Data Connectors

- Desktop Experience

It would be great to have the below functionality in Alteryx.

A workflow is built in Alteryx, and a button click in Alteryx generates SQL code that can be run on a specific database platform, such as SQL Server, from an external editor such as SQL Server Management Studio. Thanks.

- API SDK

- Category Connectors

- Category Developer

- Category In Database

Our company is implementing an Azure Data Lake and we have no way of connecting to it efficiently with Alteryx. We would like to push data into the Azure Data Lake store and also pull it out with the connector. Currently, there is not an out-of-the-box solution in Alteryx and it requires a lot of effort to push data to Azure.

- Category Connectors

- Data Connectors

As Tableau has continued to open more APIs with their product releases, it would be great if these could be exposed via Alteryx tools.

One that I think would make a great tool is the Tableau Document API, which allows for things like:

- Getting connection information from data sources and workbooks (Server Name, Username, Database Name, Authentication Type, Connection Type)

- Updating connection information in workbooks and data sources (Server Name, Username, Database Name)

- Getting Field information from data sources and workbooks (Get all fields in a data source, Get all fields in use by certain sheets in a workbook)

For those of us who use Alteryx to automate much of our Tableau work, having an easy tool to read and write this info (instead of writing Python scripts) would be beneficial.
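To show the kind of Python scripting this currently takes, here is a hedged sketch that reads connection information straight from workbook XML with the standard library instead of the Document API itself. It assumes a simplified `.twb` layout in which `<datasource>` elements contain `<connection>` elements carrying `class`, `server`, `dbname`, and `username` attributes; real workbooks can be more deeply nested, and the function name is hypothetical.

```python
import xml.etree.ElementTree as ET


def list_connections(twb_xml):
    """Return one dict per <connection> found under each <datasource>."""
    root = ET.fromstring(twb_xml)
    rows = []
    for datasource in root.iter("datasource"):
        # iter() also finds connections nested inside other elements.
        for connection in datasource.iter("connection"):
            rows.append({
                "datasource": datasource.get("name"),
                "connection_type": connection.get("class"),
                "server": connection.get("server"),
                "dbname": connection.get("dbname"),
                "username": connection.get("username"),
            })
    return rows
```

For a file on disk, the text would first be read with `open(path, encoding="utf-8").read()`; updating a connection would mean setting the attribute and writing the tree back out.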

- Category Connectors

- Category Reporting

- Data Connectors

- Desktop Experience

It would be useful if the SharePoint Input tool could be enhanced to support SSO. In my organisation we host a lot of collaborative work on SharePoint sites protected by ADFS authentication. Pulling data directly from them with the SharePoint Input tool is not supported; it is blocked. Adding this feature so that the tool recognises logins would be very useful.

- Category Connectors

- Data Connectors

In order to make the connections between Alteryx and Snowflake even more secure, we would like to be able to connect to Snowflake with OAuth in an easier way.

Connecting to Snowflake via OAuth is very similar to the connections Alteryx already makes to O365 applications. It requires:

- Tenant URL

- Client ID

- Client Secret

- Get the Authorization Code provided by the Snowflake authorization endpoint.

- Give access consent (a browser popup will appear)

- With the Authorization Code, the Client ID and the Client Secret, make a call to retrieve the Refresh Token and the TTL information for the tokens

- Get a new Access Token every time it expires

With this, an automated workflow using OAuth between Alteryx and Snowflake will be possible.
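The steps listed above can be sketched in Python as follows. This is a hedged outline rather than a working connector: the `/oauth/authorize` and `/oauth/token-request` paths and the form fields are assumptions based on Snowflake's OAuth documentation and should be checked against your account setup, the helper names are hypothetical, and the functions only build the URL and requests without sending them.

```python
import base64
import urllib.request
from urllib.parse import urlencode


def authorization_url(tenant_url, client_id, redirect_uri):
    """URL to open in a browser so the user can give access consent."""
    query = urlencode({
        "client_id": client_id,
        "response_type": "code",
        "redirect_uri": redirect_uri,
    })
    return f"{tenant_url.rstrip('/')}/oauth/authorize?{query}"


def code_exchange_form(authorization_code, redirect_uri):
    """Form fields to trade the authorization code for tokens."""
    return {
        "grant_type": "authorization_code",
        "code": authorization_code,
        "redirect_uri": redirect_uri,
    }


def refresh_form(refresh_token):
    """Form fields to get a new access token when the old one expires."""
    return {"grant_type": "refresh_token", "refresh_token": refresh_token}


def token_request(tenant_url, client_id, client_secret, form):
    """POST request to the token endpoint, authenticated with the client pair."""
    pair = f"{client_id}:{client_secret}".encode("utf-8")
    credentials = base64.b64encode(pair).decode("ascii")
    return urllib.request.Request(
        f"{tenant_url.rstrip('/')}/oauth/token-request",
        data=urlencode(form).encode("utf-8"),
        headers={
            "Authorization": f"Basic {credentials}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
        method="POST",
    )
```

A scheduled workflow would store the refresh token after the one-time consent step and call `token_request(..., refresh_form(...))` whenever the access token's TTL has elapsed.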

You can find a more detailed explanation in the attached document.

- Category Connectors

- Data Connectors
