Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by showing the input dimension constraints next to each of the pre-trained models;

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images);

> and finally, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool says it requires RGB images).
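The balanced split and the black & white support requested above can be sketched in Python. This is a minimal sketch under stated assumptions, not how the Image Recognition tool works: `balanced_split` and `gray_to_rgb` are hypothetical helpers, balancing is done by downsampling every label to the size of the rarest one (oversampling or augmentation are other options), and a grayscale image is represented as a plain list of rows of intensities.

```python
import random
from collections import defaultdict


def balanced_split(samples, test_fraction=0.2, seed=0):
    """Split (item, label) pairs so that every label contributes the same
    number of training and test items.

    Balancing is done by downsampling: every label is cut down to the size
    of the rarest label before splitting.
    """
    by_label = defaultdict(list)
    for item, label in samples:
        by_label[label].append(item)

    rng = random.Random(seed)  # fixed seed for a reproducible split
    per_label = min(len(items) for items in by_label.values())
    n_test = int(per_label * test_fraction)

    train, test = [], []
    for label, items in by_label.items():
        chosen = rng.sample(items, per_label)  # same count for every label
        test.extend((x, label) for x in chosen[:n_test])
        train.extend((x, label) for x in chosen[n_test:])
    return train, test


def gray_to_rgb(image):
    """Turn a single-channel image (a list of rows of intensities) into an
    RGB image by copying each intensity into the R, G and B channels."""
    return [[(v, v, v) for v in row] for row in image]
```

Copying one channel into R, G and B is a common workaround when a model only accepts RGB input; it adds no information, it only matches the expected shape.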

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will help more people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


Hello all,

According to Wikipedia:

https://en.wikipedia.org/wiki/Join_(SQL)

CROSS JOIN returns the Cartesian product of rows from tables in the join. In other words, it will produce rows which combine each row from the first table with each row from the second table.[1]

Example of an explicit cross join:

```sql
SELECT *
FROM employee CROSS JOIN department;
```

Example of an implicit cross join:

```sql
SELECT *
FROM employee, department;
```

The cross join can be replaced with an inner join with an always-true condition:

```sql
SELECT *
FROM employee INNER JOIN department ON 1=1;
```

For us Alteryx users, this would be very similar to the Append Fields tool, but for In-DB.
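The equivalence of the three queries above can be checked with Python's built-in `sqlite3` module, used here only as a convenient stand-in for an in-database engine; the table contents are made up for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE employee (name TEXT);
    CREATE TABLE department (dept TEXT);
    INSERT INTO employee VALUES ('Ann'), ('Bob'), ('Cid');
    INSERT INTO department VALUES ('Sales'), ('IT');
""")

# The three spellings of a Cartesian product quoted above.
queries = {
    "explicit": "SELECT * FROM employee CROSS JOIN department",
    "implicit": "SELECT * FROM employee, department",
    "inner_on_true": "SELECT * FROM employee INNER JOIN department ON 1=1",
}

# Sort the rows so that the comparison does not depend on row order.
results = {name: sorted(conn.execute(sql).fetchall()) for name, sql in queries.items()}
```

Each form returns 3 × 2 = 6 rows, every employee paired with every department, which is the same row multiplication the Append Fields tool performs in-memory.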

Best regards,

Simon

I love the Text to Columns tool, but I think it would be improved by an option to create one column per delimited value. This could be added to the number of columns selector box. Where one row has more delimiters than another, the missing columns would be filled with nulls. Without this option, you have to count the delimiters with a Regex, select the maximum count, embed the Text to Columns tool in a macro and then pass the maximum number of columns as a parameter. It would be nice to handle all this in the main tool.

Thanks, Nick
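The workaround described above (count the delimiters, take the maximum, split into that many columns and pad with nulls) boils down to the following Python sketch. `split_to_max_columns` is a hypothetical name and `None` stands in for a null cell; it illustrates the requested behavior, not the tool's implementation.

```python
def split_to_max_columns(rows, delimiter=","):
    """Split each row on `delimiter`, creating as many columns as the row with
    the most delimiters needs; shorter rows are padded with None (null)."""
    # Step 1: count the delimiters in each row (the "Regex count" step).
    counts = [row.count(delimiter) for row in rows]
    # Step 2: n delimiters produce n + 1 values, so the widest row sets the width.
    width = max(counts, default=0) + 1
    # Step 3: split every row and pad it with nulls up to the common width.
    return [
        parts + [None] * (width - len(parts))
        for parts in (row.split(delimiter) for row in rows)
    ]
```

For example, `["a,b", "c", "d,e,f"]` becomes three columns, with the first two rows padded by one and two nulls respectively.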

Hello,

We use the Pre-SQL statement of the Input tool to set some connection parameters. Sadly, we cannot do that in an In-DB workflow. This feature would be a total game-changer for us.

Best Regards,

Simon

I'd like to see Alteryx allow a second install of your license on a second, personal machine. Tableau allows this, and IMO that is why there is such a robust online and blogging community around that product.

For those of us who work at mid-size to large organizations, there are often strict rules governing internal data and the use of cloud-based data sources. If I discover a new trick I'd like to share with fellow Alteryx analysts outside my company, I have no clear way to do that, whereas with Tableau I can do it at home without using my company's data.

Being able to learn new features and test things out on commonly available public data (ever notice the Superstore data set that everyone who gets Tableau has?) would accelerate what we're able to do with the community site here and the larger analytics blogging community.

From Wikipedia:

In a database, a view is the result set of a stored query on the data, which the database users can query just as they would in a persistent database collection object. This pre-established query command is kept in the database dictionary. Unlike ordinary base tables in a relational database, a view does not form part of the physical schema: as a result set, it is a virtual table computed or collated dynamically from data in the database when access to that view is requested. Changes applied to the data in a relevant underlying table are reflected in the data shown in subsequent invocations of the view. In some NoSQL databases, views are the only way to query data.

Views can provide advantages over tables:

Views can represent a subset of the data contained in a table. Consequently, a view can limit the degree of exposure of the underlying tables to the outer world: a given user may have permission to query the view, while denied access to the rest of the base table.

Views can join and simplify multiple tables into a single virtual table.

Views can act as aggregated tables, where the database engine aggregates data (sum, average, etc.) and presents the calculated results as part of the data.

Views can hide the complexity of data. For example, a view could appear as Sales2000 or Sales2001, transparently partitioning the actual underlying table.

Views take very little space to store; the database contains only the definition of a view, not a copy of all the data that it presents.

Depending on the SQL engine used, views can provide extra security.

I would like to be able to create a view instead of a table.
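A small illustration of several of the view properties listed above, using Python's `sqlite3` module as a stand-in engine; the `sales` table, its columns and its rows are invented for the example. The view exposes only two columns, filters to one year (in the spirit of the Sales2000 example) and reflects rows added to the base table after the view was created. SQLite has no user permissions, so the security aspect is not shown.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE sales (region TEXT, year INTEGER, amount REAL, customer_email TEXT);
    INSERT INTO sales VALUES ('North', 2000, 10.0, 'a@x'), ('South', 2001, 20.0, 'b@x');
    -- Only the defining query is stored; customer_email is not exposed.
    CREATE VIEW sales2000 AS
        SELECT region, amount FROM sales WHERE year = 2000;
""")

before = conn.execute("SELECT * FROM sales2000").fetchall()

# A change to the base table shows up in the next query against the view.
conn.execute("INSERT INTO sales VALUES ('East', 2000, 5.0, 'c@x')")
after = conn.execute("SELECT * FROM sales2000 ORDER BY region").fetchall()
view_columns = [d[0] for d in conn.execute("SELECT * FROM sales2000").description]
```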

I constantly find myself using Pre- and Post-SQL commands in the Output tool to run SQL when I don't actually have any data to output.

One example is when I load data into S3 and then want to load it into Redshift. I have SQL code to run but no data to output, so I end up writing a dummy row into a temp table.

So can we have an SQL tool that acts like a Pre-SQL command without the associated data output? Once the command has run, the workflow should be able to continue, so the tool should have an optional input and output, like the Run Command tool.

Idea: Allow the user to set the data type including character field width in the Text Input tool.

The Text Input tool currently auto-detects the type and width of each field. However, this sometimes restricts how the data can be used downstream.

Examples:

1 - I often run into the situation where I've copied some data from a browse tool and then pasted that as an input to a new workflow. Then I'll turn that workflow into a macro. But then I run into an issue where the data that comes into the macro is larger than the original width in the Text Input tool. This causes problems.

2 - The tool decides that a field containing zip codes should be numeric and converts the data. This corrupts the data (leading zeros are dropped) and forces me to insert a Select/Formula tool combination to pad the zeros back on the left.
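The zip-code corruption in example 2 and its fix can be shown in a few lines of Python. This is a sketch that assumes 5-digit US ZIP codes; `restore_zip` is a hypothetical helper, not an Alteryx function.

```python
def restore_zip(value, width=5):
    """Re-pad a ZIP code that was auto-detected as a number.

    Assumes 5-digit US ZIP codes by default; pass another width if needed.
    """
    return str(int(value)).zfill(width)


# Numeric conversion drops the leading zero: "02134" becomes 2134.
corrupted = int("02134")
restored = restore_zip(corrupted)
```

Keeping the field as a string in the first place avoids the round trip entirely, which is what the requested data-type option would allow.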

A common problem with the R tool is that it outputs "false errors" like the following: "The R.exe exit code (4294967295) indicated an error"

I call this a false error because data passes out of the R script the same as if there were no error. As such, this error can generally be ignored. In my use case, however, my R tool is embedded within an iterative macro, and the error causes the iterator to stop running.

I was able to create a workaround by moving the R tool to a separate workflow and calling it from the CReW runner macro within my iterator, effectively suppressing the error message, but this solution is a bit clumsy, requires unnecessary read/writes, and uses nonstandard macros.

I propose the solution suggested by @mbarone (https://community.alteryx.com/t5/Alteryx-Designer-Discussions/Boosted-Model-Error/td-p/5509) to only generate an error when the R return code is 1, indicating a true error, and to either ignore these false errors or pass them as warnings. This will allow R scripts and R-based tools to be embedded within iterative macros without breaking.
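The arithmetic behind the "false error" and the proposed handling can be sketched in Python. 4294967295 is 2^32 - 1, i.e. the value -1 read as an unsigned 32-bit integer (Windows reports process exit codes as unsigned 32-bit values). The helper names are hypothetical, and the classification simply encodes the rule proposed above: exit code 1 is an error, any other non-zero code becomes a warning.

```python
def to_signed32(code):
    """Reinterpret an unsigned 32-bit exit code as a signed integer."""
    return code - 2**32 if code >= 2**31 else code


def classify_r_exit(code):
    """Proposed handling: only exit code 1 is treated as a real error."""
    if code == 0:
        return "ok"
    if code == 1:
        return "error"
    # Anything else (e.g. 4294967295, which is -1 as a signed 32-bit value)
    # is passed on as a warning so that an iterative macro keeps running.
    return "warning"
```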

At the moment, containers either expand and overlap other tools, or you have to leave space for them (defeating the original purpose of using them). Could container expansion shift the workflow so that the other tools move down or right to make room?

The .bak file that is automatically created (and re-created if deleted) really clutters our folders.

Please allow us to either turn it off or specify a different location for our backup files.

Thanks

I would like to suggest a fix that allows the In-DB Connect tool's custom SQL to read Common Table Expressions (CTEs). As of 2018.2, the SQL fails because In-DB tools wrap everything in a SELECT * statement. Since a CTE must start with WITH, this causes the SQL to error out. This would be a huge help compared to having to write nested sub-selects in long, complex SQL!
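To illustrate the rewrite the idea wants to avoid, here is the same query written with a CTE and as a nested sub-select, run with Python's `sqlite3` module as a stand-in engine (the `orders` table is invented). Both return the same rows; the CTE form is easier to read and maintain, while the nested form is what survives being wrapped in `SELECT * FROM (...)` on engines that do not accept a WITH clause inside a subquery.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders (customer TEXT, amount REAL);
    INSERT INTO orders VALUES ('a', 10), ('a', 5), ('b', 1);
""")

# Readable version: name the intermediate result with a CTE.
cte_sql = """
    WITH totals AS (
        SELECT customer, SUM(amount) AS total FROM orders GROUP BY customer
    )
    SELECT customer FROM totals WHERE total > 2
"""

# Equivalent version with a nested sub-select instead of a CTE.
nested_sql = """
    SELECT customer FROM (
        SELECT customer, SUM(amount) AS total FROM orders GROUP BY customer
    ) AS totals
    WHERE total > 2
"""

cte_rows = conn.execute(cte_sql).fetchall()
nested_rows = conn.execute(nested_sql).fetchall()
```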

Many databases (Hive, Vertica, etc.) use statistics to speed up queries. It would be useful to have an option on the Write Data In-DB or Data Stream In tools to compute statistics (something like a check box).

Example on Hive: `ANALYZE TABLE {table} COMPUTE STATISTICS;` and `ANALYZE TABLE {table} COMPUTE STATISTICS FOR COLUMNS;`
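A sketch of what such an option could run after a write. `hive_statistics_sql` is a hypothetical helper that just emits the two Hive statements above; since Hive is not available here, the runnable part uses SQLite's `ANALYZE` command as an analogue, which stores its results in the `sqlite_stat1` table.

```python
import sqlite3


def hive_statistics_sql(table):
    """Statements a hypothetical "compute statistics" check box could run
    on Hive after writing `table` (the two commands quoted above)."""
    return [
        f"ANALYZE TABLE {table} COMPUTE STATISTICS;",
        f"ANALYZE TABLE {table} COMPUTE STATISTICS FOR COLUMNS;",
    ]


# Runnable analogue in SQLite: write a table, then compute statistics on it.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE t (x INTEGER)")
conn.execute("CREATE INDEX idx_t_x ON t (x)")
conn.executemany("INSERT INTO t VALUES (?)", [(i % 10,) for i in range(100)])
conn.commit()
conn.execute("ANALYZE")  # gathers statistics into the sqlite_stat1 table
stats = conn.execute("SELECT tbl, idx FROM sqlite_stat1").fetchall()
```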

Hi,

Many companies now deploy on both AWS and Microsoft Azure.

Alteryx supports AWS S3 object storage out of the box; it would be important to support Microsoft Azure Blob Storage natively in Alteryx as well.

Cheers,

Adrian

It would be great if we could have a Windows Active Directory data connector tool added to the standard Alteryx toolset.

MS Excel Power Query and PowerBI both can connect to Active Directory for use as a data source, but are both very cumbersome to use. Having a connector in Alteryx that can read AD data into a workflow would be super helpful for a long list of use cases. A couple that are top of mind for me are:

- Leveraging group membership info for dynamic distribution of reports or datasets

- Being able to build reporting and dashboards about the organization (useful for tech audit, HR, etc.)

I've seen links to an old GitHub project where someone started developing this, but the method (just copy these random .dlls into your program directory) is seriously frowned upon by any enterprise IT department. It would be great if Alteryx could pick up that work, polish it a bit and add it to the actual Alteryx Designer toolset.

When using the Output Data tool, it would save me (and my limited organizational skills) a lot of effort if the path of the workflow that wrote the file were saved as part of the yxdb metadata.

I've often had to search for the workflow that created a yxdb. I use naming conventions to help, but it would be easier if the workflow's file name and/or path could be found directly.

cheers,

Mark

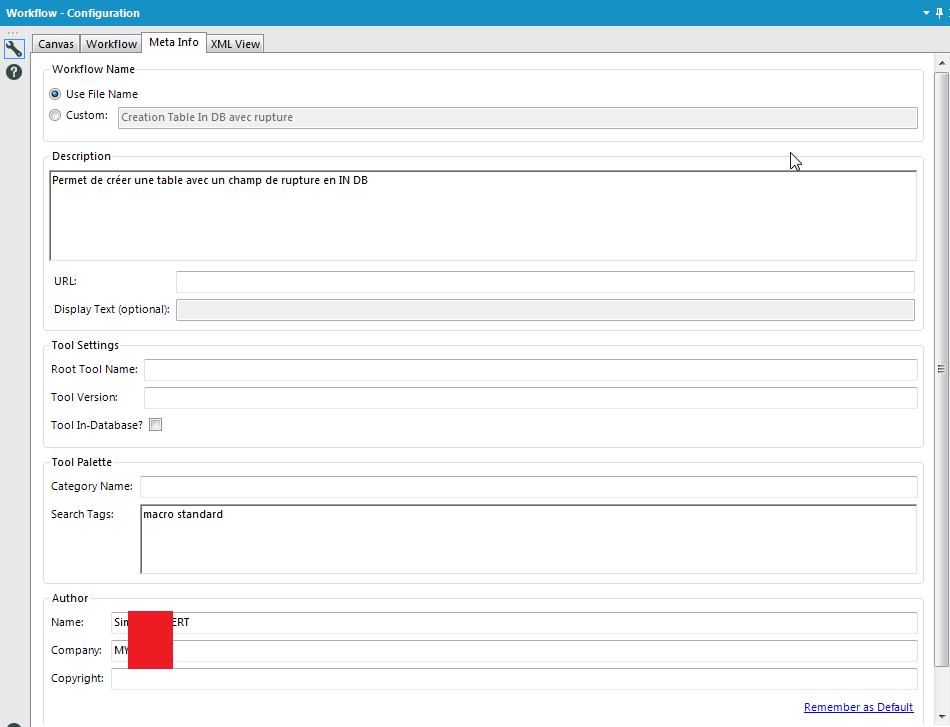

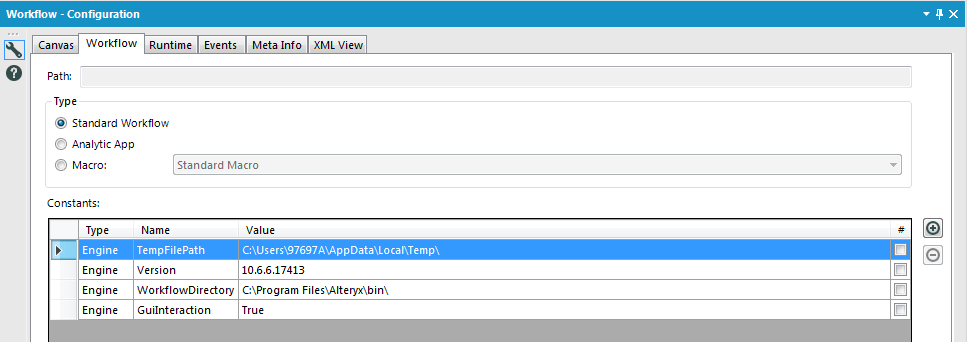

I love Workflow Meta Info, especially the ability to set the Author, the search tags, the version, the description, etc.

But why can't we use it as Engine Constants? It doesn't seem very hard to implement, and it would make development much easier.

I noticed in the ODBC driver log that Alteryx ignores the database type I specify. It tests every kind of database to find the right one and only THEN runs the queries to get the metadata.

Here is an example. I chose a Hive In-DB connection, and in the Simba logs I find these lines:

```
Mar 01 11:37:21.318 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select USER(), APPLICATION_ID() from system.iota
Mar 01 11:37:22.863 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select USER as USER_NAME from SYSIBM.SYSDUMMY1
Mar 01 11:37:23.454 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select * from rdb$relations
Mar 01 11:37:23.546 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select first 1 dbinfo('version', 'full') from systables
Mar 01 11:37:23.707 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select #01/01/01# as AccessDate
Mar 01 11:37:23.868 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: exec sp_server_info 1
Mar 01 11:37:24.093 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select top (0) * from INFORMATION_SCHEMA.INDEXES
Mar 01 11:37:24.219 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: SELECT SERVERPROPERTY('edition')
Mar 01 11:37:24.423 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select DATABASE() as `database`, VERSION() as `version`
Mar 01 11:37:24.635 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select * from sys.V_$VERSION at where RowNum<2
Mar 01 11:37:25.230 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select cast(version() as char(10)), (select 1 from pg_catalog.pg_class) as t
Mar 01 11:37:25.415 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select NAME from sqlite_master
Mar 01 11:37:25.756 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select xp_msver('CompanyName')
Mar 01 11:37:26.156 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select @@version
Mar 01 11:37:26.376 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select * from dbc.dbcinfo
Mar 01 11:37:26.522 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: SELECT @@VERSION;
```

I can understand that Alteryx tries everything when it doesn't know the database type (e.g. in the in-memory Visual Query Builder), but here I have selected Hive, and I lose more than 5 seconds for nothing.
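The "more than 5 seconds" figure can be checked from the log excerpt above with a few lines of Python, followed by a hypothetical sketch of the proposed behavior (`probes_to_run` is an illustrative name, not an Alteryx or Simba API).

```python
from datetime import datetime

# Timestamps of the 16 "Incoming SQL" probe lines in the Simba log above.
PROBE_TIMESTAMPS = [
    "Mar 01 11:37:21.318", "Mar 01 11:37:22.863", "Mar 01 11:37:23.454",
    "Mar 01 11:37:23.546", "Mar 01 11:37:23.707", "Mar 01 11:37:23.868",
    "Mar 01 11:37:24.093", "Mar 01 11:37:24.219", "Mar 01 11:37:24.423",
    "Mar 01 11:37:24.635", "Mar 01 11:37:25.230", "Mar 01 11:37:25.415",
    "Mar 01 11:37:25.756", "Mar 01 11:37:26.156", "Mar 01 11:37:26.376",
    "Mar 01 11:37:26.522",
]


def parse(ts):
    # The log lines carry no year; "2000" is a placeholder that only makes the
    # date complete for strptime and cancels out in the subtraction below.
    return datetime.strptime("2000 " + ts, "%Y %b %d %H:%M:%S.%f")


times = [parse(ts) for ts in PROBE_TIMESTAMPS]
# First probe to the start of the last one: a lower bound on the wasted time,
# since the last probe's own duration is not in the excerpt.
elapsed = (times[-1] - times[0]).total_seconds()


def probes_to_run(selected_type, all_probes):
    """Proposed behavior (hypothetical): probe only when no type was chosen."""
    return [] if selected_type else list(all_probes)
```

The 16 probes span 5.204 seconds from the first to the start of the last, consistent with the delay described above.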

I propose another wildcard, %ErrorLog%, that would simply output the error codes and narratives instead of having to use the %OutputLog% to see these. I'd rather not have a 4 MB text email depicting every line of code and action in the module when all I really need to see are the errors.
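A minimal sketch of what such an %ErrorLog% wildcard could contain, written in Python. It assumes, hypothetically, that error lines in the output log start with the word "Error"; the real log layout may differ, so the marker is a parameter, and `error_log` is an illustrative name.

```python
def error_log(output_log, marker="Error"):
    """Keep only the lines of a run log that report errors.

    Assumes (hypothetically) that error lines start with `marker`, ignoring
    leading whitespace; the real log format may differ.
    """
    return "\n".join(
        line for line in output_log.splitlines()
        if line.lstrip().startswith(marker)
    )
```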

Hi there,

My idea came while building an application where the user selects a filter from a drop-down list. The list contains thousands of records, so it takes a long time to find the desired one.

In Excel and MS Access, when you use a filter you can type several letters and the filter shows the rows that match the input. In Alteryx, the user can only type the first letter, which is a huge drawback for my users.

This is how it works in Excel:

Hope you like it!
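The requested type-ahead behavior is essentially a case-insensitive "contains" filter over the drop-down values, which is one reasonable reading of the Excel behavior described above. A minimal Python sketch (`filter_options` is a hypothetical name, and the city names are sample data):

```python
def filter_options(options, typed):
    """Return the drop-down values containing the typed text anywhere,
    ignoring case; an empty input keeps every value."""
    needle = typed.casefold()
    return [option for option in options if needle in option.casefold()]


cities = ["Paris", "Marseille", "Lyon"]
matches = filter_options(cities, "AR")  # both "Paris" and "Marseille" contain "ar"
```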

Desperately looking for a way to connect to SQL Server Analysis Services through Alteryx, as more and more of the large datasets from our older systems are moving there over the next few months. We can connect using Power BI with limitations (connecting 'Live' does not allow merges, and connecting with Import requires MDX or DAX syntax). We also run into import and export limitations. We are not allowed access to the underlying tables, only to the tables with the measures, dimensions and fields. Power BI is a big step up from pivot tables, but Alteryx would be so much better. Ideas for connecting this up are welcome!
