Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> at least, allow the tool to use black & white images (I wanted to test it on the MNIST, but the tool tells me that it necessarily needs RGB images) ?

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will help a greater number of people understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


I would really love to have a tool "Dynamic change type" or "Dynamic re-type" which is used just as "Dynamic Rename".

- "Take Type from First Row of Data": By definition, all columns are of a string type initially. Sets the type of the column according to the string in the first row of data.

| Col 1 | Col 2 | Col 3 | Col 4 |
|---|---|---|---|
| Double | Int32 | V_String | Date |
| 123.456 | 17 | Hello | 2023-10-30 |
| 3.4e17 | 123 | Bye | 2024-01-01 |

- "Take Type from Right Input Metadata": Changes the types of the left input table to those of the right input.

- "Take Type from Right Input Rows": Changes the types based on a table with columns "Name" and "New Type".

| Name | New Type |
|---|---|
| Col 1 | Double |
| Col 2 | Int32 |
| Col 3 | V_String |
| Col 4 | Date |
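To make the first option concrete, here is a minimal Python sketch of the "Take Type from First Row of Data" behaviour. It is only an illustration outside Alteryx: the `CONVERTERS` mapping and the `retype_from_first_row` helper are hypothetical names, and only the four types from the example table are covered.

```python
from datetime import date

# Hypothetical mapping from the type names in the example to Python converters.
CONVERTERS = {
    "Double": float,
    "Int32": int,
    "V_String": str,
    "Date": date.fromisoformat,
}

def retype_from_first_row(header, rows):
    """Read type names from rows[0] and convert every remaining row accordingly."""
    type_names, data = rows[0], rows[1:]
    casts = [CONVERTERS[name] for name in type_names]
    return [
        {col: cast(value) for col, cast, value in zip(header, casts, row)}
        for row in data
    ]

header = ["Col 1", "Col 2", "Col 3", "Col 4"]
rows = [
    ["Double", "Int32", "V_String", "Date"],       # first row carries the types
    ["123.456", "17", "Hello", "2023-10-30"],
    ["3.4e17", "123", "Bye", "2024-01-01"],
]
typed = retype_from_first_row(header, rows)
print(typed[0])
```

The "Right Input Rows" option would work the same way, except that the type names would come from the "Name" / "New Type" table instead of the first data row.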

It would be very helpful to be able to output a workflow as a step-by-step document, so that someone who does not have access to Alteryx can understand the steps taken to create the flow and hence the result or output.

Would be nice to have a way to cache-uncache all inputs or a selected group of tools. Caching and Uncaching workflows with many input tools or slow data-read tools gets to be a bit cumbersome. Would be a nice QoL improvement :)

I looked around for something like this but didn't see a solution, so thought I'd recommend. Please let me know if something like this exists already natively in designer desktop.

Hello All,

I'm using the dynamic input tool for SQL requests in my Workflow (WF).

I'm using the "Replace a Specific String" option to replace elements in the SQL statement dynamically, depending on the results of previous tools, user input, etc.

So the statement looks like

select * from Schema_Name_xx where invoice_number = 'invoice_number_xx'

Since Schema_Name_xx is no valid Schema in the Database, the statement (= Validation) won't work. Only if I replace Schema_Name_xx by e.g. Invoice_Data_Current it will work, same with the invoice number, invoice_number_xx is replaced by e.g. 4711.

Therefore, validation makes no sense and will never work, only if the WF is running, the correct Schema is inserted in the SQL statement by the "Replace a Specific String" function.

It would be great to disable it in the users settings or wherever in the Designer, changing a config file would also be great :-)

Please note: I'm not thinking (since I'm not allowed to anyway ;-)) about changing or disabling anything in the Alteryx Server settings.

Reason:

1. Speed: Validating a WF with SQL statements that don't work takes time (every time I save it), sometimes I get even a timeout...

2. WF error entries: Each upload with a failed validation creates an entry in the WF result list, which makes it harder to separate them from the "real" WF errors...

Thanks & Best Regards,

Thomas

I try to use the Comment tool for documentation within workflows for team members (and my future self when I have to revisit it months after I built it). It would be helpful to be able to use markdown formatting inside the tool.

This might even encourage more documentation. *fingers crossed*

Hi there,

When you connect to a DB using a connection string or an alias - this shows up in the Workflow Dependencies in a way that is very useful to allow you to identify impacts if a DB is moved or migrated.

However - in 2023.1, if you use DCM then the database dependencies just show up as .\ which makes dependency management much more difficult.

Please could you add the capability to view the DCM dependencies correctly in the dependency window?

BTW - this Workflow Dependencies window would be a great place to build a simple process to move existing DB connections to a DCM connection!

CC: @wesley-siu @_PavelP

Hi there,

When connecting to data sources using DCM - could we please add the ability to make JDBC connections?

see:

https://community.alteryx.com/t5/Engine-Works/JDBC-Connections-in-Alteryx/ba-p/968782

As mentioned in these threads - JDBC is very common in large enterprises - and in many cases is better supported by the technology teams / developer community and so is much easier to make a connection. Added to this - there are many databases (e.g. DB2) where JDBC connections are just much easier

Please could you add JDBC connections to the DCM tooling?

Thank you

Sean

cc: @wesley-siu @_PavelP

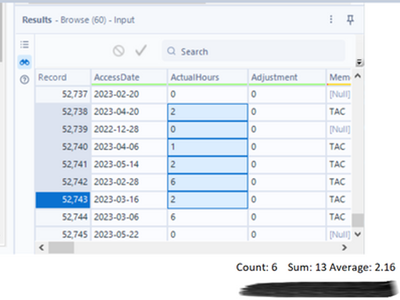

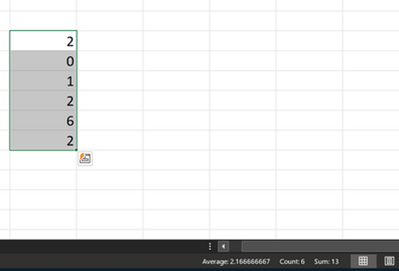

Alteryx should seriously consider incorporating certain Excel features into its Browse tool, as they greatly enhance usability and functionality.

Currently, when selecting specific records in the Browse tool, users are unable to obtain important metrics such as sum, average, or count without resorting to additional steps, such as adding a Summarize tool or filters.

A concise bar below the results window that shows these statistics for the selected records would be immensely beneficial to users and would take the Browse tool to the next level.

By implementing this enhancement, Alteryx would make a significant impact and establish the Browse tool as a must-have resource.

Hi there,

When creating a database connection - Alteryx's default behaviour is to create an ODBC DSN-linked connection.

However DSN-linked connections do not work on a large server env - because this would require administrators to create these DSNs on every worker node and on every disaster recovery node, and update them all every time a canvas changes.

They are also not fully safe because part of the configuration of your canvas is held in the DSN - and so you cannot just rely on the code that's under version control.

So:

Could we add a feature to Alteryx Designer that allows a user to expand a DSN into a fully-declared connection string?

In other words - if the connection string is listed as

- odbc:DSN=DSNSnowFlakeTest;UID=Username;PWD=__EncPwd1__|||NEWTESTDB.PUBLIC.MYTESTTABLE

Then offer the user the ability to expand this out by interrogating the ODBC Connection manager to instead have the fully described connection string like this:

odbc:DRIVER={SnowflakeDSIIDriver};UID=Username;pwd=__EncPwd1__;authenticator=Snowflake;WAREHOUSE=compute_wh;SERVER=xnb27844.us-east-1.snowflakecomputing.com;SCHEMA=PUBLIC;DATABASE=NewTestDB;Staging=local;Method=user

NOTE: This is exactly what users need to do manually today anyway to get to a DSN-less connection string - they have to create a file DSN to figure out all the attributes (by opening it up in Notepad) and then paste these into the connection string manually.
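As a rough illustration of the expansion being requested, here is a small Python sketch. It does not query the ODBC manager: the `expand_dsn` helper is a hypothetical name, and the driver name and attributes are assumed to be supplied by the caller (for example copied from a file DSN). The server name in the example is a placeholder.

```python
def expand_dsn(conn, driver, attributes):
    """Swap the DSN=... pair of an odbc: connection string for explicit attributes.

    `driver` and `attributes` are assumed to come from the DSN definition;
    this sketch does not look them up itself.
    """
    prefix, _, rest = conn.partition(":")
    body, sep, table = rest.partition("|||")
    # Keep every key=value pair except the DSN reference.
    kept = [p for p in body.split(";") if p and not p.upper().startswith("DSN=")]
    extra = [f"{key}={value}" for key, value in attributes.items()]
    parts = [f"DRIVER={{{driver}}}"] + kept + extra
    return f"{prefix}:{';'.join(parts)}{sep}{table}"

conn = "odbc:DSN=DSNSnowFlakeTest;UID=Username;PWD=__EncPwd1__|||NEWTESTDB.PUBLIC.MYTESTTABLE"
expanded = expand_dsn(
    conn,
    "SnowflakeDSIIDriver",
    {"SERVER": "example.snowflakecomputing.com", "WAREHOUSE": "compute_wh"},  # placeholder values
)
print(expanded)
```

The credentials and the `|||table` suffix are carried over unchanged; only the DSN reference is replaced.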

Thanks all

Sean

Hi there,

the Snowflake documentation only refers to connection strings which use a DSN such as this page Snowflake | Alteryx Help which refers to the connection string as odbc:DSN=Simba_Snowflake_JWT;UID=user;PRIV_KEY_FILE=G:\AlteryxDataConnectorsTeam\OAuth project\PEMkey\rsa_key.p8;PRIV_KEY_FILE_PWD=__EncPwd1__;JWT_TIMEOUT=120

However - for canvasses which need to be productionized on Alteryx Server - it is critical to use dsn-less connection strings so that the canvasses can be deployed and run on any worker node without having to set up DSNs on every worker node.

A DSN-less connection string looks like this:

ODBC:DRIVER={SnowflakeDSIIDriver};UID=UserName;pwd=Password;WAREHOUSE=compute_wh;SERVER=server.us-east-1.snowflakecomputing.com;SCHEMA=PUBLIC;DATABASE=NewTestDB;Staging=local;Method=user|||NEWTESTDB.PUBLIC.MYTESTTABLE

Please could you consider making an update to the help texts to provide and describe a DSN-free connection string as well as the DSN driven connections?

Many thanks

Sean

Hello all,

As of today, when you want to retrieve or create a file on Apache Spark for Databricks, you have only two choices: CSV and Avro.

However, the Parquet file type is clearly missing:

- it's faster

- it's better for storage

- it's standard and already supported as input/output of Alteryx and for HDFS, so it doesn't seem hard to add here.

Best regards,

Simon

The basic premise is this:

Phantom spacing. Basically something that looks like it has spaces on Excel but is actually formatted as an indentation.

Unfortunately, to read the indentation we will need either a VBA prep or read the XML inside. The latter of which is difficult.

As to VBA, the general steps are to create an indentation formula in order to see the numbers, then go from there. The idea is credited to @clmc9601 as we discussed privately.

As of now, I do not see any way to do this in Alteryx as a function or even an expression. It would be very helpful, especially when reading trial balances or even Bloomberg outputs, as they are formatted with indentation.

Reading indentation from Excel or any other file within Alteryx will be much appreciated, especially in actuarial and finance spaces.

After using the PCA tool, could there be a model object output that can be used to "score" new data?

Similar to the PCA transform described here: https://stackoverflow.com/questions/26182329/how-do-i-convert-new-data-into-the-pca-components-of-my...

Currently there is no way to use this model with new data.

Alteryx offers the ability to add new formulae (e.g. the Abacus addin) and new tools (e.g. the marketplace; custom macros etc) - which is a very valuable and valued way to extend the capability of the platform.

However - if you add a new function or tool that has the same name as an existing function / tool - this can lead to a confusing user experience (a namespace conflict)

Would it be possible to add capability to Alteryx to help work around this - two potential vectors are listed below:

- Check for name conflicts when loading tools or when loading Alteryx - and warn the user. e.g. "The Coalesce function in package CORE Alteryx conflicts with the same function name in XXX package - this may cause mysterious behaviours"

- Potentially allow prefixes to address a function if there are same names - e.g. CoreAlteryx.Coalesce or Abacus.Coalesce - and if there is a function used in a function tool in a way that is ambiguous (e.g. "Coalesce") then give the user a simple dialog that allows them to pick which one they meant, and then Alteryx can self-cleanup.
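The first suggestion (checking for name conflicts at load time) could look roughly like the Python sketch below. This is not how Alteryx loads functions; `find_conflicts` and the package contents are hypothetical, and comparing names case-insensitively is an assumption.

```python
from collections import defaultdict

def find_conflicts(packages):
    """Return {function_name: [package, ...]} for names defined by more than one package.

    Names are compared case-insensitively (an assumption of this sketch).
    """
    owners = defaultdict(list)
    for package, functions in packages.items():
        for name in functions:
            owners[name.lower()].append(package)
    return {name: pkgs for name, pkgs in owners.items() if len(pkgs) > 1}

# Hypothetical package contents, for illustration only.
packages = {
    "CoreAlteryx": ["Coalesce", "Contains"],
    "Abacus": ["Coalesce", "RangeJoin"],
}
conflicts = find_conflicts(packages)
for name, pkgs in conflicts.items():
    print(f"Warning: function '{name}' is defined in {', '.join(pkgs)}")
```

The same `owners` map could back the second suggestion: a prefixed call such as `Abacus.Coalesce` would resolve directly, while a bare `Coalesce` with more than one owner would trigger the dialog.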

I would love a tool to be created for looking up a value in a table based on a condition. It could be called "Lookup." One input to the tool would be the lookup list, the other is the main database. Inside the tool you could enter functions that can query the lookup table and return the results either as an overwrite of an existing field in the main DB or as a new field in the main DB, similar to the options in the Multi-Row Formula tool.

Here is a link to my post in Community that explains the problem. The solution, in a nutshell, was to create a Join (which resulted in millions of additional rows), run the conditional formula, then filter to get rid of the millions of rows that were created by the Join so only those that met the condition remained (the original database rows).

Here is the text of my Community post describing my project (slightly modified for clarity):

Table 1: A list of Pay Dates (the lookup table)

Table 2: Daily timekeeper data with Week Start and Week End Date fields.

The goal: To find the Pay Date in Table 1 that is greater than the Week Start Date in Table 2 and no more than 13 days after the Week End Date in Table 2.

[Table 2: Week Start Date] < [Table 1: Pay Date]

and [Table 2: Week End Date] < [Table 1: Pay Date]

and DateTimeDiff([Table 1: Pay Date], [Table 2: Week End Date], 'Days') <= 13
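For comparison, the same three conditions can be evaluated per row without a join that multiplies rows. The Python sketch below assumes the pay dates are sorted ascending and returns the earliest matching pay date; `lookup_pay_date` and the sample dates are hypothetical.

```python
from bisect import bisect_right
from datetime import date

def lookup_pay_date(pay_dates, week_start, week_end):
    """Return the earliest pay date that is after both week dates and at most
    13 days after week_end, or None. pay_dates must be sorted ascending."""
    # First pay date strictly greater than both Week Start and Week End.
    i = bisect_right(pay_dates, max(week_start, week_end))
    if i < len(pay_dates) and (pay_dates[i] - week_end).days <= 13:
        return pay_dates[i]
    return None

# Hypothetical sample data.
pay_dates = [date(2023, 10, 13), date(2023, 10, 27), date(2023, 11, 10)]
match = lookup_pay_date(pay_dates, date(2023, 10, 15), date(2023, 10, 21))
no_match = lookup_pay_date(pay_dates, date(2023, 11, 5), date(2023, 11, 11))
print(match, no_match)
```

Each row of Table 2 costs one binary search in Table 1, so no intermediate rows are created and no filter is needed afterwards.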

There are many different flows I could use this type of tool for that would save time and simplify the flow.

Thanks!

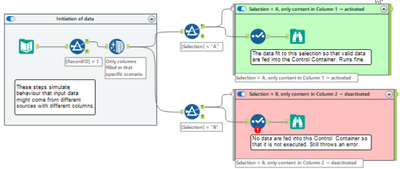

Sometimes, Control Containers produce error messages even if they are deactivated by feeding an empty table into their input connection.

(Note that this is a made-up example of something which can happen if input tables come from different sources and have different columns, so that they need separate treatment.)

According to the product team, this is expected behaviour since a selection does not allow zero columns selected. This might be true (which I doubt a bit), but it is at least counter-intuitive. If this behaviour cannot be avoided in total, I have a proposal which would improve the user experience without changing the entire workflow validation logic.

(The support engineer understands the point and has raised a defect.)

Instead of writing messages inside Control Containers directly to the log output (on screen, in logfile) and to mark the workflow as erroneous, I propose to introduce a message (message, warning, error) stack for tools inside Control Containers:

- When the configuration validation is executed:

- Messages (messages, warnings, errors) produced outside of Control Containers are output to the screen log and to the log files (as today).

- Messages (messages, warnings, errors) produced inside of Control Containers are not yet output but stored in a message stack.

- At the moment when it is decided whether a Control container is activated or deactivated:

- If Control Container activated: Write the previously stored message stack for this Control Container to the screen and to the log output, and increase error and warning counts accordingly.

- If Control Container deactivated: Delete the message stack for this Control Container (w/o reporting anything to the log and w/o increasing error and warning count).

This would result in a different sequence of messages than today (because everything inside activated Control Containers would be reported later than today). Since there’s no logical order of messages anyway, this would not matter. And it would avoid the apparently illogical case that deactivated Control Containers produce errors.
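The proposed message stack can be sketched in a few lines of Python. This only illustrates the idea and is not based on the Alteryx engine; the `ControlContainerLog` class, its method names, and the sample messages are hypothetical.

```python
class ControlContainerLog:
    """Defers messages raised inside Control Containers until activation is known."""

    def __init__(self):
        self.output = []        # messages actually reported, in order
        self.error_count = 0
        self.warning_count = 0
        self._deferred = {}     # container name -> list of (level, text)

    def report(self, level, text, container=None):
        if container is None:
            self._emit(level, text)          # outside containers: report as today
        else:
            self._deferred.setdefault(container, []).append((level, text))

    def resolve(self, container, activated):
        messages = self._deferred.pop(container, [])
        if activated:                        # flush the stack; otherwise drop it
            for level, text in messages:
                self._emit(level, text)

    def _emit(self, level, text):
        self.output.append((level, text))
        if level == "error":
            self.error_count += 1
        elif level == "warning":
            self.warning_count += 1

log = ControlContainerLog()
log.report("warning", "Field list changed")                   # outside any container
log.report("error", "Select: no columns selected", "CC 1")    # deferred
log.report("error", "Formula: unknown field", "CC 2")         # deferred
log.resolve("CC 1", activated=False)    # discarded silently
log.resolve("CC 2", activated=True)     # reported now
print(log.output, log.error_count)
```

Only the error from the activated container reaches the log and the error count; the deactivated container leaves no trace.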

Please consider implementing a consistent case-sensitive option for all tools and functions.

To compare string values, including case-sensitivity: This post had a good description of the challenge, but the post has been archived:

For all the time I've used Alteryx, I thought that IF "test" = "TEST" would evaluate to false. Today I realised that isn't the case and I was surprised. I'm very surprised that "equals" performs like it does.

A few existing Ideas request case-sensitivity for individual tools:

Case insensitive option while joining two data sets

https://community.alteryx.com/t5/Alteryx-Designer-Desktop-Ideas/Case-insensitive-option-while-joinin...

Unique tool enhancement - deal with case sensitive data

https://community.alteryx.com/t5/Alteryx-Designer-Desktop-Ideas/Unique-tool-enhancement-deal-with-ca...

This new Idea requests system-wide consideration for case-sensitivity, for all tools and functions.

Current state:

These tools and functions are case-sensitive:

- Tool: Join

- Tool: Tile

- Function: FindString

- Functions: MD5_ASCII, MD5_UNICODE, MD5_UTF8

These tools and functions are NOT case-sensitive:

- Tool: Unique

- Function: CompareDictionary

These tools and functions can be either case-sensitive or NOT case-sensitive, depending on the options used:

- Function: Contains

- Function: EndsWith

- Function: StartsWith

- Functions: REGEX_Match, REGEX_Replace, REGEX_CountMatches

Current Challenges:

How do we easily identify Lower Case, Upper Case, Mixed Case?

How do we easily compare strings for equality, using case sensitivity?

Request:

Ensure all tools and functions include an option to ignore or consider Case

Create new functions for IsUpperCase, IsLowerCase, IsMixedCase

Create a new function for IsEqual, with an option to ignore or consider Case

See attached workflow, which

- uses REGEX_Match to create 3 new fields: IsUpperCase, IsLowerCase, IsMixedCase

- creates a field [Flag: Original value IsEqual, case-sensitive], to compare strings for equality, using case sensitivity
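For readers without the attached workflow, the requested functions can be approximated with regular expressions. The Python sketch below is not the workflow's exact logic: the function names mirror the request, the definitions (ASCII letters only; "mixed" means at least one upper-case and one lower-case letter) are assumptions, and `ignore_case` is a hypothetical parameter.

```python
import re

def is_upper_case(s):
    """True if s has at least one A-Z letter and no a-z letters."""
    return bool(re.search(r"[A-Z]", s)) and not re.search(r"[a-z]", s)

def is_lower_case(s):
    """True if s has at least one a-z letter and no A-Z letters."""
    return bool(re.search(r"[a-z]", s)) and not re.search(r"[A-Z]", s)

def is_mixed_case(s):
    """True if s has both A-Z and a-z letters."""
    return bool(re.search(r"[A-Z]", s)) and bool(re.search(r"[a-z]", s))

def is_equal(a, b, ignore_case=False):
    """Compare two strings, optionally ignoring case."""
    if ignore_case:
        return a.casefold() == b.casefold()
    return a == b

print(is_upper_case("TEST"), is_mixed_case("Test"), is_equal("test", "TEST"))
```

With these definitions, "test" and "TEST" are equal only when `ignore_case=True`, which is the distinction the Idea asks every tool and function to expose.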

Hi all,

At present, Alteryx does not support DSN-free connections to Snowflake using the Bulk Connector. This is a critical functionality for any large company that uses Alteryx - and so I'm hoping that this can be changed in the product in an upcoming release. As a corollary - every DB connection type has to be able to work without DSNs for any medium or large size server instance - so it's worth extending this to check every DB connection type available in Alteryx.

Here are the details:

What is DSN-Free?

In order to be able to run our Alteryx canvasses on a multi-node server - we have to avoid using DSNs - so we generally expand connection strings that look like this:

odbc:DSN=DSNSnowFlakeTest;UID=Username;PWD=__EncPwd1__|||NEWTESTDB.PUBLIC.MYTESTTABLE

to instead have the fully described connection string like this:

odbc:DRIVER={SnowflakeDSIIDriver};UID=Username;pwd=__EncPwd1__;authenticator=Snowflake;WAREHOUSE=compute_wh;SERVER=xnb27844.us-east-1.snowflakecomputing.com;SCHEMA=PUBLIC;DATABASE=NewTestDB;Staging=local;Method=user

For Snowflake BL:

Now - for the Snowflake Bulk Loader the same process does not work and Alteryx gives the classic error below

With DSN:

snowbl:DSN=DSNSnowFlakeTest;UID=Username;pwd=__EncPwd1__;Staging=local;Method=user|||NEWTESTDB.PUBLIC.MYTESTTABLE

Without DSN:

snowbl:driver=SnowflakeDSIIDriver;UID=SeanBAdamsJPMC;pwd=__EncPwd1__;SERVER=xnb27844.us-east-1.snowflakecomputing.com;WAREHOUSE=compute_wh;SCHEMA=PUBLIC;DATABASE=NewTestDB;Staging=local;Method=user|||NEWTESTDB.PUBLIC.MYTESTTABLE

Many thanks

Sean

Let's say you have a column of 10 Filter tools arranged vertically, with a Select tool coming out of each output of each Filter. It can get dizzying to tell them apart. It would be great to be able to choose a colour for tools on the canvas so that, e.g., in the example above I could say "my green Selects are the true and my red Selects are the false".




