Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

- by showing the dimensional constraints next to each of the pre-trained models;

- by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images);

- at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
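The "proper tool to split the training data" suggested above is essentially a balanced (stratified) split. The Python sketch below only illustrates that idea; it is not how the Image Recognition tool works, and `balanced_split`, its `per_label` cap and the 80/20 default are hypothetical choices.

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple

Sample = Tuple[str, str]  # (image_path, label)

def balanced_split(
    samples: List[Sample],
    per_label: int,               # hypothetical cap: images kept for every label
    train_fraction: float = 0.8,  # hypothetical default split ratio
    seed: int = 0,
) -> Tuple[List[Sample], List[Sample]]:
    """Keep the same number of images for every label, then split each label
    into training and validation sets with the same proportion."""
    by_label: Dict[str, List[Sample]] = defaultdict(list)
    for sample in samples:
        by_label[sample[1]].append(sample)

    smallest = min(len(group) for group in by_label.values())
    if per_label > smallest:
        raise ValueError(f"only {smallest} images available for the rarest label")

    rng = random.Random(seed)  # seeded so the split is reproducible
    train: List[Sample] = []
    validation: List[Sample] = []
    for label in sorted(by_label):
        group = rng.sample(by_label[label], per_label)  # random subset, no repeats
        cut = int(per_label * train_fraction)
        train.extend(group[:cut])
        validation.extend(group[cut:])
    return train, validation
```

Sampling every label down to the rarest one is the simplest way to equalize counts; augmenting the rarer labels would be the alternative when discarding images is too costly.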

Question: will you, in the future, allow the user to choose between CPU and GPU usage?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will help a greater number of people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


Not sure if any of you have a similar issue, but we often end up bringing in some data (either from a website or a table) to profile it, and then an hour in, we realise that the data will probably take 6 weeks to ingest completely, but enough rows have already come in to give us a useful sense of it.

Right now, the only options are to stop (in which case the profiling tools at the end of the flow give you nothing) and restart with a row limiter, or to let it run to completion. The tragedy of the first option is that you've already invested an hour or two in the data extract but cannot make use of it.

It feels like there's a third option: an option to "Stop bringing in new data, but finish the data that you currently have", which terminates any Input or Download tools in their current state and lets the remainder of the data flush through the full workflow.

Hopefully I'm not alone in this need 🙂
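The requested "stop ingesting, finish what you have" behaviour resembles ending an input stream cleanly instead of aborting the whole run. A minimal Python sketch, assuming a hypothetical `stop` flag that a UI button would set: the input stops pulling new rows once the flag is set, and the downstream step (here a stand-in `profile` function, also hypothetical) still runs on the rows already read.

```python
import threading
from typing import Iterable, Iterator

def stoppable(source: Iterable[dict], stop: threading.Event) -> Iterator[dict]:
    """Pass rows through until the stop flag is set, then end the stream normally
    so downstream steps see an ordinary end of input rather than an abort."""
    for row in source:
        if stop.is_set():
            return  # stop reading new rows; rows already passed on are kept
        yield row

def profile(rows: Iterable[dict]) -> dict:
    """Stand-in for the profiling tools at the end of the flow."""
    count = 0
    fields = set()
    for row in rows:
        count += 1
        fields.update(row)  # collect the field names seen so far
    return {"rows": count, "fields": sorted(fields)}
```

A real implementation would also need to close the database connection or cancel the download; the sketch only shows the flush-through part.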

Please add in a feature to connect to S3 via AWS IAM roles.

When selecting a HANA view in Alteryx that has a required prompt/parameter/variable, Alteryx does not pre-populate the SQL with the required HANA prompt code; instead, it gives a SQL error. I'm requesting an Alteryx enhancement that would read the HANA view, determine the prompt SQL it requires, and either pre-populate the prompt or ask the user to fill in the prompt/variable box. This could work similarly to Tableau when connecting directly to a HANA view that requires a prompt: Tableau pops up the prompt and lets you select a value, and when you convert the Tableau connection to custom SQL, it saves the SQL code required for the prompt.
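As a hedged sketch of what "pre-populating the prompt SQL" could produce, the Python helper below builds a query against a calculation view, assuming the `'PLACEHOLDER' = ('$$name$$', 'value')` syntax SAP HANA accepts for calculation-view input parameters. The helper name, schema, view and parameter names are hypothetical; raising an error for a missing value marks the point where a tool could ask the user instead.

```python
from typing import Dict, List

def build_prompt_sql(schema: str, view: str, required: List[str], supplied: Dict[str, str]) -> str:
    """Hypothetical helper: SELECT from a HANA calculation view, passing its input parameters."""
    missing = [name for name in required if name not in supplied]
    if missing:
        # This is where a tool could pop up a prompt instead of failing with a SQL error
        raise ValueError("values needed for: " + ", ".join(missing))

    def literal(text: str) -> str:
        return "'" + text.replace("'", "''") + "'"  # SQL string literal, quotes doubled

    def ident(name: str) -> str:
        return '"' + name.replace('"', '""') + '"'  # quoted identifier

    sql = f"SELECT * FROM {ident(schema)}.{ident(view)}"
    if supplied:
        placeholders = ", ".join(
            f"'PLACEHOLDER' = ({literal('$$' + name + '$$')}, {literal(value)})"
            for name, value in supplied.items()
        )
        sql += f" ({placeholders})"
    return sql
```

For example, `build_prompt_sql("_SYS_BIC", "sales/CV_SALES", ["P_YEAR"], {"P_YEAR": "2020"})` yields `SELECT * FROM "_SYS_BIC"."sales/CV_SALES" ('PLACEHOLDER' = ('$$P_YEAR$$', '2020'))`.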

Today, any Alteryx tool with "Select" functionality has an option for "Dynamic or Unknown Fields" which, when checked, allows any new fields to pass through that tool. This is a great function for most tools, as it lets workflow updates pass through the tool without issue.

However, in the Join tool there are use cases where there is NEVER a reason to pass new fields from one particular side through the tool, but you might still want new fields from the primary process. One example is a lookup/cross-reference used for an inclusive join, where new fields added to the lookup might inadvertently be passed downstream. Having the option to allow unknown fields through from only one side would greatly improve this output.
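To make the request concrete, here is a small Python model of per-side "Dynamic or Unknown Fields" handling: fields seen at design time follow their selected/deselected state, and fields never seen before pass only if that side allows unknown fields. The function and field names are hypothetical, and the sketch ignores how duplicate field names are renamed in a real join.

```python
from typing import Dict, List

def side_fields(incoming: List[str], selection: Dict[str, bool], allow_unknown: bool) -> List[str]:
    """selection maps each field seen at design time to whether it is selected."""
    kept = []
    for name in incoming:
        if name in selection:
            if selection[name]:      # known field: obey the saved selection
                kept.append(name)
        elif allow_unknown:          # new field: pass only if this side allows it
            kept.append(name)
    return kept

def join_output_fields(
    left_incoming: List[str], left_selection: Dict[str, bool], allow_unknown_left: bool,
    right_incoming: List[str], right_selection: Dict[str, bool], allow_unknown_right: bool,
) -> List[str]:
    return (side_fields(left_incoming, left_selection, allow_unknown_left)
            + side_fields(right_incoming, right_selection, allow_unknown_right))
```

With unknown fields allowed only on the primary (left) side, a column newly added to the lookup table is dropped while a new column from the main process still flows through.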

When we try to call an external website from the Alteryx Designer Download tool, our company proxy server fails the authentication because Alteryx uses basic login/password authentication. This has happened to multiple applications that need to interact with external partners. We would like to request an enhancement to enable Alteryx to authenticate using Kerberos or NTLM.

Current State: When a macro contains nested macros, the only method that reliably works to share them is via yxi (which I fondly refer to as my wixies).

Future State: Allow macros published to the Gallery to be their own tool palette, so that when I or any other user connects to the server, the macros are there and just work: no import, no visible install, just a single set of tools that work on that server.

Side task: also add an export to yxi.

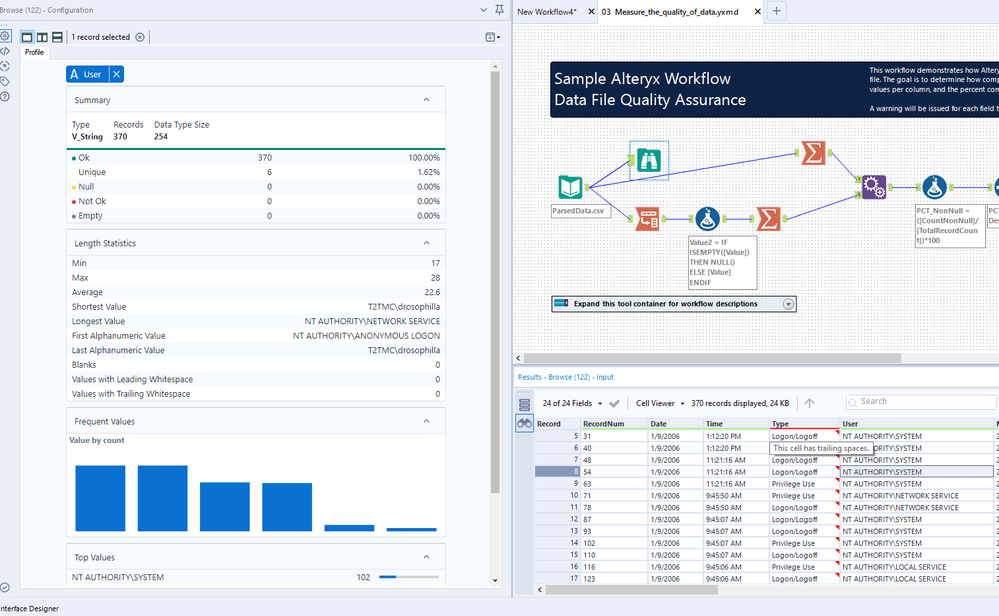

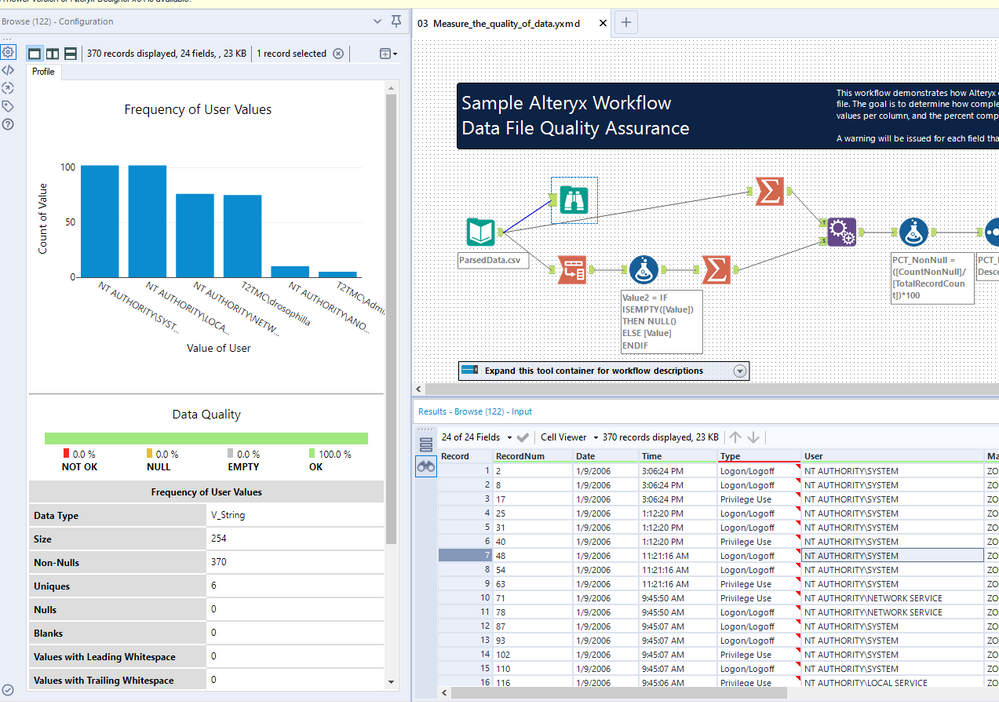

I'm digging the new holistic data view in the Browse tool; however, there is one aspect of the old view that I miss: I liked having the list of top values available without scrolling. Here is the current view of the new Browse:

What are my top values? I either need to hover over the blue bars or scroll down to see the list at the bottom. I would like the top values list moved to the top. For reference, here is what the old view looked like:

My top values are available right there at the top.

Please extend the Workflow Dependencies functionality to include the dependencies of macros used in the workflow too. Currently, macros are simply listed as dependencies themselves, but their underlying dependencies (e.g. data sources) are not included.

We have a large ETL process developed with Alteryx that applies several layers of custom and complex macros and several data sources referenced using aliases. Currently the process is deployed locally (non-server) and executed ad-hoc, but will be moved to the server platform at some point.

Recently I had to prepare an employee to run the process. This requires creating aliases and associated connections and making sure that access to the needed network locations is in place (for storing macros, temp files, etc.). Hence I needed to identify all aliases and components/macros used. As everything is wrapped nicely in a single workflow, I hoped that the Workflow Dependencies functionality would cover the dependencies of the macro nodes within it, but unfortunately it didn't, and I had to look through the dependencies of 10-15 macros.
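Expanding macro dependencies recursively is a graph walk: start at the workflow, list its direct dependencies, and descend into every macro found, remembering which macros have already been expanded so that shared or cyclic macros are visited only once. The Python sketch below assumes a hypothetical `direct` mapping from each workflow or macro file to its direct dependencies; the file names and the `alias:` prefix are made up for the example.

```python
from typing import Dict, List, Set

def collect_dependencies(root: str, direct: Dict[str, List[str]]) -> Set[str]:
    """Return every dependency reachable from root, descending into macros (.yxmc)."""
    found: Set[str] = set()
    expanded = {root}  # files whose dependencies have already been listed
    stack = [root]
    while stack:
        node = stack.pop()
        for dep in direct.get(node, []):
            found.add(dep)
            # Only macros have dependencies of their own worth expanding
            if dep.endswith(".yxmc") and dep not in expanded:
                expanded.add(dep)
                stack.append(dep)
    return found

direct = {
    "etl_main.yxmd": ["alias:SALES_DB", "clean.yxmc"],
    "clean.yxmc": ["lookup.yxmc", "//fileshare/temp/"],
    "lookup.yxmc": ["alias:REF_DB", "clean.yxmc"],  # cycle back to clean.yxmc
}
print(sorted(collect_dependencies("etl_main.yxmd", direct)))
# ['//fileshare/temp/', 'alias:REF_DB', 'alias:SALES_DB', 'clean.yxmc', 'lookup.yxmc']
```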

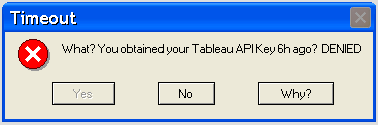

The current version of the Publish to Tableau macro retrieves an API key at the start of the workflow run. Oftentimes the workflow may take several hours to run before it's ready to write to Tableau, by which time the API key may have expired (I think the default Tableau Server setting times out after 2 hours). It's one of those soul-crushing "I should've forked the output!" moments.

Sample Log Error -

- Tool #46: TableauServer.UploadChunks (238): Iteration #1: Tool #19: Tool #4: Tableau Server API Request (Upload file) Error Code 401002: Unauthorized Access -- Invalid authentication credentials were provided.

- Tool #46: Tool #252: Tool #4: Tableau Server API Request (Publish file) Error Code 401002: Unauthorized Access -- Invalid authentication credentials were provided.

The idea would be to change when the macro obtains the API key: instead of when the workflow is initiated, just before the workflow is ready to write to Tableau, avoiding these timeouts.

(If you're having this issue in the meantime, you can have your Tableau Server admin increase the timeout.)
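The requested change is essentially lazy authentication: sign in when the token is first needed rather than when the run starts, and sign in again if the cached token is too old. A minimal Python sketch under those assumptions follows; `LazyToken`, the `sign_in` callback and the 7,200-second lifetime (the 2-hour figure guessed above) are hypothetical, and this is not the Publish to Tableau macro's code.

```python
import time
from typing import Callable, Optional

class LazyToken:
    """Fetch an auth token only when first needed, and fetch a new one
    once the cached token is older than max_age seconds."""

    def __init__(self, sign_in: Callable[[], str], max_age: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._sign_in = sign_in    # hypothetical callback that returns a fresh token
        self._max_age = max_age
        self._clock = clock        # injectable so the expiry logic can be tested
        self._token: Optional[str] = None
        self._issued_at = 0.0

    def get(self) -> str:
        now = self._clock()
        if self._token is None or now - self._issued_at >= self._max_age:
            self._token = self._sign_in()
            self._issued_at = now
        return self._token
```

A production version would probably also retry once on a 401 response, since the server's actual timeout may differ from `max_age`.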

My testing has shown that when a datetime field is read from a Snowflake table, the Input tool converts the data to express the datetime in the local time of the machine the workflow runs on.

For example, this is a data set from a direct query to Snowflake...

This is what is coming out of the Input tool with the machine set to Pacific time...

However when setting the machine to Central time, the results from the Input tool are...

This can obviously wreak havoc, creating inconsistencies in any subsequent reporting off this data.

Since Snowflake carries the UTC offset as part of the data, it would be nice to be able to disable this "assumption" by Alteryx that datetime results should be converted to the machine's local time. This way, the data could pass through with the datetime values that are held in the database.
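The behaviour described can be reproduced in miniature with Python's `datetime`: the same stored instant prints differently depending on which zone it is converted to. This is a hedged illustration of the problem and of the requested opt-out, not Alteryx or Snowflake code; fixed UTC offsets stand in for Pacific and Central time (ignoring daylight saving), and the function names and sample value are hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Fixed offsets stand in for the machine's zone; real Pacific/Central time also observes DST.
PACIFIC_STANDARD = timezone(timedelta(hours=-8))
CENTRAL_STANDARD = timezone(timedelta(hours=-6))

def as_machine_local(value: datetime, machine_zone: timezone) -> str:
    """Behaviour as described: re-express the instant in the machine's zone."""
    return value.astimezone(machine_zone).strftime("%Y-%m-%d %H:%M:%S")

def as_stored(value: datetime) -> str:
    """Requested opt-out: keep the wall-clock time and UTC offset the database holds."""
    return value.isoformat(sep=" ")

stored = datetime(2020, 1, 15, 9, 30, tzinfo=timezone.utc)  # hypothetical stored value
print(as_machine_local(stored, PACIFIC_STANDARD))  # 2020-01-15 01:30:00
print(as_machine_local(stored, CENTRAL_STANDARD))  # 2020-01-15 03:30:00
print(as_stored(stored))                           # 2020-01-15 09:30:00+00:00
```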

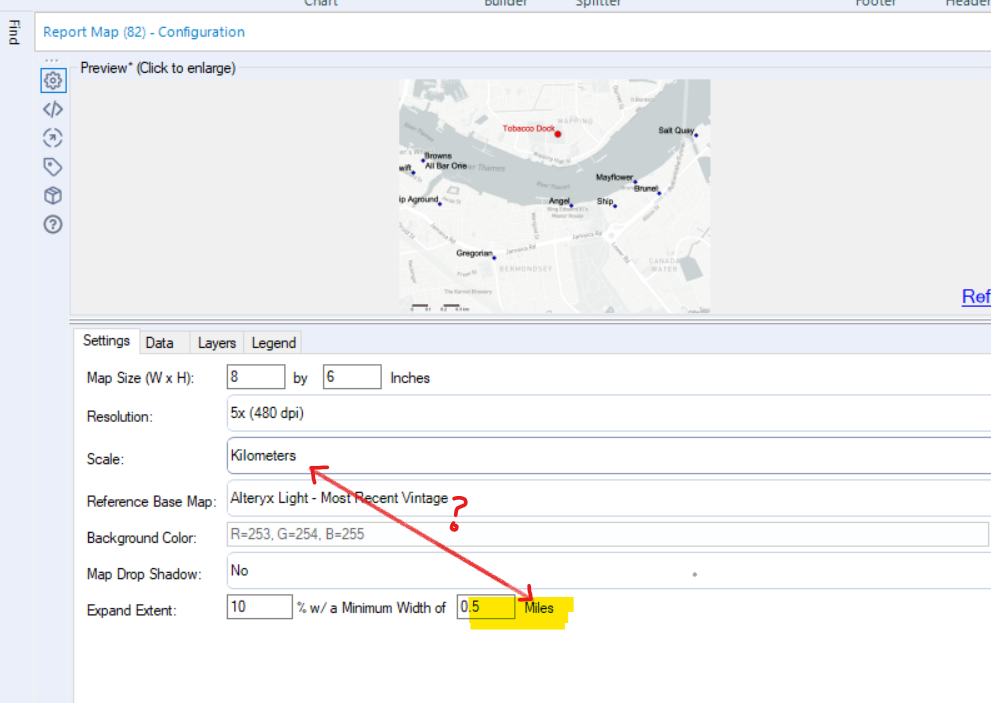

In the Report Map tool, you can select units: KM vs. miles.

You can also put a map buffer around your zoomed points, either as a percentage or as a distance.

However, if you've selected KM as your units, the "Expand Extent" is still in miles (see picture below).

Please can you make this consistent?
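Making the two settings consistent comes down to converting the "Expand Extent" distance into the selected unit. A minimal Python sketch of that conversion follows; `convert_distance` and the unit strings are hypothetical, and the only fixed fact used is that the international mile is exactly 1.609344 km.

```python
KM_PER_MILE = 1.609344  # exact: the international mile is defined as 1,609.344 m

def convert_distance(value: float, from_unit: str, to_unit: str) -> float:
    """Convert a distance between 'miles' and 'km'."""
    to_km = {"km": 1.0, "miles": KM_PER_MILE}
    if from_unit not in to_km or to_unit not in to_km:
        raise ValueError(f"unsupported unit: {from_unit!r} or {to_unit!r}")
    return value * to_km[from_unit] / to_km[to_unit]
```

For example, an extent of 10 entered while KM is selected corresponds to roughly 6.21 miles, not 10.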

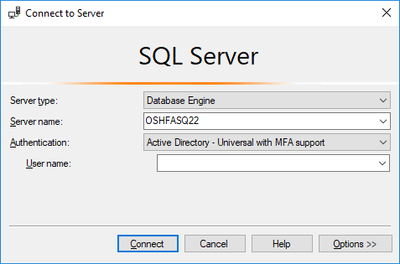

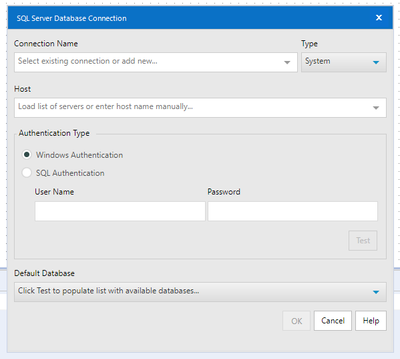

Add a Multi-Factor Authentication (MFA) authentication type to the SQL Server database connection, allowing connections to databases that require 2-step authentication (a separate token key each time the user connects).

The above added to

Add the ability to name the output columns in the Text To Columns tool.
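A hedged Python sketch of what named output columns could look like: split on the delimiter and pair each piece with a user-supplied name, falling back to positional names when there are more pieces than names. The function, its parameters and the `Column2`-style fallback are hypothetical, not the Text To Columns tool's actual behaviour.

```python
from typing import Dict, List

def text_to_named_columns(value: str, delimiter: str, names: List[str],
                          fallback: str = "Column") -> Dict[str, str]:
    parts = value.split(delimiter)
    columns: Dict[str, str] = {}
    for position, part in enumerate(parts, start=1):
        # Use the user's name for this position if one was given, else a positional name
        key = names[position - 1] if position <= len(names) else f"{fallback}{position}"
        columns[key] = part
    for name in names[len(parts):]:
        columns[name] = ""  # fewer pieces than names: keep the column, leave it empty
    return columns
```

For instance, splitting `2024-05-17` on `-` with the names `Year`, `Month`, `Day` gives three clearly labelled columns instead of numbered ones.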

The method of saving the results of one app to be read in by a follow-on app seems very clunky to me. Can we develop a method to use the results within a workflow to feed drop-down lists in later stages of the same workflow? That way, an app can stand on its own without having to save files out and chain further apps to read them again.

It seems this only works for selecting fields to include in the output, but not for a list of values to feed a drop-down list.

It looks like we can choose which fields to include in a workflow with the List Box interface tool + Select tool, but we cannot ORDER or REORDER fields.

I did stumble across this post; it looks like it can be done, but it isn't very elegant.
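The missing reordering step is simple to state: put the fields the user picked first, in the order picked, and keep the rest in their original order. A minimal Python sketch of that rule, where `reorder_fields` and the record layout are hypothetical:

```python
from typing import Dict, List

def reorder_fields(record: Dict[str, object], chosen_order: List[str]) -> Dict[str, object]:
    """Chosen fields first (in the chosen order), then the remaining fields
    in their original order. Relies on dicts preserving insertion order."""
    ordered = {name: record[name] for name in chosen_order if name in record}
    for name, value in record.items():
        if name not in ordered:
            ordered[name] = value
    return ordered
```

Leaving unchosen fields at the end, rather than dropping them, keeps the selection step and the ordering step independent.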

While I strongly support the S3 upload and download connectors, the development of AWS Athena has changed the game for us. Please consider official support for Athena compute on S3, like the support already offered for Teradata, Hadoop Hive, MS SQL, and other database types.

Please follow above link...

We are seeing a huge number of requests from users to support this feature.

As of version 2019.4, Alteryx can't connect to a table if complete read access is NOT granted to it.

Other data connector tools can do this; only Alteryx cannot.

Please consider this a feature request.

Hello,

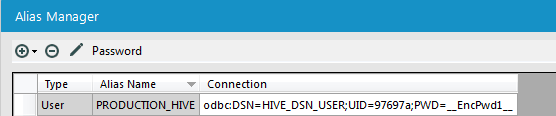

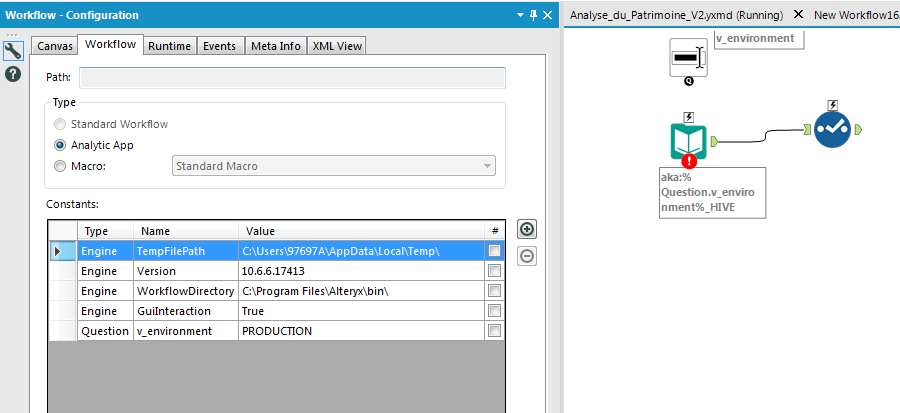

We have several environments in our organization: dev, recept, and production.

To make moving between them safe, we intend to create several connections (standard aliases), such as:

- `PRODUCTION_HIVE`

- `DEV_HIVE`

- `RECEPT_HIVE`

In our workflows, we want to use `aka:%Question.v_environment%HIVE`.

Sadly, this solution does not work, despite the default value.

The function `isnumber(<arg>)` (in formula-like tools) does not do what I would expect from it:

It returns whether the data type of the argument is numeric. It does not return whether the argument actually represents a number (even if it is stored as a string).

Currently, you have to help yourself with something like

`REGEX_Match(<arg>, "^[-+±]?(\d+([.,]\d*)?|[.,]\d+)([eE][+-]?\d+)?$")`, which is quite clumsy.

From my perspective, the right setup would have been:

- `isnumber(<arg>)` returns whether the argument is a number (even if it is of type string)

- `isnumeric(<arg>)` returns whether the argument is of a numeric data type

I understand if the current behavior of `isnumber(<arg>)` needs to be preserved. In that case, a new function could be called `isfloat(<arg>)`: "Is the argument something that could be converted to a float?" That name would still be somewhat misleading, but better than nothing.
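The two proposed functions can be sketched in Python to make the distinction concrete: one checks the stored type, the other checks whether the value reads as a number. The names mirror the proposal, but this is an illustration only; the pattern (optional sign, `.` or `,` as the decimal separator, optional exponent) is a hedged choice in the spirit of the REGEX_Match workaround, not an Alteryx definition, and booleans are deliberately not counted as numbers.

```python
import re
from numbers import Number

# Optional sign, digits with an optional '.' or ',' decimal part (or a bare
# fraction like '.5'), then an optional exponent.
_NUMBER_TEXT = re.compile(r"[-+±]?([0-9]+([.,][0-9]*)?|[.,][0-9]+)([eE][+-]?[0-9]+)?")

def is_numeric_type(value: object) -> bool:
    """Proposed isnumeric(): is the value stored as a numeric type?"""
    return isinstance(value, Number) and not isinstance(value, bool)

def is_number(value: object) -> bool:
    """Proposed isnumber(): does the value represent a number, even if stored as text?"""
    if is_numeric_type(value):
        return True
    return isinstance(value, str) and _NUMBER_TEXT.fullmatch(value.strip()) is not None
```

So `is_number("1,5")` is true while `is_numeric_type("1,5")` is false, which is exactly the split between the two functions proposed above.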

When I run a standard workflow in Designer, I can continue to work on other workflows; I can even run two workflows in parallel.

In contrast, when running an Analytical App in Designer, the entire program is blocked, and no other workflow can be edited or run.

I propose to allow access to the Designer GUI also when running Analytical Apps.

