Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!

Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
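As an illustration of the last two points, here is a minimal sketch in plain Python, done outside Alteryx. It does not describe how the Image Recognition tool works internally. The function names `gray_to_rgb` and `balance_by_label` are hypothetical, and images are assumed to be simple nested lists of 0-255 intensities.

```python
import random
from collections import defaultdict


def gray_to_rgb(image):
    """Copy each grayscale intensity into identical R, G and B channels.

    `image` is assumed to be a list of rows, each row a list of 0-255 ints.
    """
    return [[(px, px, px) for px in row] for row in image]


def balance_by_label(samples, seed=0):
    """Downsample every label to the size of the rarest label.

    `samples` is a list of (item, label) pairs. The result has the same
    number of items for each label, picked at random with a fixed seed.
    """
    by_label = defaultdict(list)
    for item, label in samples:
        by_label[label].append(item)
    if not by_label:
        return []

    smallest = min(len(items) for items in by_label.values())
    rng = random.Random(seed)
    balanced = []
    for label, items in by_label.items():
        for item in rng.sample(items, smallest):
            balanced.append((item, label))
    return balanced
```

Replicating the single channel three times is only a workaround that lets grayscale data such as MNIST pass an RGB-only check; it adds no information to the image.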

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will help a greater number of people understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))

Alteryx 2019.4 introduced support for Tableau's .hyper extract format; however, it only supports single table extracts. .hyper files have supported multiple tables since mid-2018, so I'd like Alteryx to support that as well.

Here are a couple of current use cases (as of February 2020) and one future one.

- We have malaria incidence data that is joined to multiple sets of spatial data. Doing all of the joins in the extract creation process to build a single table extract is not possible due to processing time & memory constraints, so we use a multiple-table extract.

- There are multiple ways to do row level security in Tableau. A common way is to have separate tables for the data & the entitlements and then use calculations at run-time to filter the data, and for that having a multiple table extract is ideal.

- In 2020 Tableau will be introducing new data modeling capabilities (first demoed at the 2018 Tableau Conference, with sessions on it at the 2019 Tableau Conference). One goal is vastly improved performance for large fact table to fact table joins, which previously required much more data preparation. This is another case where multiple table extracts would be useful.

I've attached a sample Hyper file with two tables in the extract (it's zipped because the Community site doesn't accept .hyper files).

Supporting alternative schema and table names in Hyper extracts https://community.alteryx.com/t5/Alteryx-Designer-Ideas/Input-tool-Support-more-than-Extract-Extract... is a prerequisite for this because by definition multiple table extracts have multiple table names.

A related idea is supporting multiple table extracts for the Output tool: https://community.alteryx.com/t5/Alteryx-Designer-Ideas/Support-multiple-table-extracts-in-the-Table...

Jonathan

Alteryx 2019.4 added support in the Input tool for Tableau .hyper extract files. The tables stored in the .hyper files have a schema and a table name. Tableau's old .tde files and Hyper files created by Alteryx & Tableau Desktop use "Extract.Extract" as the schema.tablename. However, when using Tableau's Hyper API, the default schema is "public" and the table name is arbitrarily specified by the user or application.

This has two impacts:

1) Without this support Alteryx can't open many .hyper files created by other applications. By way of example I've attached a sample .hyper file (in a .zip because the community software doesn't allow .hyper files) that has the schema.tablename "public.table1".

2) Also support for names beyond Extract.Extract is required in order to support multiple table extracts (submitted as a separate Idea).

Please update the Input tool so the user can select the particular schema and table name from the .hyper file.

Jonathan

Thank you to @JoeM for the recent training on macros, and to @NicoleJohnson for pointing out some of the challenges.

When writing an iterative macro, it is a little bit difficult to debug, because when you run it in Designer it only does one iteration and stops.

Could we add the capability to the designer itself to be able to run the second and third iteration using the test data built into the macro input tool? Even something as simple as an option to run X iterations; or when it's run the first iteration allow me to look at what happened and trigger iteration 2 (or to trigger a run-through to completion) would be immensely helpful.

While you can do this with a test-flow wrapped around a macro, macro development is a bit of a black box because Alteryx doesn't natively have the ability to step into a macro during run-time and pause it to see what's happening on iteration 1 or 2 or n, why it's not terminating, etc. So adding the ability to run in a debug mode would be HUGELY helpful.
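To make the request concrete, here is a rough sketch of the "run X iterations and inspect each one" behaviour, written in plain Python rather than as an Alteryx feature. Everything in it is an assumption for illustration: `run_iterations` is a hypothetical name, and `step` stands in for the macro body, taking the current rows and returning the rows to loop again plus the rows that are finished.

```python
from typing import Callable, Dict, Iterator, List, Tuple

Rows = List[Dict]


def run_iterations(
    step: Callable[[Rows], Tuple[Rows, Rows]],
    rows: Rows,
    max_iterations: int,
) -> Iterator[Tuple[int, Rows, Rows]]:
    """Yield (iteration number, looped rows, finished rows) after each pass.

    Stops when nothing is left to loop or when `max_iterations` is reached,
    so a non-terminating loop can still be inspected pass by pass.
    """
    for iteration in range(1, max_iterations + 1):
        rows, finished = step(rows)
        yield iteration, rows, finished
        if not rows:
            break


def countdown(rows: Rows) -> Tuple[Rows, Rows]:
    """Example macro body: decrement `n`; rows that reach 0 leave the loop."""
    looped, finished = [], []
    for row in rows:
        updated = {"n": row["n"] - 1}
        (looped if updated["n"] > 0 else finished).append(updated)
    return looped, finished
```

Because the runner is a generator, the caller decides whether to stop after iteration 1, ask for the next one, or run through to completion, which is the kind of control the idea asks for.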

With the 2019.3 release, the Summarize tool now includes prefixes for grouped fields. While a nice addition, in practice it makes using this data downstream (like joining to other tables) more involved, because the prefix has to be removed first.

It would be nice to have this as an option (a checkbox to add/remove prefixes maybe) or just revert back to pre-2019.3 behavior...thanks!

Hi there,

When you connect to a DB using a connection string or an alias, this shows up in the Workflow Dependencies in a way that is very useful, because it lets you identify impacts if a DB is moved or migrated.

However, in 2023.1, if you use DCM, the database dependencies just show up as .\ which makes dependency management much more difficult.

Please could you add the capability to view the DCM dependencies correctly in the Workflow Dependencies window?

BTW - the Workflow Dependencies window would be a great place to build a simple process to move existing DB connections to a DCM connection!

CC: @wesley-siu @_PavelP

Hi there,

When connecting to data sources using DCM - could we please add the ability to make JDBC connections?

see:

https://community.alteryx.com/t5/Engine-Works/JDBC-Connections-in-Alteryx/ba-p/968782

As mentioned in these threads, JDBC is very common in large enterprises, and in many cases it is better supported by the technology teams / developer community, so it is much easier to make a connection. On top of this, there are many databases (e.g. DB2) where JDBC connections are just much easier.

Please could you add JDBC connections to the DCM tooling?

Thank you

Sean

cc: @wesley-siu @_PavelP

Presently, when mapping an Excel file to an Input tool, the tool only recognizes sheets; it does not recognize named tables (ranges) as possible inputs. When using PowerBI to read Excel inputs, I can select either sheets or named ranges as input. The Alteryx Input tool should do the same.

Hey gang, just another QoL suggestion from me!

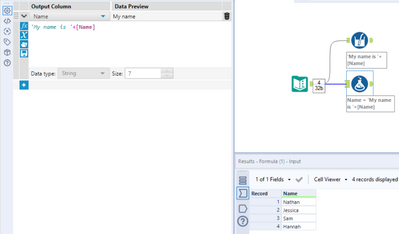

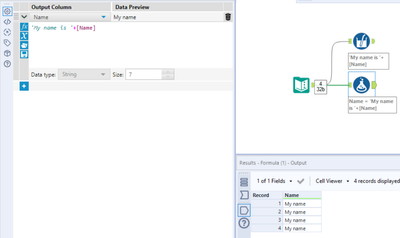

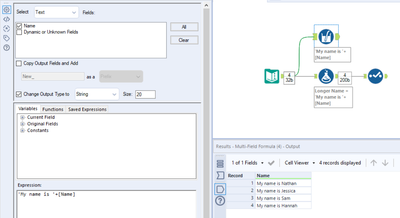

Currently, when applying changes to an existing field that will take the outcome beyond the current field size, we have to use an additional Select tool to get around truncation:

The usual route here is to either a) use a Select tool beforehand to increase the field size:

Or b) create a new field and then remove the 'old' one in a Select tool afterwards, also renaming the replacement here:

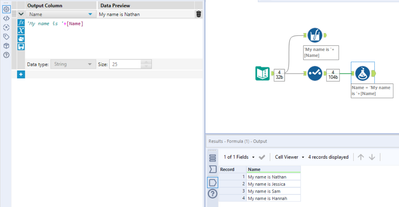

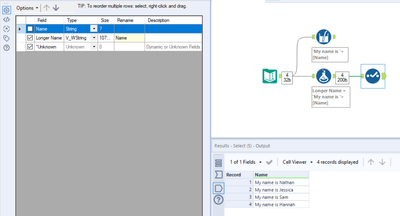

Given that we could just do this in one using the Multi-Field Formula tool:

My request is pretty simple here - can the 'Change Output Type to' configuration also be added to the standard Formula tool? The ability to also update the name of the output would be brilliant as well if possible. Cheers!

Auto Field tools help optimally size and assign data types to your data for better performance but this conversion process can be memory intensive with large datasets. What if you could right-click an Auto Field tool to convert it to a standard select tool with the new data types and sizes much like the existing ability to right-click convert inputs into macro inputs or browse tools into outputs? This would eliminate the need to manually transfer the results of the Auto Field tool into a select tool for production workflows!

See the discussion on this page:

Every time we create a file output - you first have to check if the folder exists - and if not then create it.

Currently it's quite onerous to do a directory create - especially with all the error trapping to make this production safe - and everyone is reinventing the wheel in their own companies.

Given how common this need is, could we add a tool that allows you to check for the existence of a directory and attempt to create it (with nested directories and useful status / error descriptions to act upon)?
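For reference, the behaviour being asked for looks roughly like the following Python sketch (for example, something one might run today from a Python tool or an external script). The function name `ensure_directory` and the three status strings are hypothetical choices for illustration, not an existing Alteryx feature.

```python
from pathlib import Path
from typing import Tuple


def ensure_directory(path: str) -> Tuple[str, str]:
    """Check for a directory and create it (including parents) if missing.

    Returns (status, detail), where status is 'exists', 'created' or 'error'
    and detail is the path or an error description to act upon downstream.
    """
    target = Path(path)
    try:
        if target.is_dir():
            return "exists", str(target)
        # parents=True creates nested directories in one call.
        target.mkdir(parents=True, exist_ok=True)
        return "created", str(target)
    except OSError as exc:
        # Covers permission problems, invalid paths, and the case where
        # a file with the same name is already present.
        return "error", f"{type(exc).__name__}: {exc}"
```

Returning a status instead of raising makes it easy to branch on the result, which is the "useful status / error descriptions" part of the request.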

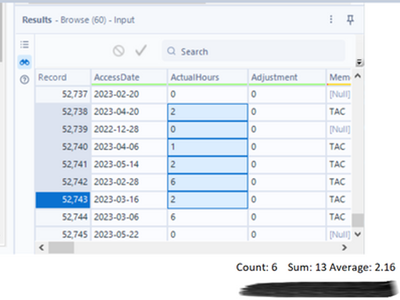

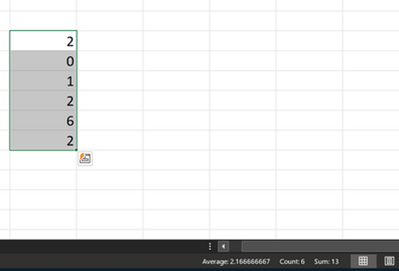

Alteryx should seriously consider incorporating certain Excel features into its Browse tool, as they would greatly enhance usability and functionality.

Currently, when selecting specific records in the Browse tool, users are unable to obtain important metrics such as sum, average, or count without resorting to additional steps, such as adding a Summarize tool or filters.

A concise bar below the results window that shows these statistics for the current selection would be immensely beneficial to users and would take the Browse tool to the next level.

By implementing this enhancement, Alteryx would make a significant impact and establish the Browse tool as a must-have resource.
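The statistics in question are cheap to compute. A minimal sketch in Python, assuming the selected cells arrive as a plain list of values (the name `selection_summary` is hypothetical):

```python
from statistics import mean


def selection_summary(values):
    """Summarise a selection the way a spreadsheet status bar does.

    Non-numeric cells (text, None, booleans) count towards `count` only.
    """
    numeric = [
        v for v in values
        if isinstance(v, (int, float)) and not isinstance(v, bool)
    ]
    return {
        "count": len(values),
        "numeric_count": len(numeric),
        "sum": sum(numeric) if numeric else None,
        "average": mean(numeric) if numeric else None,
    }
```

For a selection such as `[10, 20, None, "x", 30]` this reports a count of 5, a sum of 60 and an average of 20.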

Currently the Cross Tab tool automatically sorts alphabetically by the "New Column Headers" field. I often have to output data with dates across the columns, and therefore have to do a cross tab to achieve this. The problem is that when the dates are formatted with month names, the Cross Tab tool sorts them in alphabetical order instead of date order (i.e. Apr, Aug, Dec, etc. vs Jan, Feb, Mar). To get around this issue, I have to use a Dynamic Rename tool. It would be great if there were a way to choose the order of the cross tab columns (e.g. in the order of the data, alphabetical, by another field, etc.).
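The two orderings mentioned here can be sketched in a few lines of Python. This only illustrates the desired column order, not how the Cross Tab tool is implemented; `calendar_order` and `data_order` are hypothetical names, and the month list assumes English three-letter abbreviations.

```python
MONTHS = ["Jan", "Feb", "Mar", "Apr", "May", "Jun",
          "Jul", "Aug", "Sep", "Oct", "Nov", "Dec"]


def calendar_order(headers):
    """Sort month-name headers by calendar position instead of alphabetically.

    Headers that are not month abbreviations go last, in alphabetical order.
    """
    rank = {month: position for position, month in enumerate(MONTHS)}
    return sorted(headers, key=lambda h: (rank.get(h, len(MONTHS)), h))


def data_order(values):
    """Return distinct headers in the order they first appear in the data."""
    return list(dict.fromkeys(values))
```

With these, the alphabetical list `["Apr", "Aug", "Dec", "Feb", "Jan", "Mar"]` comes back from `calendar_order` as Jan, Feb, Mar, Apr, Aug, Dec.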

The Source field of the field metadata is very useful, but has some problems.

- It is repetitious. A long connection string repeated for many fields from the same source can bloat the workflow file to more than 10 MB; with the Source values removed, the same file is around 0.5 MB.

- It exposes sensitive information about a company's infrastructure, such as server names, ports, user ids, and proprietary data structures.

I first started paying attention when we found a user's password in the metadata because they had passed it as a string to the Dynamic Input Tool (separate Idea submitted for that - LINK). Then when I had to share an App with the Alteryx Support team for support with an issue, I thought to check the metadata, and I noticed that the file was too big and was exposing information that I would not normally share with another company.

I'm not sure how you want to handle this, but here are some thoughts:

- Default the Source field to 'off' and provide users the option to turn it 'on' in the workflow/app settings.

- Provide a mechanism to strip the 'Source' field at time of saving or exporting the workflow.

- If nothing else, provide education to users on the implications of including this information in the file.
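As a stopgap, the second option above can be approximated outside Designer. The sketch below is a rough illustration only: it assumes the workflow file is XML in which field metadata is stored as `Field` elements carrying a `source` attribute, and the function name `strip_field_sources` is hypothetical. Check a copy of your own file before relying on it.

```python
import xml.etree.ElementTree as ET


def strip_field_sources(workflow_xml: str) -> str:
    """Remove the `source` attribute from every Field element.

    Assumption: metadata is stored as <Field name=... source=... type=.../>.
    Other attributes and elements are left untouched.
    """
    root = ET.fromstring(workflow_xml)
    for field in root.iter("Field"):
        field.attrib.pop("source", None)
    return ET.tostring(root, encoding="unicode")
```

Run it on a copy of the file rather than the original, since the serializer may not preserve the original formatting or comments exactly.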

Thanks for listening!

Cameron

I think it would be great to have a tool that allows you to update a dataset with another dataset. For example, this could be used in updating an archive table on a daily basis as data changes. Having a tool available that streamlines this data operation would be helpful to simplify workflows.

In the tool, you would be given the option to select your primary key fields, which are the fields used to identify records. Additionally, you have the option to perform an insert, modify, or delete operation, according to the primary key fields that you choose in the configuration.
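A minimal sketch of the logic such a tool might apply, written in Python over lists of dictionaries. The function name `sync_by_key` and its `insert` / `modify` / `delete` flags are hypothetical and only mirror the configuration described above.

```python
def sync_by_key(target, incoming, key_fields,
                insert=True, modify=True, delete=False):
    """Update `target` rows from `incoming` rows matched on `key_fields`.

    insert: add incoming rows whose key is not in the target.
    modify: overwrite matching target rows with incoming values.
    delete: drop target rows whose key is missing from incoming.
    """
    def key_of(row):
        return tuple(row[field] for field in key_fields)

    incoming_by_key = {key_of(row): row for row in incoming}
    result = []
    for row in target:
        key = key_of(row)
        if key in incoming_by_key:
            merged = {**row, **incoming_by_key[key]} if modify else dict(row)
            result.append(merged)
        elif not delete:
            result.append(dict(row))

    if insert:
        existing = {key_of(row) for row in target}
        result.extend(
            dict(row) for key, row in incoming_by_key.items()
            if key not in existing
        )
    return result
```

For example, with `key_fields=["id"]`, an incoming row for an existing `id` replaces its values, a row with a new `id` is appended, and with `delete=True` any archived `id` that no longer appears in the incoming data is removed.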

Obviously this is something that anybody could create a macro for if they wanted to. But it would be nice to have a tool in place so that we don't have to worry about it. I think this would be a nice use case to bolster Alteryx usage as a data engineering tool, for relational database management in particular.

Syntax Highlighting Idea:

Similar to the Formula Tool, allow the Multi-Field Formula tool (and other similar tools) to have Syntax Highlighting, so that users can easily tell whether the formula being entered has any errors.

Variable Autofill Idea:

Similar to how the Formula Tool provides a list of columns as you begin to type them, allow the Multi-Field Formula tool (and other similar tools) to autofill variables such as [_CurrentField_] or [_CurrentFieldName_] as you begin to type them.

Hello!

I am making this idea request in response to this question:

https://community.alteryx.com/t5/Alteryx-Designer-Discussions/Is-it-possible-to-enable-Performance-P...

Currently, one of my favourite settings to enable in Alteryx is performance profiling, as I get to see exactly how much time is being used at each step, and it's a quick reminder to double check for optimisation those tools that take a while. However, I have to enable this on every new workflow that I open, as the setting only applies to the current workflow, and it can be frustrating to execute a large workflow only to realise, after waiting for it to run, that this setting was not enabled.

What I'd like to propose is an extra set of settings within the User Settings, Defaults tab (which is currently):

To something like:

Which would simply enable these settings as a default, when a new workflow is made.

Let me know what you think! I think a couple of the other settings in there could see use, especially as the AMP engine develops and those who want to see all macro messages, for example.

Cheers,

TheOC

Add the ability to lock a comment box's size, shape, position (send to back), and location on the canvas. This would allow a developer to use a template when creating workflows without accidentally selecting and/or adjusting these attributes. It would also allow a user to put a tool over the top of the comment box without fear of messing up the visual display of the workflow or of the tool getting hidden underneath the comment box.

Alteryx Server was recently updated to allow TLS-mediated connections to the MongoDB persistence layer. This allowed us to switch off of the embedded MongoDB to a highly-available MongoDB Atlas cluster. To our surprise after the switch, when we went to edit our workflows that make use of the persistence layer's data (Server Usage Report, etc.) to hit the new Atlas cluster, we found that the MongoDB Input tool does not support TLS connections. This absolutely needs to be changed. Based on organizational constraints, Atlas is our only option for a HA persistence layer. We absolutely have to have TLS support for the MongoDB Input tool. There is no other way for us to natively query our server persistence layer in Designer. Please bring the MongoDB Input tool into alignment with the MongoDB connections that are supported by Alteryx Server.
