Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> at least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
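Until grayscale input is supported, one possible workaround for the last point is to replicate the single intensity channel three times before handing the images to the tool. The sketch below is only an illustration in plain Python: the nested-list image layout and the `gray_to_rgb` name are assumptions, not anything the Image Recognition Tool exposes.

```python
def gray_to_rgb(image):
    """Replicate each grayscale pixel value across three channels (R, G, B)."""
    return [[(p, p, p) for p in row] for row in image]


# A tiny 2x3 "image" of 8-bit intensities, standing in for an MNIST digit.
gray = [
    [0, 128, 255],
    [64, 32, 16],
]
rgb = gray_to_rgb(gray)
print(rgb[0][1])  # (128, 128, 128)
```

The resulting image looks identical to the original, it simply carries the same value in all three channels, so a model that expects RGB input can read it.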

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool, it is certainly perfectible, but very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible thanks to image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


Is there a reason why Alteryx does not include hierarchical clustering?

I know it is somewhat slow, especially with huge data sets, since the computational effort grows cubically with the number of records. But when you need to do two-step clustering,

"creating more than enough k-means clusters and joining cluster centers with hierarchical clustering", it seems to be a must...

P.S. KNIME, SPSS Modeler, SAS, and RapidMiner have it already...
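To make the two-step idea concrete, here is a minimal sketch in plain Python: k-means deliberately over-segments the data, then an agglomerative pass merges the resulting centers. The function names, the toy data, the evenly spaced initial centers, and the choice of single linkage are all assumptions made for illustration; a real tool would offer several linkage methods.

```python
import math
import random


def kmeans(points, k, iters=20):
    """Plain Lloyd's algorithm; starts from k evenly spaced points."""
    step = len(points) // k
    centers = points[::step][:k]
    for _ in range(iters):
        groups = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centers[i]))
            groups[nearest].append(p)
        # Recompute each center as the mean of its group (keep it if empty).
        centers = [
            tuple(sum(axis) / len(g) for axis in zip(*g)) if g else centers[i]
            for i, g in enumerate(groups)
        ]
    return centers


def merge_centers(centers, n_final):
    """Agglomerative step: merge the two closest groups (single linkage)."""
    groups = [[c] for c in centers]
    while len(groups) > n_final:
        best = None
        for i in range(len(groups)):
            for j in range(i + 1, len(groups)):
                d = min(math.dist(a, b) for a in groups[i] for b in groups[j])
                if best is None or d < best[0]:
                    best = (d, i, j)
        _, i, j = best
        groups[i].extend(groups.pop(j))
    return groups


# Toy data: two well-separated blobs of 20 points each.
rng = random.Random(0)
blob_a = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(20)]
blob_b = [(rng.uniform(9, 11), rng.uniform(9, 11)) for _ in range(20)]
points = blob_a + blob_b

centers = kmeans(points, k=6)                 # step 1: over-segment
groups = merge_centers(centers, n_final=2)    # step 2: join the centers
print([len(g) for g in groups])
```

Because the hierarchical step only sees the k centers rather than every record, its cost depends on k, not on the size of the data set, which is what makes the combination practical.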

-

Category Predictive

-

Desktop Experience

There is a great functionality in Excel that lets users "seek" a value that makes whatever chain of formulas you might have work out to a given value. Here's what Microsoft explains about goal seek: https://support.office.com/en-us/article/Use-Goal-Seek-to-find-a-result-by-adjusting-an-input-value-...

My specific example was this:

In the Excel file (attached), all you have to do is click on the highlighted blue cell, select the “Data” tab up top, then “What-If Analysis”, and finally “Goal Seek.” Then you set the dialog box up to look like this:

Set cell: G9

To Value: 330

By changing cell: J6

And hit “Okay.” Excel then iteratively finds the value for the cell J6 that makes the cell G9 equal 330. Can I build a module that will do the same thing? I’m figuring I wouldn’t have to do it iteratively, if I could build the right series of formulas/commands. You can see what I’m trying to accomplish in the formulas I’ve built in Excel, but essentially I’m trying to build a model that will tell me what the % Adjustment rate should be for the other groups when I’ve picked the first adjustment rate, and the others need to change proportionally to their contribution to the remaining volume.

As far as I can see, there doesn't seem to be a way to do this in Alteryx. I hate to think there is something that Excel can do that Alteryx can't!
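For readers who want to see what such an iterative search involves, here is a minimal goal-seek sketch in Python using bisection. This is not necessarily the method Excel uses internally, and the `model` function below is a hypothetical stand-in for the chain of formulas in the attached workbook, which is not reproduced here.

```python
def goal_seek(f, target, lo, hi, tol=1e-9, max_iter=200):
    """Find x in [lo, hi] with f(x) == target, by bisection.

    Assumes f is continuous and that f(lo) and f(hi) lie on opposite
    sides of the target.
    """
    def g(x):
        return f(x) - target

    g_lo, g_hi = g(lo), g(hi)
    if g_lo * g_hi > 0:
        raise ValueError("target is not bracketed by [lo, hi]")
    for _ in range(max_iter):
        mid = (lo + hi) / 2
        g_mid = g(mid)
        if abs(g_mid) < tol or (hi - lo) / 2 < tol:
            return mid
        if g_lo * g_mid <= 0:
            hi = mid
        else:
            lo, g_lo = mid, g_mid
    return (lo + hi) / 2


# Hypothetical model: the output (think "G9") grows linearly with an
# adjustment rate (think "J6"). The real workbook formulas would go here.
def model(rate):
    return 300 * (1 + rate)


rate = goal_seek(model, target=330, lo=0.0, hi=1.0)
print(round(rate, 6))  # 0.1
```

In a workflow, the same loop could be expressed with an iterative macro; when the formulas are simple enough to invert algebraically, as the post suspects, no iteration is needed at all.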

-

Category Data Investigation

-

Category Predictive

-

Desktop Experience

This request is largely based on the implementation found in AzureML (take their free trial and check out the Deep Convolutional and Pooling NN example from their gallery). It allows you to specify custom convolutional and pooling layers in a deep neural network. This is an extremely powerful machine learning technique that could be tricky to implement, but a great first step could be, for example, a macro wrapped around something in Python, where these networks are currently easier to implement than in R.

-

Category Predictive

-

Desktop Experience

I would like to suggest adding a widget that encapsulates an R script able to perform outlier detection, something similar to what Netflix did.
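As a rough idea of what such a widget could wrap, the sketch below flags outliers with a median and MAD (median absolute deviation) score. This is a simple stand-in chosen for illustration, not the method from the Netflix work the post refers to (the reference is missing from the post), and the function name and threshold are assumptions.

```python
import statistics


def mad_outliers(values, threshold=3.5):
    """Return indices of values whose robust z-score exceeds the threshold."""
    med = statistics.median(values)
    mad = statistics.median([abs(v - med) for v in values])
    if mad == 0:
        return []  # no spread around the median, nothing can be scored
    # 0.6745 rescales the MAD so the score is comparable to a z-score
    # when the data are roughly normal.
    return [
        i for i, v in enumerate(values)
        if 0.6745 * abs(v - med) / mad > threshold
    ]


readings = [10, 12, 11, 13, 12, 95, 11, 10, 12]
print(mad_outliers(readings))  # [5]
```

Using the median instead of the mean keeps the extreme value itself from distorting the baseline it is compared against.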

Thank you.

Regards,

Cristian

-

Category Predictive

-

Desktop Experience

XGBoost regression is now the benchmark for every Kaggle competition and seems to consistently outperform random forest, spline regression, and all of the more basic models. For those of us using predictive modeling on a regular basis in our actual work, this tool would allow for a quick improvement in our model accuracy. And I think, from a marketing standpoint, having a core group of users competing in Kaggle using Alteryx would be a great way to show off Alteryx's power.

It is readily available as an R package: https://cran.r-project.org/web/packages/xgboost/index.html

-

Category Predictive

-

Desktop Experience

Hello! Almost all statistical software allows the analyst to use either a pairwise or a listwise option when applying clustering techniques. This option affects only how the inner distance matrix is built; after that, whichever algorithm you choose is performed. However, in Alteryx, [K-Centroids] does listwise by default, classifying only those records where the selected variables have no nulls.

Please consider adding this option!

PS: the difference is that pairwise builds the distance between two variables from those records that have no nulls in both variables, while listwise builds the distance matrix only from records that are complete (non-null) in all variables of interest, rather than calculating distances one pair at a time.
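The difference in how many records each option keeps can be sketched as follows. This is a toy illustration in plain Python with made-up data; `None` stands in for a null, and the function names are assumptions.

```python
def listwise_rows(rows):
    """Keep only records that are complete in every variable."""
    return [r for r in rows if all(v is not None for v in r)]


def pairwise_rows(rows, i, j):
    """Keep records that are complete in variables i and j only."""
    return [
        (r[i], r[j]) for r in rows
        if r[i] is not None and r[j] is not None
    ]


# Five records, three variables; None marks a null.
rows = [
    (1.0, 2.0, 3.0),
    (2.0, None, 1.0),
    (None, 4.0, 5.0),
    (3.0, 1.0, None),
    (4.0, 3.0, 2.0),
]

print(len(listwise_rows(rows)))        # 2
print(len(pairwise_rows(rows, 0, 1)))  # 3
print(len(pairwise_rows(rows, 0, 2)))  # 3
```

Listwise deletion leaves two usable records here, while each pair of variables still has three, which is why the pairwise option matters when nulls are scattered across fields.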

-

Category Data Investigation

-

Category Predictive

-

Desktop Experience

I am trying to run batch regressions on a pretty sizable set of data: about 1M distinct groups, each with 30-500 x,y pairs.

A batch macro with a linear regression works OK, but it is really slow. It started at about 2-3s per regression. After stripping out a bunch of reporting from the macro, I am down to ~2s. This still feels quite slow compared to something purpose-built.

Has anyone experimented with higher-speed versions that just dump out m, b, and r2?
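For simple one-predictor regressions, the slope, intercept, and r2 have closed forms that need only running sums per group, so all groups can be fitted in a single pass over the data. The sketch below assumes records arrive as `(group, x, y)` tuples; the function name and that layout are illustrative assumptions, not an existing Alteryx macro.

```python
from collections import defaultdict


def fit_groups(records):
    """Fit y = m*x + b per group in one pass; returns {group: (m, b, r2)}."""
    # Per group: n, sum(x), sum(y), sum(x*x), sum(x*y), sum(y*y)
    sums = defaultdict(lambda: [0, 0.0, 0.0, 0.0, 0.0, 0.0])
    for group, x, y in records:
        s = sums[group]
        s[0] += 1
        s[1] += x
        s[2] += y
        s[3] += x * x
        s[4] += x * y
        s[5] += y * y

    results = {}
    for group, (n, sx, sy, sxx, sxy, syy) in sums.items():
        dx = n * sxx - sx * sx
        dy = n * syy - sy * sy
        if dx == 0:
            continue  # all x identical: slope is undefined
        cov = n * sxy - sx * sy
        m = cov / dx
        b = (sy - m * sx) / n
        r2 = cov * cov / (dx * dy) if dy != 0 else float("nan")
        results[group] = (m, b, r2)
    return results


records = [
    ("a", 0, 1), ("a", 1, 3), ("a", 2, 5),
    ("b", 0, 0), ("b", 1, 1), ("b", 2, 1),
]
print(fit_groups(records))
```

One caveat: running sums of squares can lose precision when values are large relative to their spread, so centering x and y per group first may be worthwhile on real data.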

-

Category Predictive

-

Desktop Experience

Idea:

A functionality added to the Impute Values tool for multiple imputation and maximum likelihood imputation of fields with values missing at random would be very useful.

Rationale:

Missing data are a problem, and advanced techniques are complicated. One great idea in statistics is multiple imputation: filling the gaps in the data not with the average, median, mode, or user-defined static values, but with plausible values that take other fields into account.
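A heavily simplified sketch of the idea follows: missing `y` values are predicted from `x` with a regression fitted on the complete records, random noise is added so each imputed value is plausible rather than fixed, and the process is repeated to produce several completed data sets. A full multiple-imputation procedure such as PROC MI would also draw the regression parameters themselves and pool the variances; the function names and data here are assumptions for illustration.

```python
import random
import statistics


def fit_line(pairs):
    """Least-squares line through (x, y) pairs, plus residual spread."""
    xs = [x for x, _ in pairs]
    ys = [y for _, y in pairs]
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    m = sum((x - mx) * (y - my) for x, y in pairs) / sxx
    b = my - m * mx
    resid_sd = statistics.pstdev([y - (m * x + b) for x, y in pairs])
    return m, b, resid_sd


def multiply_impute(rows, n_imputations=5, seed=0):
    """Return n completed copies of rows, each with different random draws."""
    rng = random.Random(seed)
    observed = [(x, y) for x, y in rows if y is not None]
    m, b, sd = fit_line(observed)
    return [
        [(x, y if y is not None else m * x + b + rng.gauss(0, sd))
         for x, y in rows]
        for _ in range(n_imputations)
    ]


rows = [(1, 2.1), (2, 3.9), (3, None), (4, 8.2), (5, None), (6, 12.1)]
completed = multiply_impute(rows)

# Pool a statistic of interest (here the mean of y) across the data sets.
per_set_means = [statistics.fmean([y for _, y in ds]) for ds in completed]
pooled_mean = statistics.fmean(per_set_means)
print(round(pooled_mean, 3))
```

The spread of the estimate across the completed data sets is what carries the uncertainty about the missing values, which a single static fill-in cannot express.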

SAS has the PROC MI procedure; here is a page detailing the usage with examples: http://www.ats.ucla.edu/stat/sas/seminars/missing_data/mi_new_1.htm

Also there is PROC CALIS for maximum likelihood here...

The same useful tool exists in SPSS as well: http://www.appliedmissingdata.com/spss-multiple-imputation.pdf

Best

-

Category Macros

-

Category Predictive

-

Desktop Experience

There is a web-hosted trial where anyone can get hands-on experience with Alteryx tutorials without even downloading the tool.

That's awesome... http://goo.gl/dpSoe2

It may be a nice idea to:

1) either start separate "Alteryx-Kaggle" instances with data sets specific to each Kaggle competition, so that anyone who wants to try it out can have a go with those well-known examples through the Alteryx site,

2) or, even better, have a partnership with Kaggle so that anyone can have their own Alteryx trial per specific competition on the Kaggle website...

I'm sure this will draw a lot of attention...

Rationale;

You'll immediately have a greater reach in the Kaggle community: some data hobbyists, and CS, IE students and academics (who will eventually end up doing lots of data blending when they are hired by top-notch firms)...

-

Category Interface

-

Category Predictive

-

Desktop Experience

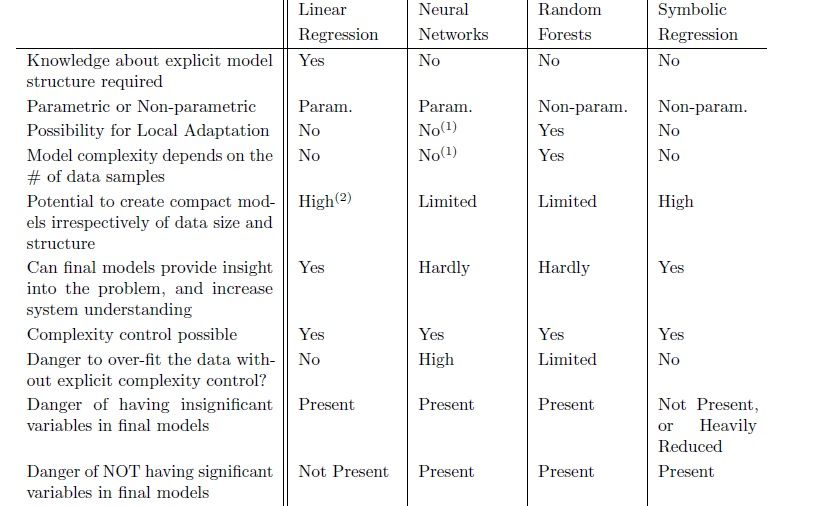

Idea:

In forecasting and in commercial/SME risk scoring there is a need to try a vast number of algebraic equations, which is a very cumbersome process. Let's add symbolic regression as a new competitive capability.

Rationale:

Summations, ratios, power transforms, and all combinations of the like need to be tested as new variables for a forecasting or prediction model. Doing this by hand is a tough and long business... And there is always a possibility of skipping a valuable combination.

Symbolic regression is a novel technique for automatically generating algebraic equations with the use of genetic programming.

In each generation a variable is selected and the resulting equation is checked to see whether it discriminates the target variable at hand. In each subsequent step, frequently observed variables are more likely to be selected.

Benefit for clients:

This method mainly produces variables with nonlinear relationships. It is a technique that will help in corporate/commercial/SME risk modelling, such that powerful risk models are generated from a short list of B/S and P/L based algebraic equations.

There are potential use cases in algorithmic trading as well...

There are 3 very interesting world problems solved with symbolic regression here.

A very relevant thesis by sean Wouter is attached as a pdf document for your reading pleasure...

R side of things:

I've found Rgp package for genetic programming, here is a link.

Competition:

I haven't seen anything similar in SAS or SPSS, but there is this: http://www.nutonian.com/products/eureqa/

Also there is Bruce Ratner's page

-

Category Predictive

-

Category Preparation

-

Desktop Experience

Idea:

Some well known scoring methods use optimal binned variables for added robustness. Let's add this capability to Alteryx.

Rationale:

Here's a basic link on why to do that; http://documents.software.dell.com/statistics/textbook/optimal-binning

Current status in Alteryx, as far as I'm aware:

The Tile tool, or the Multi-Field Binning tool for completing the same task as the Tile tool on multiple fields, splits the variables by 5 methods:

Equal Records or Intervals or Sums

Smart Tile

Unique Value

Manual

Unfortunately, "equal something" binnings are a bad idea, as the values are categorized "blindly", irrespective of the effects on the predictive power of the models.

What to do:

What's needed is to bin both numerical and categorical variables optimally, such that the Weight of Evidence (WoE) values present a monotone increasing or decreasing pattern, or at most a V- or U-shaped "convex" structure.
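For reference, the WoE of a bin is commonly computed as the log of the ratio between the bin's share of "goods" and its share of "bads". The sketch below uses that convention (some texts flip the ratio, which only changes the sign) and checks the monotonicity requirement; the counts are made up, and every bin is assumed to contain at least one good and one bad record.

```python
import math


def weight_of_evidence(bins):
    """bins: ordered list of (good_count, bad_count). Returns WoE per bin."""
    total_good = sum(g for g, _ in bins)
    total_bad = sum(b for _, b in bins)
    return [
        math.log((g / total_good) / (b / total_bad))
        for g, b in bins
    ]


def is_monotone(values):
    """True if the sequence never decreases or never increases."""
    pairs = list(zip(values, values[1:]))
    return all(a <= b for a, b in pairs) or all(a >= b for a, b in pairs)


# Three candidate bins of one variable, as (goods, bads).
bins = [(100, 50), (200, 40), (300, 10)]
woe = weight_of_evidence(bins)
print([round(w, 3) for w in woe])
print(is_monotone(woe))  # True
```

An optimal-binning tool would search over cut points and keep a candidate only if a check like `is_monotone` passes, merging adjacent bins when it does not.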

Quick win:

Without constraining ourselves to monotone or convex cases, the easiest practice would be to run a C4.5 or CHAID tree algorithm (which produces multiple splits rather than the binary splits of CART) on a single variable, with the target as the dependent variable; the resulting nodes will be the bins we are looking for. Doing this for multiple variables at once is the key to the tool to be built.

Clients:

This capability is sought by risk management departments building robust, stable, Basel-compliant models in the financial industry, especially by banks.

-

Category Predictive

-

Category Preparation

-

Category Transform

-

Desktop Experience

Add the capability to input/output R datasets via the Input/Output tools, alongside all the other data formats (like CSV, Excel, SAS, SPSS, etc.).

-

Category Input Output

-

Category Predictive

-

Data Connectors

-

Desktop Experience

-

Category Predictive

-

Desktop Experience

The values of coefficients/Residuals/Errors would be very useful in building macros for techniques like Missing Value Analysis which can't be done in Alteryx as of now.

-

Category Predictive

-

Desktop Experience

I am not sure if this capability exists, but I assume it does not.

We have a need to optimize a Linear Program (LP) model that consists of a system of equations and has both an objective function and a series of constraints. This optimization capability is one that SAS offers and that Alteryx currently does not have.

If the capability is currently not available, is it on the product roadmap?
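To illustrate the kind of problem meant here, the sketch below solves a tiny two-variable LP by brute-force vertex enumeration: it intersects every pair of constraint boundaries, keeps the feasible points, and picks the one with the best objective value. This only works for small, bounded problems and is not how production solvers operate (they use simplex or interior-point methods); the function name and the example numbers are assumptions.

```python
from itertools import combinations


def solve_lp_2d(c, constraints, eps=1e-9):
    """Maximize c[0]*x + c[1]*y subject to a*x + b*y <= r for each (a, b, r).

    Assumes the feasible region is bounded and non-empty, so the optimum
    lies at a vertex. Returns (value, x, y), or None if no vertex is feasible.
    """
    best = None
    for (a1, b1, r1), (a2, b2, r2) in combinations(constraints, 2):
        det = a1 * b2 - a2 * b1
        if abs(det) < eps:
            continue  # parallel boundaries never intersect
        # Cramer's rule for the intersection of the two boundary lines.
        x = (r1 * b2 - r2 * b1) / det
        y = (a1 * r2 - a2 * r1) / det
        if all(a * x + b * y <= r + eps for a, b, r in constraints):
            value = c[0] * x + c[1] * y
            if best is None or value > best[0]:
                best = (value, x, y)
    return best


# Maximize 3x + 2y subject to:
#   x + y <= 4,  x + 3y <= 6,  x <= 3,  x >= 0,  y >= 0
constraints = [
    (1, 1, 4),
    (1, 3, 6),
    (1, 0, 3),
    (-1, 0, 0),   # x >= 0 written as -x <= 0
    (0, -1, 0),   # y >= 0 written as -y <= 0
]
value, x, y = solve_lp_2d((3, 2), constraints)
print(value, x, y)
```

The objective function and the constraint list map directly onto the two ingredients named in the post; a dedicated optimization tool would accept the same inputs for many more variables.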

Thanks,

Ricardo

-

Category Predictive

-

Desktop Experience

I have been using the outputs from Spline Regression to facilitate analysis of demographic data (specifically Department of Labor Quarterly Employment data). I have data from 1992Q1 to 2014Q1 and use Spline Regression to get fitted values for each quarter, with the predictors being the year/quarter, the year/quarter multiplied by a dummy variable for each of the 4 US Presidents, and a dummy variable for each president.

This lets me compare results across various groupings, by geography and other levels as well as by the BLS aggregation level. I can analyze raw data or have the values to be fitted indexed to 1992Q1.

I use the default settings for Spline and it builds the best fit, including the periods at which the nodes between spline sections fall. To help interpret the results, though, I use the output to compare the actual vs. fitted values (e.g. employment level) and then look at the changes by quarter.

With the spline regression building the best model with optimal line segments, the results make it possible to see how employment progress or regression correlates with presidential terms of office, or to see the specific impacts of economic recessions on employment data.

I can supply an example of the process, if anyone is interested.

I'd appreciate any comments and/or suggestions to improve the process or interpret the results.

-

Category Predictive

-

Desktop Experience
