Alteryx Designer Desktop Ideas


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by showing the image-size (dimension) constraints next to each of the pre-trained models;

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images);

> and, at the very least, by allowing the tool to accept black-and-white images (I wanted to test it on MNIST, but the tool says it requires RGB images).
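For the second suggestion, a balanced split takes only a few lines. This is a minimal Python sketch, not how the Image Recognition tool works internally: it assumes the training data is a list of hypothetical `(image_path, label)` pairs, downsamples every label to the size of the smallest one, and then applies the same train/validation fraction to each label.

```python
import random
from collections import defaultdict


def balanced_split(samples, train_fraction=0.8, seed=42):
    """Split (image_path, label) pairs so every label has the same number of images.

    Each label is downsampled to the size of the smallest label, then split
    into training and validation sets using the same fraction for every label.
    """
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)

    per_label = min(len(paths) for paths in by_label.values())  # size of the smallest class
    n_train = int(per_label * train_fraction)
    rng = random.Random(seed)  # fixed seed so the split is reproducible

    train, validation = [], []
    for label in sorted(by_label):
        chosen = rng.sample(by_label[label], per_label)  # random subset, no replacement
        train.extend((path, label) for path in chosen[:n_train])
        validation.extend((path, label) for path in chosen[n_train:])
    return train, validation
```

With 10 cat images and 6 dog images, each label is cut to 6 images, giving 4 + 4 for training and 2 + 2 for validation. Downsampling throws data away; oversampling the smaller labels is the usual alternative when images are scarce.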

Question: do you plan to let users choose between CPU and GPU processing in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will help many more people understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


If the workflow configuration had an option to run for 'x' iterations, debugging macros would be a lot easier. My current method is to copy the results, change the inputs, and repeat until I find the problem, which feels very manual.

- Category Macros
- Desktop Experience
- User Settings

Idea:

Adding multiple imputation and maximum likelihood imputation to the Impute values tool, for fields whose values are missing at random, would be very useful.

Rationale:

Missing data are a common problem, and the advanced techniques for handling them are complicated. One great idea in statistics is multiple imputation: filling the gaps in the data not with the mean, median, mode or a user-defined static value, but with plausible values that take the other fields into account.

SAS has PROC MI; here is a page detailing its usage with examples: http://www.ats.ucla.edu/stat/sas/seminars/missing_data/mi_new_1.htm

There is also PROC CALIS for maximum likelihood here...

The same useful functionality exists in SPSS as well: http://www.appliedmissingdata.com/spss-multiple-imputation.pdf

Best
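To make the idea concrete, here is a deliberately simplified Python sketch of multiple imputation for one numeric field `y` predicted from one field `x`. Each of the `m` imputations fills the gaps with a regression prediction plus random noise, and the `m` estimates of the mean are pooled with Rubin's rules. The function names are hypothetical, and unlike PROC MI this version does not also draw the regression coefficients from their posterior, so it understates uncertainty somewhat.

```python
import random
import statistics


def impute_once(xs, ys, rng):
    """One stochastic regression imputation: fill missing y (None) from x plus noise."""
    observed = [(x, y) for x, y in zip(xs, ys) if y is not None]
    mx = statistics.fmean([x for x, _ in observed])
    my = statistics.fmean([y for _, y in observed])
    sxx = sum((x - mx) ** 2 for x, _ in observed)
    sxy = sum((x - mx) * (y - my) for x, y in observed)
    slope = sxy / sxx
    intercept = my - slope * mx
    # Spread of the residuals around the fitted line, used as the noise level.
    resid_sd = statistics.stdev([y - (intercept + slope * x) for x, y in observed])
    return [
        y if y is not None else intercept + slope * x + rng.gauss(0, resid_sd)
        for x, y in zip(xs, ys)
    ]


def mi_mean(xs, ys, m=5, seed=0):
    """Estimate the mean of y from m imputed datasets, pooled with Rubin's rules."""
    rng = random.Random(seed)
    estimates, within = [], []
    for _ in range(m):
        filled = impute_once(xs, ys, rng)
        estimates.append(statistics.fmean(filled))
        within.append(statistics.variance(filled) / len(filled))  # squared std. error of the mean
    q_bar = statistics.fmean(estimates)   # pooled point estimate
    u_bar = statistics.fmean(within)      # average within-imputation variance
    b = statistics.variance(estimates)    # between-imputation variance
    return q_bar, u_bar + (1 + 1 / m) * b  # estimate and total variance
```

The between-imputation term `b` is what single imputation with a mean or median misses: it reflects how much the answer moves when the missing values are filled in differently.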

- Category Macros
- Category Predictive
- Desktop Experience

I would like to see a Run Workflow tool that can run a specified workflow directly from Designer. It would work similarly to the Run Command tool, but would instead take the Analytical App filename (.yxmc, etc.) and an optional Analytical App values filename (.yxwv) as parameters.

Currently you need a Scheduler or Server licence to run a workflow via AlteryxEngineCmd.exe with the Run Command tool, but there are legitimate use cases that require a workflow to be determined dynamically within a larger workflow. I am aware of the CReW Runner macros, but they require an additional executable to be installed, which is difficult in locked-down environments.

My example is a workflow that downloads a file list from a web service. It has a large number of interface items, and 80% of the workflow is standardized. However, the initial processing of the files is not, so you can either create a workflow for each file type and map all the interface items again, include every handled file type in one massive workflow, or create sub-workflows that transform the files, which keeps repeated code to a minimum.
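The dispatch pattern in this example can be sketched outside Designer. This Python sketch assumes a licence that allows AlteryxEngineCmd.exe to be run (as noted above, Scheduler or Server); the install path, the sub-workflow paths and the extension-to-workflow mapping are all hypothetical, and passing an app values file as a second positional argument is an assumption to check against the documentation for your version.

```python
import subprocess
from pathlib import PureWindowsPath

# Hypothetical install location; adjust to your environment.
ENGINE_CMD = r"C:\Program Files\Alteryx\bin\AlteryxEngineCmd.exe"

# Hypothetical mapping from incoming file type to the sub-workflow that transforms it.
SUB_WORKFLOWS = {
    ".csv": r"C:\Workflows\Transform_CSV.yxmd",
    ".xlsx": r"C:\Workflows\Transform_Excel.yxmd",
}


def build_command(file_name, values_file=None):
    """Pick the sub-workflow for a file and build the engine command line."""
    ext = PureWindowsPath(file_name).suffix.lower()
    workflow = SUB_WORKFLOWS.get(ext)
    if workflow is None:
        raise ValueError(f"no sub-workflow registered for {ext!r}")
    cmd = [ENGINE_CMD, workflow]
    if values_file is not None:
        # Assumption: an app values file is passed as a second positional argument.
        cmd.append(values_file)
    return cmd


def run_sub_workflow(file_name, values_file=None):
    """Run the chosen sub-workflow and return (exit code, console output)."""
    result = subprocess.run(build_command(file_name, values_file),
                            capture_output=True, text=True)
    return result.returncode, result.stdout
```

Only the file-type-specific transform lives in the sub-workflows; the standardized 80% stays in the parent, which is the code-reuse benefit the idea is after.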

- Category Macros
- Desktop Experience

Can we get the Input tool to automatically convert long filenames to the 8.3 convention inside a macro?

I've written a batch macro that opens files individually in order to trap files that fail to open. However, when I pass in very long file names it fails: beyond a certain length the Input tool converts the path to 8.3 form, but that logic doesn't fire inside my macro.

Example filename:

\\ccogisgc1sat\d$\Dropbox (Clear Channel Outdoor)\Mapping\BWI MapInfo\Workspaces\Local\AEs\Archives\Cara\Sunrise Senior Living\Washington+DC_Adults+55++With+HHI+Of+$75,000++Who+Are+Caregiver+Of+Aging+Parent_Relative+Or+Planning+To+Shop+For+Nursing+Care_Assisted+Living_Retirem.TAB
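As a possible workaround inside the batch macro, the short name could be resolved before the path reaches the Input tool. This Python sketch calls the Win32 `GetShortPathNameW` function through `ctypes`; it assumes the threshold the Input tool uses is the classic 260-character `MAX_PATH`, which the post does not confirm. 8.3 name generation can be disabled on a volume, in which case the long name may come back unchanged, and a path already longer than `MAX_PATH` may need the `\\?\UNC\` prefix before the call succeeds.

```python
import sys

MAX_PATH = 260  # classic Win32 path-length limit, including the terminating null


def needs_short_path(path):
    """True if the path is too long for APIs that still enforce MAX_PATH."""
    return len(path) >= MAX_PATH


def to_short_path(path):
    """Return the 8.3 short form of an existing path on Windows, else the path unchanged."""
    if sys.platform != "win32":
        return path
    import ctypes

    get_short = ctypes.windll.kernel32.GetShortPathNameW
    size = get_short(path, None, 0)  # first call: required buffer size in characters
    if size == 0:  # call failed (e.g. path not found); fall back to the original
        return path
    buffer = ctypes.create_unicode_buffer(size)
    if get_short(path, buffer, size) == 0:
        return path
    return buffer.value
```

Because the helper returns its input unchanged on non-Windows systems or when the call fails, it is safe to apply to every path before opening it.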

- Category Input Output
- Category Macros
- Data Connectors
- Desktop Experience

When you right-click a macro tool (e.g. Google Analytics) in a workflow, you can choose which version of the tool to use. However, it does not indicate which version is already in use.

Why is this an issue for me?

I have a workflow with 15 instances of the Google Analytics tool. (I needed to call the API for each month of GA data and then join the results. I built it this way because of the restriction on the number of records.)

So when I update the Google Analytics tool, I have to do it 15 times. I'd like to be sure the update is needed before I start.

Alteryx Support confirmed that there is no way to tell which version of the macro is in use.
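Until Designer shows the version, the workflow file itself could be inspected. This Python sketch assumes that a .yxmd file is XML in which each macro tool carries an `<EngineSettings Macro="...">` element whose path identifies the macro (and, for versioned tools, its version folder); treat that layout as an assumption to verify against your own files. The function name is hypothetical.

```python
import xml.etree.ElementTree as ET
from collections import Counter


def macro_references(workflow_xml):
    """Count how many tools in a workflow point at each macro file."""
    root = ET.fromstring(workflow_xml)
    refs = Counter()
    for settings in root.iter("EngineSettings"):
        macro = settings.get("Macro")  # assumed attribute holding the macro path
        if macro:
            refs[macro] += 1
    return refs
```

If all 15 Google Analytics instances report the same path, they are on the same version, and a single count shows at a glance how many would need updating.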

- Category Connectors
- Category Macros
- Data Connectors
- Desktop Experience

When output is disabled, Alteryx's output tools are helpfully grayed out and include the message 'output has been disabled by the workflow properties.'

However, if a macro has an output, there is no visual indicator that output is disabled, even though the macro's output will also be suppressed by this workflow configuration.

Obviously, macros can be very complex: they could have both a file output and a macro output, or an optional file output, so they cannot be locked out entirely just because they contain an output.

To that end, I suggest applying some other kind of color-coding or shading to these tools, and adding a message to the interface of these macros that says something like "output has been disabled, this macro may not perform all of its functions".

I just spent about 10 minutes debugging why a macro wasn't working properly in one workflow but was working in another. It was because I had disabled output, which I wasn't thinking about because this particular macro uses the Render tool to produce a hyperlink. I wouldn't have spent more than 30 seconds on this if there had been some kind of visual indicator showing me what I was doing wrong!
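The check behind such an indicator is simple in principle: if output is disabled, flag every macro tool. This Python sketch assumes the workflow XML stores the setting as `<Properties><DisableAllOutput value="True"/>` and that macro tools are `<Node>` elements with an `<EngineSettings Macro="...">` child; both element names are assumptions, the function name is hypothetical, and the returned tool IDs stand in for the shading Designer could apply.

```python
import xml.etree.ElementTree as ET


def macros_affected_by_disabled_output(workflow_xml):
    """List tool IDs of macros whose outputs would be suppressed when output is disabled."""
    root = ET.fromstring(workflow_xml)
    flag = root.find(".//Properties/DisableAllOutput")  # assumed element name
    if flag is None or flag.get("value") != "True":
        return []  # output is enabled, nothing to warn about
    affected = []
    for node in root.iter("Node"):  # also visits tools nested in containers
        settings = node.find("EngineSettings")
        if settings is not None and settings.get("Macro"):
            affected.append(node.get("ToolID"))
    return affected
```

Flagging every macro, rather than only those containing an Output or Render tool, matches the idea's point that a macro's internals are not visible from the canvas.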

- Category Input Output
- Category Macros
- Data Connectors
- Desktop Experience

When I first started using Alteryx I barely used macros or the Runtime tab; now I use both a lot, but I can't use them together.

When working in a macro there is no Runtime tab, so while building and testing a macro you can't take advantage of any of its handy features. I assume a macro inherits the runtime settings of the workflow that calls it (I can't find anything in the Community or Help to confirm that), but that doesn't help while developing and testing.

- Category Macros
- Desktop Experience

Hi there Alteryx team,

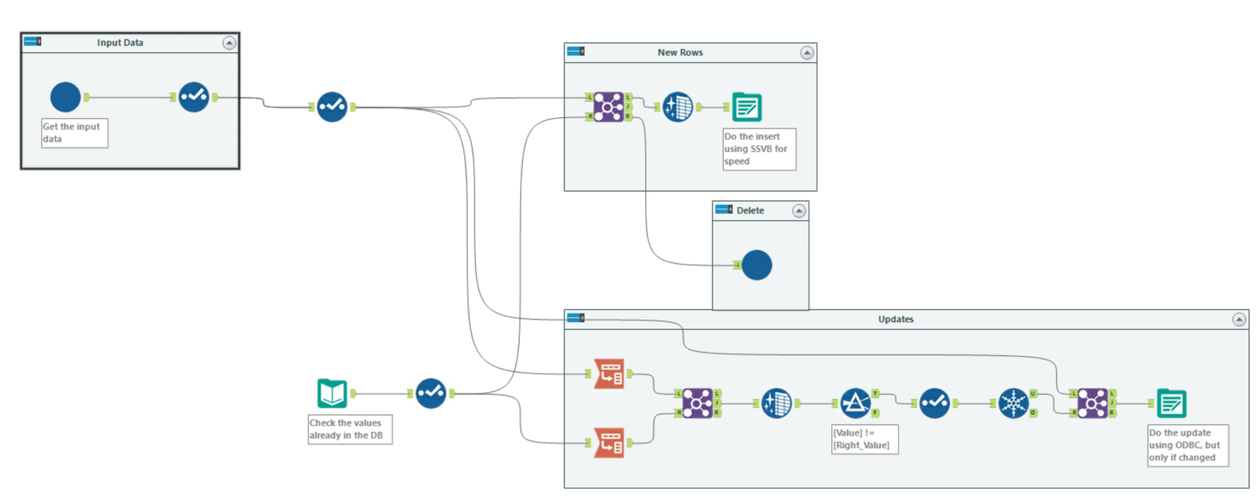

When we load data from raw files into a SQL table, we use this pattern in almost every loader, because the "Update, insert if new" functionality is slow, cannot take advantage of SSVB, does not handle deletes, and does not check for changes in the data, so history tables get polluted with updates that are not real updates.

This pattern addresses these concerns as follows:

- Inserts are separated out explicitly by comparing against the current table, and SSVB is used on the connection, which maximizes speed.

- Rows that no longer exist in the source are deleted, and the history table keeps the history.

- Finally, rows that exist in both source and target are checked for data changes and updated only if one or more fields have changed.

Given how often we have to do this (on almost EVERY data pipe from files into our database), could we look at adding an Incremental Update tool to Alteryx to make this easier? This is common functionality in other ETL platforms and would be a great addition to Alteryx.
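The insert/delete/changed-row split described above can be sketched independently of any database. This Python sketch works on lists of dictionaries with a hypothetical key column; it hashes only the compared fields, so unchanged rows never reach the update step. It plans the three operations but does not execute them; in the pattern above, the inserts would go through the SSVB connection.

```python
import hashlib


def row_hash(row, fields):
    """Hash the compared fields so change detection is one string comparison."""
    joined = "\x1f".join(repr(row.get(f)) for f in fields)  # repr keeps None, 1 and "1" distinct
    return hashlib.sha256(joined.encode("utf-8")).hexdigest()


def plan_incremental_update(source, target, key, fields):
    """Split source vs. target rows into inserts, real updates and deletes."""
    src = {row[key]: row for row in source}
    tgt = {row[key]: row for row in target}
    inserts = [src[k] for k in sorted(src.keys() - tgt.keys())]  # new keys: bulk insert
    deletes = [tgt[k] for k in sorted(tgt.keys() - src.keys())]  # keys gone from the source
    updates = [
        src[k]
        for k in sorted(src.keys() & tgt.keys())
        if row_hash(src[k], fields) != row_hash(tgt[k], fields)  # only rows that really changed
    ]
    return inserts, updates, deletes
```

Because `repr` distinguishes `1` from `"1"`, values read from files should be converted to the target column types before comparison, otherwise every row will look changed and the history table gets polluted again.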

- Category In Database
- Category Macros
- Data Connectors
- Desktop Experience

The autorecover feature should also back up macros. I was working on a macro when I ran into an issue with my code. I have autorecover set to save very frequently, so I went there to restore a previous version. To my great surprise, my macro wasn't being saved behind the scenes at all: my workflow had its expected backups, but my macro did not. Please let autorecover back up files of any extension.

Thanks!

- Category Macros
- Desktop Experience

If an organisation wants many Designer users to have the same macros available, it has to set up its own network drive to save the macros to, share that drive with all users, ensure each user has read access to it, and then have each user map that drive in their macro settings.

Instead of relying on this shared drive architecture, macro builders should be able to publish their macros to a collection in Gallery and then end users should be able to map that collection to their Macros in Designer.

It would keep the sharing of macros within the Alteryx platform and make sharing macros much more intuitive and frictionless.

- Category Macros
- Desktop Experience

Currently there is no way to edit an existing macro search path under Options -> User Settings -> Macros; the only options are Add and Delete. Ideally we need an Edit option as well.

If a search path moves from one location to another, the existing category has to be deleted and created again with the correct path.

- Category Interface
- Category Macros
- Desktop Experience

"Enable Performance Profiling" is a great feature for investigating which tools in a workflow take up most of the time. It works well during development.

It would be ideal to extend this feature to the following use cases as well:

- Workflows scheduled via the Scheduler on the server

- Performance profiling of macros and apps when run from the workstation as well as from the Scheduler/Gallery

Regards,

Sandeep.

- Category Apps
- Category Macros
- Desktop Experience

Hi!

Just thought up a simple improvement to the US Geocoder macro that could speed up the results. I'm doing an analysis on some technician data where they visit the same locations over & over again. I'm doing a full-year analysis (200k+ records) & the geocoder takes a while to churn through that much data. In the case of my data, though, it's the same addresses over & over again, & the geocoder goes through each one individually.

What I did in my process, and what could be added to the macro, is to put a Unique tool on the address, city, state and zip fields, geocode the reduced list, then simply join back to the original data stream on the address, city, state and zip fields (or use a Record ID tool to create a unique ID to join on).

In my case, the 200k records were reduced to 25k, which Alteryx completed in under a minute, then joined back so my output was still the 200k records (all geocoded now).

Not everyone will have this many duplicates, but I'd bet most data has a few, & every little bit of time savings helps when management is waiting on the results haha!
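The Unique, geocode, join-back approach can be sketched as follows. In this Python sketch `geocode` is a hypothetical stand-in for the US Geocoder, called once per distinct address/city/state/zip combination; every original record then receives the result for its key, so the output keeps the full record count.

```python
def geocode_unique(records, geocode, keys=("address", "city", "state", "zip")):
    """Geocode each distinct address once, then join the results back to every record."""

    def key_of(rec):
        return tuple(rec[f] for f in keys)

    unique_keys = list(dict.fromkeys(key_of(r) for r in records))  # like a Unique tool
    located = {k: geocode(*k) for k in unique_keys}                 # geocode the reduced list
    return [{**r, "location": located[key_of(r)]} for r in records]  # join back on the key fields
```

In the 200k-record case above, `geocode` would run about 25k times instead of 200k, while the output still has one row per original record.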

- Category Address
- Category Macros
- Category Spatial

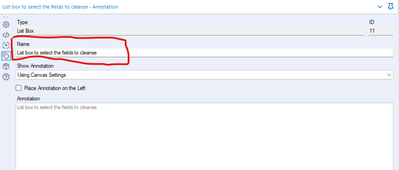

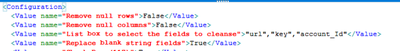

This idea arose from a conversation with a colleague, @Carlithian, in which we were trying to work out a way to remove tools from the canvas that might be redundant: for example, a Select tool that has been added to the canvas but hasn't been configured to change a data type or rename a field. So we were looking for ways to identify, in the workflow XML, tools that have no configuration applied to them.

This highlighted an issue with tools such as the Data Cleansing tool, which is a standard macro.

The XML view of the Data Cleansing configuration looks like this:

```xml
<Configuration>
  <Value name="Check Box (135)">False</Value>
  <Value name="Check Box (136)">False</Value>
  <Value name="List Box (11)">""</Value>
  <Value name="Check Box (84)">False</Value>
  <Value name="Check Box (117)">False</Value>
  <Value name="Check Box (15)">False</Value>
  <Value name="Check Box (109)">False</Value>
  <Value name="Check Box (122)">False</Value>
  <Value name="Check Box (53)">False</Value>
  <Value name="Check Box (58)">False</Value>
  <Value name="Check Box (70)">False</Value>
  <Value name="Check Box (77)">False</Value>
  <Value name="Drop Down (81)">upper</Value>
</Configuration>
```

Because it is a macro, the XML uses the default labels of its interface tools (e.g. "Check Box (135)", "Drop Down (81)"). If you wanted to do something useful with it, wouldn't it be much nicer if the interface tools were named properly, such as:

That way, when users look at the XML of the workflow, it is clearer what is actually specified.
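For the original goal of spotting unconfigured tools, a rough check on the Data Cleansing configuration is to look for any check box set to `True`. This Python sketch assumes that a Data Cleansing tool with no check boxes ticked does nothing, and that the drop-down value (here `upper`) only matters when its associated check box is ticked; both are assumptions, and the function name is hypothetical. Note that it can only report auto-generated names such as `Check Box (84)`, which is exactly the problem this idea describes.

```python
import xml.etree.ElementTree as ET


def enabled_check_boxes(config_xml):
    """Return the names of check boxes set to True in a macro tool's <Configuration>."""
    root = ET.fromstring(config_xml)
    return [
        value.get("name")
        for value in root.iter("Value")
        if value.get("name", "").startswith("Check Box")
        and (value.text or "").strip() == "True"
    ]
```

An empty result flags the tool as a candidate for removal; with descriptive interface names, the same list would also say which cleansing options are switched on.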

- Category Interface
- Category Macros
- Desktop Experience
- Engine

When building macros, we can put test data into the macro inputs so that we can run them and confirm that the output is what we expected. This is very helpful (and it also sets the field types on the inputs).

However, for batch macros there seems to be no way to provide test inputs for the Control Parameter. So if I'm testing a batch macro that takes three dates as control parameters to run the process three times, there's no way to test this during design/build without wrapping it in a test macro (and then I can't inspect what's going on inside without some funkiness).

Could we give the Control Parameter the same capability as the Macro Input, so that sample input data can be specified?

- Category Macros
- Desktop Experience

I'm adding a 'Dynamic Input' tool to a macro that will dynamically build the connection string based on user inputs. We intend to distribute this macro as a 'Connector' to our main database system.

However, the tool attempts to connect to the database using the 'fake' credentials supplied in it, returning error messages that can't be turned off.

In situations like this, I think you'd want the tool to refrain from attempting connections. Can we add an option to turn off the checking of credentials? I assume that others who build connection strings at runtime would appreciate this too.

As a corollary, for runtime connection strings, having to define a 'fake' connection in the Dynamic Input tool seems redundant, given that we have already set the 'Change Entire File Path' option. Some settings in the data connection window are nice to set at design time (e.g. caching, uncommitted read), but the window's main purpose, providing the connection string, is redundant because we intend to replace that string with the correct one at runtime. Could we make the data connection string optional?

To combine the above points: perhaps if the connection string is left blank, the tool would not attempt to connect with it, and would rely on the connection string supplied at runtime.

- Category Apps
- Category Macros
- Desktop Experience
- Tool Improvement

- Category Macros
- Desktop Experience

Currently, when I open and run an app in Designer, I'm unable to access any other modules while it runs. It would be nice to be able to work on other modules while an app runs, without having to open a debug workflow.

- Category Apps
- Category Macros
- Desktop Experience

With the growing demand for data privacy and security, synthetic data generation is becoming an increasingly popular technique for producing datasets that can be shared without compromising sensitive information, especially in the healthcare industry.

While Alteryx provides a range of tools, I believe that a custom tool could help meet the specific needs of a lot of healthcare organizations and customers.

Some potential features of a custom synthetic data generation tool for Alteryx could include:

Integration with other Alteryx tools: The tool could be seamlessly integrated with other Alteryx tools to provide a comprehensive data preparation and analysis platform.

Customizable data generation: Users could set parameters and define rules for generating synthetic data that accurately represents the statistical properties of the original dataset.

Data visualization and exploration: The tool could include features for visualizing and exploring the generated data to help users understand and validate the results.

I believe that a custom synthetic data generation tool could help our organization and customers generate high-quality synthetic datasets for testing, model training, and other purposes.
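As an illustration of the simplest kind of customizable generation, the sketch below fits a normal distribution to one numeric column and samples new values from it. It is a Python sketch with a hypothetical function name; it preserves only the column's mean and standard deviation, ignores correlations between columns and non-normal shapes, and on its own does not guarantee privacy, which a real tool would need to address.

```python
import random
import statistics


def synthesize_column(values, n, seed=0):
    """Draw n synthetic values from a normal distribution fitted to a numeric column."""
    mu = statistics.fmean(values)
    sigma = statistics.stdev(values)
    rng = random.Random(seed)  # seeded so test datasets are reproducible
    return [rng.gauss(mu, sigma) for _ in range(n)]
```

A fuller tool would model the joint distribution of all columns, which is where user-defined rules and the validation visualizations described above become important.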

- Category Macros
- Desktop Experience

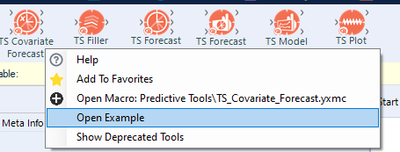

Hello! I'm just wanting to highlight a couple of small issues I've found when trying to use the TS Covariate Forecast.

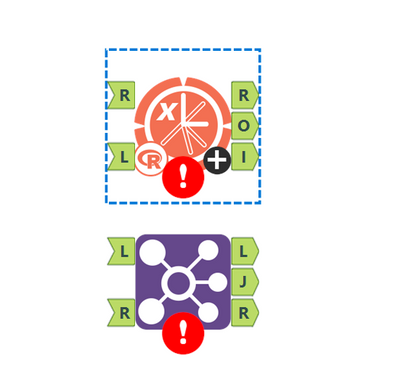

1. The example workflow does not open. This has been tested on multiple machines with different users. Right clicking the macro allows for the option 'Open Example Workflow':

However the button does not work/do anything. It is listed as a tool with a 'one tool example' (https://help.alteryx.com/20213/designer/sample-workflows-designer) so i would expect this to work.

2. Fix left/right labelling of input anchors. Currently the anchors are labelled incorrectly (compared with the join tool):

This can make things confusing when looking at documentation/advice on the tool, in which it is described as the left/right inputs.

Thanks!

TheOC

- Category Macros
- Category Time Series
- Desktop Experience
