Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!

Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints next to each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels),

> or at least by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it needs RGB images).

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))

Why does Alteryx not have an easier way (Drag, Drop, Click and Run) to calculate moving averages with a specified lookback? There are so many things that one has to adjust before calculating moving averages for a simple numeric column.

I understand that there is a CReW macro called "Moving Summarize" which does that, but it is limited to a lookback period of 100. If you have data with millions of rows and need a lookback in the 1000s, there is no easy solution.

Does anyone know whether this is in the making? Moving averages are bread and butter for analysts like me. I am urging Alteryx developers to build this tool ASAP; it would bring a lot of comfort to my troubled soul.

Maybe I am missing something here, please enlighten me!

Thank you!
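To make the request concrete, here is a minimal Python sketch of a trailing moving average with an arbitrary lookback. It is not how Alteryx or the CReW macro works internally; the function name `moving_average` and the choice to return `None` until a full window is available are assumptions made for illustration.

```python
from collections import deque
from typing import Iterable, Iterator, Optional


def moving_average(values: Iterable[float], lookback: int) -> Iterator[Optional[float]]:
    """Yield the trailing mean over the last `lookback` values.

    Rows that do not yet have a full window yield None (an assumption;
    a real tool might offer partial windows instead).
    """
    if lookback < 1:
        raise ValueError("lookback must be at least 1")
    window = deque()
    running_sum = 0.0
    for value in values:
        window.append(value)
        running_sum += value
        if len(window) > lookback:
            # Drop the oldest value so the window never exceeds the lookback
            running_sum -= window.popleft()
        yield running_sum / lookback if len(window) == lookback else None


if __name__ == "__main__":
    print(list(moving_average([1, 2, 3, 4, 5], lookback=3)))
    # prints [None, None, 2.0, 3.0, 4.0]
```

Because the running sum is updated in place, the cost per row does not grow with the lookback, so a window in the 1000s over millions of rows stays cheap. Very long float series can accumulate rounding drift with this approach.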

Hello,

I work for a company with circa 250K employees. We are in the process of shifting all documents over to OneDrive and I've noticed that when I have an Alteryx workflow that uses inputs stored on OneDrive the connection can be very intermittent. I use the UNC file naming protocol for my input/directory tools, but more often than not I need to run a VBA script that accesses OneDrive before Alteryx tools will connect.

There are a couple of posts on this community about this, but nothing on the ideas board. I believe the SharePoint connector is being updated for v11, but nothing for OneDrive.

I'd like there to be better integration to OneDrive for business and SharePoint Online please.

Thanks,

John.

Hello!

Just another QOL change from me today.

When building a workflow - just for fun sometimes I like to make mistakes. It's never by accident I promise 😎

Now theoretically, if I did make a mistake, and put a tool in the wrong place (or want to refactor, or want to move a select earlier in the workflow etc), I would typically right click, cut and connect around, and then right click the connection I want to paste onto. This works fine, however, some users are unaware of it, and it can still be a bit of a pain.

What would be really nice is if we could hold ctrl and click/drag a tool to move it independently of its connections. I have attempted to create a couple of gifs to illustrate.

The current method of moving a tool within a workstream:

What I'd love, if you could hold ctrl + drag:

Cheers!

Owen

When building API calls within Alteryx there are a few common steps required

1) Build out the URI for the API call (base URL plus any query parameters)

2) Deal with authentication; for example, basic authentication requires taking a key and secret, base 64 encoding them and passing the result into the tool

3) Parse the results out and process these downstream

For this idea I am specifically focusing on step 3 (but it would be great to have common authentication methods in-built within the download tool (step 2)!).

There are common steps required to parse out the results, such as using Filter (to check for a 200 response), JSON parse, text to columns and then cross tab to get the results into a readable format. These will all be common steps anyone who has worked with APIs will be familiar with:

This is all fine for a regular user to quickly add in and configure these tools. However there is no validation here for the JSON result being as expected, which when embedding an API into a batch macro or analytic app means it can easily fail.

One example of a failure which I've recently come across is where the output JSON doesn't have all fields (name:value pairs), depending on the JSON response. For example, using the UK Companies House API, when looking at the ceased to act field at this endpoint - https://developer-specs.company-information.service.gov.uk/companies-house-public-data-api/resources... the ceased to act field only appears in the results if a person has actually ceased to act. This is important if you have downstream tools such as a formula to create a field [Active] where you have:

IF ISNull([ceased_to_act]) THEN "Active" ELSE "Ceased to Act" ENDIF

However, without modification the macro / app will error if any results are returned where this field is missing.

A workaround is to add in the Crew Ensure Fields or union on a list of fields, to ensure that the ceased to act field is present in the output for all API calls. But looking at some other tools, it would be good if an expected schema could be built into the download tool to do this automatically.
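The three steps and the "expected schema" idea can be sketched outside Alteryx in a few lines of Python. This is only an illustration under stated assumptions: the field list in `EXPECTED_FIELDS`, the `items` key in the response body, and all function names are hypothetical, and no real API is called.

```python
import base64
import json
from urllib.parse import urlencode

# Hypothetical schema: the fields the downstream tools expect to exist.
EXPECTED_FIELDS = ["name", "officer_role", "ceased_to_act"]


def build_uri(base_url, params):
    """Step 1: base URL plus URL-encoded query parameters."""
    return f"{base_url}?{urlencode(params)}" if params else base_url


def basic_auth_header(key, secret):
    """Step 2: basic auth sends base64("key:secret") after the word Basic."""
    token = base64.b64encode(f"{key}:{secret}".encode("utf-8")).decode("ascii")
    return {"Authorization": f"Basic {token}"}


def parse_records(status_code, body, expected_fields=EXPECTED_FIELDS):
    """Step 3: require a 200 response, parse the JSON, and make sure every
    expected field is present, filling missing ones with None (null)."""
    if status_code != 200:
        raise ValueError(f"unexpected HTTP status {status_code}")
    items = json.loads(body).get("items", [])  # "items" key is an assumption
    return [{field: item.get(field) for field in expected_fields} for item in items]


if __name__ == "__main__":
    body = '{"items": [{"name": "A", "ceased_to_act": true}, {"name": "B"}]}'
    for record in parse_records(200, body):
        status = "Active" if record["ceased_to_act"] is None else "Ceased to Act"
        print(record["name"], status)
```

With the schema applied, the second record still has a `ceased_to_act` key (set to `None`), so the equivalent of the `[Active]` formula no longer fails on it. Note that this sketch also drops any fields not listed in the schema, which a real implementation might prefer to keep.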

For example in Power Automate this is achieved as follows:

I am a big advocate of not making things unnecessarily complicated. Therefore I would categorise this as an ease of use feature to improve the experience of working with APIs within Alteryx and make APIs (as loads of integrations are API-based) accessible to as many users as possible.

Alteryx 2019.4 introduced support for Tableau's .hyper extract format, however it only supports single table extracts. .hyper files have supported multiple tables since mid-2018, so I'd like Alteryx to support that as well.

Here are a couple of current use cases (as of February 2020) and one future one.

- We have malaria incidence data that is joined to multiple sets of spatial data. Doing all of the joins in the extract creation process to build a single table extract is not possible due to processing time & memory constraints, so we use a multiple-table extract.

- There are multiple ways to do row level security in Tableau. A common way is to have separate tables for the data & the entitlements and then use calculations at run-time to filter the data, and for that having a multiple table extract is ideal.

- In 2020 Tableau will be introducing new data modeling capabilities (this was first demoed at the 2018 Tableau Conference, there were sessions on it at the 2019 Tableau Conference) where one goal is vastly improved performance for large fact table to fact table joins where previously we'd have to do much more data preparation. This is another case where multiple table extracts would be useful.

I've attached a sample Hyper file with two tables in the extract (it's zipped because the Community site doesn't accept .hyper files).

Supporting alternative schema and table names in Hyper extracts https://community.alteryx.com/t5/Alteryx-Designer-Ideas/Input-tool-Support-more-than-Extract-Extract... is a prerequisite for this because by definition multiple table extracts have multiple table names.

A related idea is supporting multiple table extracts for the Output tool: https://community.alteryx.com/t5/Alteryx-Designer-Ideas/Support-multiple-table-extracts-in-the-Table...

Jonathan

Alteryx 2019.4 added support in the Input tool for Tableau .hyper extract files. The tables stored in the .hyper files have a schema and a table name. Tableau's old .tde files and Hyper files created by Alteryx & Tableau Desktop use "Extract.Extract" as the schema.tablename. However when using Tableau's Hyper API the default schema is "public" and the table name is arbitrarily specified by the user or application.

This has two impacts:

1) Without this support Alteryx can't open many .hyper files created by other applications. By way of example I've attached a sample .hyper file (in a .zip because the community software doesn't allow .hyper files) that has the schema.tablename "public.table1".

2) Also support for names beyond Extract.Extract is required in order to support multiple table extracts (submitted as a separate Idea).

Please update the Input tool so the user can select the particular schema and table name from the .hyper file.

Jonathan

Every time we create a file output - you first have to check if the folder exists - and if not then create it.

Currently it's quite onerous to do a directory create - especially with all the error trapping to make this production safe - and everyone is reinventing the wheel in their own companies.

Given the commonality of this need, could we add a tool that allows you to check for the existence of a directory and attempt to create it (with nested directories and useful status / error descriptions to act upon)?
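As a rough picture of the behaviour being asked for, here is a small Python sketch. The function name `ensure_directory` and the `(ok, message)` return shape are assumptions for illustration, not an existing Alteryx feature.

```python
import os


def ensure_directory(path):
    """Check for a directory and create it if needed.

    Returns (ok, message) so a caller can act on the status instead of
    trapping an exception.
    """
    if os.path.isdir(path):
        return True, f"exists: {path}"
    try:
        # makedirs also creates any missing parent (nested) directories
        os.makedirs(path, exist_ok=True)
    except OSError as exc:
        # e.g. permission denied, or the path already exists as a file
        return False, f"could not create {path}: {exc}"
    return True, f"created: {path}"
```

A tool built along these lines could pass the status and message downstream, so the output step only runs when `ok` is true.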

We're currently using Regex and text to columns to parse raw HTML as text into the appropriate format when web scraping, when a tool to at least parse tables would be hugely beneficial.

This functionality exists within Qlik so it would be nice to have this replicated in Alteryx.

Obviously, we need to retain the ability to scrape raw HTML, but automatically parsing data using the <td>, <th> and <tr> tags would be nice.

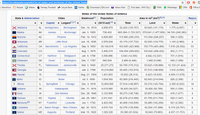

In the following page there is a table showing the states and territories of the US:

As this functionality exists elsewhere it would be nice to incorporate this into Alteryx.
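For reference, parsing tables from the `<tr>`, `<th>` and `<td>` tags needs very little logic. The sketch below uses only Python's standard `html.parser` module; the class and function names are illustrative, and it assumes reasonably well-formed, non-nested tables.

```python
from html.parser import HTMLParser


class TableParser(HTMLParser):
    """Collect every table on a page as a list of rows of cell text."""

    def __init__(self):
        super().__init__()
        self.tables = []   # one list of rows per <table>
        self._row = None   # cells of the row currently being read
        self._cell = None  # text fragments of the cell currently being read

    def handle_starttag(self, tag, attrs):
        if tag == "table":
            self.tables.append([])
        elif tag == "tr" and self.tables:
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def _close_cell(self):
        if self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None

    def handle_endtag(self, tag):
        if tag in ("td", "th"):
            self._close_cell()
        elif tag == "tr" and self._row is not None:
            self._close_cell()  # tolerate a missing </td> before </tr>
            self.tables[-1].append(self._row)
            self._row = None


def parse_tables(html):
    """Return all tables found in the HTML text as nested lists."""
    parser = TableParser()
    parser.feed(html)
    parser.close()
    return parser.tables
```

Each table comes back as a list of rows, with header cells in the first row when the page uses `<th>`, which maps naturally onto a record set.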

I know this has been posted before, but the posts are fairly old, and I have just confirmed with Support that it is still an issue. Seems to be a pretty basic request, so I'm putting it out there again under this new heading.

The issue is that if you have data in a field, and you have that data separated by a new line (\n), it will show up fine in a browse tool, or pretty much any other output (database file, Office Document file, etc.). But if you try to use the Table Tool under Reporting, it ignores the line break and strings the data together.

Example:

The field data looks like this in a browse or most other outputs:

Hello, my name is

Michael Barone

and I love

Alteryx

But when I try to pull this field into a Table Tool, it shows up like this:

Hello, my name is Michael Barone and I love Alteryx

Putting this out here again in hopes that it gets lots and lots of stars so it gets put on the road map!!

The Source field of the field metadata is very useful, but has some problems.

- It is repetitious. A long connection string repeated for many fields from the same source can bloat the workflow file to more than 10 MB; with the Source values removed, the same file is around 0.5 MB.

- It exposes sensitive information about a company's infrastructure, such as server names, ports, user ids, and proprietary data structures.

I first started paying attention when we found a user's password in the metadata because they had passed it as a string to the Dynamic Input Tool (separate Idea submitted for that - LINK). Then when I had to share an App with the Alteryx Support team for support with an issue, I thought to check the metadata, and I noticed that the file was too big and was exposing information that I would not normally share with another company.

I'm not sure how you want to handle this, but here are some thoughts:

- Default the Source field to 'off' and provide users the option to turn it 'on' in the workflow/app settings.

- Provide a mechanism to strip the 'Source' field at time of saving or exporting the workflow.

- If nothing else, provide education to users on the implications of including this information in the file.

Thanks for listening!

Cameron

Currently the cross tab tool automatically sorts alphabetically by the "New Column Headers" field. Often times I have to output data with dates across the columns and therefore have to do a cross tab to achieve this. The problem is when I have the dates formatted with month names, the crosstab automatically sorts it in alphabetical order instead of date order (i.e. Apr, Aug, Dec, etc vs Jan, Feb, Mar). To get around this issue, I have to use a dynamic rename tool. It would be great if there was a way to choose the order of the crosstab (i.e. in the order of the data, crosstab, another field, etc.).
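The two orderings mentioned above (calendar order for month names, or the order in which values appear in the data) are simple to express. The Python sketch below is only an illustration of the requested option; the function names and the English month abbreviations are assumptions.

```python
MONTHS = ["Jan", "Feb", "Mar", "Apr", "May", "Jun",
          "Jul", "Aug", "Sep", "Oct", "Nov", "Dec"]
MONTH_ORDER = {name: position for position, name in enumerate(MONTHS)}


def order_columns(headers, key_fields):
    """Keep the key fields first, then month columns in calendar order,
    then any remaining columns in their original order."""
    months = [h for h in headers if h in MONTH_ORDER]
    others = [h for h in headers if h not in MONTH_ORDER and h not in key_fields]
    return list(key_fields) + sorted(months, key=MONTH_ORDER.get) + others


def order_of_appearance(values):
    """Alternative: unique header values in the order they occur in the data."""
    return list(dict.fromkeys(values))


if __name__ == "__main__":
    print(order_columns(["Region", "Apr", "Aug", "Dec", "Feb", "Jan"], ["Region"]))
    # prints ['Region', 'Jan', 'Feb', 'Apr', 'Aug', 'Dec']
```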

Hello!

I am making this idea request in response to this question:

https://community.alteryx.com/t5/Alteryx-Designer-Discussions/Is-it-possible-to-enable-Performance-P...

Currently, one of my favourite settings to enable in Alteryx is performance profiling, as I get to see exactly how much time is being used at each step, and it's a quick reminder to double check those tools that take a while for optimisation. However, I have to enable this on every new workflow that I open, as the setting only applies to the current workflow, and it can be frustrating to execute a large workflow only to realise, after waiting for it to run, that this setting was not enabled.

What I'd like to propose is an extra set of settings within the User Settings, default tab (which is currently):

To something like:

Which would simply enable these settings as a default, when a new workflow is made.

Let me know what you think! I think a couple of the other settings in there could see use, especially as the AMP engine develops and those who want to see all macro messages, for example.

Cheers,

TheOC

Hi Alteryx

I understand why you need to keep bloat away from the product and have tools available to download instead, it means you can iterate and update them outside the usual cadence cycles. But please, for the love of everything holy, make it easier to find them and download them.

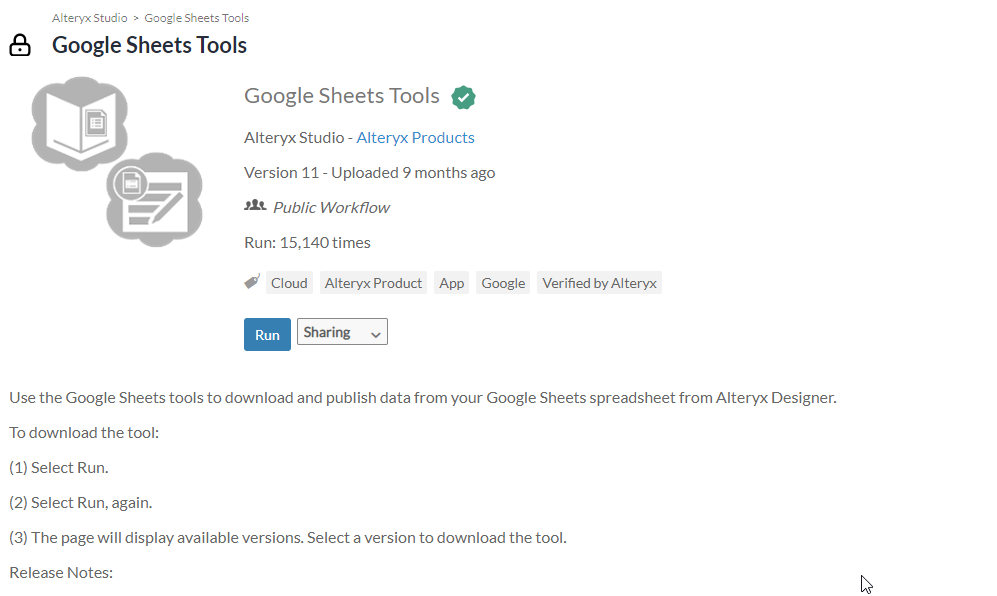

Let me give you an example of downloading the Google Sheets Input Tool:

1. I type in the amazing search and find a help article on it, so far so good:

2. I am pointed to the Gallery:

3. I click but where do I look? I need to revert to the tiny search in the top left. This isn't obvious for new users

4. But the result I need doesn't come top; how some of these search results get in above it I have no idea. I get to page 4 before I see something that looks like what I need, and only after installing it do I realise it is a third-party tool. I come back, can't find the tool and so give up. If it's there somewhere then it needs to be more obvious.

5. I google - I finally find (third item) something that's more useful but only because I know what I'm looking for

6. I run the workflow, then run it again as per the instructions. At this point I'm losing the will to live tbh.

7. Finally something that looks useful, I bang the huge download button twice and wonder why it didn't work.

9. I read the text and realise I need to click the link - finally I have the installer.

That was a five minute job. It was painful. And I'm a seasoned Alteryx user. If I was a new user, I'd have given up at step 2 or 3.

But what was the thing I downloaded in Step 8? A set of release notes and links....why aren't these simply added to the help article I found in Step 1/2? It would surely be easier for you, and would be a whole lot easier for users. Why do we need this painful process?

Please please please make it easier for me to install new tools.

Hi there,

The download tool is currently very cryptic, and difficult for most users to grasp. This is due, at least in part, to the fact that it tries to be generic and serve all needs instead of being broken into smaller tools which fit the need.

Could we please break the download tool into:

- Input: FTP. This would allow you to download from FTP or SFTP sites, and work in a wizard fashion to get you to the file / files you wanted and take you through FTP authentication.

- Input: Web API call. This would be much easier if there was a wizard where you could enter the API you wanted to call and then add the parameters.

- Input: Web download. This would allow you to download frames or pages from the web. This would be a good place to do what so many users have asked for and which Excel does natively, i.e. allow you to see the site in a browser within the wizard and pick the elements you want to download. It must allow for authentication and walk you through this with the wizard.

- Output: FTP put. As above, splitting this out makes it more sensible.

There are probably other variants, and we can keep the Download tool for super-complex or bespoke uses - but if we break this down into smaller tools with simpler capabilities, we'll get a higher usage.

Thank you

Sean

From Wikipedia

Druid is a column-oriented, open-source, distributed data store written in Java. Druid is designed to quickly ingest massive quantities of event data, and provide low-latency queries on top of the data.[1] The name Druid comes from the shapeshifting Druid class in many role-playing games, to reflect the fact that the architecture of the system can shift to solve different types of data problems. Druid is commonly used in business intelligence/OLAP applications to analyze high volumes of real-time and historical data.[2] Druid is used in production by technology companies such as Alibaba,[2] Airbnb,[2] Cisco,[3] eBay,[4] Netflix,[5] Paypal,[2] Yahoo,[6] and Wikimedia Foundation.[7]

More and more companies are going from Hive to Druid for Dataviz needs, maybe it's time to look for Druid Integration with Alteryx?

I reported this to the support team but was told it was by design and to post here.

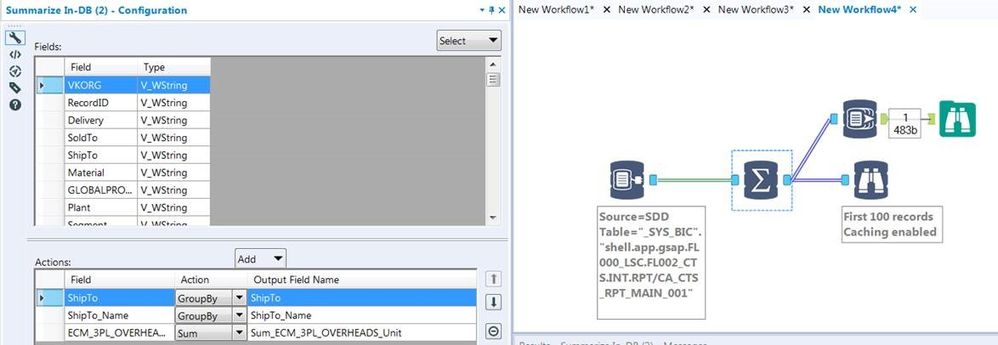

In-DB Inefficient SQL

I would like to report that the In-DB tools are generating horribly inefficient SQL code for simple operations. It seems no matter what tools you use every statement is starting with a nested 'Select * From'.

Example Simple workflow:

This is a simple Select and Group by but the SQL Generated is:

SELECT "ShipTo", "ShipTo_Name", SUM("ECM_3PL_OVERHEADS_Unit") AS "Sum_ECM_3PL_OVERHEADS_Unit"

FROM (SELECT * FROM "_SYS_BIC"."shell.app.gsap.FL000_LSC.FL002_CTS.INT.RPT/CA_CTS_RPT_MAIN_001") AS "a"

GROUP BY "ShipTo", "ShipTo_Name"

This is taking a very long time to execute:

Statement 'SELECT "ShipTo", "ShipTo_Name", SUM("ECM_3PL_OVERHEADS_Unit") AS "Sum_ECM_3PL_OVERHEADS_Unit" FROM ...'

successfully executed in 15.752 seconds (server processing time: 15.699 seconds)

Whereas if I take the same query and remove the nested Select *:

SELECT "ShipTo", "ShipTo_Name", SUM("ECM_3PL_OVERHEADS_Unit") AS "Sum_ECM_3PL_OVERHEADS_Unit"

FROM "_SYS_BIC"."shell.app.gsap.FL000_LSC.FL002_CTS.INT.RPT/CA_CTS_RPT_MAIN_001" AS "a"

GROUP BY "ShipTo", "ShipTo_Name"

It is very quick:

Statement 'SELECT "ShipTo", "ShipTo_Name", SUM("ECM_3PL_OVERHEADS_Unit") AS "Sum_ECM_3PL_OVERHEADS_Unit" FROM ...'

successfully executed in 1.211 seconds (server processing time: 1.157 seconds)

So Alteryx is generating queries that run up to 13x slower than they should, thereby defeating the point of using In-DB. As you can imagine, in a workflow with multiple Connect In-DB tools this adds up to a really substantial amount of time. The example above is from SAP HANA DB; the source has 1.9m rows and ~90 columns, but we have much bigger tables/views than this.

If you look, you will see it's the same behaviour for all In-DB tools: each tool creates another nested Select with its particular operator.

MY SUGGESTION:

So my suggestion is that Alteryx should combine the SQL of the first few tools and avoid using SELECT * completely unless no Select tools have been used. So it should combine:

- Connect In-DB + Select

- Connect In-DB + Filter

- Connect In-DB + Summarise

Preferably it should combine/flatten everything up until the first join or union. But Select + Filter are a must!

Note: it seems some DBs can cope OK with un-nesting these big nested queries in their query plans for some tables, but normally not for views. Others cannot cope at all, so the In-DB tools cannot even be used to Browse 100 records (due to the Select *).
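The nested and flattened forms return the same rows, which is what makes the suggested flattening safe. The Python sketch below checks that equivalence on an in-memory SQLite database. The table name `orders` and its columns are hypothetical stand-ins for the HANA view above, an `ORDER BY` is added only so the two results can be compared, and no timing conclusions should be drawn from SQLite, which handles this small case without trouble.

```python
import sqlite3

# Shape of the SQL the In-DB tools generate: a nested SELECT * as the source.
NESTED = '''
SELECT "ShipTo", SUM("Units") AS "Sum_Units"
FROM (SELECT * FROM "orders") AS "a"
GROUP BY "ShipTo"
ORDER BY "ShipTo"
'''

# Flattened form suggested in the post: query the table directly.
FLAT = '''
SELECT "ShipTo", SUM("Units") AS "Sum_Units"
FROM "orders" AS "a"
GROUP BY "ShipTo"
ORDER BY "ShipTo"
'''


def run_both():
    """Run both queries against a tiny sample table and return both results."""
    conn = sqlite3.connect(":memory:")
    conn.execute('CREATE TABLE "orders" ("ShipTo" TEXT, "Units" INTEGER)')
    conn.executemany('INSERT INTO "orders" VALUES (?, ?)',
                     [("A", 1), ("A", 2), ("B", 5)])
    nested = conn.execute(NESTED).fetchall()
    flat = conn.execute(FLAT).fetchall()
    conn.close()
    return nested, flat


if __name__ == "__main__":
    nested, flat = run_both()
    print(nested == flat, flat)  # prints True [('A', 3), ('B', 5)]
```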

Can we please have a tickbox (ideally one that remembers your preference to be ticked or unticked) on the Save to Gallery pop up that would allow us to save a (timestamped?) copy of that workflow on a local drive (perhaps one that is preset in the user settings)?

Hello all,

HDFS (Hadoop Distributed File System) connection is widely used to load data efficiently on Hadoop, for Hive, Spark or Impala. However, it's not compatible with the new DCM.

Best regards,

Simon

Cleanse Macro

Given a choice between the delivered macro and the CReW macro, I’ll choose the CReW macro for both speed and functionality. Wikipedia says, “Data cleansing or data cleaning is the process of detecting and correcting (or removing) corrupt or inaccurate records from a record set, table, or database and refers to identifying incomplete, incorrect, inaccurate or irrelevant parts of the data and then replacing, modifying, or deleting the dirty or coarse data.” If Alteryx were to convert the macro to a true tool, here is my feature request list:

Performance:

- AMP compatible – Fast!

- Faster than the CReW macro for deleting empty fields/rows

- Reduce the time it takes to load the tool (current macro versions are slow); HTML is faster.

Feature Enhancement:

- Allow selection of fields based on data type

- Include incoming/outgoing SELECT functionality

- Allow for PREFIX functionality (like multi-field formula), but NOT default

- Read incoming metadata to provide color coding of fields to indicate where potential problems exist (e.g. NULL, Whitespace) – part of browse everywhere currently

- Allow for Nulls to convert to 0/blank or 0/blank to convert to Null

- When removing punctuation, provide for exceptions (e.g. Numeric set of negative, comma and period).

- Include HTML tag removal

- Support internationalization (character sets)

Going the extra mile:

- Display or opt for output, cleanup metrics. How dirty was my data? Potentially, allow for ERROR to stop workflow if garbage is detected.

- Optional: Detect outliers in numeric data. I’ve got an outlier detection macro that we can review, but while you are passing all of the data for numeric values, explaining or tagging outliers would be useful. Could be a box-whisker on numeric values maybe?

- Make outlier actionable

- Identify in data (new field indicator)

- Remove

- Modify/Impute

- Test/Preview against metadata: (pre-run), see what the incoming/outgoing results would be on all of the metadata before I run the workflow.

- camelCase: https://en.wikipedia.org/wiki/Camel_case

- Identify/Replace unknown values (e.g. N/A, Not Applicable, #) with Null() or other?

- Identify/Remove duplicate values within a cell

- See also: https://en.wikipedia.org/wiki/Data_cleansing

- Option to point to a “personal” dictionary for spelling or validation

- Provide “smart” annotation on tool

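For the outlier items in the list above, the box-and-whisker rule is one possible approach. The Python sketch below tags values outside Q1 - 1.5*IQR and Q3 + 1.5*IQR. The function name `tag_outliers`, the multiplier `k=1.5` and the quartile method (Python's default for `statistics.quantiles`) are assumptions for illustration, not the author's own macro.

```python
import statistics


def tag_outliers(values, k=1.5):
    """Return (value, is_outlier) pairs using the box-plot rule.

    A value is tagged when it falls below Q1 - k*IQR or above Q3 + k*IQR.
    Needs at least two values to compute quartiles.
    """
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [(v, v < low or v > high) for v in values]


if __name__ == "__main__":
    tagged = tag_outliers([1, 2, 3, 4, 5, 6, 7, 8, 100])
    print([v for v, is_outlier in tagged if is_outlier])  # prints [100]
```

The boolean flag corresponds to the "Identify in data (new field indicator)" option; removing or imputing would then be a filter or replacement on that flag.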
