Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
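The last two points can be sketched outside the tool. This is a minimal Python illustration, not how the Image Recognition tool works internally: `balance_by_label` downsamples so each label keeps the same number of images, and `gray_to_rgb` repeats a grayscale value across three channels. The function names, file names, and labels are all made up for the example.

```python
import random
from collections import defaultdict

def balance_by_label(samples, seed=0):
    """Downsample so every label keeps the same number of items.

    `samples` is a list of (path, label) pairs (illustrative structure).
    """
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)
    # The smallest class sets the per-label quota.
    quota = min(len(paths) for paths in by_label.values())
    rng = random.Random(seed)
    balanced = []
    for label in sorted(by_label):
        for path in rng.sample(by_label[label], quota):
            balanced.append((path, label))
    return balanced

def gray_to_rgb(gray_rows):
    """Turn rows of grayscale values into rows of (R, G, B) pixels."""
    return [[(v, v, v) for v in row] for row in gray_rows]

# Hypothetical file names and labels.
samples = [("a1.png", "cat"), ("a2.png", "cat"), ("a3.png", "cat"),
           ("b1.png", "dog"), ("b2.png", "dog")]
balanced = balance_by_label(samples)
rgb = gray_to_rgb([[0, 255]])
```

Downsampling to the smallest class is only one way to balance; oversampling the rare labels is another.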

Question: in the future, will you allow the user to choose between CPU and GPU usage?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will help more people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


Hello all,

As of today, we can easily copy or duplicate a table with the In-Database tools. This is really useful when you want data from the production environment in the development environment.

But can we really?

Short answer: no. The copy does not carry over:

-partitions

-any constraints such as primary or foreign keys

And even if these ideas were implemented, it would mean setting these parameters manually.

So my proposal is simply a "clone table" tool that would clone the table from the SHOW CREATE TABLE statement and only ask for the destination path (base.table).
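As a rough sketch of what such a tool could do under the hood: take the text returned by SHOW CREATE TABLE, swap in the destination name, and run the result on the target. The helper `retarget_ddl` and the sample DDL below are hypothetical, and real DDL dialects vary, so treat the regular expression as an assumption rather than a complete parser.

```python
import re

# Matches the leading "CREATE [EXTERNAL] TABLE [IF NOT EXISTS] <name>" part.
_CREATE_HEAD = re.compile(
    r"^(\s*CREATE\s+(?:EXTERNAL\s+)?TABLE\s+(?:IF\s+NOT\s+EXISTS\s+)?)([^\s(]+)",
    re.IGNORECASE,
)

def retarget_ddl(create_stmt, destination):
    """Return the CREATE TABLE statement with only the table name replaced.

    Partitions and constraints declared in the body are carried over as-is.
    """
    new_stmt, count = _CREATE_HEAD.subn(
        lambda m: m.group(1) + destination, create_stmt, count=1
    )
    if count == 0:
        raise ValueError("not a CREATE TABLE statement")
    return new_stmt

# Made-up example of what SHOW CREATE TABLE might return.
source_ddl = (
    "CREATE TABLE `prod`.`sales` (\n"
    "  `id` INT,\n"
    "  `amount` DOUBLE\n"
    ")\n"
    "PARTITIONED BY (`day` STRING)"
)
clone_ddl = retarget_ddl(source_ddl, "`dev`.`sales`")
print(clone_ddl)
```

This only recreates the structure; copying the rows would still be a separate insert step.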

Best regards,

Simon

Hi all!

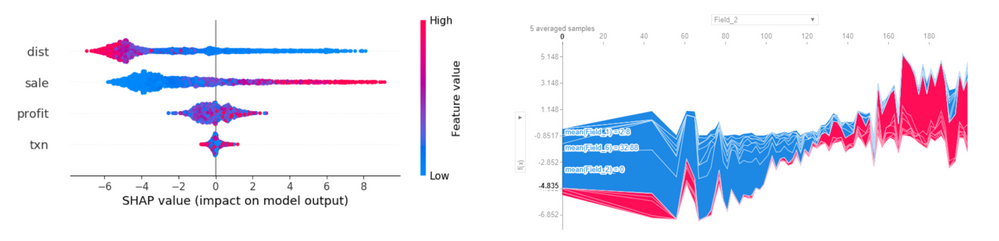

Based on the title, here's some background information: SHAPLEY Values

Currently, one way of doing so is to use the Python tool to write the script and install the package. However, this requires running Alteryx as an administrator in order to load, test, and run the script. The problem is that a substantial number of companies do not grant their Alteryx teams the privilege to run fully as administrator, because admin credentials would then be needed every time Alteryx is opened after closing it.

I am aware that there is a macro covering SHAP, but I recently tested it and it did not work as intended. It also accepts only non-categorical values as determinants, so categorical variables first have to be converted into numeric or binary categories.

It would be nice to have a built-in Alteryx ML tool that does this analysis and produces a graph akin to a heat map that showcases the values like below:

Doing so would add more value to the ML suite and actually help convince companies to get it.

Otherwise teams will just use Python and be done with it, leaving Alteryx only as the clean-up ETL tool. It leaves much to be desired, and can leave some teams hanging.

I hope for some consideration on this - thank you.

Hi Team,

As a person with low vision, I can only work with a dark background and a light foreground (dark theme).

I could not find this feature in Alteryx, so I kindly ask you to add it. I tried the suggestions I found on Google, such as working with the Palette option,

but in my case they did not help much. Please also make Alteryx as accessible as possible.

Thanks & Regards

Rameshchandra

When I work I typically have 2 to 3 screens at my desk, or an oversized single screen. Being able to take the results window and float it on to my second monitor is great, as it allows me to view more canvas and more data results in one setup. However, the results window will not maximize or dock on my second monitor. It would be great for it to have this Windows feature.

Add the ability in the Select Records tool to use formulas, not just record numbers.

No existing tool outputs all records that are duplicates (those sharing the selected values with at least one other record) and also outputs the records that are not duplicates (those not sharing the selected values with at least one other record).

The Unique Tool is not sufficient. It only provides the first record of a unique duplicate group along with any non-duplicates and then provides a secondary output that only contains the additional records of a duplicate group. Sometimes you only care about the duplicates and want to quickly see what differs between the unique groups.

For example, if there are 4 records with the City of Austin and I am looking for duplicates on City I want to see all 4 records with Austin in the output so I can quickly compare additional fields to see what might differ, or if they are all indeed truly duplicates.
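The requested behaviour can be sketched in a few lines of Python: count how often each key occurs, then send every record whose key occurs more than once to one output and the rest to another. The function name and the sample records are made up for illustration.

```python
from collections import Counter

def split_duplicates(records, key_fields):
    """Return (duplicates, non_duplicates) based on the selected fields.

    Unlike a first-record-only approach, every member of a duplicate
    group lands in `duplicates`.
    """
    def key_of(rec):
        return tuple(rec[f] for f in key_fields)

    counts = Counter(key_of(rec) for rec in records)
    duplicates = [r for r in records if counts[key_of(r)] > 1]
    non_duplicates = [r for r in records if counts[key_of(r)] == 1]
    return duplicates, non_duplicates

# Illustrative data: four Austin records and one Dallas record.
records = [
    {"City": "Austin", "Zip": "78701"},
    {"City": "Austin", "Zip": "78702"},
    {"City": "Dallas", "Zip": "75201"},
    {"City": "Austin", "Zip": "78701"},
    {"City": "Austin", "Zip": "78704"},
]
dups, singles = split_duplicates(records, ["City"])
```

Here `dups` holds all four Austin records in their original order, so the differing Zip values can be compared side by side.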

Many of today's APIs, like MS Graph, won't or can't return more than a few hundred rows of JSON data. Usually, the metadata returned will include a complete URL for the NEXT set of data.

Example: https://graph.microsoft.com/v1.0/devices?$count=true&$top=999&$filter=(startswith(operatingSystem,'W...') or startswith(operatingSystem,'Mac')) and (approximateLastSignInDateTime ge 2022-09-25T12:00:00Z)

This requires the "Encode URL" checkbox in the Download tool to be checked, and the metadata "nextLevel" output will contain the same URL plus a $skiptoken=xxxxx value. That "nextLevel" URL is what you need to get the next set of rows.

The only way to do this effectively is an Iterative Macro.
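The loop that the Iterative Macro has to perform looks roughly like this in Python. It is a sketch only: `fetch_page` stands in for the real HTTP call, the `"value"` key is an assumed name for the row list, and the next-page key defaults to the "nextLevel" name used in this post, so pass whatever your API actually returns.

```python
def fetch_all(first_url, fetch_page, next_key="nextLevel", max_pages=1000):
    """Follow next-page URLs until the response no longer contains one.

    `fetch_page(url)` must return a dict with the rows under "value" and,
    optionally, the next URL under `next_key` (both names are assumptions).
    """
    rows = []
    url = first_url
    pages = 0
    while url and pages < max_pages:  # max_pages guards against endless loops
        page = fetch_page(url)
        rows.extend(page.get("value", []))
        url = page.get(next_key)
        pages += 1
    return rows

# Stand-in for a paged API: each "URL" maps to a canned response.
fake_pages = {
    "page1": {"value": [1, 2], "nextLevel": "page2"},
    "page2": {"value": [3], "nextLevel": "page3"},
    "page3": {"value": [4, 5]},
}
all_rows = fetch_all("page1", fake_pages.__getitem__)
```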

Now, the Download tool has "Encode URL" checked, BUT the next URL in the metadata is already URL-encoded . . . so it will break, badly, when the nextLevel metadata value is used as the iterative item.

So, long story short, we need to DECODE the URL in the nextLevel metadata before it reaches the Iterative Output point . . . but no such tool exists.

I've made a little macro to decode a URL, but I am no expert. Running the URL through a Find Replace tool against a table of ASCII replacements pulled from w3school.com probably isn't a good answer.

We need a proper tool from Alteryx!
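For comparison, this is what the decoding step looks like in Python's standard library, where `urllib.parse.unquote` turns percent-escapes back into characters. The sample URL is invented for the example.

```python
from urllib.parse import unquote

# Made-up, already-encoded URL of the kind a paged API might hand back.
encoded = "https://example.com/items?%24top=5&%24filter=startswith(name%2C%27A%27)"

# unquote() replaces every %XX escape with the character it stands for.
decoded = unquote(encoded)
print(decoded)
```

Decoding first means the Download tool's own encoding pass is applied once, not stacked on top of an earlier one.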

Someone suggested I use the Formula UrlEncode ability . . .

Unfortunately, the Formula UrlEncode does NOT work. It encodes things based upon a straight ASCII conversion table, and therefore it encodes things like ? and $ when it should not. Whoever is responsible for that code in the formula tool needs to re-visit it.
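To illustrate the difference, Python's `urllib.parse.quote` takes a `safe` argument listing characters that must not be escaped. The set of safe characters below is an assumption picked for this example, not a statement of what any particular API expects, and the sample URL is invented.

```python
from urllib.parse import quote

# Characters left unescaped: an assumption chosen so that the URL structure
# and "$"-prefixed query parameters survive. Adjust for the target API.
SAFE = ":/?&=$(),'"

def encode_url(url):
    """Percent-encode a full URL while leaving structural characters alone."""
    return quote(url, safe=SAFE)

sample = "https://example.com/items?$top=5&$filter=name eq 'A'"
encoded = encode_url(sample)          # spaces become %20, "?" and "$" stay
naive = quote(sample, safe="")        # escapes everything, including "?" and "$"
```

The `naive` result shows the behaviour complained about above: a straight character-by-character conversion also escapes the separators that give the URL its meaning.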

Base URL: https://graph.microsoft.com/v1.0/devices?$count=true&$top=999&$filter=(startswith(operatingSystem,'W...') or startswith(operatingSystem,'Mac')) and (approximateLastSignInDateTime ge 2022-09-25T12:00:00Z)

Correct Encoding:

Hello all,

Like much software on the market, Alteryx uses third-party components developed by other teams/providers/entities. This is a good thing since it means standard features for a very low price. However, these components are upgraded very regularly (usually several times a year) while Alteryx doesn't upgrade them... This leads to missing features, performance issues, bugs left uncorrected or, worse, security flaws.

Among these third-party components:

- CURL (behind the Download tool for APIs): Alteryx is on 7.15 (2006) while the current release is 8.0 (2023)

- Active Query Builder (behind the Visual Query Builder): several years behind

- R: Alteryx is on 4.1.3 (March 2022) while the next is 4.3 (April 2023)

- Python: Alteryx is on 3.8.5 (2020) while the current is 3.10 (April 2023)

- etc.

Of course, you can't upgrade each time, but once a year seems a minimum...

Best regards,

Simon

Add ability to name the columns for the text to column fields tool.

It looks like we can choose which fields to include in a workflow with the List Box interface + Select tool, but we cannot ORDER or REORDER fields.

I did stumble across this post. It looks like it can be done, but it isn't very elegant.

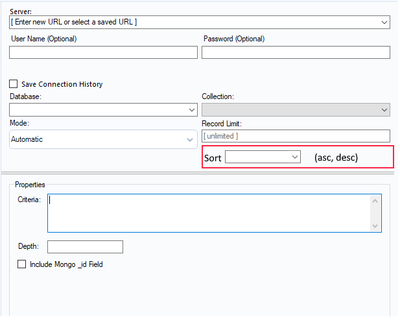

Hi,

Not sure if this idea was already posted (I was not able to find it), but let me try to explain.

When I use the MongoDB Input tool to query the AlteryxService MongoDB (in order to identify issues on the Gallery), I have to extract all data from the AS_Result collection.

The problem is that this collection holds a huge amount of data, and extracting and then parsing _ServiceData_ (blob) consumes time and system resources.

The solution I am proposing is to add a sorting option to the MongoDB Input tool: a simple choice of ASC or DESC order.

With that, I could extract, for example, the last 200 records and do my investigation instead of extracting everything.

In addition, it would be much easier to estimate the daily workload and extract (via the scheduler) only the amount of data we need to analyze every day, and load the results to an external DB.
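For reference, this is the query shape being asked for, written as a small Python helper in the style of pymongo's `find().sort().limit()` chain. It is a sketch under assumptions: sorting on `_id` approximates "newest first" only when the collection uses default ObjectId keys, and the connection details in the comment are placeholders.

```python
DESCENDING = -1  # same numeric value as pymongo.DESCENDING

def latest_documents(collection, sort_field="_id", limit=200):
    """Return the last `limit` documents instead of the whole collection.

    `collection` is expected to behave like a pymongo Collection:
    find() returns a cursor that supports sort() and limit().
    """
    cursor = collection.find({}).sort(sort_field, DESCENDING).limit(limit)
    return list(cursor)

# With pymongo installed, usage might look like this
# (host and port are placeholders):
#   from pymongo import MongoClient
#   coll = MongoClient("mongodb://host:27017")["AlteryxService"]["AS_Result"]
#   recent = latest_documents(coll)
```

Because the sort and limit run on the server, only the 200 requested documents travel over the wire and need their blob parsed.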

Thanks,

Sebastian

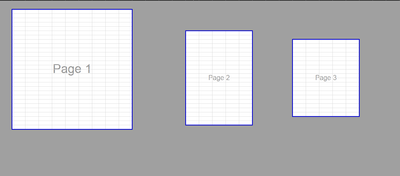

I would like the flexibility to adjust the print area of a workflow in the canvas.

It would be similar to the Excel print area, so we could adjust the whole area easily.

As a safeguard, it could include a function to check whether all tools are covered by the print areas.

It is hard to cut a workflow into pieces when it is huge.

I've used the Table tool with large data sets to make tables with conditional formatting etc. There are a couple of suggestions I'd like to see.

1. I noticed an issue where, if you disconnect the tool that feeds the Table tool, the Table tool forgets your settings quite easily and you may need to redo them. This is quite frustrating if you have lots of columns.

2. The controls for sorting and interacting with columns aren't very good. If they were more like the Select tool controls, that would be fantastic. Perhaps this could be resolved with a Select tool beforehand, but I still think it is worth putting on the Table tool itself.

3. Render output: when making Excel outputs with multiple sheets of varying sizes, it's very difficult to control. The sheets all stretch to the largest size. I've found I've had to put white space in Report Text tools on one side of a Table tool in order to make up the space and prevent stretching. (I found that solution on the forums.)

Thanks.

Frank

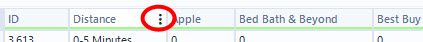

Hi!

Currently, the only visual cue the user has for which column is selected in the Results window is the 3 dots for column options (Data Cleanse/Filter/Sort). It would be incredible if Alteryx would add a border around the entire selected column (like you currently do when selecting a whole row) and maybe even bold the header of the selected column.

I think you might be changing the background color from light grey to white, but it's so subtle it's hard to tell. Make it pop!

Connect to PowerPivot data model or an Atomsvc file

It would be nice to have a tool that automatically normalized data, or calculated percentages. This could be overall or in groups.

For example, maybe I have a dataset with 2 columns: US State and Number of amusement parks. So I know the count of amusement parks by state. But maybe I want a distribution so I can see which percentage are in what state. What I want is (# of parks in a state) / (total across all states). Currently you need at least 3 tools to do this calculation (summarize, then join or append fields, then formula). This is a very common operation, and often I want counts and percentages next to each other in a table.

Such a tool could be called "normalize" or "rescale" or "scale". It could be more general: not just normalizing so values add to 1 (or 100%), but also scaling to other magnitudes, recentering the data, or doing a "standard normal" (z-score) transformation as suggested here:

https://community.alteryx.com/t5/Alteryx-Designer-Discussions/How-to-do-Feature-Normalization-in-Alt...
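The two calculations mentioned in this idea are short when written out. The Python sketch below shows the share-of-total version and the z-score version; the park counts are invented numbers, and the z-score uses the population standard deviation, which is one of two common choices.

```python
from statistics import mean, pstdev

def share_of_total(values):
    """Scale values so they add up to 1 (multiply by 100 for percentages)."""
    total = sum(values)
    return [v / total for v in values]

def z_scores(values):
    """Recenter to mean 0 and rescale to (population) standard deviation 1."""
    mu = mean(values)
    sigma = pstdev(values)
    return [(v - mu) / sigma for v in values]

# Invented counts of amusement parks per state.
parks = {"CA": 50, "FL": 30, "TX": 20}
shares = dict(zip(parks, share_of_total(list(parks.values()))))
scaled = z_scores(list(parks.values()))
```

A grouped variant would apply the same functions to each group's values separately, which is the part that currently takes a Summarize, a Join or Append Fields, and a Formula tool.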

Can we have an option to disable all tool containers at once? Similar to disable all browse tools or tools that write output.

Hello,

Often, I would like to manipulate data between my interface tool (let's say a dropdown or a control parameter) and the Action tool.

This can be things like parsing (Text to Columns...), regex, multi-field, etc.

As of today, we can only write a formula, and this is really limited.

Best regards,

Simon

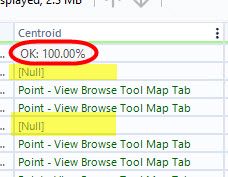

Alteryx currently shows 100% in the profiling of spatial fields in the results window, regardless of if there are rows with missing spatial features. I opened a ticket about this & was told it is expected behavior.

Therefore, I submit the idea that the profiling for spatial fields should give an accurate profile of the field, & if there are nulls in the field, it should identify that column isn't 100% OK and show the % of records that have null values, like the profiling does for every other column in workflows.
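The number being asked for is simple to state. As a minimal Python sketch, assuming missing spatial objects arrive as `None` and using made-up WKT strings as stand-ins for spatial values:

```python
def null_percentage(values):
    """Percentage of entries that are missing (None) in a column."""
    if not values:
        return 0.0
    missing = sum(1 for v in values if v is None)
    return 100.0 * missing / len(values)

# Stand-in spatial column: two points and two missing features.
spatial_column = ["POINT(0 0)", None, None, "POINT(1 1)"]
pct_null = null_percentage(spatial_column)
```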

Thank you!

With data sharing, you can share live data with relative security and ease across Amazon Redshift clusters, AWS accounts, or AWS Regions for read purposes.

Data sharing can improve the agility of your organization. It does this by giving you instant, granular, and high-performance access to data across Amazon Redshift clusters without the need to copy or move it manually.

AWS data sharing feature in Alteryx: it is not working in Alteryx v2020.4.

We would like to know whether Alteryx supports Redshift data sharing.

It uses the same Redshift ODBC or Simba ODBC drivers; the only difference is that the data is shared across clusters. Earlier we saw a limitation on the Alteryx side (it was not able to read data share objects), so we wanted to check whether this is resolved in newer versions of Alteryx. Reference link:

https://docs.aws.amazon.com/redshift/latest/dg/datashare-overview.html
