Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea? Be sure to review our Idea Submission Guidelines for more information!

Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> at least, allow the tool to use black & white images (I wanted to test it on the MNIST, but the tool tells me that it necessarily needs RGB images) ?

Question: will you allow the user to choose between CPU and GPU usage in the future?
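To make the second and third suggestions above concrete, here is a minimal Python sketch of what is being asked for. It is not how the Image Recognition tool works internally; `balanced_sample` and `gray_to_rgb` are hypothetical helper names, and downsampling to the rarest label is just one assumed way to balance the classes.

```python
import random
from collections import defaultdict


def balanced_sample(items, seed=0):
    """Downsample (path, label) pairs so every label keeps the same count."""
    by_label = defaultdict(list)
    for path, label in items:
        by_label[label].append(path)
    if not by_label:
        return []
    # The rarest label decides how many images each label keeps.
    n = min(len(paths) for paths in by_label.values())
    rng = random.Random(seed)
    balanced = []
    for label in sorted(by_label):
        for path in rng.sample(by_label[label], n):
            balanced.append((path, label))
    return balanced


def gray_to_rgb(pixels):
    """Turn a 2-D grid of gray values into RGB triples by repeating each value."""
    return [[(v, v, v) for v in row] for row in pixels]
```

A grayscale dataset such as MNIST could be passed through something like `gray_to_rgb` before training, so that a model expecting three channels still accepts it.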

In any case, thank you again for this new tool, it is certainly perfectible, but very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible thanks to image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


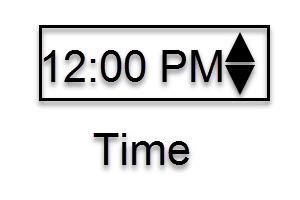

I would like to see a time interface tool similar to the Date and Numeric Up Down tools. I am working on some macros where the user can select the time they would like to use to filter the data.

Example: I want all data loaded after 5:00 PM because it's late and needs to be removed.

Example 2: I want to create an app where the user can select what time range they would like to see records for (business hours, during their shift, etc.)

Currently this requires 2-3 Numeric Up Downs, or a Text Box with directions for the user on how to format the field plus Error tools to prevent bad entries. It could even be UTC time.
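For illustration, the validation a dedicated time picker would take off the user's hands looks roughly like this in Python. This is a sketch only: `parse_time` and `in_range` are made-up names, and the 24-hour "HH:MM" input format is an assumption.

```python
from datetime import datetime, time


def parse_time(text):
    """Parse 'HH:MM' (24-hour clock); raises ValueError on a bad entry."""
    return datetime.strptime(text.strip(), "%H:%M").time()


def in_range(value, start, end):
    """True when value lies in [start, end]; also handles ranges past midnight."""
    if start <= end:
        return start <= value <= end
    # A range such as 22:00-06:00 wraps around midnight.
    return value >= start or value <= end


# Example 1 from the post: keep only records loaded after 5:00 PM.
cutoff = parse_time("17:00")
loaded_times = [time(9, 15), time(17, 30), time(23, 5)]
late = [t for t in loaded_times if t > cutoff]
```

The wrap-around branch in `in_range` covers shift-style ranges that cross midnight, which is awkward to express with two or three numeric inputs.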

Please upgrade the "curl.exe" that is packaged with Designer from 7.15 to 7.55 or greater to allow for the -k flag. Also, please allow the -k functionality in the Alteryx Download tool.

-k, --insecure

(TLS) By default, every SSL connection curl makes is verified to be secure. This option allows curl to proceed and operate even for server connections otherwise considered insecure.

The server connection is verified by making sure the server's certificate contains the right name and verifies successfully using the cert store.
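For comparison, the behaviour that `-k` switches on can be sketched with Python's standard library. This is only an illustration of skipping certificate verification, not how curl or the Download tool implement it; `insecure_context` and `fetch` are hypothetical names.

```python
import ssl
import urllib.request


def insecure_context():
    """Build a TLS context that skips certificate and hostname checks."""
    ctx = ssl.create_default_context()
    # check_hostname must be turned off before verify_mode can be CERT_NONE.
    ctx.check_hostname = False
    ctx.verify_mode = ssl.CERT_NONE
    return ctx


def fetch(url, verify=True):
    """Fetch a URL, optionally skipping verification (comparable to curl -k)."""
    ctx = ssl.create_default_context() if verify else insecure_context()
    with urllib.request.urlopen(url, context=ctx, timeout=30) as resp:
        return resp.read()
```

As with `-k`, this is best kept to internal servers with self-signed certificates, since it removes protection against impersonated hosts.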

Regards,

John Colgan

The behavior of an "Overwrite Sheet (Drop)" configuration is such that it breaks formulas (#REF) that point to the overwritten sheet and named ranges that reference the overwritten sheet. This is a bummer because the only way I've found to overcome the issue is to write a script that re-applies the named range. This works, but it greatly raises the barrier to using this tool and in some corporate environments it won't even be possible.

What would probably be a good alternative behavior is to delete the contents of the sheet, rather than the rows/columns/cells of the sheet. I think both probably have valid use cases, but my proposed functionality is going to cause fewer issues and be the more popular behavior for most users. I believe there is a Google Sheets API call for just this kind of behavior...

I would really love there to be a way to store environment-related config variables without requiring an external config 'file' that you need to bring into every workflow.

Functionality should be similar to how the Alias manager works (although allowing aliasing of more than just DB connections)

The sort of things that would typically be included as such a variable would be:

- contact email address for workflow failure/completion

- other external log file location

- environment name

- environment specific messaging

If this could be set for different subscriptions or collections it would be fantastic. If not, at the server level would suffice.
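As a rough picture of the idea, the variables listed above could be resolved once per environment and then read by every workflow. The Python sketch below uses operating-system environment variables as a stand-in; the `WF_*` names and the `WorkflowConfig` class are invented for illustration and do not correspond to any Alteryx feature.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class WorkflowConfig:
    contact_email: str  # who to notify on workflow failure/completion
    log_dir: str        # external log file location
    environment: str    # environment name, e.g. dev / test / prod
    message: str        # environment-specific messaging


def load_config(env=None):
    """Read settings from a mapping (defaults to the process environment)."""
    env = os.environ if env is None else env
    return WorkflowConfig(
        contact_email=env.get("WF_CONTACT_EMAIL", ""),
        log_dir=env.get("WF_LOG_DIR", ""),
        environment=env.get("WF_ENVIRONMENT", "dev"),
        message=env.get("WF_MESSAGE", ""),
    )
```

Passing a plain dictionary to `load_config` makes it easy to simulate a different subscription or collection without touching the machine's real settings.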

Multi-Resolution Raster format, or .mrr, is a MapInfo Pro Advanced file format that is becoming increasingly common as an output option for many software providers. It is being used in place of .grd and .tiff file formats in some instances, because of its many advantages over most raster formats. I would like to request that Alteryx allow .mrr files to be included as input options, as it seems this trend isn't going to decrease anytime soon.

There is an "update: insert if new" option for the Output Data tool when using an ODBC connection to write to Redshift.

This option really needs to be added to the "Amazon Redshift Bulk Loader" method of the Output Data tool, and to the Write Data In-DB tool.

Without it, you are forced to use the "Delete and append" output, which is a pain because you then need to keep reinserting data that you already have, slowing down the process.

Using the ODBC connection option of the Output Data tool to write to Redshift is not an option as it is too slow. When trying to write 200MB of data, the workflow runs for 20 minutes without any data reaching the destination table. I end up just stopping the workflow.
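One common way to get "insert if new" behaviour with a bulk load is to load into a staging table first and then insert only the rows whose keys are missing from the target. The Python sketch below just builds that SQL statement; it is an assumed pattern, not what the bulk loader does today, and the table and key names in the usage line are hypothetical.

```python
def insert_if_new_sql(target, staging, keys):
    """Build an anti-join INSERT that copies only rows missing from target.

    Identifiers are interpolated as-is, so they must come from trusted input.
    """
    if not keys:
        raise ValueError("at least one key column is required")
    on = " AND ".join(f"t.{k} = s.{k}" for k in keys)
    return (
        f"INSERT INTO {target} "
        f"SELECT s.* FROM {staging} s "
        f"LEFT JOIN {target} t ON {on} "
        f"WHERE t.{keys[0]} IS NULL;"
    )


# Hypothetical usage: the staging table was filled by the bulk load step.
sql = insert_if_new_sql("sales", "sales_stage", ["id"])
```

Because the bulk load only ever touches the staging table, existing rows in the target are never deleted and reinserted.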

Hi, currently the S3 Upload tool only allows the file formats *.yxdb, *.json, *.csv and *.avro.

In order to optimize loading to Redshift, it would be good to have a few more functions:

1. Ability to s3 upload with *.gz format

eg: Reading in a file using the input tool -> s3 upload tool (which has a gzip function with the following options - record limit, delimiter, UTF8)

http://docs.aws.amazon.com/redshift/latest/dg/t_loading-gzip-compressed-data-files-from-S3.html

2. Change max record limit, delimiter, UTF8 format

3. Change the objectName to 'take file/table name from field' with filename containing filename or part of filename similar to the 'Output tool'
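The gzip step from points 1 and 2 can be sketched in Python with the standard library. This only shows producing a compressed, delimited, UTF-8 file with an optional record limit; the upload itself is left out, and `write_gzip_csv` is a hypothetical helper name.

```python
import csv
import gzip


def write_gzip_csv(rows, path, delimiter=",", max_records=None):
    """Write rows to a gzip-compressed, UTF-8 delimited file; return row count."""
    count = 0
    with gzip.open(path, "wt", encoding="utf-8", newline="") as fh:
        writer = csv.writer(fh, delimiter=delimiter)
        for row in rows:
            if max_records is not None and count >= max_records:
                break
            writer.writerow(row)
            count += 1
    return count
```

A file written this way would then be uploaded as a `.gz` object and loaded by Redshift as described in the AWS page linked above.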

Adrian

The ability to limit the number of records (either on a specific input tool, or via the Workflow Properties) is super useful when developing a workflow...

...but how many times do you forget that a record limit was set and then spend ridiculous amounts of time trying to figure out why something isn't working properly? (I can't be the only person this has happened to...).

Wouldn't it be fantastic if a warning/message was shown in the workflow results (e.g. "Input Tool (n) has a record limit set" or "Record Limits are set on the Workflow Properties")?
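Until something like this exists, a check could be scripted outside Designer. The Python sketch below scans workflow XML for record limits; it assumes each tool is a `Node` element with a `ToolID` attribute and a `Properties/Configuration/RecordLimit` child carrying a `value` attribute, which is an assumption about the file layout rather than a documented schema.

```python
import xml.etree.ElementTree as ET


def find_record_limits(workflow_xml):
    """Return one warning per tool whose RecordLimit value is non-empty."""
    root = ET.fromstring(workflow_xml)
    warnings = []
    for node in root.iter("Node"):
        # Look only at this node's own configuration, not at nested tools.
        limit = node.find("./Properties/Configuration/RecordLimit")
        if limit is None:
            continue
        value = (limit.get("value") or "").strip()
        if value:
            tool_id = node.get("ToolID")
            warnings.append(f"Tool ({tool_id}) has a record limit set: {value}")
    return warnings
```

Running it over the text of a saved workflow would produce messages in the spirit of the first example above.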

With a module that contains a lot of Tool Containers, it would be nice to have an option (similar to "Disable All Tools that Write Output" on the Runtime tab) to disable all Tool Containers, so that I can then pick the one or two that I would like to enable.

I periodically consume data from state governments that is available via an ESRI ArcGIS Server REST endpoint. Specifically, a FeatureServer class.

For example: http://staging.geodata.md.gov/appdata/rest/services/ChildCarePrograms/MD_ChildCareHomesAndCenters/Fe...

Currently, I have to import the data via ArcMap or ArcCatalog and then export it to a datatype that Alteryx supports.

It would be nice to access this data directly from within Alteryx.
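For context, pulling features from such an endpoint comes down to calling the layer's `query` operation. The Python sketch below only builds the request URL; the layer URL in the usage line is a placeholder (the real one above is truncated), and `feature_query_url` is a hypothetical helper name.

```python
from urllib.parse import urlencode


def feature_query_url(layer_url, where="1=1", out_fields="*", fmt="json"):
    """Build a query URL for one layer of an ArcGIS REST FeatureServer."""
    params = {"where": where, "outFields": out_fields, "f": fmt}
    return layer_url.rstrip("/") + "/query?" + urlencode(params)


# Placeholder layer URL, for illustration only.
url = feature_query_url("https://example.com/arcgis/rest/services/Demo/FeatureServer/0")
```

The response to such a request is JSON, so it could in principle be fetched with the Download tool and parsed, but a native input option would remove the ArcMap/ArcCatalog round trip.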

Thanks!

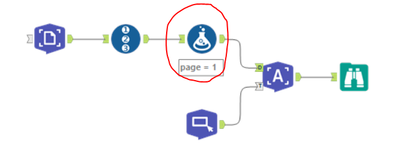

When using the text mining tools, I have found that the behaviour of using a template only applies to documents with the same page number.

So in my use case I've got a PDF file with 100+ claim statements which are all laid out the same (one page per statement). When setting up the template I used one page to set the annotations, and then input this into the T anchor of the Image to Text tool. Into the D anchor of this tool is my PDF document with 100+ pages. However when examining the output I only get results for page 1.

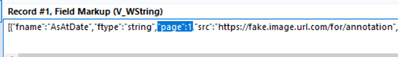

On examining the JSON for the template, I can see that there is a reference to the template page number:

And playing around with a generate rows tool and formula to replace the page number with pages 1 - 100 in the JSON doesn't work. I then discovered that if I change the page number on the image input side then I get the desired results.

However, as I suspect this is a common use case for the Image to Text tool, an improvement would be to add an option in its configuration to apply the same template to all pages.

There are a lot of SQL engines on top of Hadoop, such as:

- Apache Drill / https://drill.apache.org/

Schema-free, low latency SQL Query Engine for Hadoop, NoSQL and Cloud Storage.

It's backed at enterprise level by MapR.

- Apache Kylin / http://kylin.apache.org/

Apache Kylin™ is an open source Distributed Analytics Engine designed to provide a SQL interface and multi-dimensional analysis (OLAP) on Hadoop, supporting extremely large datasets. It was originally contributed by eBay Inc.

- Apache Flink / https://flink.apache.org/

Apache Flink is an open source platform for distributed stream and batch data processing. Flink’s core is a streaming dataflow engine that provides data distribution, communication, and fault tolerance for distributed computations over data streams. The creators of Flink provide professional services through their company, Data Artisans.

- Facebook Presto / https://prestodb.io/

Presto is an open source distributed SQL query engine for running interactive analytic queries against data sources of all sizes ranging from gigabytes to petabytes.

It's backed at enterprise level by Teradata - http://www.teradata.com/PRESTO/

My suggestion for Alteryx product managers is to build a tactical approach for these engines in 2016.

Regards,

Cristian.

Apache ORC is commonly used in the context of Hive, Presto, and AWS Athena. A common pattern for us is to use Alteryx to generate Apache Avro files, then convert them to ORC using Hive. For smaller data sizes, it would be convenient to be able to simply output data in the ORC format using Alteryx and skip the extra conversion step.

ORC supports a variety of storage options that users may wish to override from sensible Alteryx defaults. We typically use the SNAPPY compression codec.

Once we have our files under source control (git), the .bak file is not necessary and effectively doubles our storage usage.

I need to be able to connect to the Salesforce CampaignInfluence object, which is only available through API v37 or later. Currently, the Salesforce connector uses v36 of the REST API, whereas the latest version is v41 (about a year's gap between v36 and v41). I was told that there was no immediate plan to update the default connector to the latest version. It would be nice to have visibility of objects available in the newer versions.
