Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
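Until the tool offers these options, two of the requests above can be approximated before the images reach it. The following is only a minimal sketch in plain Python, not how the Image Recognition tool works internally: `balance_by_label` and `gray_to_rgb` are hypothetical helper names, images are represented as nested lists of pixel values, and downsampling to the smallest class is just one of several ways to balance a training set.

```python
import random
from collections import defaultdict


def balance_by_label(samples, seed=0):
    """Downsample so that every label keeps the same number of items.

    `samples` is a list of (item, label) pairs, e.g. (file path, class name).
    """
    by_label = defaultdict(list)
    for item, label in samples:
        by_label[label].append(item)
    if not by_label:
        return []
    # The smallest class decides how many items each label may keep.
    target = min(len(items) for items in by_label.values())
    rng = random.Random(seed)  # fixed seed so the split is repeatable
    balanced = []
    for label, items in by_label.items():
        for item in rng.sample(items, target):
            balanced.append((item, label))
    return balanced


def gray_to_rgb(image):
    """Turn a 2-D grid of gray values into a grid of (r, g, b) triples."""
    return [[(value, value, value) for value in row] for row in image]
```

Copying the gray value into all three channels keeps the picture visually identical while satisfying a model that expects RGB input.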

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will help more people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


I'm really liking the new assisted modelling capabilities released in 2020.2, but the tool should not error if the data contains spatial, blob, date, or datetime fields.

This is essentially telling the user to add an extra step of adding a select before the assisted modelling tool and then a join after the models. I think the tool should be able to read in and through these field types (especially dates) and just not use them in any of the modelling.

An even better enhancement would be to transform date as part of the assisted modelling into something usable for the modelling (season, month, day of week, etc.)
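As an illustration of the kind of transformation meant here, this is a minimal Python sketch that derives month, day of week, and season from a date. The function name `date_features` is hypothetical, and the season mapping assumes meteorological seasons in the northern hemisphere; it is not a description of anything assisted modelling does today.

```python
from datetime import date

# Assumption: meteorological seasons, northern hemisphere.
_SEASON_BY_MONTH = {
    12: "winter", 1: "winter", 2: "winter",
    3: "spring", 4: "spring", 5: "spring",
    6: "summer", 7: "summer", 8: "summer",
    9: "autumn", 10: "autumn", 11: "autumn",
}

# date.weekday() returns 0 for Monday through 6 for Sunday.
_DAY_NAMES = [
    "Monday", "Tuesday", "Wednesday", "Thursday",
    "Friday", "Saturday", "Sunday",
]


def date_features(d):
    """Derive model-friendly categorical features from a date."""
    return {
        "month": d.month,
        "day_of_week": _DAY_NAMES[d.weekday()],
        "season": _SEASON_BY_MONTH[d.month],
    }


features = date_features(date(2020, 7, 4))
# features == {"month": 7, "day_of_week": "Saturday", "season": "summer"}
```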

In Designer user settings, you have the option to set a default dataset for the reference base map. This is a fantastic feature when working with spatial objects. My suggestion is that this feature should persist when viewing .yxdb files outside of Designer workflows.

When I'm browsing a folder of .yxdb files that contain spatial objects, clicking on those files opens a viewing/browse window outside of Designer. Unfortunately, the base reference map setting does not currently transfer and must be reselected each time a file is opened. I would like to suggest that this setting persist in this situation.

I often need to concatenate and sort strings that are on the same row. Currently, the only way to do this in Alteryx is by transposing each string onto a separate row, sorting the rows, then summarising them back together.

I would love a new function in the Formula tool to concatenate strings. This function could let you choose a sort order (or no sort) and the concatenation separator:

Concatenate("SortAscending",",",[Field1],[Field2],...)
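To make the intended behaviour concrete, here is a rough Python equivalent of the proposed function. It is only a sketch of the idea: the option names `"SortDescending"` and `"NoSort"` are assumptions (the post only names `"SortAscending"`), and skipping null values is a design choice of this sketch rather than part of the request.

```python
def concatenate(sort_order, separator, *fields):
    """Join the values of several fields from one row into a single string.

    sort_order: "SortAscending", "SortDescending", or "NoSort".
    """
    # Assumption: null (None) fields are skipped rather than rendered.
    values = [str(field) for field in fields if field is not None]
    if sort_order == "SortAscending":
        values.sort()
    elif sort_order == "SortDescending":
        values.sort(reverse=True)
    elif sort_order != "NoSort":
        raise ValueError(f"unknown sort order: {sort_order!r}")
    return separator.join(values)


result = concatenate("SortAscending", ",", "pear", "apple", "fig")
# result == "apple,fig,pear"
```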

Please add support for reading and writing spatial data from a SpatiaLite database. ESRI and QGIS have supported this format out of the box for quite a while. We have a mixed-use environment (Alteryx, ESRI, MapInfo and QGIS) and would like a common file-based spatial format. You already support SQLite, its container, so expanding to the SpatiaLite spec should be a no-brainer.

I don't work with Boolean fields particularly often, so I only just found this need. When looking at a Boolean field inside of a Browse tool, the Browse Profile is surprisingly unhelpful, and states "Chart not available for this data type".

When looking at the same data as a V_STRING, there is a lot of very helpful information displayed:

Obviously some of the String information in the second screenshot is likely unnecessary, but I'd like to see enhancements to support some more information, or at least the frequency chart for Boolean fields.
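For reference, the frequency information requested here is cheap to compute. The sketch below is plain Python, not Browse tool code; `boolean_profile` is a hypothetical name, and treating null as a third category is an assumption.

```python
from collections import Counter


def boolean_profile(values):
    """Count True, False, and null values and give each one's share."""
    counts = Counter(values)
    total = len(values)
    profile = {}
    for key in (True, False, None):
        share = counts[key] / total if total else 0.0
        profile[key] = (counts[key], share)
    return profile


profile = boolean_profile([True, True, False, None])
# profile == {True: (2, 0.5), False: (1, 0.25), None: (1, 0.25)}
```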

When opening the File Open dialog in Designer for loading a file from the Gallery, the default location inside the Gallery is set to "All Locations".

In most cases, a developer wants to work on their own workflows, not on a workflow that someone else happened to store in the Gallery. The default should therefore be "My Private Studio".

Please change the File Open dialog so that it opens with "My Private Studio" selected.

I enjoy using Alteryx. It saves me a lot of time compared to manually writing scripts. But one of my frustrations is the lack of 'intelligence' in the IDE. Please make it so that if I change the name of a column in a Select tool or a Join, every occurrence of that variable/column in selects, summarises, formulae and probably all tools downstream of the Select tool is renamed as well. In other IDEs I believe this is called refactoring. It doesn't seem like a big feature to build, it would save enormous amounts of time, and it would make me very happy.
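The core of such a rename can be sketched in a few lines of Python. This is an illustration only: it assumes downstream expressions are available as plain strings that reference columns as `[Name]`, and `rename_field` is a hypothetical helper, not an Alteryx API. A real implementation would also have to leave string literals alone and stop at tools that redefine the column.

```python
def rename_field(expressions, old_name, new_name):
    """Rewrite every [old_name] reference in a list of formula strings."""
    old_ref = "[" + old_name + "]"
    new_ref = "[" + new_name + "]"
    # Matching the full bracketed reference avoids touching columns whose
    # names merely start with or contain old_name.
    return [expression.replace(old_ref, new_ref) for expression in expressions]


updated = rename_field(
    ["[Sales] * 1.2", "IIF([Sales] > 0, [Sales Tax], 0)"],
    "Sales",
    "Revenue",
)
# updated == ["[Revenue] * 1.2", "IIF([Revenue] > 0, [Sales Tax], 0)"]
```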

While we're on the 'intelligence' of the IDE, there is a small, easily fixable bug. When I have a variable with spaces in the middle, for example 'This is my column name', and start writing "[Thi" in the code field, the dropdown box suggests "[This is my column name]". All good so far. But if I get a little further into the variable name and write "[This i" instead, the dropdown box still suggests "[This is my column name]", and when I click it, the result is "[This [This is my column name]". Likewise, if I write "[This is my col", the result is "[This is my [This is my column name]". Clearly I could avoid this by using column names with underscores or hyphens, but I wanted to highlight the poor functionality here.
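The behaviour described suggests that the editor replaces only the text after the last space. A possible fix, sketched here in Python under that assumption, is to replace everything from the last unclosed "[" instead. `apply_suggestion` is a hypothetical name, and this is a guess at the cause rather than a look at the actual editor code.

```python
def apply_suggestion(typed, suggestion):
    """Insert a field suggestion, replacing the unfinished [reference."""
    start = typed.rfind("[")
    # No open bracket, or the last reference is already closed:
    # nothing to replace, so just append the suggestion.
    if start == -1 or "]" in typed[start:]:
        return typed + suggestion
    # Replace from the opening bracket, not from the last space.
    return typed[:start] + suggestion


fixed = apply_suggestion("[This is my col", "[This is my column name]")
# fixed == "[This is my column name]"
```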

Kind regards,

Ben Hopkins

Presto is an in-memory SQL query engine on Hadoop, and having Alteryx connect to Presto would vastly improve performance. I would appreciate it if you could introduce a Presto connector.

I love how the new (as of 2018.3) Python tool has a Jupyter notebook in the config panel. Jupyter is great, has a lot of built-in help, and is so robust that there is really no need for an external installation of any Python IDE anywhere else. I would love to see that with the R tool as well. For now (as of 2018.3), it's much easier to develop R outside of Alteryx, e.g. in RStudio, and then copy the code into the R tool.

Therefore, this request is to implement R just like Python, using Jupyter. This would allow us to script it and see our visualizations (etc.) right in our Jupyter window. It would eliminate the need to have RStudio off on the side. Here are a couple of links that may hint at how to make it happen:

- http://docs.anaconda.com/anaconda/user-guide/tasks/use-r-language/

- http://docs.anaconda.com/anaconda/navigator/tutorials/r-lang/

Hope you can make it happen -- thanks!

As an Alteryx Designer user, I would like the ability to write .hyper files to a subdirectory on Tableau Server to make my Tableau site easier to manage.

When a scheduled or manual Alteryx workflow tries to overwrite or append to a Tableau hyper extract file, it fails with "File is locked by another process". This happens because a Tableau workbook is using the hyper extract file. We know this behavior is expected: the file is open in another user's session or process, so Alteryx cannot modify it until the file is closed. The Alteryx product team should consider handling this case in a future release, as it would solve many problems.

Giving the user the ability to paste a tool with one of three connection options:

- Paste with Incoming Connections,

- Paste with Outgoing Connections (where applicable),

- Paste with Both Connections (where applicable)

could make it easier to configure the workflows where many incoming and/or outgoing connections are necessary for a specific source or target tool (i.e. a certain mapping table joined to several data streams in the same workflow after being modified with a formula tool to match with a specific stream).

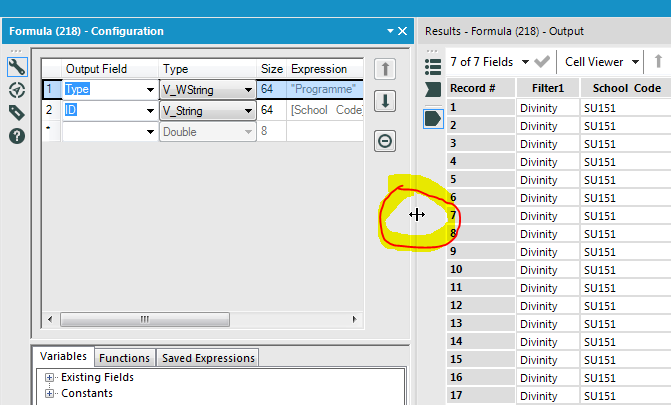

I have a dual monitor setup. My canvas lives on the left monitor, and I combine tool config and results on the right monitor. I've noticed that it's incredibly difficult to resize the config vs results window size. This is because you have to hover over EXACTLY the right part of the divider until the resize icon appears, as below:

The difficulty arises because the target zone, over which the cursor changes from an arrow to the resize tool, is only 1 pixel wide. If you have a high resolution screen, or a slightly fiddly mouse, it's almost impossible to successfully hover over the correct place. Please consider increasing the width of the hover zone to facilitate window resizing. I hope I've explained this adequately, please let me know if I need to amend. Thanks!

There is a known error when using the Write Data In-DB tool. When writing to a table in an MS SQL database that has an "identity" column, you get the following error: Error: Write Data In-DB (X): Error running PreSQL on "NoTable": [DB Connection]An explicit value for the identity column in table [table_name] can only be specified when a column list is used and IDENTITY_INSERT is ON.

Essentially, this error happens when the table you are trying to write to has a column with the following configuration or similar: IDENTITY(1, 1) NOT NULL

I am suggesting that the Write Data In-DB tool should be configurable to insert only into the columns specified by the user, similar to the functionality of this SQL statement:

INSERT INTO [table_name]

(column1, column2, column3, etc...)

VALUES (new_value1, new_value2, new_value3, etc...)

This would 1) solve the above issue (for which there is no viable workaround), and 2) allow users more flexibility in how they write their data.
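The effect of an explicit column list can be tried out with Python's built-in `sqlite3` module. This is a sketch under stated assumptions: SQLite stands in for MS SQL Server, an `AUTOINCREMENT` primary key stands in for `IDENTITY(1, 1) NOT NULL`, and the table `orders` and the helper `insert_rows` are made up for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders ("
    " id INTEGER PRIMARY KEY AUTOINCREMENT,"  # stand-in for an identity column
    " customer TEXT NOT NULL,"
    " amount REAL NOT NULL)"
)


def insert_rows(connection, table, columns, rows):
    """Insert rows while naming only the columns the user selected.

    Table and column names are interpolated into the SQL text, so they
    must come from a trusted source; the values are bound as parameters.
    """
    column_list = ", ".join(columns)
    placeholders = ", ".join("?" for _ in columns)
    sql = f"INSERT INTO {table} ({column_list}) VALUES ({placeholders})"
    connection.executemany(sql, rows)


# The id column is left out, so the database generates it.
insert_rows(conn, "orders", ["customer", "amount"], [("Ada", 10.0), ("Grace", 12.5)])
stored = conn.execute("SELECT id, customer, amount FROM orders ORDER BY id").fetchall()
# stored == [(1, "Ada", 10.0), (2, "Grace", 12.5)]
```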


So - given the importance of Macros - it would be valuable to have the ability within Alteryx to generate a test harness with test data that ships with the macro (this way you can maintain and enforce regression testing)

For example:

- Macro that takes in 2 numbers and adds them

- Alteryx would look at the Macro to determine the input types, output types (in this case - two integers; with an integer output)

- Based on this, it could walk you through creating a fairly robust test harness that allowed the user to specify a set of inputs, and prompt you to also include things like blanks; negatives; etc (boundary values; deliberately destructive values like % or ' signs in strings; etc)
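The example above could look roughly like this when written out by hand in Python. It is a sketch of the idea only: `add_macro` stands in for the macro under test, the listed cases are illustrative boundary values, and expecting a `TypeError` for a blank input is an assumption about how such a macro should behave.

```python
def add_macro(a, b):
    """Stand-in for the macro under test: adds two numbers."""
    return a + b


# Each case pairs the inputs with the expected result. An exception type
# as the expected result means the call is supposed to fail that way.
CASES = [
    ((2, 3), 5),
    ((0, 0), 0),                # zero boundary
    ((-4, 4), 0),               # negative input
    ((2**31 - 1, 1), 2**31),    # just past the 32-bit integer range
    ((None, 1), TypeError),     # blank input
]


def run_harness(macro, cases):
    """Run every case and return a list of (inputs, expected, actual) failures."""
    failures = []
    for inputs, expected in cases:
        try:
            outcome = macro(*inputs)
        except Exception as exc:
            outcome = type(exc)
        if outcome != expected:
            failures.append((inputs, expected, outcome))
    return failures


failures = run_harness(add_macro, CASES)
# An empty list means every case passed.
```

Keeping the cases next to the macro means the same list can be rerun after every change, which is the regression-testing benefit the idea is after.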

I don't know if this has been implemented or talked about, but it would be a pretty nice QoL change to add a "select all" button when appending fields to a record via the Find Replace tool.

For example, I have a dataset where I will end up with 1000+ fields needed to be appended. Going through and clicking 1000 times is not ideal. If this is already a feature or has a hotkey, please let me know.

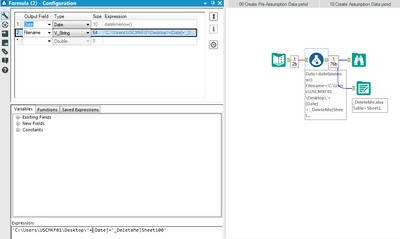

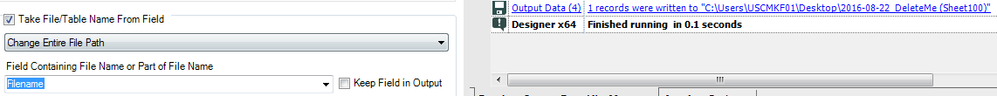

I was asked by a client @brianvigus to help him put the current date onto a daily Excel output file. When they tried to prepend/append the date, it only would do so to the worksheet name and not to the workbook name. I do like the ability to update the table (worksheet) name and understand their desire to update the workbook name too.

My solution was to create a COMPLETE PATH\FILENAME|SHEETNAME data element and use the existing option to change the entire file path. That works.
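The workaround amounts to building one string per output file. A Python sketch of that string, assuming an ISO date suffix and an `.xlsx` extension, might look like this. `dated_output_path` is a hypothetical name, and the separator between file and sheet is left as a parameter because the exact form Designer expects should be checked against the Output Data tool; the default simply follows the pattern written above.

```python
from datetime import date


def dated_output_path(folder, base_name, sheet, day, separator="|"):
    """Build a COMPLETE PATH\\FILENAME|SHEETNAME value with the date in the file name."""
    folder = folder.rstrip("\\")
    file_name = f"{base_name}_{day.isoformat()}.xlsx"
    return f"{folder}\\{file_name}{separator}{sheet}"


path = dated_output_path("C:\\Reports", "Daily", "Sheet1", date(2024, 1, 31))
# path == "C:\\Reports\\Daily_2024-01-31.xlsx|Sheet1"
```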

I don't know if the solution to this idea is to update the help instructions to explain that table renames act differently than file renames or if the solution requires more functional options on append/prepend.

Thanks,

Mark

The beauty of workflow constants is that a user can change a value in one place and it will be effective everywhere in the workflow where the constant is used. I want to be able to update the workflow constant value itself using an App interface. If I use interface tools to update the constant value wherever it appears, the constant loses its value and beauty. This becomes a maintenance nightmare and an interface tool clutter. Can I have a new tool or a current tool in the interface palette which allows me to change workflow constants in the interface? Thank you.

It's definitely not good UX that the full browse is now in the output window. I usually have my Output on autohide, and it's a few extra clicks to see the browses now... Can we have the Browse Everywhere tab in both the Output and Configuration panels?

Hello Alteryx,

It seems that the Endpoint parameter for the Amazon S3 Upload tool only supports "Path Like" URLs. It would be great if the Endpoint parameter could also accept "Virtual Hosted" URLs.

When we enter a "Virtual Hosted" URL, the "Bucket Name" and "Object Name" parameters don't respond correctly.

The three dots option for the "Bucket Name" parameter returns the bucket name and the object name at the same time. And the three dots option for the "Object Name" parameter doesn't suggest any object name.

We can enter those manually, but we lose some of the Alteryx functionality.

It would be a great improvement if the Endpoint parameter supported "Virtual Hosted" URLs, so that we keep the "Bucket Name" and "Object Name" suggestions once the Endpoint is registered.
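The difference between the two URL forms is where the bucket name sits: in the path for the path-style form, in the host name for the virtual-hosted form. The Python sketch below shows one way to recover the bucket and object names from either. `split_s3_url` is a hypothetical helper, the example URLs are made up, and the host-name check is a simplification that does not cover every endpoint variant.

```python
from urllib.parse import urlparse


def split_s3_url(url):
    """Return (bucket, object_key) for a path-style or virtual-hosted URL."""
    parsed = urlparse(url)
    host = parsed.netloc
    path = parsed.path.lstrip("/")
    if host.startswith("s3.") or host.startswith("s3-"):
        # Path-style: https://s3.<region>.amazonaws.com/<bucket>/<key>
        bucket, _, key = path.partition("/")
    else:
        # Virtual-hosted: https://<bucket>.s3.<region>.amazonaws.com/<key>
        bucket, found, _ = host.partition(".s3")
        if not found:
            raise ValueError(f"not an S3 endpoint: {host!r}")
        key = path
    return bucket, key


path_style = split_s3_url("https://s3.eu-west-1.amazonaws.com/my-bucket/folder/file.csv")
virtual_hosted = split_s3_url("https://my-bucket.s3.eu-west-1.amazonaws.com/folder/file.csv")
# Both calls return ("my-bucket", "folder/file.csv").
```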

Is it in the roadmap?

François
