Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by listing the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

> by at least allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool, it is certainly perfectible, but very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible thanks to image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))
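Regarding the black & white limitation raised in this idea: until the tool accepts single-channel input, one possible workaround is to copy each grayscale intensity into three identical channels before training. The sketch below uses plain Python lists as a stand-in for real image files; `gray_to_rgb` and the tiny sample image are hypothetical, and the post does not say what the tool requires beyond "RGB images".

```python
from typing import List, Tuple

GrayImage = List[List[int]]                  # rows of 0-255 intensities
RgbImage = List[List[Tuple[int, int, int]]]  # rows of (R, G, B) pixels


def gray_to_rgb(image: GrayImage) -> RgbImage:
    """Copy each grayscale intensity into the R, G and B channels."""
    return [[(value, value, value) for value in row] for row in image]


# A tiny 2x3 stand-in for a 28x28 MNIST digit (hypothetical sample data).
digit = [
    [0, 128, 255],
    [255, 128, 0],
]
rgb_digit = gray_to_rgb(digit)
print(rgb_digit[0])  # [(0, 0, 0), (128, 128, 128), (255, 255, 255)]
```

In practice the same idea would be applied to the image files themselves with an imaging library before pointing the tool at them.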

When selecting a HANA view in Alteryx that has a required prompt/parameter/variable, Alteryx does not pre-populate the SQL with the required HANA prompt code. Instead it gives a SQL error. I am requesting an Alteryx enhancement that would read the HANA view, determine the HANA prompt SQL required, and either pre-populate the prompt or ask the user to fill in the prompt/variable box. This could work similarly to how Tableau works when connecting directly to a HANA view that requires a prompt: it pops up the prompt and allows you to select a value, and when you convert the Tableau connection to custom SQL it saves the SQL code required for the prompt.

Would like to use arrows and other shapes for documentation. Moreover, having "anchors" (i.e., like in a wiki) would really facilitate moving about large workflows. I imagine the former is not hard to implement, though uncertain about the latter.

Check out the mock-up workflow for an admittedly bad example.

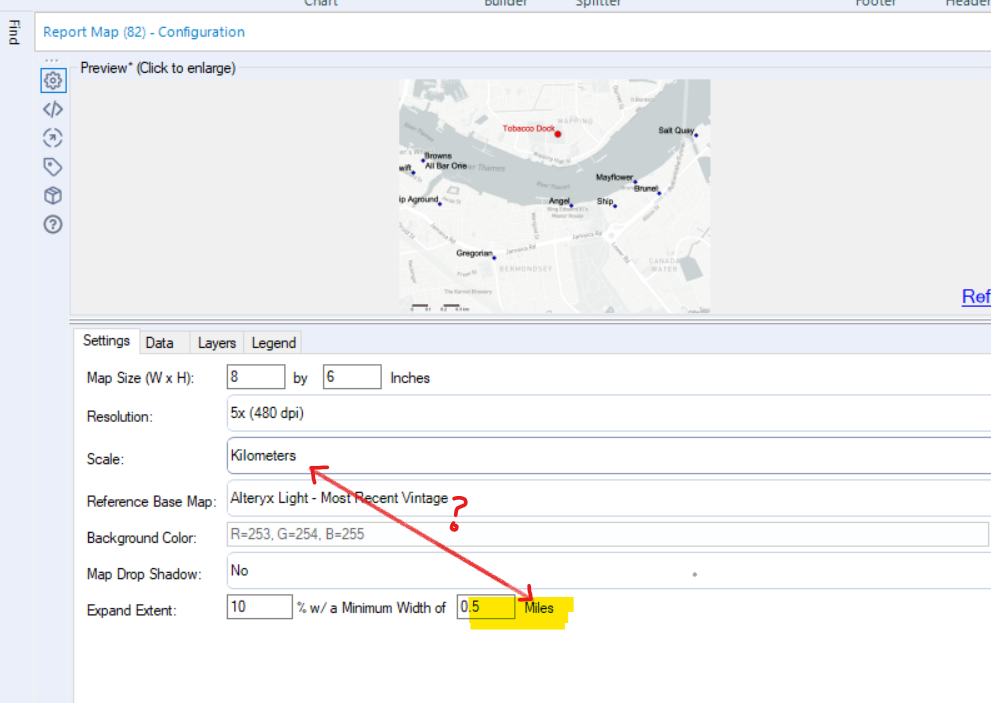

In the Report Map tool - you have the ability to select units - KM vs. Miles

You also have the ability to put a map buffer around your zoomed points - either in % or distance.

However - if you've selected your units as KM - the "Expand Extent" is still in Miles - see picture below.

Please can you make this consistent?

When I publish using the tabcmd publish command in an event or using the Publish to Tableau Server macro, the extract becomes LIVE. I do not want it to become LIVE, because when it does, I cannot refresh the extract using the tabcmd refreshextracts command or set up a refresh schedule in Tableau Server. Is there any way to make this TDE stay an extract after Alteryx rewrites the file? When the extract is live, it will not refresh until I manually select Refresh in Tableau Server while I am in the workbook that is connected to the data source I am publishing.

I posted the above question and was told this would be good to add to the New Idea module. Thanks!

It has become clear that the Jupyter Notebook integration caches code and does not appropriately clear when there are changes made - resulting in "saved" workflows that do not contain updated code. This happens when two people are using a "shared" workflow (emailed back and forth or from a shared drive) if one person does not completely shut down out of Designer Desktop if they had previously had the workflow open at any point. This has been confirmed by Alteryx Support and is not just my hunch.

This also happens sometimes with a single user - where the Jupyter Notebook save button has been pressed multiple times and the workflow has been saved, but the changes do not make it to the file.

The integration is a step in the right direction for sure and is great to use - but my idea is that the cache should be attached to the workflows, not the entire session of Designer. Not knowing if changes were actually saved, and discovering that some were not is extremely frustrating.

We are using Alteryx Designer to bulk upload to a Snowflake database. We also use Alteryx Connect to pick up metadata from Snowflake. It would be awesome if Designer could add table and field descriptions and the Snowflake loader could pick up those descriptions automatically.

As of now, the metadata loader doesn't pick up metadata content in real time. This feature would motivate our analysts to document their work, which would improve Connect adoption in our department.

Currently, there isn't any option in the Salesforce Input tool > Existing Reports to remove the 2000 record limit for queries. Is there any way of removing that cap so that our queries will return the correct number of records?

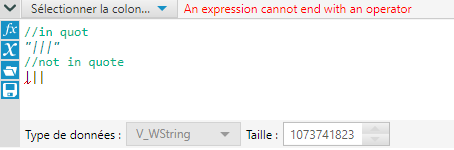

I love the color coding in the Formula tool (and hope one day we have the same for the query tool ^^ ). However, it appears the italics cause a readability problem.

Here is the issue I have with pipes on the second line:

They are particularly hard to distinguish from a slash /. 😞

It is very difficult moving from Alteryx functions to SQL In-Database as a business user; I need to learn a whole new language.

In the short term Alteryx should provide a simple function reference, as similar as possible to the Formula tool, for building formula in the in-database tools.

Longer term I'd like there to be a parser from Alteryx Formulae to SQL so I can just write in my favourite Alteryx formula (or a subset thereof) and Alteryx handles the conversion to SQL.
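To make the longer-term request concrete, here is a minimal sketch of what translating a small subset of formula syntax to SQL could look like. It is only an illustration, not how Alteryx works or would implement this: `FUNCTION_MAP` and `formula_to_sql` are hypothetical, the target SQL function names vary by database, and a regex pass like this ignores string literals and field names that contain function-like text.

```python
import re

# Hypothetical mapping from a few formula functions to common SQL
# equivalents; real dialects differ (for example LEN instead of LENGTH).
FUNCTION_MAP = {
    "uppercase": "UPPER",
    "lowercase": "LOWER",
    "trim": "TRIM",
    "length": "LENGTH",
}


def formula_to_sql(formula: str) -> str:
    """Translate a small subset of formula syntax into SQL text."""
    # [Field Name] -> "Field Name" (ANSI-style quoted identifier)
    sql = re.sub(r"\[([^\]]+)\]", r'"\1"', formula)

    def rename(match: re.Match) -> str:
        # Swap known function names; leave anything unknown untouched.
        mapped = FUNCTION_MAP.get(match.group(1).lower())
        return f"{mapped}(" if mapped else match.group(0)

    return re.sub(r"\b([A-Za-z_]\w*)\s*\(", rename, sql)


print(formula_to_sql("Uppercase(Trim([Customer Name]))"))
# UPPER(TRIM("Customer Name"))
```

A real converter would need a proper parser for the formula language plus per-database dialect handling, which is presumably why this is a longer-term ask.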

As an enterprise customer, we need to know that Alteryx products are kept secure.

Please post vulnerabilities and fixes, and provide a way for me to subscribe to these alerts.

The option to "Disable all tools that Write Output" is great during testing but I often need to toggle back and forth and its location on the Runtime tab of the Workflow Config is inconvenient.

I think it would be great to have a button for that on the toolbar with the added feature that it would visually display whether the feature is on or off (so you don't need to see an Output Data tool to determine the current status)

There should be a Python tool that is just a code paste (more like the R tool) and allows selection/packaging of venvs, similar to an IDE, or we should be able to package scripts with workflows/macros.

A python tool that is easily integrated into macros for powerful and quick custom tools while avoiding Jupyter's failures would be incredibly beneficial. This would highlight how Python and Alteryx can work together, and don't need to be all or nothing competitors in the ETL space.

Jupyter is not a tool that should be used for production-level processes - it is for teaching. Nobody has Airflow or Luigi spinning up Jupyter and executing code in their ETL pipeline, so our workflows shouldn't either. Yes, yes, I have used the SDK as a workaround and I have also run scripts from the cmd tool, but the first solution is time consuming and imposes a high skill wall, and the latter has a lot of moving, non-packaged parts.

You guys have the API to do this and venv management from the SDK already so I don't think it would be expensive to implement.

In order to fully take advantage of Alteryx spatial features it would be great to have the ability of using Openstreetmap extract files natively.

While there are some sources available in SHP format they tend to be heavily cut down in detail, while the native OSM and PBF have the full feature set.

As it's an open format licensing shouldn't be an issue and it may pave the way to new features.

What do you think?

We've been researching Snowflake and are eager to try this new cloud database tool, but we are holding off until Alteryx supports in-database tools for that environment. I know it's a fairly new service and there probably aren't tons of users, but it seems like a perfect fit since it's fully SQL compliant and is a truly native cloud SaaS tool. It's built from scratch for AWS, and claims to be faster and cheaper.

Snowflake for data storage, Alteryx for loading and processing, Tableau for visualization - the perfect trio, no?

Has anyone had experience/feedback with snowflake? I know it supports ODBC so we could do basic connections with Alteryx, but the real key would obviously be enabling in-database functionality so we could take advantage of the computation power of the snowflake.

Anyway, I just wanted to mention the topic and find out if it's in the plans or not.

Thanks,

Daniel

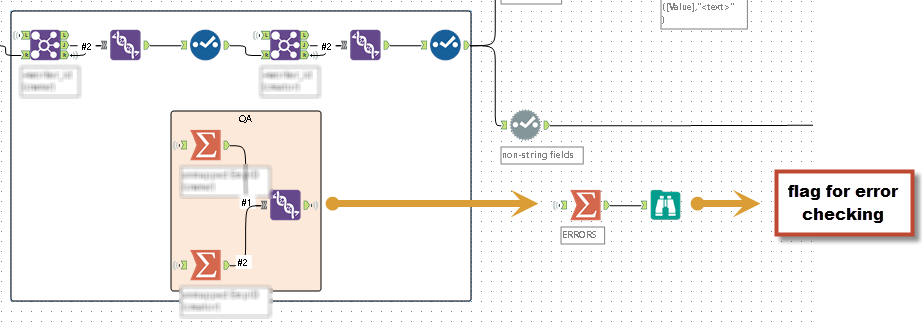

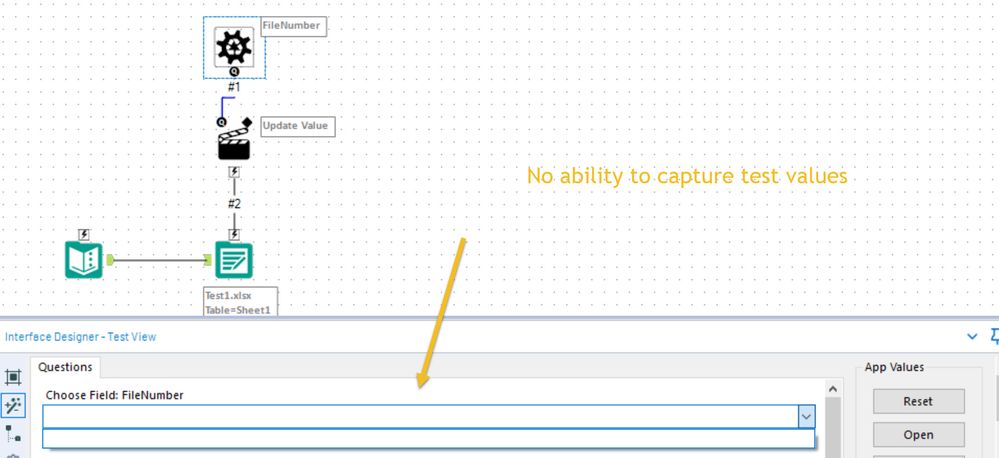

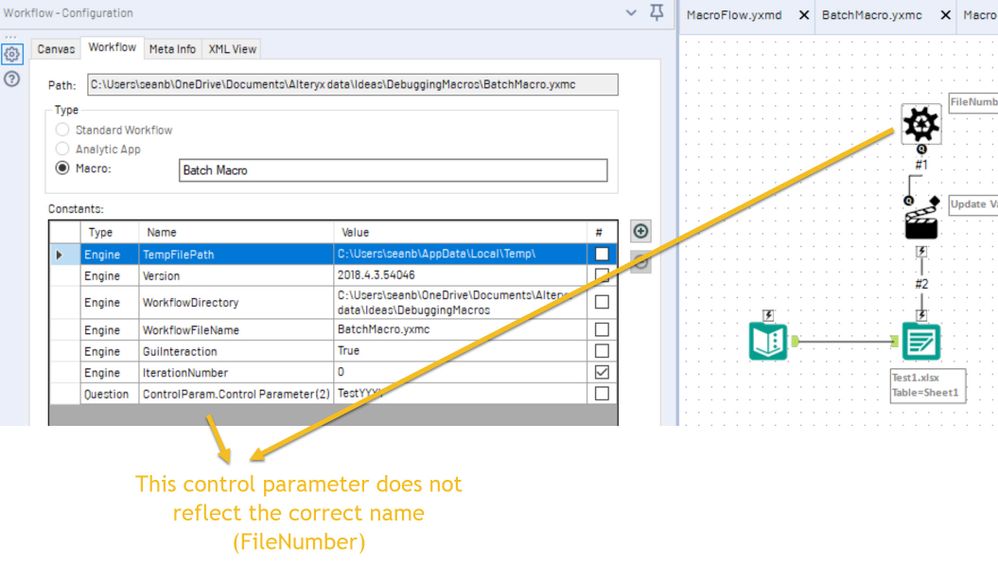

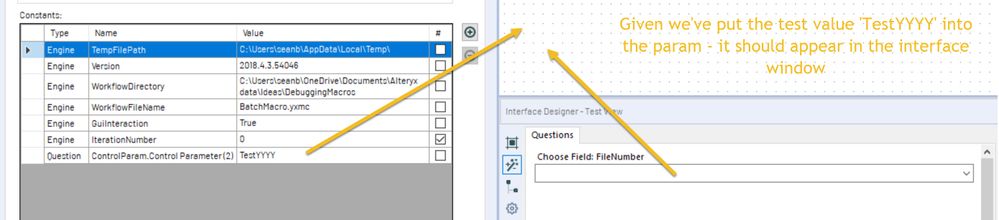

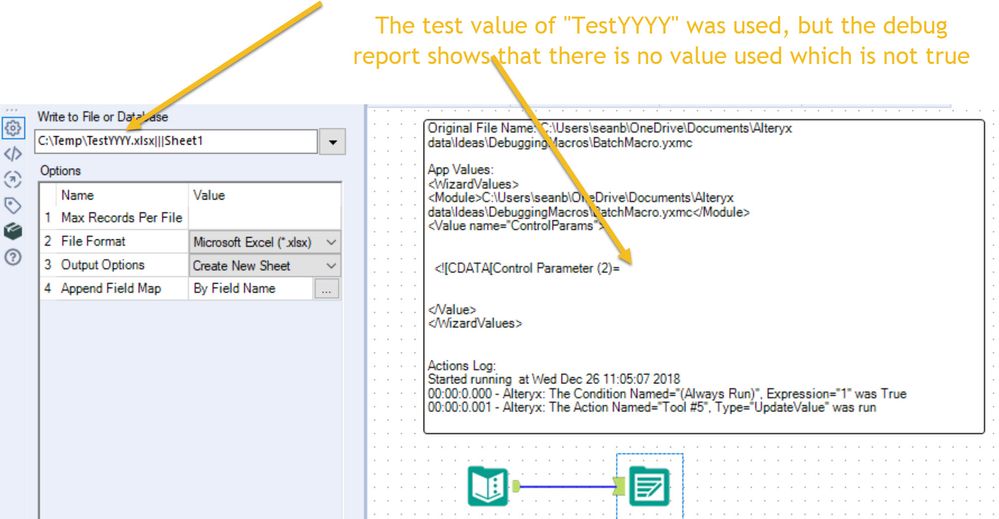

When building and debugging batch macros - it is important to be able to add test values and use these for debugging. However, the input values in the interface tools section do not allow input, and the ability to save or load test values also does not work.

While there is a workaround - setting the values in the workflow variables - this does not work fully (it doesn't reflect in the interface view; and is incorrect in the debug report) and is inconsistent with all other macro types.

Please could you make this consistent with other ways of testing & debugging macros?

All screenshots and examples attached

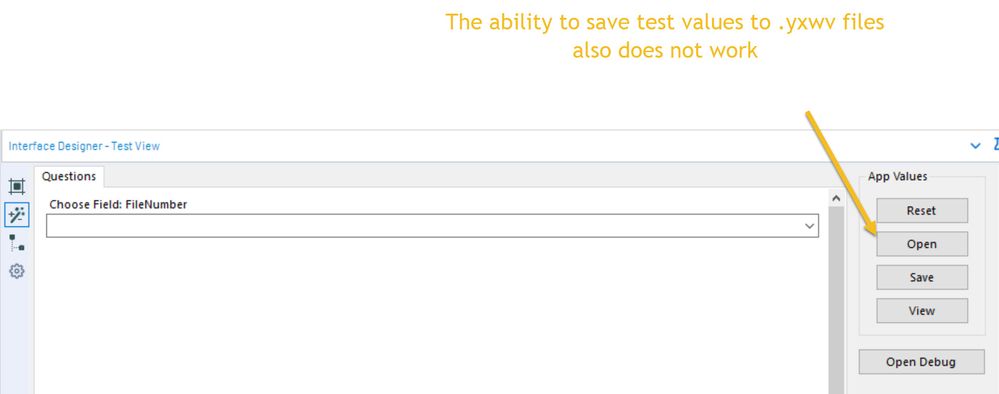

Screenshot 1: not possible to capture test values

Screenshot 2: saving and loading test values does not work

Screenshot 3: Workaround by using workflow variables

Screenshot 4: Values entered do not reflect properly

Screenshot 5: Debug works partially

We are starting to use Alteryx as a full ETL DW build tool (and blogging about it too..)

Compared to other tools on the market, there do not seem to be the usual SCD (slowly changing dimension) and other "standard" tools or templates to start building with.

It would be great to have a template/Macros/guide to starting to build a DW solution. It is rather daunting starting with a blank page!
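As an illustration of the kind of logic an SCD template would encapsulate, here is a minimal sketch of a Type 2 slowly changing dimension update in plain Python. Everything in it is an assumption made for the example: the `apply_scd2` function, the `valid_from`/`valid_to` column names, and the sample customer data are hypothetical and not part of any Alteryx tool.

```python
from datetime import date
from typing import Dict, List

Row = Dict[str, object]


def apply_scd2(dimension: List[Row], incoming: List[Row], key: str,
               tracked: List[str], today: date) -> List[Row]:
    """Return a new dimension table with Type 2 history applied."""
    result = [dict(row) for row in dimension]  # leave the input untouched
    # Index the currently active version of each business key.
    current = {row[key]: row for row in result if row["valid_to"] is None}

    for new in incoming:
        old = current.get(new[key])
        if old is not None and all(old[c] == new[c] for c in tracked):
            continue  # nothing changed, keep the active version
        if old is not None:
            old["valid_to"] = today  # close the superseded version
        version = {key: new[key], **{c: new[c] for c in tracked},
                   "valid_from": today, "valid_to": None}
        result.append(version)
        current[new[key]] = version
    return result


dim = [{"customer_id": 1, "city": "Paris",
        "valid_from": date(2020, 1, 1), "valid_to": None}]
updates = [{"customer_id": 1, "city": "Lyon"},
           {"customer_id": 2, "city": "Nice"}]

new_dim = apply_scd2(dim, updates, "customer_id", ["city"], date(2024, 6, 1))
for row in new_dim:
    print(row)
```

Here the Paris row is closed on the load date, a new active Lyon row is added for customer 1, and customer 2 is inserted as a brand-new active row. A workflow or macro template would express the same three branches (unchanged, changed, new) with join and formula steps.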

Hope this is fairly self-explanatory.

I'd like to be able to create presets for Summarize tool. Instead of having Group By, Sum, Count, Count Non Null, etc on top of the libraries of functions, put them into their own category. Users could then create a Favorites and the functions that they use the most would be stored in that section (editable by user).

A seemingly minor task that has popped up several times on my team is the ability to select a subset of columns using an input list. Different people have achieved this in different ways (transpose/join/cross-tab, dynamic rename/select), but it seems like a common enough task to warrant a single-tool solution.

R has a simple way to do this:

    # create a vector of the columns we would like to select
    columns <- c("column_b", "column_d")

    # subset based on the column names we defined in our "columns" vector
    df_subset <- df[columns]

We have built a macro to achieve this (attached), but I would love if there was a second input anchor on the Dynamic Select tool, with list-based select mode as an option in the dropdown.

The macro currently has a minor annoyance, where the user gets a "RecordInfo::CreateRecord" error presented on their palette when they choose the "Keep Columns in List" entry. This error goes away with a run or an F5 refresh, but if anyone has a suggestion for getting rid of this, it would also be appreciated.
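For readers who do not use R, the list-based selection requested in this idea can also be sketched in plain Python. This is only an illustration of the desired behaviour, not the attached macro's logic; `select_columns` and the sample records are hypothetical, and the `columns` list stands in for what would arrive on the proposed second input anchor.

```python
from typing import Dict, List

Record = Dict[str, object]


def select_columns(records: List[Record], columns: List[str]) -> List[Record]:
    """Keep only the listed columns, in the order the list gives them."""
    return [{name: record[name] for name in columns} for record in records]


data = [
    {"column_a": 1, "column_b": "x", "column_c": 2.5, "column_d": True},
    {"column_a": 2, "column_b": "y", "column_c": 3.5, "column_d": False},
]
columns = ["column_b", "column_d"]  # would come from the second input

print(select_columns(data, columns))
# [{'column_b': 'x', 'column_d': True}, {'column_b': 'y', 'column_d': False}]
```

A name in the list that is missing from the data raises a `KeyError` here; a tool version would need to decide whether to error, warn, or silently skip in that case.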

Some say to mato and some say to_mato, but how about: to/mato?

While working with my new friend, @Cedric, we ran across a field in his data that contained a '/' character. We were building a macro where we updated the value of the field [AB/CD] with another field selected from the incoming data. Our error message was something akin to: Field AB was not found.

We worked around the issue, but what remained was the fact that certain characters are permitted in field names within some aspects of Alteryx and not in others. I don't know if you're aware of this limitation.

Cheers,

Mark

I'm loving the ability to read from a zip file! However, I would love the ability to read all file types. For me, I don't see .accdb or .flat, and I assume other folks might be missing other file formats that they use. I find it confusing that the input tool accepts a lot of file types, but selecting the zip format then limits my choices. I believe @Aguisande mentioned this issue in the 10.5 beta.

Thanks!
