Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels),

> by at least allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: will you allow the user to choose between CPU and GPU usage in the future?
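To make the second and third suggestions concrete, here is a minimal Python sketch of what such preprocessing could look like outside the tool. The function names `balanced_split` and `gray_to_rgb` are hypothetical, and nothing here reflects how the Image Recognition tool works internally. It assumes the training data is a list of `(path, label)` pairs and that a grayscale image is a 2D grid of pixel values.

```python
import random
from collections import defaultdict


def balanced_split(samples, train_fraction=0.8, seed=0):
    """Split (path, label) pairs so every label contributes the same number of items.

    Hypothetical helper for illustration; not part of the Image Recognition tool.
    """
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)

    # Cap every label at the size of the rarest one so classes stay balanced.
    per_label = min(len(paths) for paths in by_label.values())
    n_train = int(per_label * train_fraction)

    rng = random.Random(seed)
    train, valid = [], []
    for label, paths in sorted(by_label.items()):
        chosen = rng.sample(paths, per_label)
        train += [(p, label) for p in chosen[:n_train]]
        valid += [(p, label) for p in chosen[n_train:]]
    return train, valid


def gray_to_rgb(pixels):
    """Turn a 2D grid of grayscale values into RGB by repeating each value three times."""
    return [[(v, v, v) for v in row] for row in pixels]
```

Downsampling to the rarest label is only one way to balance classes; it throws data away, so oversampling could be preferable when a label has very few images.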

In any case, thank you again for this new tool, it is certainly perfectible, but very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible thanks to image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


This idea is to fix one of the Power BI Output tool options for existing datasets.

Currently, if the 'Replace existing dataset' option is selected, the dataset is dropped and replaced with one having the same name. The problem is that any reports or dashboards using that dataset become invalid (likely due to a changed internal identifier).

The idea is to change the 'Replace existing dataset' functionality to delete & replace the data within a dataset rather than deleting & replacing the dataset itself.

This behavior is described in the following thread & flagged as 'solved' although the workaround isn't practical as a true solution to the issue. We'd like to see this supported more seamlessly via Alteryx.

https://community.alteryx.com/t5/Alteryx-Designer-Discussions/Publish-to-Power-BI-breaks-linked-Powe...

Containers are a great feature. They allow us to create larger workflows in smaller canvases, and manage the flow and appearance of our work. However, the design, whether intentional or flawed, that allows the container window to interact with the layers behind it is annoying. Connection wires should not be rerouted within a container because of things on the canvas behind it. Likewise, if I have a container open, I should not be able to grab a tool or container behind the open container through the container canvas. Please fix this flaw.

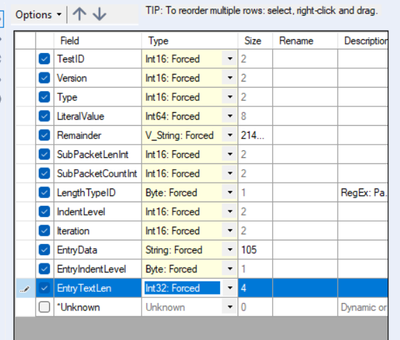

One of the common issues when you build macros is the error "the schema of macro output XXX has changed between iterations"

So the next step that we commonly follow is to put a select tool into the flow just before the macro output - and convert all the fields to a specific type; untick the "unknown" field; and then sometimes have to go into the XML to add the "Forced = true" flag into the XML so that it doesn't change over time:

Please could you add an option under the "Options" tab to force / lock down the type of every field with one click? That would eliminate dozens of clicks on every creation of a macro.

Thank you

Sean

Hi there,

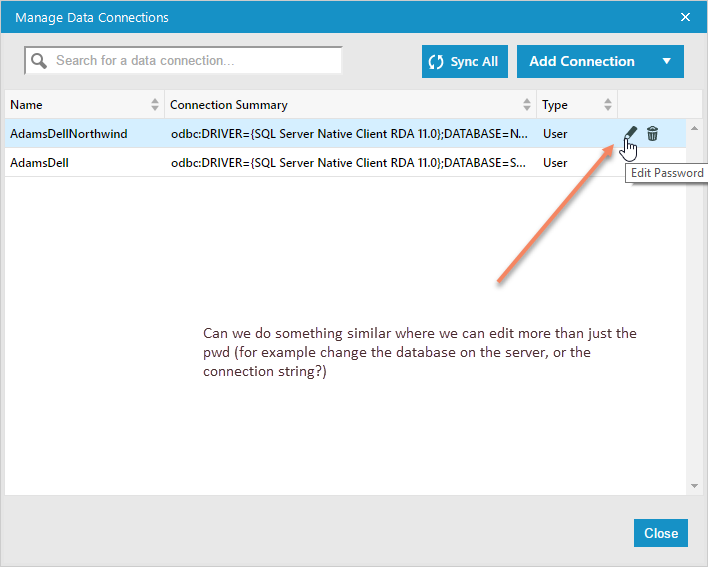

When you use DB connection aliases that are saved in Alteryx, it's currently not easy to edit them when you move a database to a different location.

Can we do something similar to the "Edit Password" function, but which also allows the user to edit the database or server, so that the change applies to all workflows using this alias?

Please add support for windows authentication to the download tool. I know there's a workaround but that involves using curl and the run command tool. The run command tool is awful and should be avoided at all costs, so please improve the download tool so I can use internal APIs.

I think it would be great to have a tool that allows you to update a dataset with another dataset. For example, this could be used in updating an archive table on a daily basis as data changes. Having a tool available that streamlines this data operation would be helpful to simplify workflows.

In the tool, you would be given the option to select your primary key fields, which are the fields used to identify records. Additionally, you have the option to perform an insert, modify, or delete operation, according to the primary key fields that you choose in the configuration.
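As a rough illustration of the logic such a tool would encapsulate, here is a small Python sketch that compares an existing dataset with an incoming one by primary key and works out which rows to insert, modify, or delete. The function name `plan_changes` and the record layout (lists of dicts) are assumptions made for this example, not anything Alteryx provides.

```python
def plan_changes(current, incoming, key_fields):
    """Compare two lists of dict records and classify rows by primary key.

    Returns (inserts, updates, deletes). Hypothetical helper, not an Alteryx API.
    """
    def key_of(row):
        return tuple(row[f] for f in key_fields)

    current_by_key = {key_of(r): r for r in current}
    incoming_by_key = {key_of(r): r for r in incoming}

    # New keys are inserts, shared keys with different values are updates,
    # and keys that disappeared from the incoming data are deletes.
    inserts = [r for k, r in incoming_by_key.items() if k not in current_by_key]
    updates = [r for k, r in incoming_by_key.items()
               if k in current_by_key and current_by_key[k] != r]
    deletes = [r for k, r in current_by_key.items() if k not in incoming_by_key]
    return inserts, updates, deletes


archive = [{"id": 1, "qty": 5}, {"id": 2, "qty": 7}, {"id": 3, "qty": 1}]
today = [{"id": 1, "qty": 5}, {"id": 2, "qty": 9}, {"id": 4, "qty": 2}]
inserts, updates, deletes = plan_changes(archive, today, ["id"])
```

In this sample, `id` 4 is an insert, `id` 2 is an update, and `id` 3 is a delete; a real tool would let you switch each of the three operations on or off.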

Obviously this is something that anybody could create a macro for if they wanted to. But it would be nice to have a tool in place so that we don't have to worry about it. I think this would be a nice use case to bolster Alteryx usage as a data engineering tool for relational database management in particular.

Would be extremely useful if the Summarize Tool had an option in the numeric menu to Standardize the data. More often than not, data sets will not have the same count of variables which makes the comparison analysis meaningless. Currently, there is no easy way to Standardize the data without using the K-Centroids Cluster Analysis tool or standardize_unit interval supporting macro.
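For reference, the two usual flavours of standardization look roughly like this in plain Python. This is only a sketch of the math: the function names are made up for illustration, and using the population standard deviation for the z-score is an assumption (a sample standard deviation would be just as defensible).

```python
from statistics import mean, pstdev


def z_scores(values):
    """Center on the mean and scale by the population standard deviation."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        # All values identical: nothing to scale.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


def unit_interval(values):
    """Rescale values linearly so the minimum maps to 0 and the maximum to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]
```

Either transform puts fields with very different scales or counts on a comparable footing before they are summarized.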

Currently, the Open Recent (from the File toolbar) generates a list of the 10 most recent workflows opened in Alteryx Designer. It would be useful to show even more (20-25?) workflows through this method as there is enough open space on the screen to do so.

The original engine supports extending the Formula tool with custom functions written in either XML or C++. The new AMP engine doesn't support these yet.

A fair number of users rely on these in E1, and it would be good to have them available in AMP.

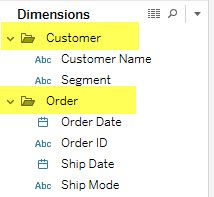

Tableau allows users to do three very useful things to make data more usable for end users, but this functionality is not available with the Publish To Tableau Server tool.

- Foldering of dimensions/measures

- Creating hierarchies out of dimensions

- Adding custom comments to fields that are visible to users when they hover

This functionality allows subject matter experts to create data sources that can be easily understood by everyone within their organizations.

Please "star" this idea if you would like to see functionality in Alteryx that would enable you to create a metadata layer in the "Publish to Tableau Server" tool or in an accompanying tool.

Dear Users, Fans, Compatriots, and Fellow Alteryx Nerds:

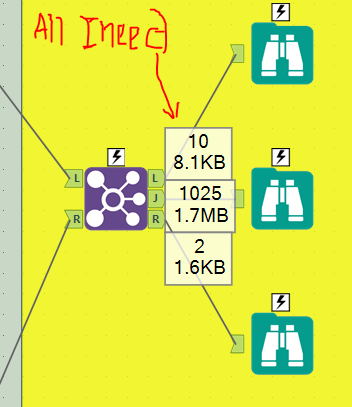

One of my favourite parts of using Alteryx is that in all the in-memory tools, there is a quick-and-dirty count in each of your tools' output nodes. You know, you use these all the time and when you switch back into SQL, you get frustrated with having to run the query two or three times just to see the count in each of your join outputs.

One thing I'm missing as an INDB user is that I have to employ a manual workaround to see what is happening. INDB tools are a bit black-box in that we don't see the counts.

I've been using this workaround for a little over a year now and I haven't found it to be incredibly taxing on my resources, so I'm wondering if Alteryx may be able to look into doing this on the back end to make the INDB experience that much closer to the in-memory experience. I just want those numbers above; I don't need to know the byte count, just the record count.
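The workaround itself is not reproduced in the text, so the following is not it. It is just a small, self-contained Python sketch (using an in-memory SQLite database with made-up tables `left_t` and `right_t`) of how the three join-output record counts could be fetched in a single query instead of running the join two or three times.

```python
import sqlite3

# Illustrative tables only; names and data are assumptions for this example.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE left_t (id INTEGER);
    CREATE TABLE right_t (id INTEGER);
    INSERT INTO left_t VALUES (1), (2), (3);
    INSERT INTO right_t VALUES (2), (3), (4), (5);
""")


def join_output_counts(conn):
    """Return record counts for the left-only, joined, and right-only outputs."""
    row = conn.execute("""
        SELECT
            (SELECT COUNT(*) FROM left_t l
              WHERE NOT EXISTS (SELECT 1 FROM right_t r WHERE r.id = l.id)),
            (SELECT COUNT(*) FROM left_t l JOIN right_t r ON r.id = l.id),
            (SELECT COUNT(*) FROM right_t r
              WHERE NOT EXISTS (SELECT 1 FROM left_t l WHERE l.id = r.id))
    """).fetchone()
    return {"L": row[0], "J": row[1], "R": row[2]}


counts = join_output_counts(conn)
```

On a real database each count is still an extra scan, so whether this is cheap enough to run behind every In-DB anchor would depend on the platform and data volume.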

Now, I imagine this is not implemented already for a Very Good Reason. But, enough is enough! Let's shoot for the moon and make this tool all that much better!! Anyone with me?

-Cedric Justice

Cambia Healthcare

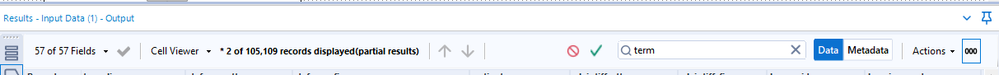

When you enter a search term in the results window, it would be great if it highlighted that term in the data results window. Otherwise, it still takes work to find where your search term is located in each row.

Hi Alteryx 🙂

When you set maximum records per file, the filename gets _# appended. Great! But in reality you get:

Filename.csv

Filename_1.csv

Filename_2.csv

The first filename doesn't get a number. I think that it should.
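A quick Python sketch of the naming I would expect, where every file in the split gets a suffix. The helper `chunk_filenames` is hypothetical, and starting the numbering at 0 (so the existing `_1`, `_2` names stay the same) is my assumption; the `start` parameter lets you begin at 1 instead.

```python
from pathlib import Path


def chunk_filenames(base_name, total_records, max_records_per_file, start=0):
    """List output names where every file, including the first, gets a numeric suffix."""
    path = Path(base_name)
    # Ceiling division; always produce at least one file.
    n_files = max(1, -(-total_records // max_records_per_file))
    return [f"{path.stem}_{i}{path.suffix}" for i in range(start, start + n_files)]
```

For example, `chunk_filenames("Filename.csv", 250, 100)` yields `Filename_0.csv`, `Filename_1.csv` and `Filename_2.csv`, which sort together and are easy to pick up with a single wildcard.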

Cheers,

Mark

There are circumstances where the python install section of Alteryx fails - however it does not report failure - in fact it reports success, and the only way to check if the Jupyter install fails is to check the Jupyter.log to look for error messages.

Can we add two features to Alteryx install / platform to manage this:

- If the installer for Python fails - please can you make this explicit in feedback to the user

- Can we also add a way for the user to repair the Python install - either using standard MSI Repair functionality, or a utility or a menu system in Designer.

Thank you

Sean

Hello!

I recently built a couple of workflows where I needed to union many parts of my data together.

Take for instance, the following:

I appreciate this is an unrealistic workflow - but if I am splitting data at any point and running different processes, I am going to need to union that data back together.

Now without my fix - the solution is to put a union tool onto the canvas, and drag each connection to the union tool. This is fine on a small scale, but when it's 5+ connections this can become tedious.

My proposed solution, is similar to the 'cache and run' functionality, in that you can select many tools with Ctrl + Click, and at the bottom you have the option for 'Union Outputs':

And when clicked, a Union tool is added to the canvas to the right of the rightmost selected tool (or the last one clicked), already set up with all connections made:

Hope this makes sense!

TheOC

As pointed out by @Joshman108 in this post, you can lose some/all of your work in the table tool if the metadata is ever not flowing correctly. Losing your metadata can happen for a number of legitimate reasons (copying/pasting, crosstab tool upstream, python tool upstream etc.). There are a number of tools (including the table tool) where losing the metadata can prove catastrophic.

Consider these 2 simple examples:

1) We have the dynamic box checked and apply a rule to field 1:

If our table tool loses its metadata, our row rule is completely erased! I would expect the tool to remember our row rule once metadata is reestablished.

2) We have the dynamic box unchecked, as well as Field4 unchecked. We setup the same rule as before that references field4.

Now when the metadata is lost and reestablished, the table tool does a good job of remembering that Field4 is supposed to be unselected, and that I had a rule for Field1; however, the rule has now been changed! I would expect the rule to also remember that I was referencing Field4. Note that if my rule had referenced a field that was included in the table, it would have remembered the rule. It's only because my rule referenced Field4, which was not included in the table, that my rule got messed up. In my rule, it now references Row#, which is completely wrong:

Currently when creating a table in Oracle in Alteryx there is a lot of "magic" that happens under the hood in converting Alteryx data types to Oracle data types.

For example, FixedDecimal creates NUMBER, String creates CHAR, and V_String creates VARCHAR.

It would be great to have an option to review the Oracle data types in the Output Data Tool when writing to Oracle. This would improve efficiency when using Alteryx to create Oracle tables.

See picture for example of what would display in output configuration.
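To illustrate the kind of review step being asked for, here is a tiny Python sketch that turns a field list into a `CREATE TABLE` preview using only the three mappings mentioned above. The function name, the `(name, type, size)` tuple layout, and the sizes in the example are assumptions for illustration; this is not how the Output Data tool builds its statements.

```python
# Mapping taken from the post; anything beyond these three types is not covered.
ALTERYX_TO_ORACLE = {
    "FixedDecimal": "NUMBER",
    "String": "CHAR",
    "V_String": "VARCHAR",
}


def preview_oracle_ddl(table, fields):
    """Build a CREATE TABLE preview from (name, alteryx_type, size) tuples."""
    columns = []
    for name, alteryx_type, size in fields:
        oracle_type = ALTERYX_TO_ORACLE[alteryx_type]
        columns.append(f"  {name} {oracle_type}({size})")
    return f"CREATE TABLE {table} (\n" + ",\n".join(columns) + "\n)"


# Sizes here are made up purely for the example.
print(preview_oracle_ddl("SALES", [("AMOUNT", "FixedDecimal", "19,6"),
                                   ("REGION", "V_String", 50)]))
```

Showing something like this before the write happens would let the user catch an unwanted CHAR or an oversized column without having to inspect the table in Oracle afterwards.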


CI / CD is critical to any production-level process, especially when multiple authors are contributing new features to the same workflow. Currently, multi-author editing of workflows is extremely difficult, and something that would be aided greatly by using git to control different branches of ongoing work. Luckily, that's something we can already do today! However, the ability to test before merging a pull request is critical to modern CI / CD pipelines. For this, we need to be able to run a headless workflow from a CI / CD environment. Also, having the ability to pass in parameters to the workflow would allow for robust integration testing - something that isn't straightforward today without running on production environments.

I would like to request that the Python tool metadata either be automatically populated after the code has run once, or a simple line of code added in the tool to output the metadata. Also, the metadata needs to be cached just like all of the other tools.

As it sits now, the Python tool is nearly unusable in a larger workflow. This is because it does not save or pass metadata in a workflow. Most other tools cache temporary metadata and pass it on to the next tool in line. This allows for things like selecting columns and seeing previews before the workflow is run.

Each time an edit is made to the workflow, the workflow must be re-run to update everything downstream of the Python tool. As you can imagine, this can get tedious (unusable) in larger workflows.

Alteryx support has replied with "this is expected behavior" and "It is giving that error because Alteryx is doing a soft push for the metadata but unfortunately it is as designed."
