Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> by allowing, at the very least, the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
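The balanced split asked for in the second point could look roughly like the sketch below. It is not how the Image Recognition tool works internally; the `(path, label)` layout, the function name `balanced_sample`, and the file names are assumptions made purely for illustration.

```python
import random
from collections import defaultdict


def balanced_sample(items, per_label=None, seed=0):
    """Return a subset with the same number of images for every label.

    items: list of (path, label) pairs (hypothetical layout).
    per_label: images to keep per label; defaults to the smallest class size.
    """
    by_label = defaultdict(list)
    for path, label in items:
        by_label[label].append(path)

    # Never ask for more images than the rarest label can provide.
    smallest = min(len(paths) for paths in by_label.values())
    n = smallest if per_label is None else min(per_label, smallest)

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    sample = []
    for label in sorted(by_label):
        for path in rng.sample(by_label[label], n):
            sample.append((path, label))
    return sample


# Hypothetical file names: three "cat" images and two "dog" images.
images = [
    ("cat_1.png", "cat"), ("cat_2.png", "cat"), ("cat_3.png", "cat"),
    ("dog_1.png", "dog"), ("dog_2.png", "dog"),
]
subset = balanced_sample(images)
```

Downsampling to the rarest label is only one possible policy; oversampling the smaller classes would be another.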

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool, it is certainly perfectible, but very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible thanks to image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


I often find myself needing to create unique IDs for a given category. Currently I end up using the Multi-Row tool and leveraging the "group by" option. Enabling the Record ID tool to create a unique count by grouping on distinct categories in an underlying data set would unlock a new level of grouping that would consolidate record-keeping functionality in a single tool.
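To make the requested behavior concrete, here is a minimal sketch of a per-group record ID: the counter restarts for each distinct category. The function name `add_group_record_id` and the field names are hypothetical and not part of any Alteryx API.

```python
from collections import defaultdict


def add_group_record_id(rows, group_field, id_field="RecordID", start=1):
    """Number rows within each group, in the order they arrive."""
    counters = defaultdict(lambda: start)  # next ID to hand out per group
    numbered = []
    for row in rows:
        key = row[group_field]
        new_row = dict(row)  # copy so the input rows stay untouched
        new_row[id_field] = counters[key]
        counters[key] += 1
        numbered.append(new_row)
    return numbered


rows = [{"Category": "A"}, {"Category": "B"}, {"Category": "A"}]
numbered = add_group_record_id(rows, "Category")
```

Here the two "A" rows receive IDs 1 and 2, while the "B" row starts again at 1.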

Scenario:

Upstream tools end in a Summarize Tool that has a set of records with the following fields: EmailAddress, AttachmentUNCPath. So you get a bunch of recipients with various attachments. Each recipient can have different attachments, and this will change each time it's run. In other words, it's fully dynamic.

If the same recipient has multiple attachments, then it would be nice to group the recipient and just separate the attachments with a semi-colon (or whatever) in the same field. Essentially creating one record per recipient, and therefore one email per recipient, and having the Email Tool attach each file. In other words, mbarone@paychex.com gets one email with 5 attachments. And next week maybe only 3 attachments, and so on.

Currently the only way I see to accomplish this is with a batch macro.

Would be infinitely more convenient to just have the Email Tool by default accept multiple attachments in a field as long as they are separated by a semi-colon, much like occurs in the "to" field.
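The grouping step described in the scenario can be sketched as follows: one record per recipient, with attachment paths joined by a semicolon. The field names come from the scenario; the function name, the example address, and the UNC paths are made up for illustration.

```python
def attachments_per_recipient(records, sep=";"):
    """Collapse (EmailAddress, AttachmentUNCPath) rows to one row per recipient."""
    grouped = {}
    for rec in records:
        grouped.setdefault(rec["EmailAddress"], []).append(rec["AttachmentUNCPath"])
    return [
        {"EmailAddress": email, "Attachments": sep.join(paths)}
        for email, paths in grouped.items()
    ]


# Hypothetical input rows, as they might leave the Summarize Tool.
records = [
    {"EmailAddress": "a@example.com", "AttachmentUNCPath": r"\\server\share\one.pdf"},
    {"EmailAddress": "b@example.com", "AttachmentUNCPath": r"\\server\share\two.pdf"},
    {"EmailAddress": "a@example.com", "AttachmentUNCPath": r"\\server\share\three.pdf"},
]
emails = attachments_per_recipient(records)
```

The Email Tool would then only need to split the `Attachments` field on the separator, the same way the "to" field is already handled.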

It would be great if we could set the default size of the window presented to the user upon running an Analytic App. Better yet, the option to also have it be dynamically sized (auto-size to the number of input fields required).

For the Output tool, the Microsoft Excel (*.xlsx) file format - the non-Legacy one - doesn't have the "Delete Data & Append" option that the Legacy and 97-2003 Excel formats have.

Having Delete Data & Append for the most recent version of Excel would be very beneficial. Without it, there does not appear to be a way to update an existing Excel sheet using an Alteryx workflow while preserving the formatting within the Excel sheet. The Overwrite/Drop option removes all formatting.

I have this workflow refreshing an Excel sheet daily, and then am emailing it to a distribution at the end of the workflow. Unfortunately, right now I have to use the 97-2003 format to preserve the formatting of the Excel sheet when it is automatically refreshed and emailed each day.

Can you please assess adding this option? Thanks!

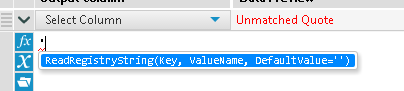

Ok Alteryx, we totally love your product. And I've got a super quick fix for you. Why on earth would you Autocomplete the ubiquitous tick mark as "ReadRegistryString(Key, ValueName, DefaultValue='')"?

I find myself in this situation constantly where, 'dummy' suddenly becomes 'dummyReadRegistryString('HKEY_LOCAL_MACHINE\SOFTWARE\SRC\Alteryx\4.1', 'InstallDir')' the moment I strike the enter key.

Pls help, I don't ask for much.

Whenever I output the Count using the Summarize Tool I am unable to tell it to sort the results by Count and am forced to grab a sort tool. It would be nice to offer a sort option from within the Summarize tool itself instead of requiring a subsequent sort tool or to use the Results window to manually sort it.
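As a rough picture of the requested output, the sketch below counts values and returns them already ordered by count. The function name `count_sorted` is hypothetical; it only illustrates the behavior, not the Summarize tool itself.

```python
from collections import Counter


def count_sorted(values, descending=True):
    """Count occurrences and return (value, count) pairs ordered by count."""
    counts = Counter(values)
    # sorted() is stable, so ties keep their first-seen order.
    return sorted(counts.items(), key=lambda kv: kv[1], reverse=descending)


result = count_sorted(["a", "b", "a", "c", "a", "b"])
```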

To help with adding tools quickly, it would be useful to have some form of quick keys, or maybe a combination (enhancement) of the search bar and right-click.

So here's the pic and a 1,000 words

And here's the blurb

The idea is that hitting a key whilst the mouse is over the canvas would display the search bar at the mouse cursor position. Once you've selected the tool you want, hitting return[+shift] adds it to the canvas, either:

- In a dragged (mouse down) state to help for final position and automated connections and then a final left-click to add to canvas or,

- Just add it at that position.

This would also speed up adding tools, as you could include things like 'Recent' or 'Favorites'. I grant you that you could just incorporate this into the search dialog, but this would save you a bit of eye movement. Don't get me wrong, the search bar is great, but it's all the drag-and-drop that can slow things down a bit.

I find the Run Command tool to be counter-intuitive: rather than supplying a required I/O parameter (in at least one of "Write Source" and/or "Read Results"), I would rather just use a "Block Until Done" approach to 1. write file, 2. issue custom system command, 3. read file. An even simpler example is the case where I don't need I/O to/from the system command... in that case, I just want to issue the command, nothing more. But the current tool will require me to specify a dummy file, which is counter-intuitive and also leaves that unnecessary file somewhere.

To fix this up without breaking existing user implementations, the "idea" is:

- Do not require either "Write Source" or "Read Result" ... allow both to be blank.

- Allow (but don't require) any of "Command," "Command Arguments," and "Working Directory" to be dynamically populated from fields in the data streamed into the tool.

So... any existing user implementation should be unaffected... but these changes would allow users to implement system commands in a more intuitive manner, and even allow for very dynamic system commands based on the workflow.
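The proposed behavior (optional write, blocking command, optional read) can be sketched in plain Python. This is only an illustration of the idea, not how the Run Command tool is implemented; `run_step` and its parameter names are hypothetical, and the Python interpreter stands in for the custom system command.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def run_step(command, args=(), working_dir=None, write_source=None, read_results=None):
    """1. optionally write a file, 2. run a command and wait, 3. optionally read a file.

    write_source: optional (path, text) pair; read_results: optional path.
    Both may be left as None when the command needs no file I/O.
    """
    if write_source is not None:
        path, text = write_source
        Path(path).write_text(text)
    # subprocess.run blocks until the command finishes; check=True raises on failure.
    subprocess.run([command, *args], cwd=working_dir, check=True)
    if read_results is not None:
        return Path(read_results).read_text()
    return None


with tempfile.TemporaryDirectory() as tmp:
    src = Path(tmp) / "in.txt"
    dst = Path(tmp) / "out.txt"
    # Stand-in command: upper-case in.txt into out.txt.
    script = "open('out.txt', 'w').write(open('in.txt').read().upper())"
    result = run_step(sys.executable, ["-c", script], working_dir=tmp,
                      write_source=(src, "hello"), read_results=dst)

# No dummy file needed when the command has no input or output.
no_io = run_step(sys.executable, ["-c", "pass"])
```

Calling `run_step` once per incoming row, with the command, arguments, and working directory taken from fields, would cover the dynamic case in the second bullet.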

Thanks!

I think the undo/redo capabilities in Alteryx could be greatly improved. Here is an idea that I think would be beneficial...

I'd like to see which exact tools are affected by my undo/redo actions. An idea was suggested a couple years ago to move your location on the canvas, but that was not added to the roadmap. Instead, is it possible to add the tool ID to the undo menu so that it is obvious which tool each line is detailing?

This is the current debug menu that shows your previous actions:

When a tool is created, the ID can be displayed in this menu, but this is not shown when a change is made to an existing tool. My suggestion is that the menu would say:

4. Change Sort (3) Properties

This same change should be made in the Edit dropdown menu.

Hey there,

The performance profiling option on the "runtime" tab is very helpful to identify bottlenecks on a long-running workflow. However this is missing (along with the entire "Runtime" tab) if I change this to a macro.

Given that the only way to build relatively complex dependent chain jobs is to wrap them in dummy batch macros (using a macro like a sub-procedure with flow-of-control on the master canvas), most of our work is done in macros, so it would be helpful to be able to performance profile them during testing.

I use a mouse which has a horizontal scroll wheel. This allows me to quickly traverse the columns of Excel documents, webpages, etc.

This interaction is not available in Alteryx Designer and when working with wide data previews it would improve my UX drastically.

When moving a tool container, all of the tools within it become mis-aligned with the canvas grid. Moving any single tool immediately re-aligns it to the grid, which puts it out of alignment with the rest of the tools in the container.

Example: Put 3 tools in a row in a tool container, all aligned horizontally. Next, move the container. Now, move the middle tool, then try to place it back in alignment with the other two. You won't be able to, because they are out of alignment with the canvas grid.
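One possible fix, sketched under assumptions: snap the container's movement offset to the grid, so every tool inside stays on a grid point after the move. The grid size of 6 and the function names are invented for the example; Designer's actual grid spacing and move logic are not known here.

```python
def snap(value, grid):
    """Round a value to the nearest multiple of the grid size."""
    return round(value / grid) * grid


def move_container(tool_positions, dx, dy, grid=6):
    """Shift all tools by a grid-aligned offset so they stay on the grid."""
    sdx, sdy = snap(dx, grid), snap(dy, grid)
    return [(x + sdx, y + sdy) for x, y in tool_positions]


# Three tools in a row, all on the (assumed) 6-pixel grid.
tools = [(60, 120), (120, 120), (180, 120)]
moved = move_container(tools, dx=17, dy=-8)
```

Because the offset itself is snapped, tools that were aligned with each other and with the grid before the move are still aligned afterwards.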

Please fix this.

It is just a bit of annoyance, really. I'd like to see the option of inputting a hexcode of color and/or a screen color picker in the color dialog. At the moment, you have to change R, G, B separately or play around with the cursor to find the right color.

The color dialog is relevant for the documentation purposes but also reporting tools and I'm sure it would make life easier to some people, especially when branding colours are important.
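For reference, the conversion a hex-code input box would need is small. The sketch below uses hypothetical helper names and accepts both `#RRGGBB` and the short `#RGB` form.

```python
def hex_to_rgb(code):
    """Convert '#RRGGBB' or '#RGB' to an (R, G, B) tuple of ints."""
    code = code.strip().lstrip("#")
    if len(code) == 3:
        # Short form: each digit is doubled, e.g. 'fa0' -> 'ffaa00'.
        code = "".join(ch * 2 for ch in code)
    if len(code) != 6:
        raise ValueError("expected 3 or 6 hex digits")
    return tuple(int(code[i:i + 2], 16) for i in (0, 2, 4))


def rgb_to_hex(r, g, b):
    """Convert R, G, B ints (0-255) back to '#RRGGBB'."""
    return "#{:02X}{:02X}{:02X}".format(r, g, b)


brand = hex_to_rgb("#0078C8")
```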

I set up my canvas how I want it, but I will sometimes undock or auto-hide the canvas windows (Results, Configuration, etc.). My suggestion is to add a Locked Dock as a selection that will allow for resizing, but not undocking.

DELETE FROM Source_Data WHERE ID IN

(SELECT ID FROM My_Temp_Table WHERE FLAG = 'Y')

....

Essentially, I want to update a DB table with either an update or the deletion of rows. I can't delete all of the data. My workaround will be to create/insert into a table the keys that I want to delete and try to use an Input/Output tool with SQL that performs the delete. Any other suggestions are welcome, but a tool is best.
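The workaround described above (stage the keys, then delete by subquery) can be tried out with an in-memory SQLite database. The table names come from the post; the `Name` column and the sample rows are invented, and a real target database may need different SQL syntax.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE Source_Data (ID INTEGER PRIMARY KEY, Name TEXT);
    CREATE TABLE My_Temp_Table (ID INTEGER, FLAG TEXT);
    INSERT INTO Source_Data VALUES (1, 'a'), (2, 'b'), (3, 'c');
    -- Staged keys: only rows flagged 'Y' should be removed.
    INSERT INTO My_Temp_Table VALUES (1, 'Y'), (2, 'N'), (3, 'Y');
""")

with conn:  # commits on success, rolls back if the delete fails
    deleted = conn.execute(
        "DELETE FROM Source_Data "
        "WHERE ID IN (SELECT ID FROM My_Temp_Table WHERE FLAG = 'Y')"
    ).rowcount

remaining = conn.execute("SELECT ID, Name FROM Source_Data ORDER BY ID").fetchall()
```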

Thanks,

Mark

Having the open/close (expand/collapse) button for the tool container in the top right corner means that every time a big container is expanded, the user has to move the pointer to the button's new position to close it, which sometimes means scrolling or zooming out and then zooming in to locate it.

I suggest locating that button in the top left corner, beside the enable/disable switch, or even adding a double-click mechanism for open/close, which would enable the user to open the container, see what is inside, and close it without moving the mouse to find the button's new location.

Apologies if this has been suggested already - did a search and didn't see anything similar.

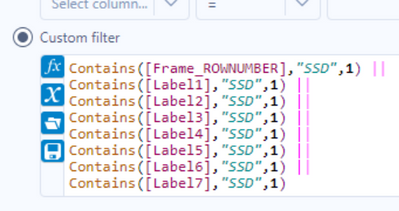

This is a quality of life/UX idea. The search functionality in the results pane essentially does a 'contains' search on all of the columns (see the screenshots below for the filter inserted by the 'apply data manipulations' button). As I build workflows and profile the data, it'd be helpful if I could click one or more columns and limit the search bar to just those fields.

Right now, depending on the dataset, I could get rows returned by the search because the search term appears in columns that aren't relevant. To work around this I could add Select tools to limit the columns or build more robust filters in a Filter tool, but having it built in would be very helpful.
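The difference between the current all-columns search and the requested column-limited search can be sketched like this. The function name `search_rows` is hypothetical, and the case-insensitive matching is an assumption rather than documented behavior of the results pane.

```python
def search_rows(rows, term, columns=None):
    """'Contains' search over all columns, or only the selected ones."""
    term = term.lower()
    matches = []
    for row in rows:
        fields = row.keys() if columns is None else columns
        if any(term in str(row.get(col, "")).lower() for col in fields):
            matches.append(row)
    return matches


rows = [
    {"Name": "Smith", "Notes": "called about invoice"},
    {"Name": "Jones", "Notes": "ask Smith for details"},
]
all_columns = search_rows(rows, "smith")                    # matches both rows
name_only = search_rows(rows, "smith", columns=["Name"])    # matches only the first
```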

I'd like to see an enhancement so that, at the install level (through an XML configuration file, for example), the From field in the Email reporting tool could be locked against end-user edits and would instead auto-populate with the current user's e-mail address. Currently users can populate the field with any address on their domain, which is useful, but also poses a risk in that messages can be made to appear to come from a party that is not aware of it. We'd like to be able to control that on install and "turn off" access to the From field.

Please add a configuration option to the Redshift bulk load to use EITHER access keys or an IAM EC2 role for access.

We should not have to specify access keys when we are in an IAM enabled environment.

Thanks

When you have a "reminder"/"notification", there needs to be an option to permanently ignore the update.

Some updates only let you ignore or snooze the reminder for as little as 7 days. There should absolutely be options for longer time frames, including a permanent "do not display/remind me of this update again" setting.

Fine for another reminder when there is another new update, but don't repeatedly place the notice of a reminder for the same system/version/data set etc etc etc update.

There are times companies don't provide updates for a year or more. You shouldn't have to keep dismissing update reminders/notices when you don't intend to update until maybe the next version or a year from now.

Remove the constant update notification.
