Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> at the very least, by allowing the tool to use black-and-white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool, it is certainly perfectible, but very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible thanks to image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


Even with browse everywhere I see plenty of workflows dotted with browses. Old habits die hard. Maybe a limit configuration for browses would be a good thing. If you've recently browsed a very large set of data you might agree.

Cheers,

Mark

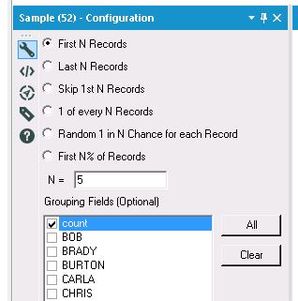

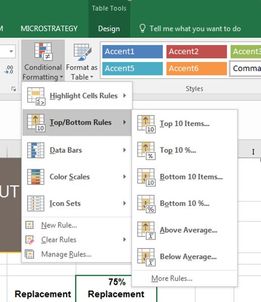

Would love to see a tool that allows you to find the Top N or Bottom N% etc. using a single tool, rather than the current common practices of using 2-3 tools to accomplish this simple task. It's possible some/all of this functionality could be added by simply expanding the current Sample tool to include more options, or at least mirroring the configuration of the Sample Tool in the creation of a new "Top/Bottom Tool."

For example, let's say I wanted to find the top 5 student grades, and then compare all scores to those top 5 grades. I would currently need to do something along the lines of Sort descending (and/or Summarize Tool, if grouping is needed) + Sample Tool (First N Records) + Join the results back to the data. That's anywhere from 3-4 tools to accomplish a simple task that could potentially be done with 1-2.

I'm envisioning this working somewhat like the Top/Bottom rules in Excel Conditional Formatting (see below), and similar to some of the existing options in the Sample Tool (also see below). For example, rather than only being able to select the First N Records in the Sample Tool, I could indicate that I want to select the Top N Records, or the Bottom N% Records. This would prevent the additional step of having to group/sort your data before using the Sample Tool, especially in cases where you're then having to put your records back into their original order rather than leaving them in their grouped/sorted state. You'd still want to have the option of choosing grouping fields if desired. You would also need to have a drop-down field to indicate which field to apply the "Top/Bottom rules" to.

A list of potential "Top/Bottom" options that I believe would be great additions include:

- Top N

- Bottom N

- Top N %

- Bottom N %

- Above Average

- Below Average

- Within a Percentile Range (i.e. "Between 20-30%")

- Skip Top N

- Skip Bottom N

The value added with just the options above would be huge in helping to streamline workflows and reduce unnecessary tools on the canvas.
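To make the requested options concrete, here is a minimal Python sketch of three of them (Top N, Bottom N %, Above Average). It is only an illustration of the behaviour described above, not how any Alteryx tool works; the function names and the sample `grades` data are hypothetical.

```python
from math import ceil

def top_n(records, key, n):
    """Return the n records with the largest values of `key`."""
    return sorted(records, key=lambda r: r[key], reverse=True)[:n]

def bottom_n_percent(records, key, percent):
    """Return the lowest `percent` of records by `key`, rounding the row count up."""
    if not records:
        return []
    count = max(1, ceil(len(records) * percent / 100))
    return sorted(records, key=lambda r: r[key])[:count]

def above_average(records, key):
    """Return the records whose `key` is strictly above the mean, in original order."""
    if not records:
        return []
    mean = sum(r[key] for r in records) / len(records)
    return [r for r in records if r[key] > mean]

# Hypothetical sample data for illustration only.
grades = [
    {"student": "A", "score": 91},
    {"student": "B", "score": 78},
    {"student": "C", "score": 85},
    {"student": "D", "score": 62},
    {"student": "E", "score": 99},
    {"student": "F", "score": 70},
]

print([r["student"] for r in top_n(grades, "score", 2)])
print([r["student"] for r in bottom_n_percent(grades, "score", 50)])
print([r["student"] for r in above_average(grades, "score")])
```

Note that `above_average` leaves the records in their original order, which is the "no need to re-sort afterwards" benefit the idea asks for.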

When building macros - we have the ability to put test data into the macro inputs, so that we can run them and know that the output is what we expected. This is very helpful (and it also sets the type on the inputs)

However, for batch macros, there seems to be no way to provide test inputs for the Control Parameter. So if I'm testing a batch macro that will take multiple dates as control params to run the process 3 times, then there's no way for me to test this during design / build without putting a test-macro around this (which then gets into the fact that I can't inspect what's going on without doing some funkiness)

Could we add the same capability to the Control Parameter as we have on the Macro Input to be able to specify sample input data?

Thank you to @JoeM for recent training on macros, and @NicoleJohnson for pointing out some of the challenges.

When writing an iterative macro, it is a little bit difficult to debug because when you run it in designer mode, it only does one iteration and stops.

Could we add the capability to the designer itself to be able to run the second and third iteration using the test data built into the macro input tool? Even something as simple as an option to run X iterations; or when it's run the first iteration allow me to look at what happened and trigger iteration 2 (or to trigger a run-through to completion) would be immensely helpful.

While you can do this with a test-flow wrapped around a macro, macro development is a bit of a black box because Alteryx doesn't natively have the ability to step into a macro during run-time and pause it to see what's happening on iteration 1 or 2 or n and why it's not terminating etc. So putting the ability to run in a debug mode would be HUGELY helpful.

Hi there,

Adam ( @AdamR_AYX ), Mark ( @MarqueeCrew) and many others have done a great job in putting together super helpful add-in macros in the CREW pack - and James ( @jdunkerley79 ) has really done an incredible job of filling in some gaps in a very useful way in the formula tools.

Would it be possible to include a subset of these in the core product as part of the next release?

I'm thinking of (but others will chime in here to vote for their favourite):

- Unique only tool (CReW)

- Field Sort (CReW)

- Wildcard XLSX input (CReW) - this would eliminate a whole category of user queries on the discussion boards

- Runner (CReW), although this may have issues with licensing since many people don't have command line permission. Alteryx really does need the ability to do chained dependency flows more smoothly.

- Date Utils (JDunkerley) - all of James's Date utils - again, these would immediately solve many of the support questions asked on the discussion forum

I think that these would really add richness & functionality to the core product, and at the same time get ahead of many of the more common queries raised by users. I guess the only question is whether the authors would have any objection?

Thank you

Sean

I recently loaded the new V11 and am getting used to it. One immediate gripe is that the new version of the Formula Tool no longer supports multiple-field actions. In the prior version I could change data types on many fields at once, and I could move multiple fields in a block at once. There were a few other things, but these are the ones I sorely missed on my first use of V11. I created about 20 fields in quick succession, just getting names down, and then went back and put in formulas that were variations on a theme. When done, I noticed the default data type was V_WString and I wanted integer. In the past that was no big deal because I could select a block of fields, or interspersed fields, and then right-click to change them all to the same data type. It was very handy and now appears to be gone. Please bring these things back.

I've seen the question in the community and have had the need to cascade fields in a record. On certain conditions, field 2 moves to field 1 and field 3 moves to field 2 etcetera. This can be a complicated process remembering if the moves were made and which field contents should be where.

One solution might be to define the cascade condition as an expression and then map the fields as FROM TO and allow for defaulting into a field (ie: Null()).
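As a rough illustration of the first solution, the sketch below treats "field is empty" as the cascade condition and shifts the remaining values left, defaulting the freed-up trailing fields. This is one possible reading of the idea, not an existing Alteryx feature; `cascade_left` and its parameters are hypothetical names.

```python
def cascade_left(values, is_empty=lambda v: v is None, default=None):
    """Shift non-empty values toward the first field and pad the tail with `default`.

    `is_empty` plays the role of the cascade condition; `default` is the value
    placed in fields that have been vacated (the Null() default in the idea).
    """
    kept = [v for v in values if not is_empty(v)]
    return kept + [default] * (len(values) - len(kept))

# Field 1 is empty, so field 2 moves to field 1 and field 3 moves to field 2.
print(cascade_left([None, "b", "c"]))
```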

Another solution might be to reference the input data directly. You could get field values from the input stream before enhancements to data were made.

At the end of a process, Delta Flags (fields changed during the formula) could be created if input/output of selected variables were changed.

A stream of thoughts....

Please enhance the input tool to have a feature you could select to test if the file is there and another to allow the workflow to pause for a definable period if the input file is locked by another user, then retry opening. The pause time-frame would be definable for N seconds and the number of iterations it would cycle through should be definable so you can limit how many attempts to open a file it would try.

File presence should be something we could use to control workflow processing.

A use case would be a process that runs periodically and looks to see if a file is there and if so opens and processes it. But if the file is not there then goes to sleep for a definable period before trying again or simply ends processing of the workflow without attempting to work any downstream tools that might otherwise result in "errors" trying to process a null stream.

An extension of this idea and the use case would be to have a separate tool that could evaluate a condition like a null stream or field content or file not found condition and terminate the process without causing an error indicator, or perhaps be configurable so you could cause an error to occur or choose not to cause an error to occur.

With this latter idea, the enhanced input tool either passes a value downstream or generates a null data stream. The next tool, like a Filter tool, then evaluates a condition (a null stream, a file-not-found indicator, or some other condition) and terminates processing per its configuration, with or without a failure indicated, according to the wishes of the user. There are times when a file is not there and I just want the workflow to stop without throwing errors; other times I want it to error out so that I investigate. In other scenarios my data goes through a filter or two and nothing passes the last filter, yet the downstream tools still run and generally fail because they have no data to act on. I don't want that: it may be perfectly valid that no data passes the filters on a Sunday or holiday.

Having meandered through this, I sum up: the ideal is to enhance the input tool so it can test file presence and pass that information on to another tool that evaluates it and controls the workflow run accordingly. As a separate tool, that second piece could be applied to a wider variety of scenarios and test a broader scope of conditions to decide whether to proceed or terminate the workflow.
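The retry behaviour requested above (a definable pause and a definable number of attempts) could look roughly like this Python sketch. It is an assumption-laden illustration, not Alteryx functionality: `wait_for_file` and its parameters are hypothetical, and whether a file locked by another user actually fails to open depends on the operating system (it typically raises `PermissionError` on Windows).

```python
import time
from pathlib import Path

def wait_for_file(path, retries=3, pause_seconds=5.0, sleep=time.sleep):
    """Return True once `path` can be opened for reading, False if every attempt fails.

    A missing file (FileNotFoundError) and a locked file (PermissionError) are
    both subclasses of OSError, so both trigger a pause and a retry.
    """
    target = Path(path)
    for attempt in range(1, retries + 1):
        try:
            with target.open("rb"):
                return True
        except OSError:
            if attempt < retries:
                sleep(pause_seconds)  # pause before the next attempt
    return False

# The caller decides whether "no file" is an error or a quiet stop.
if not wait_for_file("daily_extract.csv", retries=2, pause_seconds=0):
    print("No input file found; ending without error.")
```

Returning a boolean rather than raising keeps the "stop quietly" versus "fail loudly" decision with the caller, which matches the configurable behaviour the idea describes.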

This functionality would allow the user to select (through a highlight box, or ctrl+click), only the tools in a workflow they would want to run, and the tools that are not selected would be skipped. The idea is similar to the new "add selected tools to a new tool container", but it would run them instead.

I know the conventional wisdom is to either put everything you don't want run into a tool container and disable it, or to just copy/paste the tools you want run into a blank workflow. However, for very large workflows, it is very time consuming to disable a dozen or more containers, only to re-enable them shortly afterwards, especially if those containers have to be created to isolate the tools that need to be run. Overall, this would be a quality of life improvement that could save the user some time, especially with large or cumbersome workflows.

One major improvement in version 11 is that you can now schedule workflows directly in the Gallery. One thing I miss though is the ability to see the whole log from the workflow (messages, warnings and conversion errors).

I have made a workaround by using the list runner (Crew Macros), but I think this should be a functionality on the server itself.

To see the workaround and the expected output, you can watch this video:

Hi there,

We have a relatively large table that we are trying to analyse using the data-investigation tools - however the Field summary tool's interactive output seems to fail on this data set producing no output at all. It produces no error message - just a blank output on the interactive output (the other two outputs are normally populated).

The table is 104 columns wide; 1.16M rows long; and 865 Mb in size excluding indices.

We put a random row select on this - and if we passed any more than 13100 rows into the Field Summary tool (with all 107 columns), then the interactive tool output is blank. If we scale this back to 13000 rows or fewer, the Field summary interactive view works as expected (providing a frequency histogram on each field).

Is this a known issue - there was no warning provided to indicate that there was an overrun or anything similar?

Thank you

Sean

Many of us use auto-increment primary keys in our tables, but these PK's don't exist in the raw data as a natural key. So when we get new raw data, we cannot use the Update / Insert if New method which keys off the Primary Key.

Imagine if you could select ANY unique key on the table instead?

There's no reason not to allow this from a SQL perspective, though it might be a little less efficient for some DB engines. But it would make things so much easier!!!

Right now, I instead load to a temp holding table and then do deletes and inserts using the Post Create SQL statement.
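For readers unfamiliar with this workaround, here is a small self-contained sketch of the staging-table pattern using Python's built-in `sqlite3` module. The `product` table, the `sku` natural key, and the function name are all hypothetical; a real database and the Post Create SQL statement would use that engine's own syntax.

```python
import sqlite3

def upsert_by_unique_key(conn, rows):
    """Load `rows` into a staging table, then delete-and-insert on the natural key `sku`."""
    conn.execute("CREATE TEMP TABLE IF NOT EXISTS staging (sku TEXT, price REAL)")
    conn.execute("DELETE FROM staging")
    conn.executemany("INSERT INTO staging (sku, price) VALUES (?, ?)", rows)
    # Remove target rows that share a natural key with the incoming data...
    conn.execute("DELETE FROM product WHERE sku IN (SELECT sku FROM staging)")
    # ...then insert everything from staging; the auto-increment id is assigned here.
    conn.execute("INSERT INTO product (sku, price) SELECT sku, price FROM staging")
    conn.commit()

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE product (id INTEGER PRIMARY KEY AUTOINCREMENT, sku TEXT UNIQUE, price REAL)"
)
conn.execute("INSERT INTO product (sku, price) VALUES ('A1', 1.0), ('B2', 2.0)")
upsert_by_unique_key(conn, [("B2", 2.5), ("C3", 3.0)])
print(conn.execute("SELECT sku, price FROM product ORDER BY sku").fetchall())
```

One trade-off of delete-and-insert is that updated rows receive a new auto-increment id, which is part of why a built-in "update on any unique key" option would be more convenient.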


The tokenize would be more powerful if in addition to Drop Extra with Warning / Without Warning / Error, you could opt to have extra tokens concatenated with the final column.

Example: I have values in a column like these:

3yd-A2SELL-407471

3vd-AAABORMI-3238738

3vd-RMLSFL-RX-10326049

In all 3 cases, I want to split to 3 columns (key, mlsid, mlsnumber), though I only care about the last two. But in the third example, the mlsnumber RX-10326049 actually contains a hyphen. (Yes, the source for this data picked a very bad delimiter for a concatenated value).

I can parse this a lot of different ways - here's how I do it in SQL:

MlsId = substr(substr(listingkey, instr(listingkey, '-')+1), 1, instr(substr(listingkey, instr(listingkey, '-')+1), '-')-1)

MlsNumber = substr(substr(listingkey, instr(listingkey, '-')+1), instr(substr(listingkey, instr(listingkey, '-')+1), '-')+1);

With Regex tokenize, I can split to 4 or more columns and then with a formula test for a 4th+ column and re-concatenate. BUT it would be awesome if in the Regex tokenize I could instead:

1. split to columns

2. # of columns 3

3. extra columns = ignore, add to final column
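The requested "add extras to the final column" behaviour is what a bounded split does in many languages. A minimal Python sketch, using the sample values from this post (the function name `split_keep_remainder` is hypothetical):

```python
def split_keep_remainder(value, delimiter="-", columns=3):
    """Split into at most `columns` parts; any extra tokens stay in the final part."""
    parts = value.split(delimiter, columns - 1)
    # Pad with None when the value has fewer tokens than requested columns.
    return parts + [None] * (columns - len(parts))

for listing_key in ("3yd-A2SELL-407471", "3vd-AAABORMI-3238738", "3vd-RMLSFL-RX-10326049"):
    key, mls_id, mls_number = split_keep_remainder(listing_key)
    print(mls_id, mls_number)
```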

I would like to see the pencil (that means writing to a record) go away when the Apply/Check button is clicked.

Hi all,

In the formula tool and the SQL editors, it would be great to have a simple bracket matcher (like in some of the good SQL tools or software IDEs) where if you highlight a particular open bracket, it highlights the accompanying close bracket for you. That way, I don't have to take my shoes and socks off to count all the brackets open and brackets closed if I've dropped one 🙂
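The core of such a bracket matcher is a simple depth counter. The Python sketch below is a simplified illustration only: `matching_bracket` is a hypothetical name, and it deliberately ignores brackets inside string literals or comments, which a real editor would need to handle.

```python
PAIRS = {"(": ")", "[": "]", "{": "}"}

def matching_bracket(text, open_index):
    """Return the index of the bracket that closes the one at `open_index`, or None."""
    opener = text[open_index]
    if opener not in PAIRS:
        raise ValueError(f"no opening bracket at index {open_index}")
    closer = PAIRS[opener]
    depth = 0
    for i in range(open_index, len(text)):
        if text[i] == opener:
            depth += 1
        elif text[i] == closer:
            depth -= 1
            if depth == 0:
                return i
    return None  # the bracket was never closed

expr = "IIF(IsNull([A]), Max([B], 0), [A])"
print(matching_bracket(expr, expr.index("(")))
```

A `None` result is the "dropped a bracket" case, which is exactly where a highlight (or lack of one) would help.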

It would also be great if we could have a right-click capability to format formulae nicely. Aqua Data Studio does a tremendous job of this today, and I often bring my query out of Alteryx, into Aqua to format, and then pop it back.

Change:

if X then if A then B else C endif else Z endif

To:

if X then
    if A then
        B
    else
        C
    endif
else
    Z
endif

or Change from

Select A,B from table c inner join d on c.ID = D.ID where C.A>10

to

Select

A

,B

From

Table C

Inner Join D

on C.ID = D.ID

Where

C.A>10

Finally - intellitext in all formulae and SQL editing tools - could we allow the user to bring up intellitext (hints about parameters, with click-through to guidance) like it works in Visual Studio?

Thank you

Sean

When adding multiple integer fields together in a formula tool, if one of the integer values is Null, the output for that record will be 0. For example, if the formula is [Field_A] + [Field_B] + [Field_C], if the values for one record are 5 + Null + 8, the output will be 0. All in all, this makes sense, as a Null isn't defined as a number in any way - it's like trying to evaluate 5 + potato. However, there is no error or warning indicating that this is taking place when the workflow is run, it just passes silently.

Is there any way to have this behavior reported as a warning or conversion error when it happens? Again, the behavior itself makes sense, but it would be great to get a little heads up when it's happening.
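A sketch of the requested behaviour in Python: the addition still produces the result described in this post (0 by default here), but a warning is raised whenever a Null operand is involved. `add_fields` and `null_result` are hypothetical names used only for illustration.

```python
import warnings

def add_fields(*values, null_result=0):
    """Add values; if any operand is None, warn and return `null_result` instead."""
    if any(v is None for v in values):
        warnings.warn(
            "Null operand in addition; returning null_result instead of a sum",
            RuntimeWarning,
            stacklevel=2,
        )
        return null_result
    return sum(values)

print(add_fields(5, 2, 8))
print(add_fields(5, None, 8))  # also emits a RuntimeWarning
```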

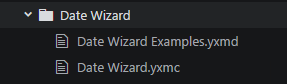

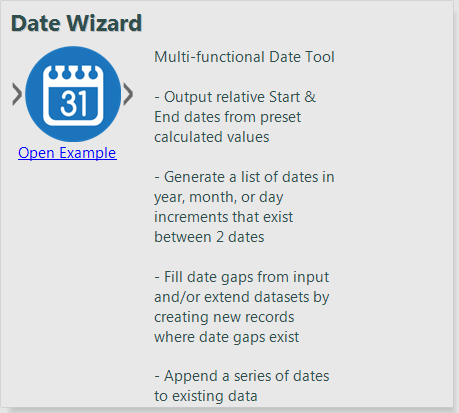

As seen in This Discussion Post, the idea here is to be able to add a link to example workflows in macro descriptions - like the ones seen in native tools.

Many thanks to @jdunkerley79 for demonstrating how this can be done by manually editing the macro's XML - specifically by adding a child element to the <MetaInfo> section, like so:

<Example>

<Description>Open Example</Description>

<File>\\aSERVER\aRootDir\path\to\Alteryx\Macros\Date Wizard\Date Wizard Examples.yxmd</File>

</Example>

One small caveat is that it doesn't support truly relative paths. @PaulN explained in the discussion post that a relative reference here would search in the sample folders.

"Alteryx default behavior is to look for examples under .\Alteryx\Samples\02 One-Tool Examples or Alteryx\Samples\02 One-Tool Examples (or .\Alteryx\Samples\en\02 One-Tool Examples)."

Having said that, trying to reference a macro example in the same folder (using a relative reference) will throw an error given the following situation:

Package Structure:

Date Wizard.yxmc XML edits:

<Example>

<Description>Open Example</Description>

<!-- THIS WORKS -->

<File>\\aSERVER\aRootDir\path\to\Alteryx\Macros\Date Wizard\Date Wizard Examples.yxmd</File>

<!-- THIS DOESNT

<File>Date Wizard Examples.yxmd</File>

<File>.\Date Wizard Examples.yxmd</File>

<File>./Date Wizard Examples.yxmd</File>

-->

</Example>

This shows a link in the Macro description but yields an error (shown below) when it is clicked.

Once again, this works fine with an absolute file path reference.

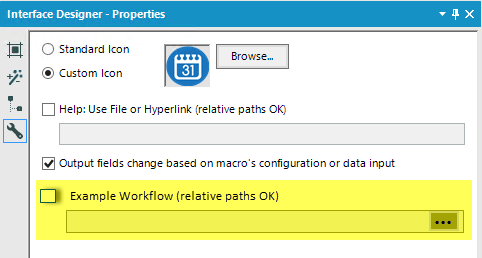

Here is ultimately what I am suggesting: Can we add an option to the Interface Designer (that updates the XML) and have it allow relative paths? Allowing relative paths would obviously be essential to maintaining the macro's ability to be "lift-and-shift" when packaged/moved/uploaded to servers/galleries etc.
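To illustrate what "relative to the macro" would mean, the Python sketch below resolves an example path against the folder containing the macro file. This is only a model of the suggested behaviour, not how Alteryx currently resolves `<File>` entries; `resolve_example_path` is a hypothetical helper, and the paths are the ones from this post.

```python
from pathlib import PureWindowsPath

def resolve_example_path(macro_file, example_file):
    """Resolve `example_file` against the folder that contains `macro_file`."""
    macro_dir = PureWindowsPath(macro_file).parent
    # Joining with an absolute path returns that absolute path unchanged,
    # so existing absolute references would keep working.
    return macro_dir / PureWindowsPath(example_file)

macro = r"\\aSERVER\aRootDir\path\to\Alteryx\Macros\Date Wizard\Date Wizard.yxmc"
for candidate in ("Date Wizard Examples.yxmd", r".\Date Wizard Examples.yxmd", "./Date Wizard Examples.yxmd"):
    print(resolve_example_path(macro, candidate))
```

Under this rule all three relative spellings from the XML comment would point at the same file next to the macro, wherever the package is moved.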

I'm assuming the option could look something like this, similar to the "Help" file, only it would show a link in the macro description...

In conclusion, this would be very useful in providing links to example workflows for custom macros that may be complex and/or not self-explanatory.

Cheers,

Taylor Cox

Hello all,

Within the databases that I work in, I often find that there is duplicated data for some columns, and when using a unique tool, I have little control of what is deemed the unique record and which is deemed the duplicate.

A fantastic addition would be the ability to select which record you'd like to keep based on the type + a conditional. For example, if I had:

| Field 1 | Field 2 | Field 3 |
|---|---|---|
| 1 | a | NULL |
| 2 | a | 15 |

I would want to keep the record with the non-null field (or non-zero if I cleansed it). It'd be something like "Select record where [Field 1] is greatest and [Field 3] is not null" (which just sounds like a summarize tool + filter, but I think you can see the wider application of this)

I know that you can either change the sort order beforehand, use a summarize tool, or go Unique > Filter duplicates > Join > Select records -- but I want the ability to just have a conditional selection based on a variety of criteria as opposed to adding extra tools.
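As a sketch of what a "conditional unique" could do, the Python below keeps one record per group and lets the user supply the preference rule. The names `keep_preferred` and `non_null_then_greatest` are hypothetical, and the rows are the two from the table above.

```python
def keep_preferred(records, group_key, prefer):
    """Keep one record per `group_key` value: the one with the highest `prefer` score."""
    chosen = {}
    for record in records:
        group = record[group_key]
        if group not in chosen or prefer(record) > prefer(chosen[group]):
            chosen[group] = record
    return list(chosen.values())

def non_null_then_greatest(record):
    """Prefer records where Field 3 is not null, then the greatest Field 1."""
    return (record["Field 3"] is not None, record["Field 1"])

rows = [
    {"Field 1": 1, "Field 2": "a", "Field 3": None},
    {"Field 1": 2, "Field 2": "a", "Field 3": 15},
]
print(keep_preferred(rows, "Field 2", non_null_then_greatest))
```

Because the preference is a tuple, the non-null test always outranks the size of Field 1, so a null record is only kept when its group has no non-null alternative.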

Anyways, that's just an idea! Thanks for considering its application!

Best,

Tyler
