Alteryx Designer Desktop Ideas



Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> at least, allow the tool to use black & white images (I wanted to test it on the MNIST, but the tool tells me that it necessarily needs RGB images) ?

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool, it is certainly perfectible, but very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible thanks to image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


Hi all,

One of the most common data-investigation tasks we have to do is comparing two data sets. This may mean making sure the columns are the same, checking that field names match, or even looking at row data. I think this would be a tremendous addition to the core toolset. I've made a fairly good start on it, and am more than happy if you want to take this and extend or add to it (I give this freely with no claim on the work).

Very very happy to work with the team to build this out if it's useful

Cheers

Sean
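To make the kind of comparison described above concrete, here is a minimal Python sketch. It is not Sean's tool and not an Alteryx feature; the function name `compare_datasets`, the report keys, and the sample rows are all hypothetical, and it assumes both data sets fit in memory and share a unique key column.

```python
def compare_datasets(left, right, key):
    """Compare two data sets given as lists of dicts (column name -> value).

    Assumes `key` is present and unique in every row of both data sets.
    """
    # Column-level comparison: which field names exist on each side.
    left_cols = set().union(*(row.keys() for row in left))
    right_cols = set().union(*(row.keys() for row in right))
    shared = sorted(left_cols & right_cols)

    # Row-level comparison: index rows by key, then diff the shared columns.
    left_by_key = {row[key]: row for row in left}
    right_by_key = {row[key]: row for row in right}

    changed = {}
    for k in left_by_key.keys() & right_by_key.keys():
        diffs = {
            col: (left_by_key[k].get(col), right_by_key[k].get(col))
            for col in shared
            if left_by_key[k].get(col) != right_by_key[k].get(col)
        }
        if diffs:
            changed[k] = diffs

    return {
        "only_in_left_columns": sorted(left_cols - right_cols),
        "only_in_right_columns": sorted(right_cols - left_cols),
        "only_in_left_rows": sorted(left_by_key.keys() - right_by_key.keys()),
        "only_in_right_rows": sorted(right_by_key.keys() - left_by_key.keys()),
        "changed_rows": changed,
    }


# Hypothetical sample data, for illustration only.
old = [
    {"id": 1, "name": "Ann", "city": "Lyon"},
    {"id": 2, "name": "Bob", "city": "Paris"},
]
new = [
    {"id": 2, "name": "Bob", "city": "Nice", "zip": "06000"},
    {"id": 3, "name": "Cy", "city": "Lille", "zip": "59000"},
]
report = compare_datasets(old, new, key="id")
```

The report separates schema differences (columns present on only one side) from row differences (keys present on only one side, and per-column value changes for shared keys), which are the three checks mentioned in the post.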

-

Category Data Investigation

-

Desktop Experience

Access to only MD5 hashes via MD5_ASCII(String) and MD5_UNICODE(String) found under string functions is limiting. Is there a way to access other hashing algorithms, ideally via the crypto algorithms from OpenSSL or the .NET framework?

- https://msdn.microsoft.com/en-us/library/system.security.cryptography.hashalgorithm(v=vs.110).aspx

- https://wiki.openssl.org/index.php/Command_Line_Utilities#Signing_.2F_Digest

Hashing functions are a very useful tool to have. There are many different types of hashes and each one has tradeoffs for different uses. This can range from error checking, privacy shielding, password protection, forensic analysis, message authentication (HMAC) and much more. See: http://stackoverflow.com/questions/800685/which-cryptographic-hash-function-should-i-choose

- For workflows with data containing existing hashes, being able to consistently create hashes from non-hashed data for comparison is useful.

- Hashes are also useful because they are the same outside the Alteryx environment. They can be used to confirm correct operation of a production system or a third party's external process.

Access to only MD5 hashes via MD5_ASCII(String) and MD5_UNICODE(String) found under string functions in the formula tool is a start, but quite limiting.

Further, the ability to use non-cryptographic hashes and checksums would be useful, such as MurmurHash or CRC. https://en.wikipedia.org/wiki/List_of_hash_functions

Having the implementation benefit from hardware acceleration (AES-NI / CUDA) would be a great plus for high volume applications.

For reference, these are some hash algorithms that could be useful in workflows:

SHA-1

SHA-256

Whirlpool

xxHash

MurmurHash

SpookyHash

CityHash

Checksum

CRC-16

CRC-32

CRC-32 MPEG-2

CRC-64

BLAKE-256

BLAKE-512

BLAKE2s

BLAKE2b

ECOH

FSB

GOST

Grøstl

HAS-160

HAVAL

JH

MD2

MD4

MD6

RadioGatún

RIPEMD

RIPEMD-128

RIPEMD-160

RIPEMD-320

SHA-224

SHA-256

SHA-384

SHA-512

SHA-3 (originally known as Keccak)

Skein

Snefru

Spectral Hash

Streebog

SWIFFT

Tiger
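For comparison with hashes produced outside Alteryx, the sketch below shows how a few of the algorithms listed above can be computed with Python's standard library (`hashlib` and `zlib`). The helper names `hash_text` and `crc32_text` are hypothetical. The digest depends on how the string is turned into bytes, so the `encoding` argument matters when matching another system's output; which encoding a given Alteryx function uses is not stated here and should be checked rather than assumed.

```python
import hashlib
import zlib


def hash_text(text, algorithm="sha256", encoding="utf-8"):
    """Return the hex digest of `text` using any algorithm hashlib supports."""
    data = text.encode(encoding)
    return hashlib.new(algorithm, data).hexdigest()


def crc32_text(text, encoding="utf-8"):
    """Return the CRC-32 checksum of `text` as 8 hex digits (non-cryptographic)."""
    return format(zlib.crc32(text.encode(encoding)), "08x")


md5_utf8 = hash_text("abc", "md5")
md5_utf16 = hash_text("abc", "md5", encoding="utf-16-le")
sha256_hex = hash_text("abc", "sha256")
crc_hex = crc32_text("123456789")

# The algorithms available in this Python build (varies with the OpenSSL version).
available = sorted(hashlib.algorithms_available)
```

The same text hashed as UTF-8 and as UTF-16 gives different MD5 digests, which is why a workflow that compares against existing hashes needs to know the byte encoding used when those hashes were created.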

-

Category Data Investigation

-

Category Parse

-

Category Transform

-

Desktop Experience

Hi there,

We have a relatively large table that we are trying to analyse using the data-investigation tools - however the Field summary tool's interactive output seems to fail on this data set producing no output at all. It produces no error message - just a blank output on the interactive output (the other two outputs are normally populated).

The table is 104 columns wide; 1.16M rows long; and 865 Mb in size excluding indices.

We put a random row select on this - and if we passed any more than 13100 rows into the Field Summary tool (with all 107 columns), then the interactive tool output is blank. If we scale this back to 13000 rows or fewer, the Field summary interactive view works as expected (providing a frequency histogram on each field).

Is this a known issue - there was no warning provided to indicate that there was an overrun or anything similar?

Thank you

Sean

-

Category Data Investigation

-

Desktop Experience

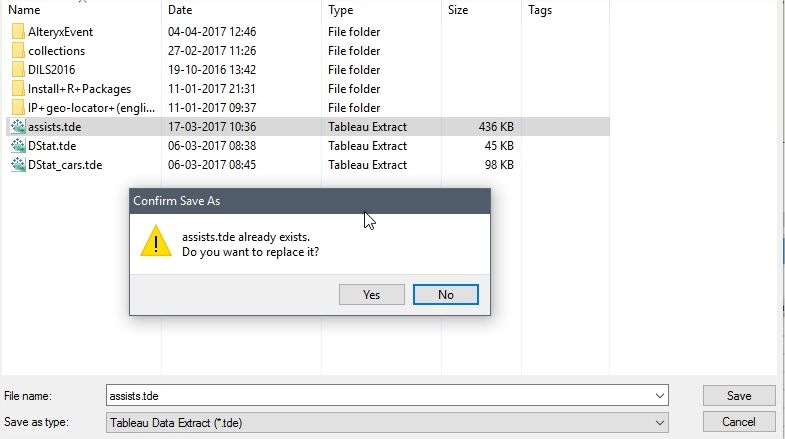

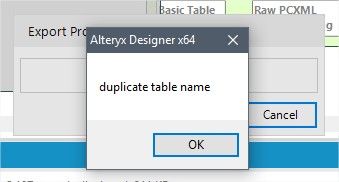

Sometimes I use the save button within the Browse tool to save a copy of the data. This does not work if you wish to overwrite an existing file, though.

For example, say I have a Tableau extract called mydata.tde and I use the Browse tool to save the data over this file. I am asked if I want to replace the file, and on choosing 'Yes' Alteryx tries to save the data but then shows me an error about 'duplicate table name'. It should simply replace the file with the new data.

-

Category Data Investigation

-

Desktop Experience

When working with large amounts of data, the Browse tool profiling causes the program to stop responding.

A feature to disable the profiling per browse tool.

or even better

After a set threshold (e.g. amount of rows), the auto profiling is disabled and requires an action to run.

-

Category Data Investigation

-

Desktop Experience

As my Alteryx workflows become more complex and involve integrating and conforming more and more data sources, it is becoming increasingly important to be able to communicate what the output fields mean and how they were created (i.e. transformation rules) as output for end-user consumption, particularly for the file target state output.

It would be great if Alteryx could do the following:

1. Produce a simple data dictionary from the Select tool and the Output tool. The Select tool more or less contains everything that is important to the business user. It would be awesome to know of a way to export this along with the actual data produced by the Output tool (hopefully this is something I've overlooked and is already offered).

Examples:

- using Excel would be to produce the output data set in one sheet and the data dictionary for all of its attributes in the second sheet.

- For an ODBC output, you could load the data set to the database and have the option to create a data dictionary as either a database table or a CSV file (you'd also want to offer the ability to append that data to the existing dictionary file or table).

2. This one is more complex, but would be awesome: exporting the workflow into a spreadsheet in Source to Target (S2T) format, along with supporting metadata / data dictionary for every step of the ETL process. This is necessary when I need to communicate my ETL processes to someone who cannot afford to purchase an Alteryx licence but is required to review and approve the ETL process that I have built. I'd be happy to provide examples of how someone would likely want to see that formatted.

-

Category Data Investigation

-

Category Documentation

-

Category In Database

-

Category Reporting

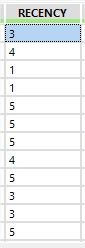

I have a column called Recency that only has values from 1 to 5. When I view the data using the Browse tool I see a scatter graph of the data that tells me very little apart from the fact that they only have values from 1 to 5. It would be much more useful to display a histogram showing how many records have 1,2 etc so I get a better idea of the data quality.
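As an illustration of the frequency view being asked for, here is a small Python sketch that counts how many records hold each value and renders a text histogram. The function name `value_histogram` and the sample `recency` values are hypothetical; this is not how the Browse tool works internally.

```python
from collections import Counter


def value_histogram(values, width=20):
    """Count records per distinct value and build one text bar per value."""
    counts = Counter(values)
    peak = max(counts.values(), default=0)
    lines = []
    for value in sorted(counts):
        # Scale each bar relative to the most frequent value.
        bar = "#" * round(width * counts[value] / peak)
        lines.append(f"{value}: {bar} ({counts[value]})")
    return counts, lines


# Hypothetical Recency scores limited to 1..5, as in the post.
recency = [1, 1, 2, 3, 3, 3, 4, 5, 5, 5, 5]
counts, lines = value_histogram(recency)
print("\n".join(lines))
```

For a low-cardinality column like this, the per-value counts show skew and missing categories at a glance, which a scatter plot of the raw values does not.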

-

Category Data Investigation

-

Desktop Experience

Python pandas dataframes and data types (numpy arrays, lists, dictionaries, etc.) are much more robust in general than their counterparts in R, and they play together much more easily as well. Moreover, there are only a handful of packages that do everything a data scientist would need, including graphing, such as SciKit Learn, Pandas, Numpy, and Seaborn. After utilizing R, Python, and Alteryx, I'm still a big proponent of integrating with the Python language much like Alteryx has integrated with R. At the very least, I propose creating the ability to write custom code, such as a Python tool.

-

API SDK

-

Category Data Investigation

-

Category Developer

-

Category Predictive

One of the tools that I use the most is the SELECT tool because I normally get large data sets with fields that I won't be using for a specific analysis or with fields that need re-naming. In the same way, sometimes Alteryx will mark a field in a different type than the one I need (e.g. date field as string). That's when the SELECT comes in handy.

However, when dealing with multiple sources, having many SELECT tools on your canvas can make the workflow look a little "crowded". Not to mention that the extra tools will need explaining later when presenting or sharing your canvas with others. That is why my suggestion is to give the CONNECTION tool "more power" by offering some of the functionality found in the SELECT tool.

For instance, if one of the most used features of the SELECT tool is to choose the fields that will move through the workflow, then maybe we can make that feature available in the CONNECTION tool. Similarly, if one of the most used features (by Alteryx users) is to rename fields or change the field type, then maybe we can make that available in the CONNECTION tool as well.

In the end, developers can benefit from faster workflow development and end users will benefit from having cleaner workflows presented to them, which always helps to get the message across.

What do you guys think? Any of you feel the same? Leave your comments below.

-

Category Connectors

-

Category Data Investigation

-

Category Input Output

-

Data Connectors

With more people moving into urban developments, it would be helpful to allow analysts to measure customers within a "walking distance". Similar to drive time analysis, a step up would be to incorporate "walk time" analysis.

-

Category Behavior Analysis

-

Category Data Investigation

-

Category Spatial

-

Desktop Experience

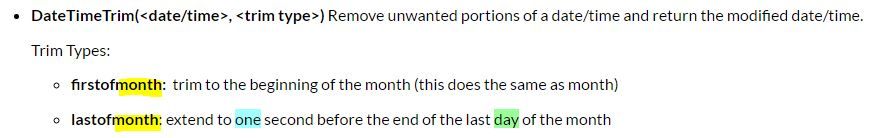

It was great to find the DateTimeTrim function when trying to identify future periods in my data set.

It would be even better if, in addition to "firstofmonth" and "lastofmonth", there were "firstofyear" and "lastofyear" options that would return, for instance, the start of Jan 1 xxxx and one second before the following Jan 1 (Dec 31 xxxx 23:59:59), respectively.

I'm not sure time down to the second would even be needed; precision down to the day would be enough.
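The requested behaviour can be sketched in Python as follows. The names `first_of_year` and `last_of_year` simply mirror the options proposed in the post; they are hypothetical and are not existing DateTimeTrim arguments as far as this post indicates. The sketch assumes "last of year" means one second before the next year begins.

```python
from datetime import datetime, timedelta


def first_of_year(dt):
    """Trim a datetime back to midnight on January 1 of the same year."""
    return dt.replace(month=1, day=1, hour=0, minute=0, second=0, microsecond=0)


def last_of_year(dt):
    """Extend a datetime to the last whole second of December 31 of the same year."""
    return dt.replace(month=12, day=31, hour=23, minute=59, second=59, microsecond=0)


stamp = datetime(2016, 7, 15, 10, 30, 5)
start = first_of_year(stamp)
end = last_of_year(stamp)

# One second after the end of the year is the start of the next year.
next_year_start = end + timedelta(seconds=1)
```

If only day precision is needed, comparing `start.date()` and `end.date()` gives the same period boundaries without the time component.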

-

Category Data Investigation

-

Category Preparation

-

Category Time Series

-

Desktop Experience

Hi All,

I believe the following would help improve the functionality of Select Tool.

The idea is to have a defaulting option for each of the fields in the Select Tool (which I believe should be a lightweight tool, i.e. one that does not adversely impact performance and gives the most exhaustive picture of all columns flowing through a particular point in a pipeline).

Following are some of the cases where defaulting might come handy -

1) Fields which are supposed to hold monetary data - instead of Null, one can put 0.00 to help roll up summary properly.

2) Fields which are supposed to hold dates (say expiry date) - instead of Null, one can put some enterprise standards like 31-12-2099 to avoid mixing Nulls and 31-12-2099.

3) Fields which are supposed to hold purchase quantity/number of employees/number of merchandise - instead of Null, one can put 0 (and not 0.00) again to help with roll up summary.

4) Fields which are supposed to hold Currency - instead of Null, one can put USD.

5) Fields which are supposed to hold dates (say this time create date) - instead of Null, one can hardcode actual date, or an additional feature to put Now() kind of functions.

At present, one option for achieving the same result is to add a Formula Tool and code whatever is desired inside it.

Benefits of having the functionality inside Select Tool would be -

1) It would be more user friendly and call for faster build to just write '0.00' or 'USD' or '31-12-2099' as compared to writing IF IsNull()... statements.

2) Inside Formula Tool, user needs to pull desired fields from the drop down and hence exhaustive view of all fields passing through pipeline is not available.

The pain of selecting fields from the drop down and writing the actual formulas grows with the number of columns, and the process becomes more prone to errors of human omission.
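The defaulting idea amounts to a per-field map of replacement values applied wherever a field is Null. Here is a minimal Python sketch of that behaviour; the field names, the `DEFAULTS` map, and `apply_defaults` are hypothetical and only illustrate the cases listed above (monetary amount, expiry date, quantity, currency).

```python
from datetime import date

# Hypothetical per-field defaults, following the cases listed in the post.
DEFAULTS = {
    "amount": 0.00,                   # monetary field
    "expiry_date": date(2099, 12, 31),  # enterprise-standard far-future date
    "quantity": 0,                    # integer count, not 0.00
    "currency": "USD",
}


def apply_defaults(rows, defaults):
    """Replace None with the configured default, leaving other fields untouched."""
    return [
        {
            col: (defaults[col] if val is None and col in defaults else val)
            for col, val in row.items()
        }
        for row in rows
    ]


rows = [
    {"amount": None, "expiry_date": None, "quantity": 3, "currency": None, "note": None},
    {"amount": 12.5, "expiry_date": date(2025, 1, 31), "quantity": None, "currency": "EUR", "note": "ok"},
]
cleaned = apply_defaults(rows, DEFAULTS)
```

Keeping the defaults in one table next to the field list is the point of the suggestion: every column is visible at once, and a field without a configured default (such as `note` here) simply stays Null.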

Thanks,

Rohit Bajaj

-

Category Data Investigation

-

Category Preparation

-

Category Transform

-

Desktop Experience

My users need the same functionality In-DB as the in-memory tool "Field Summary".

The purpose is to discover the data: distribution, minimum, maximum, count, valid, unique, ...

-

Category Data Investigation

-

Category In Database

-

Data Connectors

-

Desktop Experience

I know that, for the most part, the Alteryx core data bundle is the only one that is part of Allocate. It would be great if you could open up Allocate to the user so we can add our own third-party data sources. Just tell us what the requirements are to make our datasets ready for Allocate and then we can load them ourselves. Then we can use the Allocate workspace to query data in a similar way.

for example:

Geography(DMA,*)

Variable(CYADULT18P,CYADULT18P,False)

-

Category Data Investigation

-

Desktop Experience

It was discovered that the 'Select' transformation does not throw warning messages when data truncation happens, whereas the 'Formula' transformation does raise the relevant warning. I think it would be good to have consistent logging of warnings/errors for all transformations (at least across the ones that serve the same use cases; e.g. when using Alteryx as an ETL tool, 'Select' and 'Formula' tool usage should be commonplace).

Without this in place, it becomes difficult to completely rely on Alteryx in terms of whether in a workflow which is moving/populating data from source to target truncation related errors/warnings would be highlighted in a consistent manner or not. This might lead to additional overhead of having some logic built in to capture such data issues which is again differing transformation by transformation - for e.g when data passes through 'Formula' tool there is no need for custom error/warning logging for truncation but when the same data passes through 'Select' transformation in the workflow it needs to be custom captured.

-

Category Data Investigation

-

Category Preparation

-

Category Transform

-

Desktop Experience

It would help if there were an option to test the outcome of a formula during the build itself, rather than creating dummy workflows with dummy data to test it.

For instance, there could be a dynamic window that generates input fields based on those used in the actual 'Formula'; one could provide test values there and click a 'Test' button to check the output within the tool itself.

This would also be very handy when writing big/complex formulas involving regular expressions, so that a user can test her formula without having to switch screens to third-party on-the-fly testing tools, run the entire original workflow, or create test workflows.

-

Category Data Investigation

-

Category Preparation

-

Category Transform

-

Desktop Experience

It would be good if an option can be provided wherein on clicking a particular data profiling output (cellular level) one can see the underlying records.

Maybe the configurator/designer could be given this option, where she can select her choice of technical/business keys, and when an end user (of the Data Profiling report output) clicks a data profiling result, he is redirected to the keys selected earlier.

One option might be to generate the output of data profiling in a zip folder which would contain the data profiling results along with the key fields (hyperlinked files etc).

Since in such case even data would be maintained/stored, it would be good to either encrypt or password protect the zip file based on various industry standards.

This can be provided as an optional feature under something like advanced properties for the tool, making use of the industry best practices followed in context of report formatting and rendering.

The reason why this should be optional is, not always there might be a need to have the detailed linking back to source level records in place.

For e.g. if the need is only to highlight the Data Profiling outcome at a high level to a Data Analyst this might not be useful.

On the other hand if there is a need for the Data Steward to actually go and correct the data based on the Profiling results, the linking of profiling results back to source data might come handy.

-

Category Data Investigation

-

Desktop Experience

It would be super cool to run a regular workflow in "test mode" or some other such way of running it just one tool at a time, so you can check tool outputs along the way and fix issues as they occur, especially for big workflows. Another advantage would be that if, for whatever reason, a working module stops working (maybe someone changed an underlying file - that NEVER happens to me lol), rather than running the whole thing, fixing something, running the whole thing again, you could just fix what's broken and run it that far before continuing.

Actually, that gives me an even better idea... a stop/start tool. Drop it in the workflow and the module will run up to that point and stop or start from that point. Hmm... time to submit a second idea!

-

Category Data Investigation

-

Category Interface

-

Category Preparation

-

Desktop Experience

In addition to the existing functionality, it would be good if the below functionality can also be provided.

1) Pattern Analysis

This will help profile the data in a better way, help confirm data to a standard/particular pattern, help identify outliers and take necessary corrective action.

A sample would be, for emails, translating 'abc@gmail.com' to 'nnn@nnnnn.nnn', so the outliers might be values where '@' or '.' is not present.

Other example might be phone numbers, 12345-678910 getting translated to 99999-999999, 123-456-78910 getting translated to 999-999-99999, (123)-(456):78910 getting translated to (999)-(999):99999 etc.

It would also help to have the Pattern Frequency Distribution alongside.

So from the above example we can see that there are 3 different patterns in which phone numbers exist, and hence it might call for relevant standardization rules.
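The pattern translation and its frequency distribution can be sketched in a few lines of Python. This is only an illustration of the idea, not an Alteryx feature; the function names are hypothetical, and it follows the post's convention of mapping letters to `n` and digits to `9` while leaving punctuation as-is.

```python
from collections import Counter


def to_pattern(value):
    """Replace each digit with '9' and each letter with 'n'; keep other characters."""
    out = []
    for ch in value:
        if ch.isdigit():
            out.append("9")
        elif ch.isalpha():
            out.append("n")
        else:
            out.append(ch)
    return "".join(out)


def pattern_frequencies(values):
    """Count how many values share each pattern."""
    return Counter(to_pattern(v) for v in values)


# The phone formats from the post, plus one repeat to show the counts.
phones = ["12345-678910", "123-456-78910", "(123)-(456):78910", "54321-019876"]
freq = pattern_frequencies(phones)
for pattern, count in freq.most_common():
    print(pattern, count)
```

Rare patterns at the bottom of the frequency list are the natural candidates for outlier review or for a standardization rule.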

2) More granular control of profiling

It would be good, that, in the tool, if the profiling options (like Unique, Histogram, Percentile25 etc) can be selected differently across fields.

A sub-idea here might also be to check data against external third party data providers for e.g. USPS Zip validation etc, but it would be meaningful only for selected address fields, hence if there is a granular control to select type of profiling across individual fields it will make sense.

Note - When implementing the granular control, would also need to figure out how to put the final report in a more user friendly format as it might not conform to a standard table like definition.

3) Uniqueness

With on-going importance of identifying duplicates for the purpose of analytic results to be valid, some more uniqueness profiling can be added.

For example - Soundex, which is based on how similar/different two things sound.

Distance, which is based on how much traversal is needed to change one value to another, etc.

So along side of having Unique counts, we can also have counts if the uniqueness was to factor in Soundex, Distance and other related algorithms.

For example if the First Name field is having the following data -

Jerry

Jery

Nick

Greg

Gregg

The number of Unique records would be 5, where as the number of soundex unique might be only 3 and would open more data exploration opportunities to see if indeed - Jerry/Jery, Greg/Gregg are really the same person/customer etc.
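To check the counts in this example, here is a sketch of the classic American Soundex rules in Python. It is a plain-Python illustration, not Alteryx's own Soundex implementation, so edge cases may differ; the function name and the `CODES` table layout are choices made for this sketch.

```python
# Consonant groups used by American Soundex; vowels, H, W and Y have no code.
CODES = {
    **dict.fromkeys("BFPV", "1"),
    **dict.fromkeys("CGJKQSXZ", "2"),
    **dict.fromkeys("DT", "3"),
    "L": "4",
    **dict.fromkeys("MN", "5"),
    "R": "6",
}


def soundex(name):
    """Return the 4-character Soundex code of a name ('' if it has no letters)."""
    letters = [c for c in name.upper() if c.isalpha()]
    if not letters:
        return ""
    encoded = letters[0]
    prev = CODES.get(letters[0], "")
    for ch in letters[1:]:
        if ch in "HW":
            continue  # H and W do not separate letters with the same code
        code = CODES.get(ch, "")
        if code and code != prev:
            encoded += code
        prev = code  # a vowel resets prev, so a repeated code after it is kept
    return (encoded + "000")[:4]


names = ["Jerry", "Jery", "Nick", "Greg", "Gregg"]
codes = {n: soundex(n) for n in names}
exact_unique = len(set(names))
soundex_unique = len(set(codes.values()))
```

With these rules, Jerry and Jery share one code and Greg and Gregg share another, so the five exact-unique names collapse to three Soundex-unique values, matching the numbers in the post.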

4) Custom Rule Conformance

I think it would also be good if some functionality similar to multi-row formula can be provided, where we can check conformance to some custom business rules.

For example, it might be more helpful to check how many Age Units (Days/Months/Years) are blank/null where the related Age Number (1, 10, 50, etc.) is populated, rather than having a vanilla count of null and not null for individual (but related) columns.
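A cross-field rule like this can be expressed as a predicate over a whole row and counted across the data set. The sketch below is a hypothetical illustration in Python; the column names `age_number` and `age_unit` and the helper names are assumptions, not part of any existing tool.

```python
def age_unit_present_when_number_present(row):
    """Rule: if an age number is populated, its unit must be populated too."""
    has_number = row.get("age_number") is not None
    has_unit = row.get("age_unit") not in (None, "")
    return has_unit or not has_number


def count_rule_violations(rows, rule):
    """Count the rows for which the business rule does not hold."""
    return sum(1 for row in rows if not rule(row))


rows = [
    {"age_number": 10, "age_unit": "Days"},
    {"age_number": 50, "age_unit": None},   # violation: number without unit
    {"age_number": 1, "age_unit": ""},      # violation: blank unit
    {"age_number": None, "age_unit": None},  # fine: nothing populated
]
violations = count_rule_violations(rows, age_unit_present_when_number_present)

# For contrast, the plain per-column null count that the post calls less helpful.
null_units = sum(1 for row in rows if row["age_unit"] is None)
```

The per-column null count reports 2 null units here, but only one of those rows actually breaks the rule, while the blank-string row is missed entirely; the rule-based count captures both real problems.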

Thanks,

Rohit

-

Category Data Investigation

-

Category Preparation

-

Desktop Experience

There is a great functionality in Excel that lets users "seek" a value that makes whatever chain of formulas you might have work out to a given value. Here's what Microsoft explains about goal seek: https://support.office.com/en-us/article/Use-Goal-Seek-to-find-a-result-by-adjusting-an-input-value-...

My specific example was this:

In the excel (attached), all you have to do is click on the highlighted blue cell, select the “data” tab up top and then “What-if analysis” and finally “goal seek.” Then you set the dialogue box up to look like this:

Set cell: G9

To Value: 330

By changing cell: J6

And hit “Okay.” Excel then iteratively finds the value for the cell J6 that makes the cell G9 equal 330. Can I build a module that will do the same thing? I’m figuring I wouldn’t have to do it iteratively, if I could build the right series of formulas/commands. You can see what I’m trying to accomplish in the formulas I’ve built in Excel, but essentially I’m trying to build a model that will tell me what the % Adjustment rate should be for the other groups when I’ve picked the first adjustment rate, and the others need to change proportionally to their contribution to the remaining volume.

There doesn't really seem to be a way to do this in Alteryx that I can see. I hate to think there is something that excel can do that Alteryx can't!
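For reference, goal seeking is a one-dimensional root-finding problem: find the input at which a chain of formulas hits a target value. Below is a minimal Python sketch using bisection. Excel's own Goal Seek algorithm is not described in the post, so bisection here is a stand-in technique, and the `model` function is a hypothetical placeholder, not the spreadsheet model from the attachment. Bisection assumes the model is continuous and that the target lies between the outputs at `low` and `high`.

```python
def goal_seek(func, target, low, high, tol=1e-9, max_iter=200):
    """Find x in [low, high] such that func(x) is approximately target."""
    f_low = func(low) - target
    f_high = func(high) - target
    if f_low == 0:
        return low
    if f_high == 0:
        return high
    if f_low * f_high > 0:
        raise ValueError("target is not bracketed by [low, high]")
    for _ in range(max_iter):
        mid = (low + high) / 2
        f_mid = func(mid) - target
        if abs(f_mid) < tol or (high - low) / 2 < tol:
            return mid
        # Keep the half-interval whose endpoints still straddle the target.
        if f_low * f_mid < 0:
            high = mid
        else:
            low, f_low = mid, f_mid
    return (low + high) / 2


def model(rate):
    """Hypothetical stand-in: a fixed volume plus a volume scaled by an adjustment rate."""
    return 100 + 200 * (1 + rate)


# "Set cell to value 330 by changing the rate", searching rates between 0% and 100%.
rate = goal_seek(model, target=330, low=0.0, high=1.0)
```

In a workflow tool, the same loop would correspond to an iterative process that re-evaluates the formulas with a narrowed input range on each pass; when the formulas can be inverted algebraically, a direct calculation avoids the iteration altogether.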

-

Category Data Investigation

-

Category Predictive

-

Desktop Experience
