Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by showing the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
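Until grayscale input is supported, one possible workaround is to replicate the single channel three times before feeding images to the tool. The sketch below only illustrates that idea on plain nested lists; `gray_to_rgb` is a hypothetical helper, not part of the Image Recognition tool.

```python
from typing import List

Gray = List[List[int]]        # height x width, pixel values 0-255
RGB = List[List[List[int]]]   # height x width x 3

def gray_to_rgb(image: Gray) -> RGB:
    """Copy each grayscale value into the R, G and B channels."""
    return [[[px, px, px] for px in row] for row in image]

# A tiny 2x2 "image" standing in for an MNIST digit.
sample = [[0, 128], [255, 64]]
rgb = gray_to_rgb(sample)
```

The result looks identical to the original when displayed, but it has the three-channel shape an RGB-only model expects.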

Question: will you let the user choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will help more people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


Currently if I drag a tool onto the canvas and it has multiple input anchors, Alteryx will try to connect to the first input anchor from the output of the nearest tool I am hovering near.

However, the improvement I would like to see is this: when specific tools are required to go into each input, Designer should 'intelligently' connect to the correct input. For example, in the gif below I have a PDF Input and a PDF Template tool (pre-computer vision), and when I bring the Image to Text tool in, it tries to connect the output of the Template tool to the D input anchor, when the correct input is the T anchor. This means I have to delete the connection and re-wire it, which slows down development.

Often (like, a surprising amount of the time), we will be on a working session with a customer and I'll notice they will break a data stream connection in order to prevent downstream tools from running. Of course, I always coach them to add a tool container to the downstream tools, then disable it but they continue to choose not to.

I'd love to see a feature where we can right-click on a data stream, then select "disable downstream tools", which then auto adds (and disables) a tool container.

Gif of current behavior I too often see below, thank you!

Similar to https://community.alteryx.com/t5/Alteryx-Designer-Ideas/Custom-Functions-in-AMP/idc-p/845446#M16381, it would be great to have AMP allow for custom C++ functions. Custom XML functions were added in 21.1 for AMP, so custom C++ functions would be the natural next step!

cc: @jdunkerley79 @TonyaS

The Dynamic Input tool will not accept inputs with different record layouts. The "brute force" solution is to use a standard Input tool for each file separately and then combine them with a Union tool. The Union tool accepts files with different record layouts and issues warnings. Please enhance the Dynamic Input tool (or, perhaps, add a new tool) so that it combines the Dynamic Input functionality with the Union tool's more laid-back, inclusive approach. Thank you.
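To make the requested behavior concrete, here is a minimal sketch of a union by field name over inputs with different layouts. It assumes each file has already been read into a list of dictionaries; `union_by_name` is a hypothetical helper and does not reflect how either tool is implemented.

```python
from typing import Any, Dict, Iterable, List

Record = Dict[str, Any]

def union_by_name(*sources: Iterable[Record]) -> List[Record]:
    """Stack records from all sources, filling missing fields with None."""
    columns: List[str] = []   # field names in order of first appearance
    rows: List[Record] = []
    for source in sources:
        for record in source:
            for name in record:
                if name not in columns:
                    columns.append(name)
            rows.append(record)
    return [{name: row.get(name) for name in columns} for row in rows]

file_a = [{"id": 1, "name": "Ann"}]
file_b = [{"id": 2, "city": "Lyon"}]
combined = union_by_name(file_a, file_b)
```

Fields that exist in only one input simply come out as nulls for the other input's rows, which is the "inclusive" behavior described above.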

Hi Team,

It would be very helpful to add a run and stop option for scheduled workflows in Alteryx Designer Desktop automation. Currently there is no option to pause a workflow. Implementing this idea would make life easier for those who run a large number of workflows.

Thanks,

Kaustubh

Hi,

Standard In-DB connection configuration for PostgreSQL / Greenplum makes the "Datastream-In" In-DB tool load data line by line instead of using Bulk mode.

As a result, loading data into an In-DB stream is very slow.

Example

Connection configuration

Workflow

100,000 lines are sent to Greenplum using a "Datastream-In" In-DB tool.

This is a demo workflow; a real In-DB stream could be more complex and could not be replaced by an in-memory Output Data tool.

Load time: 11 minutes.

It's slow and spams the database with an insert for each line.

However, there is a workaround.

We can configure an In-Memory connection using the bulk mode:

And paste the connection string into the "Write" tab of our In-DB connection:

Load time: 24 seconds.

It's fast because it uses Bulk mode.

This workaround has been validated by the Greenplum team but not by the Alteryx support team.

Could you please support this workaround?

Tested on version 2021.3.3.63061

I am a citizen developer utilising Microsoft Power Apps and would like to see a Dataverse connector.

Please improve the Excel XLSX output options in the Output tool, or create a new Excel Output tool,

or enhance the Render tool to include an Excel output option, with no focus on margins, paper size, or paper orientation

The problem with the current Basic Table and Render tools is that they are geared towards reporting, with a focus on page size and margins.

Many of us use Excel as simply a general output method, with no consideration for fitting the output on a printed page.

The new tool or Render enhancement would handle different formats/different schemas without the need for a batch macro, and would include the options below.

The only current option to export different schemas to different Sheets in one Excel file, without regard to paper formatting, is to use a batch macro and include the CReW macro Wait a Second, to allow Excel to properly shut down before a new Sheet is created, to avoid file-write-contention issues.

Including the Wait a Second macro increased the completion time for one of my workflows by 50%, as shown in the screenshots below.

I have a Powershell script that includes many of the formatting options below, but it would be a great help if a native Output or Reporting tool included these options:

Allow options below for specific selected Sheet names, or for All Sheets

AllColumns_MaxWidth: Maximum width for ALL columns in the spreadsheet. Default value = 50. This value can be changed for specific columns by using option Column_SetWidth.

Column_SetWidth: Set selected columns to an exact width. For the selected columns, this value will override the value in AllColumns_MaxWidth.

Column_Centered: Set selected columns to have text centered horizontally.

Column_WrapText: Set selected columns to Wrap text.

AllCells_WrapText: Checkbox: wrap text in every cell in the entire worksheet. Default value = False.

AllRows_AutoFit: Checkbox: set the height of every row to autofit. Default value = False.

Header_Format: checkbox for Bold, specify header cells background color, Border size: 1pt, 2pt, 3pt, and border color, Enable_Data_Filter: checkbox

Header_freeze_top_row: checkbox, or specify A2:B2 to freeze panes

Sheet_overflow: checkbox: if the number of Sheet rows exceeds Excel limit, automatically create the next sheet with "(2)" appended

Column_format_Currency: Set selected columns to Currency: currency format, with comma separators, and negative numbers colored red.

Column_format_TwoDecimals: Set selected columns to Two decimals: two decimals, with comma separators, and negative numbers colored red.

Note: If the same field name is used in Column_format_Currency and Column_format_TwoDecimals, the field will be formatted with two decimals, and not formatted as currency.

Column_format_ShortDate: Set selected columns to Short Date: the Excel default for Short Date is "MM/DD/YYYY".

File_suggest_read_only: checkbox: Set flag to display this message when a user opens the Excel file: "The author would like you to open 'Analytic List.xlsx' as read-only unless you need to make changes. Open as read-only?"

vb code: xlWB.ReadOnlyRecommended = True

File_name_include_date_time: checkboxes to add file name Prefix or Suffix with creation Date and/or Time
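As an illustration of how the Sheet_overflow option could behave, here is a rough sketch that splits rows across sheets once a per-sheet limit is reached. The function name, the one-row header allowance, and the exact suffix spacing (" (2)") are assumptions made for the example; 1,048,576 is the worksheet row limit of the XLSX format.

```python
from typing import Iterator, List, Sequence, Tuple

EXCEL_MAX_ROWS = 1_048_576  # rows per worksheet in the XLSX format

def split_into_sheets(
    sheet_name: str,
    rows: Sequence,
    header_rows: int = 1,
    max_rows: int = EXCEL_MAX_ROWS,
) -> Iterator[Tuple[str, List]]:
    """Yield (sheet name, rows) pairs, adding "(2)", "(3)", ... on overflow."""
    capacity = max_rows - header_rows  # data rows that fit under the header
    if capacity <= 0:
        raise ValueError("max_rows must be larger than header_rows")
    for index, start in enumerate(range(0, len(rows), capacity), start=1):
        name = sheet_name if index == 1 else f"{sheet_name} ({index})"
        yield name, list(rows[start:start + capacity])

# Small limit so the overflow is easy to see: 2 data rows per sheet.
sheets = list(split_into_sheets("Data", list(range(5)), max_rows=3))
```

With the tiny limit used here, five rows end up on three sheets; with the real limit the same logic would only create a second sheet for very large outputs.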

========

Examples:

My only current option: use a batch macro, plus a Wait a Second macro, to write different formats/schemas to multiple Sheets in one Excel file:

Using the Wait a Second macro, to allow Excel to shut down before writing a new Sheet, to avoid write-contention issues, results in a workflow that runs 50% longer:

With the introduction of Workflow Comparisons in V2021.3, it only seems natural to me that the next step would be a method of handling those changes. Maybe have some clickable dropdowns on the changed tools that offer a few options as to what you'd like to do about them. I think the options to start off with would be "Apply this change to that workflow" and "Apply the other workflow's change to this one", along with "Apply all of this workflow's changes to that one" and "Apply all of that workflow's changes to this one" somewhere in the header.

I know that I will occasionally get a request to change a workflow while I'm already in the middle of making a change to it or am waiting on approval for a change I've made and am hoping to implement. The current version control system on Server does not make it easy to implement multiple changes that may need to be implemented in an order other than the one in which they were started. The current process seems to be to merge them later by going through the whole process of selectively copying the changed tools and pasting/replacing them or otherwise manually modifying the tools to make them match.

Likewise, implementing the version merging proposed here will allow versioning strategies more akin to branches in git. One could more-or-less maintain two streams of changes until they were both complete and merge or productionize them as they're complete and ready.

Similar to how the Join tool lets you "Select all Left" or "Select all Right", I'd like the Append Fields tool to have an option to select all source or all target fields. Same for deselect.

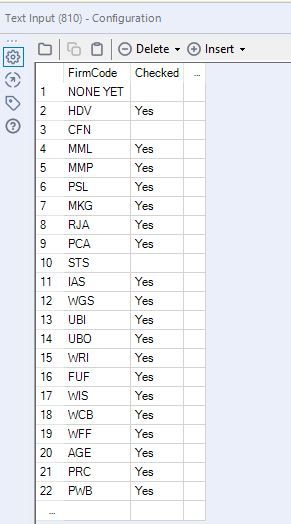

It would be great to have the option to FILTER on the columns within the TEXT INPUT TOOL.

For example, in the screenshot below I would like to filter on FirmCode alphabetically.

For performance, it would be great if designers could mark Batch Macros as safe for parallel execution, e.g. to declare that the macro will not deadlock itself when accessing inputs / outputs.

Alteryx would then run either all iterations simultaneously or as many as the available resources permit.

My use case is a rule table (DMN) that is executed against a data set: each rule needs to be executed against each row. The rules are expressed as formulas and are joined through the Dynamic Replace tool.

However, we need to check whether an input matches multiple rules, as this may violate the matching process, so I run each rule through the batch macro to find matches. Once all matches are processed, the matching is validated.

The input could be 30k rows with about 80 rules, which takes my Alteryx about five minutes to execute.
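The use case above can be sketched outside Alteryx as independent per-rule iterations submitted to a worker pool, followed by a validation pass. Everything here is illustrative: the rule names, the row shape, and the helper functions are assumptions, and in CPython a thread pool mainly helps when iterations wait on I/O rather than on pure computation.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

Row = Dict[str, int]
Rule = Callable[[Row], bool]

def match_rule(rule: Rule, rows: List[Row]) -> List[int]:
    """One 'batch macro iteration': indexes of the rows matching one rule."""
    return [i for i, row in enumerate(rows) if rule(row)]

def run_rules_in_parallel(
    rules: Dict[str, Rule], rows: List[Row], max_workers: int = 4
) -> Dict[str, List[int]]:
    """Run every rule as an independent task; iterations share no state."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {
            name: pool.submit(match_rule, rule, rows)
            for name, rule in rules.items()
        }
        return {name: future.result() for name, future in futures.items()}

def rows_matching_multiple_rules(
    matches: Dict[str, List[int]], row_count: int
) -> List[int]:
    """Validation step: rows hit by more than one rule."""
    counts = [0] * row_count
    for indexes in matches.values():
        for i in indexes:
            counts[i] += 1
    return [i for i, count in enumerate(counts) if count > 1]

rows = [{"amount": 5}, {"amount": 50}, {"amount": 500}]
rules = {
    "small": lambda row: row["amount"] < 100,
    "large": lambda row: row["amount"] > 20,
}
matches = run_rules_in_parallel(rules, rows)
conflicts = rows_matching_multiple_rules(matches, len(rows))
```

Because each rule only reads the shared rows and writes its own result, the iterations cannot block one another, which is the property a "safe for parallel execution" flag would assert.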

There needs to be a way to step into a macro that is a component of a parent workflow, for debugging.

Currently the only way to debug these is to capture the inputs to the macro from the parent workflow, and then amend the inputs on the macro and run it. For iterative / batch macros, there is no option to debug at all. This can be tedious, especially if there are a number of inputs, large amounts of data, or nested macros.

There should be an option on the tool representing the macro in the parent workflow to trigger a Debug when running the workflow. This would result in the same behavior as choosing 'Debug' from the interface panel in the macro itself: a new 'debug' workflow is created with the inputs received from the parent workflow.

On iterative / batch macros, the user should be required to specify which iteration / control parameter value the debug will be triggered on. So if a macro returns an error on the 3rd iteration, the user ticks 'Debug' and sets Iteration = 3. If the run doesn't reach the 3rd iteration, no debug workflow is created.

A very useful and common function

https://www.w3schools.com/sql/func_sqlserver_coalesce.asp

Return the first non-null value in a list:

It exists in SQL, Qlik Sense, etc.
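For readers unfamiliar with the function, its semantics can be sketched in a few lines of Python. This only illustrates the behavior being requested, with `None` standing in for null; it is not an Alteryx formula.

```python
from typing import Optional, TypeVar

T = TypeVar("T")

def coalesce(*values: Optional[T]) -> Optional[T]:
    """Return the first argument that is not None, or None if there is none."""
    for value in values:
        if value is not None:
            return value
    return None

first_text = coalesce(None, None, "a", "b")
first_number = coalesce(None, 0, 5)
```

Note that only nulls are skipped: a zero or an empty string counts as a value, which matches the SQL behavior.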

Best regards,

Simon

It would be awesome if we could edit annotations while the workflow is running.

I love annotating all my tools, and I'm often sitting there looking at my canvas right after hitting run and thinking, "I should annotate that!" and then moving on and forgetting after it's run. I'm not sure if that's feasible or not, but I think it would be neat.

We could really use a proper API Tool for Input, rather than rely on curl queries, etc. that end up requiring many tools to parse into a proper table form, even using the JSON tools!

I, for one, regularly deal with cloud APIs and pull their data. We need an API Input tool that can handle various auth methods, headers, params, body data, etc., and that will ALSO convert the typical output (JSON) into two outputs: meta info and table-compatible info.
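The two-output idea can be sketched as follows. The response shape (a `data` array of records next to paging fields) and the helper names are assumptions chosen for illustration; real APIs vary, which is why the records key is a parameter.

```python
import json
from typing import Any, Dict, List, Tuple

def flatten(record: Dict[str, Any], prefix: str = "") -> Dict[str, Any]:
    """Turn nested objects into dotted column names, e.g. owner.name."""
    flat: Dict[str, Any] = {}
    for key, value in record.items():
        name = f"{prefix}{key}"
        if isinstance(value, dict):
            flat.update(flatten(value, prefix=f"{name}."))
        else:
            flat[name] = value
    return flat

def split_response(
    payload: str, records_key: str = "data"
) -> Tuple[Dict[str, Any], List[Dict[str, Any]]]:
    """Split a JSON response into (meta info, table-style rows)."""
    document = json.loads(payload)
    meta = {k: v for k, v in document.items() if k != records_key}
    rows = [flatten(record) for record in document.get(records_key, [])]
    return meta, rows

# Hypothetical response body, not taken from any specific API.
payload = (
    '{"total": 2, "next": null, "data": ['
    '{"id": 1, "owner": {"name": "Ann"}},'
    '{"id": 2, "owner": {"name": "Bob"}}]}'
)
meta, rows = split_response(payload)
```

Arrays nested inside records are left untouched here; a real tool would need a policy for those (explode into rows, or keep as text).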

I'm moving from direct SQL query to using API, and I literally have 15 Tools and steps required to create the same table data that the single SQL query tool gave me. In one case, I have to have an 18 tool Container that just handles getting a Bearer Token before I can pass that on to another container that actually does the curl query, etc and it's 15 tools needed to manage the output JSON into proper table-style data. (Yes, I already use the JSON tools, but the data requires massaging before that tool can work right).

As an add-on, we should also be able to make aliases for the API connection so we aren't having to put user/pass information into the workflow at any point. Interfaces are nice, but not really useful in automated workflow runs.

There's got to be a better way!

In order to make it easier to find workflow logs and be able to analyze them we would suggest some changes:

- The log name, instead of something like "alteryx_log_1634921961_1.log", should be the queue_id, for example: "6164518183170000540ac1c5.log"

This would make the job logs easier to find.

To facilitate reading the log we would suggest the following changes:

- Add the timestamp

- Add error level

For an example of the current and suggested logs, please see the attached document.

In the suggested format, each log line would be [TIMESTAMP] [ERRORLEVEL] [ELAPSEDTIME] [MESSAGE]
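A small sketch of what the suggested naming and line format could look like in practice. The ISO 8601 timestamp, the seconds-based elapsed time, and both function names are assumptions, since the attached document is not reproduced here.

```python
from datetime import datetime, timezone

def log_file_name(queue_id: str) -> str:
    """Name the log after the job's queue_id so it can be found directly."""
    return f"{queue_id}.log"

def format_log_line(
    timestamp: datetime, level: str, elapsed_seconds: float, message: str
) -> str:
    """Render one line as [TIMESTAMP] [ERRORLEVEL] [ELAPSEDTIME] [MESSAGE]."""
    return (
        f"[{timestamp.isoformat()}] [{level.upper()}] "
        f"[{elapsed_seconds:.3f}s] [{message}]"
    )

name = log_file_name("6164518183170000540ac1c5")
line = format_log_line(
    datetime(2021, 10, 22, 16, 59, 21, tzinfo=timezone.utc),
    "error",
    1.5,
    "Tool failed",
)
```

Fixed, bracketed fields like these are easy to split with a single regular expression when the logs are later analyzed in bulk.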

To make connections between Alteryx and Snowflake even more secure, we would like an easier way to connect to Snowflake with OAuth.

Connecting to Snowflake via OAuth is very similar to the connections Alteryx already makes with O365 applications. It requires:

- Tenant URL

- Client ID

- Client Secret

- Get the Authorization Code provided by the Snowflake authorization endpoint.

- Give access consent (a browser popup will appear)

- With the Authorization Code, the Client ID and the Client Secret, make a call to retrieve the Refresh Token and the TTL information for the tokens

- Get a new Access Token every time it expires

With this, an automated workflow using OAuth between Alteryx and Snowflake will be possible.
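The last step in the list, renewing the access token whenever it expires, is what makes unattended runs possible. Below is a minimal sketch of that renewal logic only. The `fetch_token` callback is hypothetical and stands in for the real call that exchanges the refresh token at the token endpoint; the 30-second safety margin is likewise an assumption.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional, Tuple

@dataclass
class AccessTokenCache:
    """Return a cached access token, renewing it shortly before it expires."""
    # Hypothetical callback returning (access_token, ttl_in_seconds).
    fetch_token: Callable[[], Tuple[str, float]]
    clock: Callable[[], float] = time.monotonic
    safety_margin: float = 30.0  # renew this many seconds before expiry
    _token: Optional[str] = None
    _expires_at: float = 0.0

    def get(self) -> str:
        now = self.clock()
        if self._token is None or now >= self._expires_at - self.safety_margin:
            self._token, ttl = self.fetch_token()
            self._expires_at = now + ttl
        return self._token

# Demonstration with a fake clock and a fake token endpoint.
fake_now = [0.0]
issued = []

def fake_fetch() -> Tuple[str, float]:
    issued.append(f"token-{len(issued) + 1}")
    return issued[-1], 600.0  # each token is valid for 10 minutes

cache = AccessTokenCache(fetch_token=fake_fetch, clock=lambda: fake_now[0])
first = cache.get()    # fetched on first use
fake_now[0] = 100.0
second = cache.get()   # still valid, served from the cache
fake_now[0] = 580.0
third = cache.get()    # inside the safety margin, so renewed
```

Injecting the clock keeps the logic testable without waiting for real tokens to expire.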

You can find a more detailed explanation in the attached document.

Hello,

It would be very helpful to have a search box for field names in the Summarize tool. I think it would reduce errors caused by mistakenly selecting fields with similar names, and it would save a couple of seconds when looking for a specific field, particularly in datasets with lots of fields.

Like this:
