Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by showing the dimension constraints next to each of the pre-trained models,

> by adding a real tool to split the training data correctly (so that there is an equivalent number of images for each label),

> and, at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me it requires RGB images).

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will help a greater number of people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


Hi there Alteryx team,

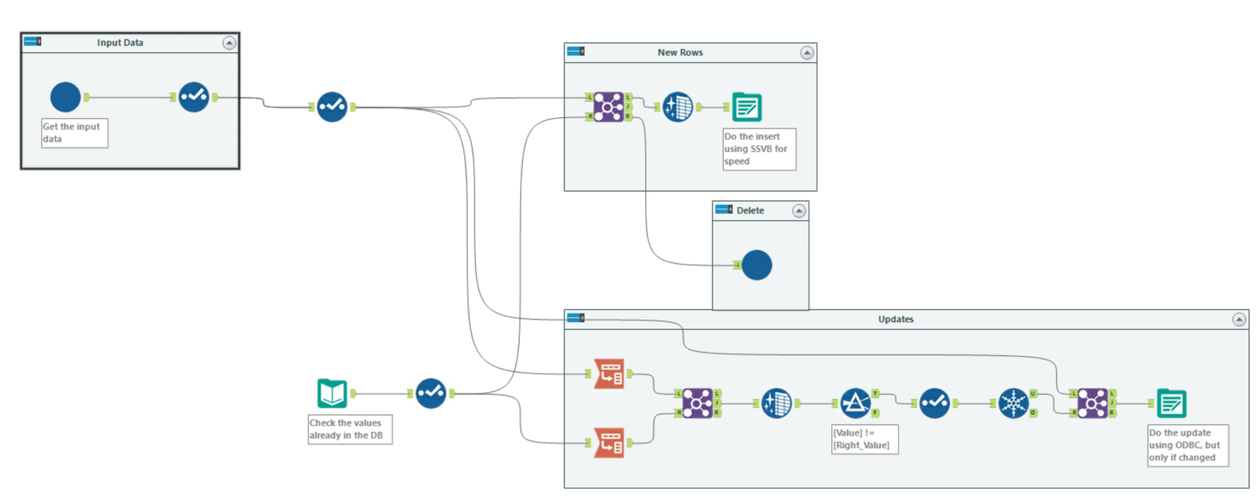

When we load data from raw files into a SQL table, we use this pattern in almost every loader, because the "Update, insert if new" functionality is so slow: it cannot take advantage of SSVB, it does not handle deletes, and it doesn't check for changes in the data, so your history tables get polluted with updates that are not real updates.

This pattern addresses these concerns as follows:

- You explicitly separate out the inserts by comparing against the current table, and use SSVB on the connection, thereby maximizing speed.

- Rows that no longer exist in the source are deleted, and the history table keeps the history.

- Finally, rows that exist in both source and target are checked for data changes and only updated if one or more fields have changed.
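A minimal Python sketch of the insert/delete/update split described above, assuming both the raw file and the current table fit in memory as dictionaries keyed by primary key, with each row a dict of field values. `plan_incremental_update` is a hypothetical helper, not an Alteryx API; in a workflow the same split roughly corresponds to a Join tool with the source on the left input (L output = inserts, R output = deletes, J output = candidates for update).

```python
from typing import Any, Dict, Hashable, List, Tuple

Row = Dict[str, Any]


def plan_incremental_update(
    source: Dict[Hashable, Row], target: Dict[Hashable, Row]
) -> Tuple[List[Row], List[Hashable], List[Row]]:
    """Split source rows into inserts, delete keys and genuine updates.

    Both inputs map primary key -> row. Keys must be mutually sortable
    so that the output order is deterministic.
    """
    new_keys = sorted(source.keys() - target.keys())     # only in the raw file
    gone_keys = sorted(target.keys() - source.keys())    # only in the table
    shared_keys = sorted(source.keys() & target.keys())  # in both

    inserts = [source[k] for k in new_keys]
    deletes = gone_keys
    # Only rows where at least one field differs count as updates,
    # so the history table is not polluted with no-op updates.
    updates = [source[k] for k in shared_keys if source[k] != target[k]]
    return inserts, deletes, updates
```

Comparing whole rows keeps the sketch short; a real loader would usually compare only the non-key fields, or a hash of them.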

Given how commonly we have to do this (on almost EVERY data pipe from files into our database), could we look at building an Incremental Update tool in Alteryx to make this easier? This is common functionality in other ETL platforms, and it would be a great addition to Alteryx.

The SharePoint file tools are certainly a step in the right direction, but it would be great to expand the file types that can be written to SharePoint from Alteryx.

The missing format that I think is most in demand is PDF. If we're using the Alteryx reporting suite to create PDF reports, it would be great to have an easy way to output them to SharePoint.

https://help.alteryx.com/20213/designer/sharepoint-files-output-tool

https://community.alteryx.com/t5/Public-Community-Gallery/Sharepoint-Files-Tool/ta-p/877903

Hello all,

Here is the issue: when you have a lot of tables, the Visual Query Builder can be very slow. On my Hive database, with hundreds of tables, I get the result after 15 minutes, and most of the time it crashes, which makes it clearly unusable.

I can change the default interface in the Visual Query Builder, but to change this setting, I first need to load all the tables in the VQB.

I would like to be able to set this in User Settings, BEFORE opening the Visual Query Builder.

Best regards,

Simon

After talking with Support, we found out that Oracle Financial Cloud ERP is not listed among the supported data sources, as stated at the URL below:

We would like this added, as our company will begin working heavily with Oracle Financial Cloud ERP to bring data from it into our SQL Servers. Is there a reason why this connection is not currently being investigated and set up?

Thanks,

Chris

Hi, I was using the Image Template tool and I noticed that the icons for import and export are switched.

The Problem: Sometimes we develop workflows that use a data connection the developer has access to, but the people running the workflow do not necessarily have.

For example,

- A workflow is pulling from one database to another, with some specific transformations.

- This workflow is used by many people, some of whom have Designer for other purposes.

- The workflow also writes to a log table, documenting different parts of the workflow for auditing purposes.

- The people running the workflow should not be able to write to this log table, other than by running this workflow.

- This log table outputs using a data connection so that it is not embedding passwords (a company-wide best practice)

- For someone to run this workflow with this set up, they would need access to this log table's data connection

- If the log table's data connection is shared with that group of users, any of them with Designer can write whatever they like to that table, since the data connection grants that access.

- This also makes the log table insecure for auditing purposes.

The Solution: We are looking for a way to use a data connection in a workflow without giving all of the users who run it full access to use that connection in their own workflows. In effect, this is a proposal for two tiers of permissions:

- Access to use a data connection in a workflow you are running

- Access to use a data connection in a workflow you are building
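The two tiers above can be modelled as a simple permission check. This is a hypothetical Python sketch for illustration only, not how Alteryx Designer or Server stores connection permissions: `Tier`, `can_use` and the grant table are invented names.

```python
from enum import Enum, auto
from typing import Dict, Tuple


class Tier(Enum):
    RUN = auto()    # may use the connection only inside workflows they run
    BUILD = auto()  # may also place the connection in workflows they author


# (user, connection name) -> granted tier; hypothetical example structure
Grants = Dict[Tuple[str, str], Tier]


def can_use(grants: Grants, user: str, connection: str, action: str) -> bool:
    """Return True if `user` may perform `action` ("run" or "build") with `connection`."""
    tier = grants.get((user, connection))
    if tier is None:
        return False                  # no grant at all
    if action == "run":
        return True                   # both tiers may run
    if action == "build":
        return tier is Tier.BUILD     # only builders may author with it
    raise ValueError(f"unknown action: {action!r}")
```

With this split, the log-table connection could be granted as `Tier.RUN` to everyone who runs the workflow and as `Tier.BUILD` only to its developers.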

To insert and update rows in our Oracle database, we mostly use the Output Data tool (especially for updates, since updating is not available for Oracle via the In-DB tools). The Output Data tool simply has more options to choose from than the In-DB toolset (Screenshot 1).

However, the one thing we would really like to see in the Output Data tool is the option to connect to a database via a connection file (as in, for example, the Connect In-DB or Data Stream In tools; see Screenshot 2). We can set an encrypted connection in the Output Data tool (and upload it to our Gallery), but doing this for 80 workflows with 10 output tools per workflow makes it quite an annoying task. We're not the administrators of our Gallery, and we want to be able to run the workflows locally as well. Therefore, in my opinion, it would be great to support connection files in the Output Data tool: this would let you manage your DB connections centrally without being dependent on a server administrator.

Screenshot 1

Screenshot 2

I am running into unexpected behavior when using the Date interface tool in an Analytic App after upgrading to 21.3. Previously, I could easily select dates in the past in the app interface by first selecting the year, then the month, the day, etc. After updating, I can only see the prior and upcoming three months, which makes it difficult if you need to navigate back, say, 10 years. A ticket was opened, but they could not find when or why this change was made. This issue was brought up to our Designer SME group, and they agreed that this isn't an improvement on the old design and is more cumbersome. They recommended posting to the Ideas page to bring back the old design.

Hello,

Today I worked with the Summarize tool and needed to concatenate 10 string columns of a dataset using a separator other than the default (",").

Currently, you can multi-select the columns you want to concatenate (that's good), but if you want all output columns to use a separator other than the default, you must set it for each column individually; in my case, that was 10 columns.

One field selected (Concatenate option is available)

More fields selected (Concatenate option is hidden)

My idea

Allow multi-selecting the fields to be concatenated and setting the separator for all of them at once. The benefit is that you can set a different separator for many fields in one step.

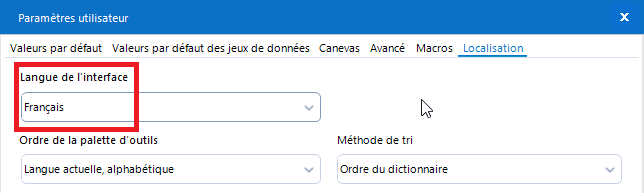

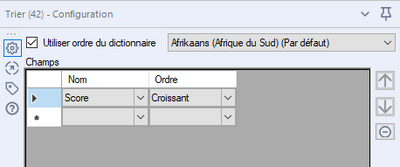
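The requested setting amounts to a group-by concatenation where one separator applies to every selected field. A minimal Python sketch; `concatenate_fields` and its parameters are hypothetical and only model the proposed configuration, not the Summarize tool's internals.

```python
from collections import defaultdict
from typing import Any, Dict, Hashable, List, Sequence


def concatenate_fields(
    rows: List[Dict[str, Any]],
    group_by: str,
    fields: Sequence[str],
    separator: str = ",",
) -> Dict[Hashable, Dict[str, str]]:
    """Concatenate every listed field per group, using ONE shared separator."""
    grouped: Dict[Hashable, Dict[str, List[str]]] = defaultdict(
        lambda: {f: [] for f in fields}
    )
    for row in rows:
        bucket = grouped[row[group_by]]
        for f in fields:
            bucket[f].append(str(row[f]))
    # The separator is configured once and applied to all selected fields.
    return {
        key: {f: separator.join(vals) for f, vals in cols.items()}
        for key, cols in grouped.items()
    }
```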

Hello all,

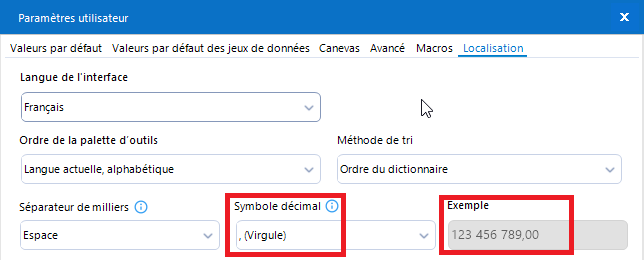

My localization settings:

Default dictionary in the Order tool: Afrikaans

Best regards,

Simon

Hello,

here are my localization parameters, with a comma as a decimal separator :

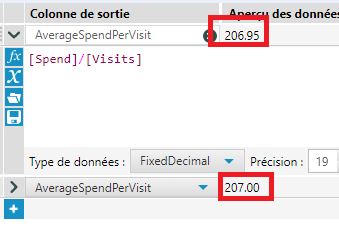

However, is the overview in the formula tool

(I took the formula tool example)

The overview formatting should obviously match the formatting and give 206,95 and 207,00, instead of 206.95 and 207.00

Best regards,

Simon

Alteryx should have a tool to trigger Qlik Sense / QlikView tasks on the server.

Today, the Tableau and Power BI tools let us write directly to data sets or data servers, but there is no equivalent for the Qlik platform.

It is possible to store QVX files, but it's impossible to trigger the Qlik job that imports that data directly.

To run a canvas using either AMP or E1, the user has to perform at least 5 operations that are not obvious:

a) Click on empty canvas space to get to the Workflow Configuration. If this configuration pane is not docked, you first have to enable it.

b) Set focus in this window.

c) Change to the Runtime tab.

d) Scroll down past all the confusing, technical settings that most end users are nervous to touch (like memory limits, temporary file location and code page settings) to click on the last option, for the AMP engine.

e) Then hit the Run button.

A better way!

Could we instead simplify this and just put a drop-down on the Run button, so that you can run with either the old engine or the new engine? Or even better, have 2 Run buttons: run with the old engine, and run with the super-fast cool new engine?

- This puts the choice where the user is looking at the moment they want to run (if I want to run a canvas, I'm thinking about the Run button, not a setting at the bottom of the third tab of a workflow configuration).

- It also makes it super easy for users to run with E1 and AMP without needing 10 clicks to compare, so they can very easily see the benefit of AMP.

- It makes it less scary, since you are not wading through configuration settings like memory or code pages.

- And finally, it exposes the new engine to people who may not even know it exists, because it's buried at the bottom of the third tab of a workflow configuration panel, under a bunch of scary-sounding config options.

cc: @TonyaS

I suggest Alteryx add a new interface tool, or enhance the existing Date interface tool, to allow selection of a time of day.

For the output of the interface tool, I believe we can simply concatenate the text (e.g. 111030AM) and transform it with other Alteryx tools.

The interface could be something like this: multiple drop-down boxes arranged in a single row.

Although there is a workaround of creating 4 drop-down boxes, the presentation in the Analytic App and the Gallery is not good (the boxes are split across multiple lines).
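Downstream, the concatenated text can be turned back into a time value. A minimal Python sketch, assuming four hypothetical drop-downs (hour 1 to 12, minute, second, AM/PM) and the HHMMSSAM layout of the 111030AM example; inside a workflow a DateTime or Formula tool would play this role. `dropdowns_to_text` and `text_to_time` are illustrative names, and `%p` parsing assumes an English/C locale.

```python
from datetime import datetime, time


def dropdowns_to_text(hour: str, minute: str, second: str, meridiem: str) -> str:
    """Concatenate drop-down selections into the compact form, e.g. '111030AM'."""
    return f"{int(hour):02d}{int(minute):02d}{int(second):02d}{meridiem.upper()}"


def text_to_time(text: str) -> time:
    """Parse 'HHMMSSAM' / 'HHMMSSPM' (12-hour clock) into a time of day.

    %p matching is locale-dependent; this assumes an English/C locale.
    """
    return datetime.strptime(text, "%I%M%S%p").time()
```

Zero-padding each part keeps the concatenated string fixed-width, which is what makes the compact format unambiguous to parse.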

As we begin to adopt the AMP engine, one of the key questions in every user's mind will be: "How do I know I'm going to get the same outcome?"

One of the easiest ways to build confidence in AMP, and also to get examples of differences back to Alteryx, is to allow users to run both engines in parallel and compare the results, with an easy process that lets users submit issues to the team.

For example:

- Instead of only the options Run in AMP or Run in E1, could we have a 3rd option called "Run in comparison mode"?

- This runs the process in both AMP and E1, checks for differences, and points them out to the user in a differences report that comes up after the run.

- Where there's a difference that seems like a bug (not just a sorting difference but something more material), the user then has a button they can use to "Submit to Alteryx for further investigation". This will make it much simpler for Alteryx to identify new issues, and much simpler for users to report them (meaning more people will be likely to do it, since it's easier).
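At its core, such a comparison mode needs a diff that ignores row order, so that pure sorting differences can be told apart from material ones. A minimal Python sketch, assuming each engine's output is available as a list of hashable row tuples; `compare_outputs` is hypothetical and not part of Alteryx.

```python
from collections import Counter
from typing import Any, Dict, Sequence


def compare_outputs(
    e1_rows: Sequence[Sequence[Any]], amp_rows: Sequence[Sequence[Any]]
) -> Dict[str, Any]:
    """Compare two engines' outputs as multisets of rows (row order ignored)."""
    e1 = Counter(tuple(r) for r in e1_rows)
    amp = Counter(tuple(r) for r in amp_rows)
    only_e1 = e1 - amp    # rows (with multiplicity) that AMP did not produce
    only_amp = amp - e1   # rows (with multiplicity) that E1 did not produce
    material = bool(only_e1 or only_amp)
    same_order = [tuple(r) for r in e1_rows] == [tuple(r) for r in amp_rows]
    return {
        "only_in_e1": list(only_e1.elements()),
        "only_in_amp": list(only_amp.elements()),
        # Same rows in a different order: a sorting difference, not a bug candidate.
        "sorting_only": not material and not same_order,
    }
```

Only a non-empty `only_in_e1` or `only_in_amp` would be worth the "Submit to Alteryx" button; `sorting_only` results could simply be reported as informational.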

The benefit of this is threefold: it will make users more comfortable with AMP (since they will see that in most cases there are no differences); it will train them on the differences between AMP and E1, making the transition easier; and where there are real differences, it will make getting this critical info to Alteryx much easier and more streamlined, since the "Submit to Alteryx" process can automatically capture everything Alteryx needs (your machine, version number, etc.) without taxing the user.

Experts -

While developing code in the Formula tool (and perhaps elsewhere), it would be helpful to have the "parenthesis highlighting" feature found in database software such as SSMS, DBeaver, SQL Developer, etc. That is, put the cursor next to a closing parenthesis and the corresponding opening parenthesis gets highlighted (or vice versa); conversely, if there is no corresponding parenthesis, nothing gets highlighted and you instantly know you've got a bug to fix (and where to fix it)!
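Parenthesis matching of this kind is usually a depth counter (equivalent to a stack for a single bracket type) scanned from the cursor position. A minimal Python sketch; `find_matching_paren` is a hypothetical helper, and for brevity it does not skip parentheses inside string literals or comments, which a real editor would have to do.

```python
from typing import Optional


def find_matching_paren(text: str, pos: int) -> Optional[int]:
    """Return the index of the parenthesis matching text[pos], or None.

    None means text[pos] is not a parenthesis or it has no partner,
    i.e. the "nothing gets highlighted" case.
    Simplification: parentheses in string literals/comments are not skipped.
    """
    if not 0 <= pos < len(text):
        return None
    if text[pos] == "(":
        step, same, other = 1, "(", ")"    # scan forward for the closer
    elif text[pos] == ")":
        step, same, other = -1, ")", "("   # scan backward for the opener
    else:
        return None
    depth = 0
    i = pos
    while 0 <= i < len(text):
        if text[i] == same:
            depth += 1        # another nested bracket of the same kind
        elif text[i] == other:
            depth -= 1
            if depth == 0:
                return i      # back at the starting nesting level
        i += step
    return None
```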

The AutoRecover feature should also back up macros. I was working on a macro when there was an issue with my code. I have AutoRecover set to save very frequently, so I went there to go back to a previous version. To my great surprise, my macro wasn't being saved behind the scenes at all. My workflow had its expected backups, but my macro did not. Please let AutoRecover back up files of any extension (e.g. .yxmc macros, not just .yxmd workflows).

Thanks!

Hi all,

I think it would be great if Alteryx could send calendar invites in Outlook (and perhaps other calendaring systems) the same way it sends emails.

Currently, the only way to accomplish this is to send the invite as an attached ICS file on a regular email.
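For reference, the current workaround amounts to generating an iCalendar (RFC 5545) file. A minimal Python sketch of a one-event file for an all-day entry such as a day off; the function names, the `PRODID` value and the `example.invalid` UID domain are placeholders, and it omits the RFC's line folding for lines over 75 octets. It only produces the attachment; it does not deliver the invite silently, which is what this idea asks for.

```python
import uuid
from datetime import date, datetime, timedelta, timezone
from typing import Optional


def escape_text(value: str) -> str:
    """Escape a TEXT value per RFC 5545 (backslash, semicolon, comma, newline)."""
    return (
        value.replace("\\", "\\\\")
        .replace(";", "\\;")
        .replace(",", "\\,")
        .replace("\n", "\\n")
    )


def all_day_event_ics(
    summary: str,
    start: date,
    days: int = 1,
    uid: Optional[str] = None,
    stamp: Optional[datetime] = None,
) -> str:
    """Build a one-event VCALENDAR for an all-day entry such as a day off."""
    uid = uid or f"{uuid.uuid4()}@example.invalid"  # placeholder domain
    stamp = (stamp or datetime.now(timezone.utc)).astimezone(timezone.utc)
    end = start + timedelta(days=days)  # DTEND is exclusive for all-day events
    lines = [
        "BEGIN:VCALENDAR",
        "VERSION:2.0",
        "PRODID:-//Example//Time-off feed//EN",  # placeholder product id
        "BEGIN:VEVENT",
        f"UID:{uid}",
        f"DTSTAMP:{stamp.strftime('%Y%m%dT%H%M%SZ')}",
        f"DTSTART;VALUE=DATE:{start.strftime('%Y%m%d')}",
        f"DTEND;VALUE=DATE:{end.strftime('%Y%m%d')}",
        f"SUMMARY:{escape_text(summary)}",
        "END:VEVENT",
        "END:VCALENDAR",
    ]
    return "\r\n".join(lines) + "\r\n"  # RFC 5545 uses CRLF line breaks
```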

In my use case, rather than a shared mailbox/calendar being populated automatically, someone has to go into the inbox, open the email, and then click the ICS file to interact with it.

There are ways in Outlook to send an item like this and have it appear automatically without the end user ever seeing the actual invite (our company adds holidays and other important dates this way).

So basically, I want the same functionality available in Alteryx. I have posted about it before in the discussion threads, but basically, right now we enter our time in the HR system and then have to manually enter the same info in our personal Outlook calendar and in any team calendar, whether it's a SharePoint calendar, a group calendar in Outlook, etc.

If Alteryx could send these types of invites, employees could enter the info in our HR system, and Alteryx could pick up the data feed and automatically populate the other calendars (whichever type they may be).

Hopefully this gets some likes.

There are many situations where you have to build an iterative macro in which not only the iterating data set needs to change on every iteration, but also a second data set.

Think of this like a loop where two different variables are updated on every iteration, not just the control variable of a For loop.

The way users work around this is to use a temporary yxdb file: instead of a macro input, you read from the yxdb and then write back to the same yxdb. This lets you pretend that you can update 2 different data sets on every cycle of the loop. There are 4 downsides to this:

a) User complexity: this breaks the conceptual simplicity of macro inputs, since users now have to understand that in situation X they use macro inputs, and in situation Y they have to use some other type of tool.

b) Speed penalty: writing to disk is between 1,000x and 1,000,000x slower than working with data in memory (especially if it's in cache), so forcing this through a yxdb file incurs a speed penalty that just isn't needed.

c) Blocking penalty: because you can't write to a file that you're still reading from, you need to pepper the macro with Block Until Done tools, and you need to initialize it with a first write to the yxdb file outside the macro, which further hurts speed. Given the nuanced behaviour of Block Until Done, this also introduces user complexity issues.

d) Self-containment: because you have to initialize these files outside the macro, the macro is no longer self-contained and portable (which breaks the principle of information hiding, a key pillar of good modular, decoupled software design).

The other way users work around this is to serialize their entire second record set into a field, which then gets tacked onto the iterating data set of the iterative macro. This is HIGHLY wasteful, because you then have to build a serialize & deserialize process for this second record set. It fixes the speed and blocking penalties above, but introduces computational overhead that generates no value, and makes this even more complex for users: a further blocker to using macros.

Recommendation:

We could make this simpler by allowing users to create multiple pairs of macro inputs / macro outputs, so that 2, 3 or n different data sets can be updated on every iteration.
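In programming terms, the recommendation is a loop that carries several named data sets from one iteration to the next, entirely in memory. A minimal Python sketch of those semantics; `run_multi_iterative`, the dict-of-data-sets representation and the iteration cap are hypothetical illustrations, not Alteryx's macro engine.

```python
from typing import Callable, Dict, List

Dataset = List[dict]
State = Dict[str, Dataset]  # one entry per macro input/output pair


def run_multi_iterative(
    state: State,
    body: Callable[[State], State],
    done: Callable[[State], bool],
    max_iterations: int = 100,
) -> State:
    """Feed every named data set back into the next iteration, in memory."""
    for _ in range(max_iterations):
        if done(state):
            return state
        new_state = body(state)
        # Each "macro output" must have a matching "macro input" for the next pass.
        if new_state.keys() != state.keys():
            raise ValueError("body must return exactly the data sets it received")
        state = new_state
    raise RuntimeError(f"no convergence after {max_iterations} iterations")


def move_one(state: State) -> State:
    """Example body: both data sets change on every iteration."""
    first, rest = state["pending"][0], state["pending"][1:]
    return {"pending": rest, "processed": state["processed"] + [first]}
```

No temporary file, Block Until Done step or serialization is involved: the second data set is simply part of the loop state.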

Below is a screenshot demonstrating this, from an Advent of Code challenge. The details of the problem are not important; the issue at hand is that there are 2 record sets that both need to be updated on every iteration.

cc: @NicoleJohnson @Samanthaj_hughes @SteveA

With dynamic tools, you often get situations where zero rows are returned, which means that the output of something like a Transpose, a JSON Parse or a RegEx tool may not have the expected field names.

However, any downstream Filter tools (or other similar tools) fail even though there are no rows (see the screenshot below).

The only way to get around this is to insert fake rows using a Union or to use the CReW Ensure Fields macro. However, this is all waste: there are no rows, so there's no point in even evaluating the predicate in the Filter tool. Rather than making users work around this, could the engine be changed so that a tool skips evaluation when there are zero rows? This would significantly reduce the number of these kinds of workarounds needed with any dynamic tools (including any API calls).
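The proposed engine behavior can be sketched as a filter that only validates its field reference when there is at least one row to evaluate. A minimal Python sketch, assuming the stream's schema is passed as a list of field names alongside the rows; `filter_tool` is hypothetical and does not reflect how the Alteryx engine is implemented.

```python
from typing import Any, Callable, Dict, List, Sequence, Tuple

Row = Dict[str, Any]


def filter_tool(
    fields: Sequence[str],
    rows: List[Row],
    field: str,
    predicate: Callable[[Any], bool],
) -> Tuple[List[Row], List[Row]]:
    """Split rows into (true, false) outputs on predicate(row[field])."""
    if not rows:
        # Proposed behavior: with zero rows there is nothing to evaluate,
        # so a field missing from the schema is not treated as an error.
        return [], []
    if field not in fields:
        raise KeyError(f"field {field!r} not found in {list(fields)}")
    true_out = [r for r in rows if predicate(r[field])]
    false_out = [r for r in rows if not predicate(r[field])]
    return true_out, false_out
```

The error is still raised when rows do exist and the field is missing, so genuine schema mistakes are not hidden.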

Thank you,

Sean

- New Idea 258

- Accepting Votes 1,818

- Comments Requested 24

- Under Review 169

- Accepted 56

- Ongoing 5

- Coming Soon 11

- Implemented 481

- Not Planned 118

- Revisit 64

- Partner Dependent 4

- Inactive 674

- Admin Settings 20

- AMP Engine 27

- API 11

- API SDK 218

- Category Address 13

- Category Apps 112

- Category Behavior Analysis 5

- Category Calgary 21

- Category Connectors 245

- Category Data Investigation 76

- Category Demographic Analysis 2

- Category Developer 208

- Category Documentation 80

- Category In Database 214

- Category Input Output 636

- Category Interface 238

- Category Join 102

- Category Machine Learning 3

- Category Macros 153

- Category Parse 76

- Category Predictive 77

- Category Preparation 392

- Category Prescriptive 1

- Category Reporting 198

- Category Spatial 81

- Category Text Mining 23

- Category Time Series 22

- Category Transform 87

- Configuration 1

- Data Connectors 958

- Data Products 3

- Desktop Experience 1,525

- Documentation 64

- Engine 125

- Enhancement 316

- Feature Request 212

- General 307

- General Suggestion 4

- Insights Dataset 2

- Installation 24

- Licenses and Activation 15

- Licensing 12

- Localization 8

- Location Intelligence 80

- Machine Learning 13

- New Request 188

- New Tool 32

- Permissions 1

- Runtime 28

- Scheduler 24

- SDK 10

- Setup & Configuration 58

- Tool Improvement 210

- User Experience Design 165

- User Settings 78

- UX 223

- XML 7


- rpeswar98 on: Alternative approach to Chained Apps : Ability to ...

- caltang on: Identify Indent Level

- simonaubert_bd on: OpenAI connector : ability to choose a non-default...

- maryjdavies on: Lock & Unlock Workflows with Password

- nzp1 on: Easy button to convert Containers to Control Conta...

- Qiu on: Features to know the version of Alteryx Designer D...

- DataNath on: Update Render to allow Excel Sheet Naming

- aatalai on: Applying a PCA model to new data

- charlieepes on: Multi-Fill Tool

- seven on: Turn Off / Ignore Warnings from Parse Tools
