Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> at least, allow the tool to use black & white images (I wanted to test it on the MNIST, but the tool tells me that it necessarily needs RGB images) ?
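To illustrate the second point, here is a minimal sketch of what "an equivalent number of images for each of the labels" could look like. It is not how the Image Recognition tool works internally; the function name `balanced_sample`, the file names, and the choice to downsample to the smallest label are all assumptions made for the example.

```python
import random
from collections import defaultdict

def balanced_sample(labeled_paths, seed=0):
    """Downsample every label to the size of the smallest label."""
    by_label = defaultdict(list)
    for path, label in labeled_paths:
        by_label[label].append(path)
    # The rarest label sets how many images each label may keep.
    per_label = min(len(paths) for paths in by_label.values())
    rng = random.Random(seed)
    balanced = []
    for label, paths in sorted(by_label.items()):
        for path in rng.sample(paths, per_label):
            balanced.append((path, label))
    return balanced

# Hypothetical file names: 5 images of "cat" but only 2 of "dog".
images = [(f"cat_{i}.png", "cat") for i in range(5)]
images += [(f"dog_{i}.png", "dog") for i in range(2)]
balanced = balanced_sample(images)
```

Downsampling throws away images from the larger classes; oversampling the smaller classes would be another way to reach the same balance.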

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will help more people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


Hey gang, just another QoL suggestion from me!

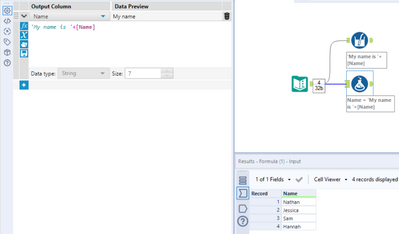

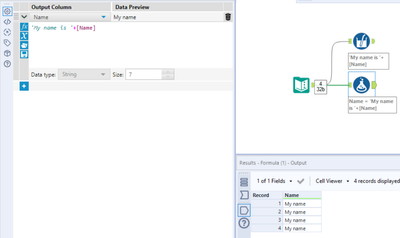

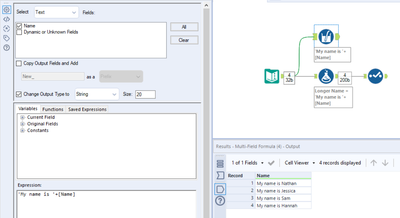

Currently, when applying changes to an existing field that will take the outcome beyond the current field size, we have to use an additional Select tool to get around truncation:

The usual route here is to either a) use a Select tool beforehand to increase the field size:

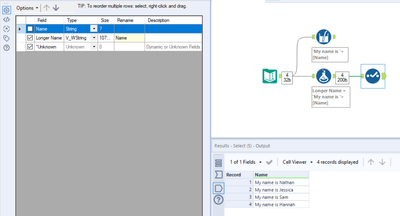

Or b) create a new field and then remove the 'old' one in a Select tool afterwards, also renaming the replacement here:

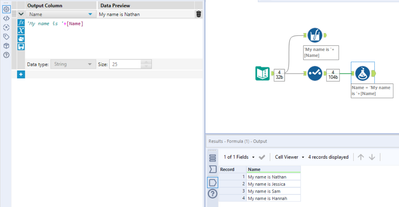

Given that we could just do this in one using the Multi-Field Formula tool:

My request is pretty simple here - can the 'Change Output Type to' configuration also be added to the standard Formula tool? The ability to also update the name of the output would be brilliant as well if possible. Cheers!

Auto Field tools help optimally size and assign data types to your data for better performance but this conversion process can be memory intensive with large datasets. What if you could right-click an Auto Field tool to convert it to a standard select tool with the new data types and sizes much like the existing ability to right-click convert inputs into macro inputs or browse tools into outputs? This would eliminate the need to manually transfer the results of the Auto Field tool into a select tool for production workflows!

The Dynamic Input will not accept inputs with different record layouts. The "brute force" solution is to use a standard Input tool for each file separately and then combine them with a Union Tool. The Union Tool accepts files with different record layouts and issues warnings. Please enhance the Dynamic Input tool (or, perhaps, add a new tool) that combines the Dynamic Input functionality with a more laid-back, inclusive Union tool approach. Thank you.
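As a rough illustration of the "inclusive" behaviour being requested, the sketch below stacks files with different record layouts by matching on field name and filling the gaps with nulls. It is plain Python rather than anything from Alteryx; `union_by_name` and the two sample files are hypothetical.

```python
import csv
import io

def union_by_name(sources):
    """Stack rows from sources with different layouts, matching on field name."""
    fieldnames = []
    rows = []
    for text in sources:
        reader = csv.DictReader(io.StringIO(text))
        for name in reader.fieldnames or []:
            if name not in fieldnames:
                fieldnames.append(name)
        rows.extend(reader)
    # Fields missing from a source are filled with None.
    combined = [{name: row.get(name) for name in fieldnames} for row in rows]
    return fieldnames, combined

file_a = "id,name\n1,Ann\n"    # hypothetical file with an id/name layout
file_b = "id,city\n2,Paris\n"  # hypothetical file with an id/city layout
fields, combined = union_by_name([file_a, file_b])
```

A real implementation would probably also want to emit a warning for each mismatched field, as the Union tool does.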

The Problem: Sometimes we develop workflows that use a data connection the developer has access to, but the people running the workflow do not necessarily have.

For example,

- A workflow is pulling from one database to another, with some specific transformations.

- This workflow is used by many people, some have Designer for other purposes.

- The workflow also writes to a log table, documenting different parts of the workflow for auditing purposes.

- This log table is not something that the people running the workflow should have access to write to other than when running this workflow

- This log table outputs using a data connection so that it is not embedding passwords (a company-wide best practice)

- For someone to run this workflow with this set up, they would need access to this log table's data connection

- If the log table data connection is shared with that group of users, any of the users with Designer can now write whatever they like to that table, since the data connection gives them write access to it.

- This also makes the log table insecure for auditing purposes.

The Solution: We are looking for a way to have a data connection in a workflow without giving all of the running users full access to use that connection in their workflows. Almost a proposal of two tiers of permissions:

- Access to use a data connection in a workflow you are running

- Access to use a data connection in a workflow you are building

Tableau v2018.3 introduced multiple table extracts. These are particularly useful for fact table to fact table joins and fact table to entitlement table joins for row-level security where the number of rows created by the join and/or size of join results would be prohibitively large. Also they are useful for fact table to spatial joins where we might have multiple spatial objects (for example custom province/district/health facility catchment) for each row of fact table data.

So in Alteryx I'd like to be able to specify 2+ tables & their join keys and then write out a .hyper multiple tables extract.

Jonathan

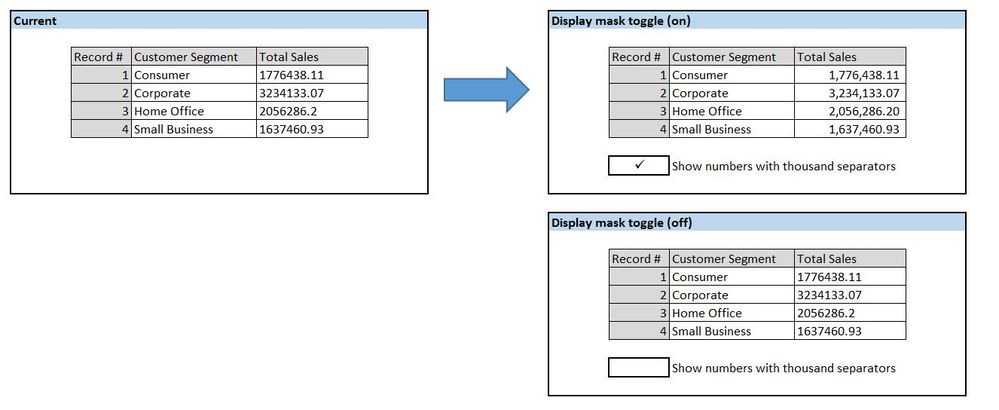

Hello all,

When looking at the Results window, I often find it a headache to read the numeric results because of the lack of commas. I understand that incorporating commas into the data itself could make for some weird errors; however, would it be possible to toggle an option that displays all numeric fields with proper commas and right-aligned in the Results window? I am referring to using a display mask to make numeric fields look like they have the thousands separator while retaining numeric functionality (as opposed to converting the fields to strings).

What do you think?
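To make the "display mask" idea concrete, here is a small sketch in Python: the values stay numeric, and only the string shown to the user carries the thousands separator and right alignment. The helper name `display_mask`, the column width of 12, and the two-decimal format for non-integers are assumptions for the example.

```python
def display_mask(value):
    """Return a display string with thousands separators; the value itself is unchanged."""
    if isinstance(value, int):
        return f"{value:,}"
    return f"{value:,.2f}"

values = [1234567, 1234.5, 42]
# Right-align each masked value in a 12-character column, as a grid might.
shown = [display_mask(v).rjust(12) for v in values]
# The underlying data is still numeric, so arithmetic keeps working.
total = sum(values)
```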

I recently came to know that Alteryx doesn't support Denodo Data sources. We at our company are using Denodo as a data virtualization tool and also Alteryx is used for data blending. The request is for Alteryx to start supporting Denodo as a data source so that our company can reach out to Alteryx for any support related issues with Denodo.

Hello all,

From https://www.sqltutorial.org/sql-triggers/

Introduction to SQL Triggers

A trigger is a piece of code executed automatically in response to a specific event occurring on a table in the database.

A trigger is always associated with a particular table. If the table is deleted, all the associated triggers are also deleted automatically.

A trigger is invoked either before or after the following event:

- INSERT – when a new row is inserted

- UPDATE – when an existing row is updated

- DELETE – when a row is deleted.

When you issue an INSERT, UPDATE, or DELETE statement, the relational database management system (RDBMS) fires the corresponding trigger.

In some RDBMS, a trigger is also invoked as a result of executing a statement that calls the INSERT, UPDATE, or DELETE statement. For example, MySQL's LOAD DATA INFILE statement, which reads rows from a text file and inserts them into a table at very high speed, invokes the BEFORE INSERT and AFTER INSERT triggers.

On the other hand, a statement may delete rows in a table but does not invoke the associated triggers. For example, TRUNCATE TABLE statement removes all rows in the table but does not invoke the BEFORE DELETE and AFTER DELETE triggers.
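For readers who have not used triggers, here is a minimal sketch using SQLite through Python's built-in `sqlite3` module. The table and trigger names are made up for the example, and SQLite's trigger syntax differs in places from other databases, so treat it as an illustration of the concept rather than something the In-DB tools generate.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL);
    CREATE TABLE audit_log (order_id INTEGER, action TEXT);

    -- Fires once per inserted row, after the INSERT succeeds.
    CREATE TRIGGER orders_after_insert
    AFTER INSERT ON orders
    BEGIN
        INSERT INTO audit_log (order_id, action) VALUES (NEW.id, 'INSERT');
    END;
""")

# The application only inserts into orders; the trigger writes the audit row.
conn.execute("INSERT INTO orders (amount) VALUES (19.99)")
log_rows = conn.execute("SELECT order_id, action FROM audit_log").fetchall()
```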

So basically, I would like to be able to create triggers from the In-DB tools in Alteryx.

Best regards,

Simon

I know this has been posted before, but the posts are fairly old, and I have just confirmed with Support that it is still an issue. Seems to be a pretty basic request, so I'm putting it out there again under this new heading.

The issue is that if you have data in a field, and you have that data separated by a new line (\n), it will show up fine in a browse tool, or pretty much any other output (database file, Office Document file, etc.). But if you try to use the Table Tool under Reporting, it ignores the line break and strings the data together.

Example:

The field data looks like this in a browse or most other outputs:

Hello, my name is

Michael Barone

and I love

Alteryx

But when I try to pull this field into a Table Tool, it shows up like this:

Hello, my name is Michael Barone and I love Alteryx

Putting this out here again in hopes that it gets lots and lots of stars so it gets put on the road map!!

From Wikipedia

Druid is a column-oriented, open-source, distributed data store written in Java. Druid is designed to quickly ingest massive quantities of event data, and provide low-latency queries on top of the data.[1] The name Druid comes from the shapeshifting Druid class in many role-playing games, to reflect the fact that the architecture of the system can shift to solve different types of data problems. Druid is commonly used in business intelligence/OLAP applications to analyze high volumes of real-time and historical data.[2] Druid is used in production by technology companies such as Alibaba,[2] Airbnb,[2] Cisco,[3] eBay,[4] Netflix,[5] PayPal,[2] Yahoo,[6] and the Wikimedia Foundation.[7]

More and more companies are going from Hive to Druid for Dataviz needs, maybe it's time to look for Druid Integration with Alteryx?

I reported this to the support team but was told it was by design and to post here.

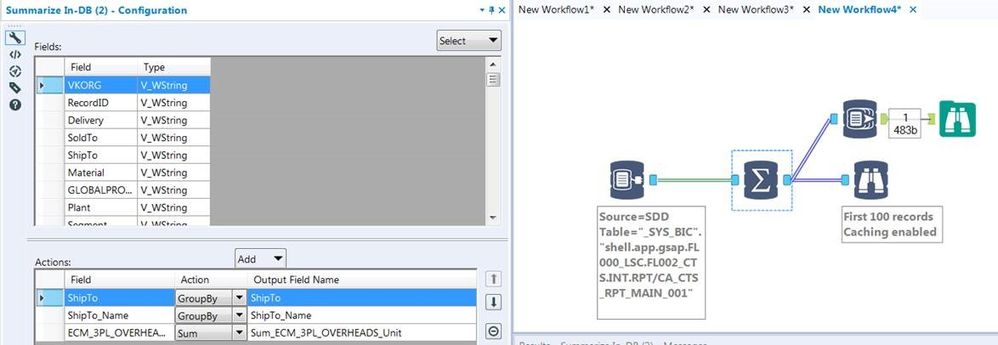

In-DB Inefficient SQL

I would like to report that the In-DB tools are generating horribly inefficient SQL code for simple operations. It seems no matter what tools you use every statement is starting with a nested 'Select * From'.

Example Simple workflow:

This is a simple Select and Group by but the SQL Generated is:

SELECT "ShipTo", "ShipTo_Name", SUM("ECM_3PL_OVERHEADS_Unit") AS "Sum_ECM_3PL_OVERHEADS_Unit"

FROM (SELECT * FROM "_SYS_BIC"."shell.app.gsap.FL000_LSC.FL002_CTS.INT.RPT/CA_CTS_RPT_MAIN_001") AS "a"

GROUP BY "ShipTo", "ShipTo_Name"

This is taking a very long time to execute:

Statement 'SELECT "ShipTo", "ShipTo_Name", SUM("ECM_3PL_OVERHEADS_Unit") AS "Sum_ECM_3PL_OVERHEADS_Unit" FROM ...'

successfully executed in 15.752 seconds (server processing time: 15.699 seconds)

Whereas if I take the same query and remove the nested Select *:

SELECT "ShipTo", "ShipTo_Name", SUM("ECM_3PL_OVERHEADS_Unit") AS "Sum_ECM_3PL_OVERHEADS_Unit"

FROM "_SYS_BIC"."shell.app.gsap.FL000_LSC.FL002_CTS.INT.RPT/CA_CTS_RPT_MAIN_001" AS "a"

GROUP BY "ShipTo", "ShipTo_Name"

It is very quick:

Statement 'SELECT "ShipTo", "ShipTo_Name", SUM("ECM_3PL_OVERHEADS_Unit") AS "Sum_ECM_3PL_OVERHEADS_Unit" FROM ...'

successfully executed in 1.211 seconds (server processing time: 1.157 seconds)

So Alteryx is generating queries up to 13x slower than they should be, thereby defeating the point of using In-DB. As you can imagine, in a workflow where we have multiple Connect In-DB tools this adds up to a really substantial amount of time. The example above is from an SAP HANA DB view with 1.9m rows and ~90 columns, but we have much bigger tables/views than this.
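To show that the two query shapes are logically equivalent, here is a small sketch against an in-memory SQLite table. The table `shipments` and its three rows are invented, and SQLite's planner will not reproduce the HANA timings; the point is only that the nested and flat forms return the same rows, and that the quoted timings work out to roughly a 13x difference.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute('CREATE TABLE shipments ("ShipTo" TEXT, "ShipTo_Name" TEXT, "Unit" REAL)')
conn.executemany(
    "INSERT INTO shipments VALUES (?, ?, ?)",
    [("A1", "Alpha", 2.0), ("A1", "Alpha", 3.0), ("B2", "Beta", 4.0)],
)

# Shape generated by the In-DB tools: a nested SELECT * as the source.
nested = '''SELECT "ShipTo", "ShipTo_Name", SUM("Unit") AS "Sum_Unit"
FROM (SELECT * FROM shipments) AS "a"
GROUP BY "ShipTo", "ShipTo_Name" ORDER BY "ShipTo"'''

# Hand-written shape: the table is referenced directly.
flat = '''SELECT "ShipTo", "ShipTo_Name", SUM("Unit") AS "Sum_Unit"
FROM shipments AS "a"
GROUP BY "ShipTo", "ShipTo_Name" ORDER BY "ShipTo"'''

nested_rows = conn.execute(nested).fetchall()
flat_rows = conn.execute(flat).fetchall()

# Timings quoted in the post above, in seconds.
slowdown = 15.752 / 1.211
```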

If you look, you will see it's the same behaviour for all In-DB tools: each tool creates another nested Select with its particular operator.

MY SUGGESTION:

So my suggestion is that Alteryx should combine the SQL of the first few tools and avoid using SELECT * completely unless no Select tools have been used. So it should combine:

- Connect In-DB + Select

- Connect In-DB + Filter

- Connect In-DB + Summarise

Preferably it should combine/flatten everything up until the first join or union. But Select + Filter are a must!
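A rough sketch of the flattening idea, assuming the settings of the Connect In-DB, Select, Filter, and Summarise tools have already been collected into plain Python values. The function `flat_query` and its parameters are hypothetical, and the string building is for illustration only (it does no escaping or validation).

```python
def flat_query(table, columns=None, where=None, group_by=None):
    """Build one un-nested SELECT from the settings of the first few tools."""
    # Fall back to * only when no Select tool narrowed the columns.
    sql = f"SELECT {', '.join(columns) if columns else '*'} FROM {table}"
    if where:
        sql += f" WHERE {where}"
    if group_by:
        sql += f" GROUP BY {', '.join(group_by)}"
    return sql

query = flat_query(
    '"sales"',  # hypothetical table name
    columns=['"ShipTo"', 'SUM("Unit") AS "Sum_Unit"'],
    where='"Unit" > 0',
    group_by=['"ShipTo"'],
)
```

Nesting would then only start at the first join or union, where a subquery is actually needed.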

Note: it seems some databases can cope with un-nesting these big nested queries in the query plan for some tables, but normally not for views. Others cannot cope at all, so the In-DB tools cannot even be used to browse 100 records (due to the SELECT *).

I am running into unexpected functionality when using the Date interface tool in an Analytic App after upgrading to 21.3. Previously I could easily select dates in the past in the app interface by first selecting the year, then the month, date, etc. After updating, I can only see the prior and upcoming three months, which makes it difficult if you need to navigate back, say, 10 years. A ticket was put in, but they could not find when or why this change was made. This issue was brought up to our Designer SME group, and they agreed that this isn't an improvement on the old design and is more cumbersome. They recommended posting to the Ideas page to bring back the old design.

At the moment, if a part of your Python code takes more than 30s to run, Jupyter times out and Alteryx cancels the workflow. This makes the Python Tool unusable for anything intensive. The timeout should be removed by default or be configurable per workflow.

I've made this idea as none of the solutions in these threads feel satisfactory:

Please add Parquet data format (https://parquet.apache.org/) as read-write option for Alteryx.

Apache Parquet is a columnar storage format available to any project in the Hadoop ecosystem, regardless of the choice of data processing framework, data model or programming language.

Thank you.

Regards,

Cristian.

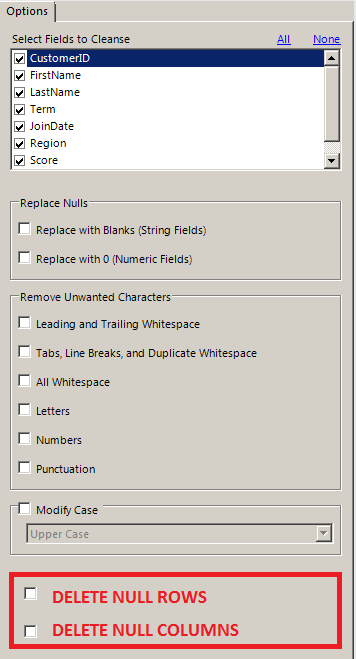

There are a few workarounds for this task, but it would be really easy if the Data Cleansing tool could delete null rows and null columns. After all, it's just a macro, which can be modified and re-packaged into Alteryx Designer.

Currently, deleting a null row requires validating multiple columns for common null attributes. Similarly, to delete a null column, every column has to be compared at the row level and flagged for removal. Both of these approaches are clumsy.

Wouldn't it be so simple if Data Cleansing Tool gave such check boxes !!!
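For clarity, this is the behaviour those two check boxes would stand for, sketched in plain Python on a list of records. It is not the Data Cleansing macro's logic; the function name is made up, and treating an empty string as null is an assumption for the example.

```python
def drop_null_rows_and_columns(records):
    """Remove rows where every field is null, then columns where every value is null."""
    def is_null(value):
        # Assumption: empty strings count as null, alongside None.
        return value is None or value == ""

    rows = [r for r in records if not all(is_null(v) for v in r.values())]
    if not rows:
        return []
    # Assumes every record has the same field names as the first one.
    keep = [name for name in rows[0] if not all(is_null(r[name]) for r in rows)]
    return [{name: r[name] for name in keep} for r in rows]

data = [
    {"id": 1, "name": "Ann", "notes": None},
    {"id": None, "name": None, "notes": None},  # fully null row
    {"id": 2, "name": "", "notes": None},
]
cleaned = drop_null_rows_and_columns(data)
```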

Hi Alteryx Devs -

It would be *really tight* to have a drop-down interface tool that supports auto-completion based on an ODBC connection to a table/column or an AJAX call. I recently had a situation where we needed to give users the ability to select an address and then run a workflow. But the truth is, our address data is terrible, and what I really needed was to let the users start typing the address and then give them a list of choices to pick from. They pick the correct (but usually wrongly formatted) address, and then I send that value into the workflow.

I could not find a decent way to give a Gallery user a reliable way to pick an address from our list, so I eventually wound up having to write an AJAX piece to handle the auto-completion, capture the user input, then post to a service that would in turn interact with Gallery through the API, get the response, and send it back to the calling page and on to the user. That is a significant amount of work to put into something as exceedingly common on the web as auto-completion.
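The matching step itself is small. Here is a sketch of it in Python, assuming the list of choices has already been loaded from the table; the function `suggest`, the sample addresses, and the case-insensitive "contains" rule are all assumptions, not part of any Alteryx interface tool.

```python
def suggest(typed, choices, limit=5):
    """Return up to `limit` choices containing the typed text, ignoring case."""
    needle = typed.strip().lower()
    if not needle:
        return []
    return [c for c in choices if needle in c.lower()][:limit]

addresses = ["12 Main St", "120 MAIN STREET", "7 Oak Ave"]  # hypothetical data
matches = suggest("main", addresses)
```

The hard part described above is the plumbing between the browser and Gallery, which is exactly what a built-in tool would take care of.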

This would make a lot of gallery operations flow so much more naturally.

Thanks for listening!

brian

When training people on the use of action tools, something that I always have to hit on is that when you are telling the tool which piece of the XML that you are adjusting, it's sort of difficult to tell what you have selected, and super easy to accidentally select something else.

Example:

When you initially select the action to take it's this nice Blue Color. However, it still doesn't feel exactly like you have actually selected anything or told the Action Tool what to do, since it's so easy to just select any other one of these actions.

A slightly different problem is that if you are selecting an action that has been previously configured, it is just this light grey color. So it can be easy to accidentally change your settings because you may not realize it's actually set up.

Here is a recent community post that sort of outlines a few of these problems.

Hi All,

I was very happy to see the Bulk Loader introduced for Snowflake in the last release. This bulk loader is specifically available for Snowflake environments that are hosted on AWS, but does not provide functionality for environments using Azure. As Snowflake continues to build momentum, I imagine this will be a common request. Is there something in the pipeline to add this functionality?

For an interim solution, we will be working toward developing some generic scripts/snowsql to mimic that bulk load, but ultimately we'd love to have this as part of the tool.

Best,

devKev

Hello,

I had a business case requiring a cost effective and quick storage solution for real time online sourced survey data from customers. A MongoDB instance would fit the need, so I quickly spun up a cluster on Mongo Atlas. Atlas was launched by MongoDB in 2016 as a database-as-a-service deployed on AWS. All instances for Atlas require TLS/SSL to connect. Currently, the Alteryx MongoDB connector does not support TLS/SSL connections and doesn't work against Atlas. So, I was left with a breakdown in my plan that would require manual intervention before ingesting data to Alteryx (not ideal).

Please consider expanding this functionality on all connectors. I am building Alteryx out in my agency as a data platform that handles sensitive customer information (name, address, email, etc.). Most tools I use to connect to secure servers today support this type of connection, and it should be a priority for Alteryx to resolve.

Thanks,

Mike Schock

It would be extremely useful to quickly find which of my many workflows feed other workflows or reports.

A quick and easy way to do this would be to export the dependencies of a list of workflows in a spreadsheet format. That way users could create their own mapping by linking outputs of one workflow, to inputs of another.

Looking at the simple example below, the Customers workflow would feed the Market workflow.

| Workflow | Dependency | Type |
| --- | --- | --- |
| Customers | SQL Table 1 | Input |
| Customers | SQL Table 2 | Input |
| Customers | Excel File 1 | Input |
| Customers | Excel File 2 | Input |
| Customers | Excel File 3 | Output |
| Market | Excel File 3 | Input |
| Market | SQL Table 3 | Output |
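As a sketch of the mapping users could build from such an export, the snippet below links each workflow's outputs to the other workflows' inputs. The rows are the ones from the table above; the function name `find_feeds` is made up for the example.

```python
dependencies = [
    ("Customers", "SQL Table 1", "Input"),
    ("Customers", "SQL Table 2", "Input"),
    ("Customers", "Excel File 1", "Input"),
    ("Customers", "Excel File 2", "Input"),
    ("Customers", "Excel File 3", "Output"),
    ("Market", "Excel File 3", "Input"),
    ("Market", "SQL Table 3", "Output"),
]

def find_feeds(rows):
    """Pair each workflow that outputs a resource with every workflow that reads it."""
    producers = {}
    for workflow, resource, kind in rows:
        if kind == "Output":
            producers.setdefault(resource, []).append(workflow)
    feeds = []
    for workflow, resource, kind in rows:
        if kind == "Input":
            for producer in producers.get(resource, []):
                if producer != workflow:
                    feeds.append((producer, workflow, resource))
    return feeds

feeds = find_feeds(dependencies)
```

With this data the only link is Customers feeding Market through Excel File 3.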

It would be CRAZY AWESOME if we could get a report like this for all scheduled workflows in the scheduler.
