Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition" tool for a few days, I think it could be improved:

> by showing the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equal number of images),

> and, at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
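The balanced split requested above can be approximated outside the tool. This is a minimal sketch, assuming the training images are available as (path, label) pairs; `balanced_split` is a hypothetical helper, not part of the Image Recognition tool. It down-samples every label to the size of the rarest one, then holds out the same number of images per label for testing.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Sample = Tuple[str, str]  # (image path, label); an assumed input shape


def balanced_split(samples: Sequence[Sample], test_fraction: float = 0.2,
                   seed: int = 0) -> Tuple[List[Sample], List[Sample]]:
    """Split samples so that every label contributes the same number of images.

    Each label is randomly down-sampled to the size of the rarest label, then
    the same number of images per label is held out for testing.
    """
    if not samples:
        raise ValueError("no samples to split")
    by_label: Dict[str, List[Sample]] = defaultdict(list)
    for path, label in samples:
        by_label[label].append((path, label))

    rng = random.Random(seed)  # fixed seed -> reproducible split
    per_label = min(len(group) for group in by_label.values())
    n_test = int(per_label * test_fraction)

    train: List[Sample] = []
    test: List[Sample] = []
    for label in sorted(by_label):
        group = list(by_label[label])
        rng.shuffle(group)
        group = group[:per_label]      # drop surplus images of common labels
        test.extend(group[:n_test])
        train.extend(group[n_test:])
    return train, test
```

Down-sampling discards data from the common labels; oversampling the rare labels instead is the usual alternative when images are scarce.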

Question: will you allow users to choose between CPU and GPU processing in the future?

In any case, thank you again for this new tool. It certainly has room for improvement, but it is very simple to use, and I sincerely think it will help a greater number of people understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


Getting simple information from a workflow log, such as the workflow name, run start date/time and run end date/time, is far more complex than it should be. Ideally the log would contain separate, distinctly labelled line items for the workflow path and name, the start date/time, the end date/time and potentially the run time, to save having to calculate it. An overall module status would also be useful: if there was an error in the run, the overall status is Error; if there was a warning, the overall status is Warning; otherwise it is Success.

Parsing out the workflow name and start date/time is challenge enough, but then having to parse out the run time, convert it to a time and add it to the start date/time to get the end date/time makes retrieving basic monitoring information far more complex than it should be.
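The calculation described above (end time = start time + run time, plus an Error > Warning > Success roll-up) is small once the pieces are extracted. A minimal sketch, assuming the start time, run time in seconds and per-message severities have already been parsed out of the log; the log's own format is not shown here, and `run_summary` and its field names are hypothetical.

```python
from datetime import datetime, timedelta
from typing import Dict, Iterable


def end_time(start: datetime, run_seconds: float) -> datetime:
    """End of the run = start time + elapsed run time."""
    return start + timedelta(seconds=run_seconds)


def overall_status(severities: Iterable[str]) -> str:
    """Roll message severities up into one status: Error > Warning > Success."""
    seen = {s.strip().lower() for s in severities}
    if "error" in seen:
        return "Error"
    if "warning" in seen:
        return "Warning"
    return "Success"


def run_summary(workflow: str, start: datetime, run_seconds: float,
                severities: Iterable[str]) -> Dict[str, str]:
    """One distinctly labelled record per run (hypothetical field names)."""
    return {
        "Workflow": workflow,
        "Start": start.isoformat(sep=" "),
        "End": end_time(start, run_seconds).isoformat(sep=" "),
        "RunSeconds": f"{run_seconds:g}",
        "Status": overall_status(severities),
    }
```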

I would love to see a "Product" option added to the Summarize tool. I can currently count, sum, average, etc., but I can't multiply my data while grouping. There are numerous workarounds, but a native product function built into the Summarize tool would be great.

Thanks for listening!
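For illustration, the requested aggregation behaves like Sum with multiplication in place of addition. A minimal sketch, assuming rows arrive as dictionaries; `product_by_group` is a hypothetical name, not an existing Summarize option.

```python
import math
from collections import defaultdict
from typing import Dict, Hashable, Iterable, Mapping


def product_by_group(rows: Iterable[Mapping], group_field: str,
                     value_field: str) -> Dict[Hashable, float]:
    """Multiply `value_field` within each `group_field`, like Sum but with *."""
    groups: Dict[Hashable, list] = defaultdict(list)
    for row in rows:
        groups[row[group_field]].append(row[value_field])
    # math.prod handles zeros and negatives exactly, unlike exp(sum(log(x))).
    return {key: math.prod(values) for key, values in groups.items()}
```

A log-based workaround such as exp(sum(log(x))) only works for strictly positive values, which is one reason a native product is preferable.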

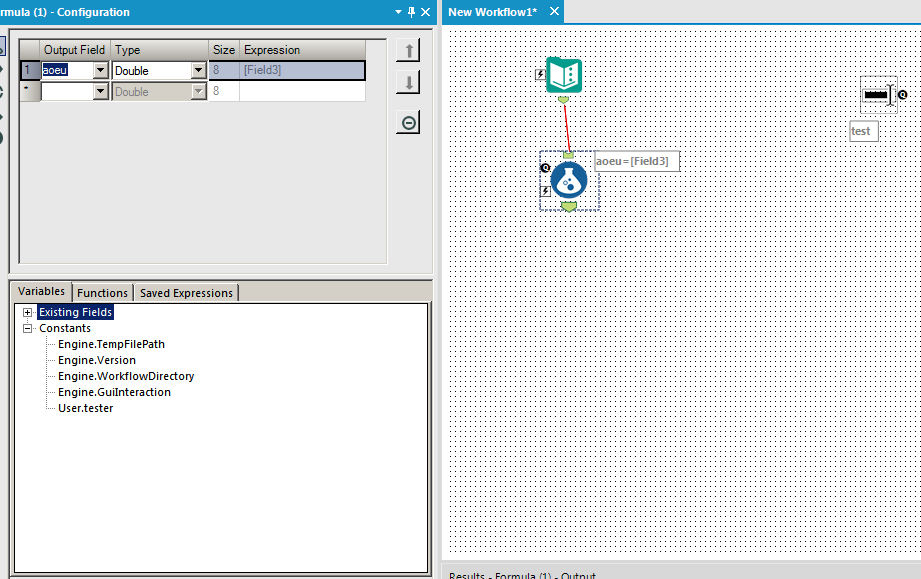

Whenever I add an Interface tool, it adds a question constant, just like the 4 engine constants and any user constants. It would be useful if tools such as Formula and Filter automatically added these question constants to their variable lists for you to use, identical to how user constants behave today. Here is the before and after for visual effect:

BEFORE:

AFTER:

Create new connector to pull Salesforce Reports

We are a large company with tens of thousands of employees using Salesforce on a daily basis. Over the years, we have worked with Salesforce to make many customizations and create many reports that provide data for various reporting needs. However, we have increasingly found downloading the reports manually to be inefficient and prone to error. We have many teams using the Salesforce reports as a base for additional business insights.

Alteryx is a great tool for managing data ETL and workflows, but it does not support pulling data from Salesforce reports directly; it only offers connectors that pull data from base Salesforce objects. Data from Salesforce objects such as tables can be useful, but it does not necessarily offer the logical view of the Salesforce reports, and reconciling it against the reports our users are used to may require a lot of effort. Sometimes it may be impossible to reproduce the same data from Salesforce tables as from Salesforce reports. That in turn costs the reporting teams, their audience, and users of the Salesforce reports a lot of effort to match things up.

Salesforce does not have an out-of-the-box solution for scheduling report downloads. At our request, their support team did some research and did not find a good 3rd-party solution in the Salesforce AppExchange ecosystem that supports this need.

I strongly believe this is a great opportunity for Alteryx. Salesforce already has an API for building custom applications that pull Salesforce reports. However, most Salesforce users are business-oriented and do not necessarily have the appetite to engage their IT staff or external resources to develop such apps and bear the burden of maintaining them.

I have attached the Salesforce Reports and Dashboards API Developer Guide for your reference.
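To show roughly what such a connector would wrap, here is a minimal sketch against the Reports and Dashboards REST API. It assumes an OAuth access token has already been obtained, and it handles only tabular reports, whose detail rows sit under the "T!T" key of the response's factMap; the helper names are hypothetical and summary or matrix reports would need more work.

```python
import urllib.request
from typing import Any, Dict, List


def build_report_request(instance_url: str, api_version: str, report_id: str,
                         access_token: str) -> urllib.request.Request:
    """GET request that runs a report synchronously and asks for detail rows."""
    url = (f"{instance_url.rstrip('/')}/services/data/v{api_version}"
           f"/analytics/reports/{report_id}?includeDetails=true")
    return urllib.request.Request(url, headers={
        "Authorization": f"Bearer {access_token}",
        "Accept": "application/json",
    })


def tabular_rows(report: Dict[str, Any]) -> List[Dict[str, str]]:
    """Flatten a *tabular* report's detail rows into {column: label} dicts.

    Tabular reports keep their rows under the "T!T" key of factMap; summary
    and matrix reports use other keys and would need more handling.
    """
    columns = report["reportMetadata"]["detailColumns"]
    rows = report["factMap"]["T!T"]["rows"]
    return [
        {col: cell["label"] for col, cell in zip(columns, row["dataCells"])}
        for row in rows
    ]
```

In use, the request would be sent with `urllib.request.urlopen(req)` and the body decoded with `json.load` before calling `tabular_rows`; scheduling, paging and error handling are left out.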

Sincerely,

Vincent Wang

Hello!

I remember a while ago running into a peculiar error:

'The R.exe exit code (4294967295) indicated an error'. This was peculiar, as the data output still seemed correct; however, the error made me double-check the community for answers.

There are some very technical sources here:

https://community.alteryx.com/t5/Alteryx-Designer-Discussions/R-tool-Fake-Errors/td-p/25163

https://community.alteryx.com/t5/Alteryx-Designer-Discussions/Boosted-Model-Error/td-p/5509

In short, this seems to be caused by a return code from C++ libraries being interpreted by R as an error. It's a very inconsistent error, typically caused by low memory. This creates what most call a 'fake error': the code runs perfectly fine, but produces an error that doesn't actually indicate anything wrong.
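The number in the message is easier to read once reinterpreted: 4294967295 is 0xFFFFFFFF, which is what an exit status of -1 looks like when reported as an unsigned 32-bit value. This minimal sketch (a hypothetical helper) only does that arithmetic; it does not by itself identify the cause of the error.

```python
def as_signed_int32(code: int) -> int:
    """Reinterpret an exit code reported as unsigned 32-bit as a signed int."""
    code &= 0xFFFFFFFF                 # keep the low 32 bits
    return code - (1 << 32) if code >= (1 << 31) else code
```

For example, `as_signed_int32(4294967295)` gives -1, and `as_signed_int32(3221225477)` gives -1073741819 (0xC0000005).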

Within those threads, it's also stated that calling the garbage collection function (gc()) tends to solve the problem on R exit. However, this requires a user to understand basic R and to have access to the macro to change the code, making predictive analytics even more intimidating for new Alteryx users.

The first occurrence of this error seems to be way back in 2015, however the error is still being reported by users (see posts from 2020 and 2021):

https://community.alteryx.com/t5/Alteryx-Designer-Discussions/Password-protected-Excel-files-R-solut...

https://community.alteryx.com/t5/Alteryx-Designer-Knowledge-Base/Error-The-R-exe-exit-code-n-indicat...

An important issue with these 'fake errors' is not only that they cause confusion, but also that they cause analytic apps and Server workflows to behave unexpectedly and even stop running, depending on the configuration.

My suggestion would be to revisit this issue, as by my understanding it occurs inconsistently, and calling garbage collection does not always seem to fix it. Even if the error message is still generated, it may be worth Alteryx suppressing it when it is not a real error.

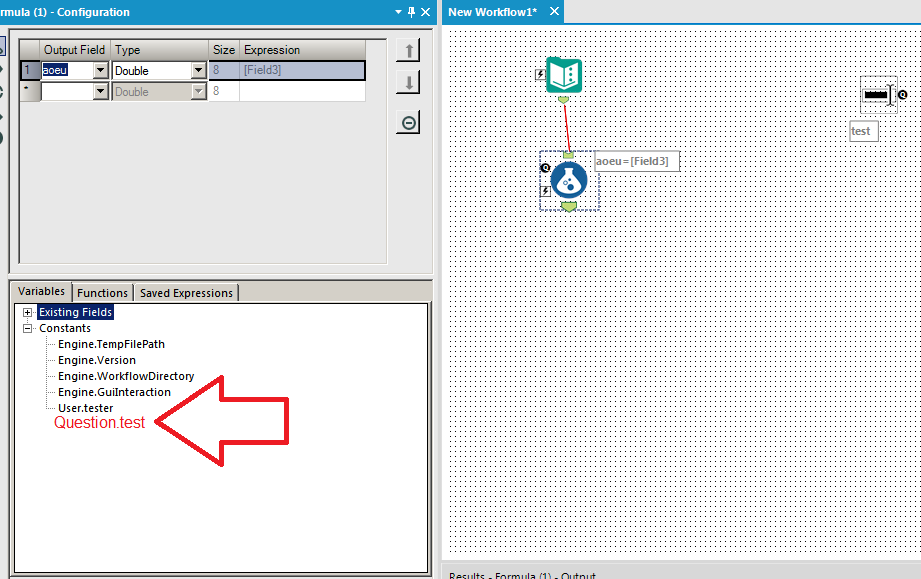

Steps to reproduce:

(As mentioned, it's very inconsistent.)

1. Open the Boosted Model example workflow.

2. In the Boosted Model configuration (Model customization), multiply the maximum number of trees by 10.

3. Run the workflow, then inspect the results (which appear correct) and the error message in the Results window.

Hope this helps!

TheOC

Our company often builds applications that need to dynamically update dropdowns based on a user's previous selections.

For example:

- A user needs to select their server, database, and table for analysis (3 dropdowns).

- When the user selects their server, a query is run to get a list of all databases on that server. Then the database dropdown will automatically populate with this list of databases.

- The user then makes a database selection, and a query is then run to get all tables within that database. The table dropdown will automatically populate with this list of tables.

- The user makes their table selection, and then runs their analysis using the server, database, and table variables with values that they have selected from each dropdown.
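The cascade above can be sketched as a small state machine where each selection re-runs one query and clears the stale downstream choices. A minimal sketch, assuming SQL Server (`sys.databases` and `INFORMATION_SCHEMA.TABLES`) and a hypothetical `run_query(server, sql)` callable that returns the first column of each row; it is not an Alteryx interface API.

```python
from typing import Callable, Dict, List, Optional

# Hypothetical: run_query(server, sql) returns the first column of each row.
QueryFn = Callable[[str, str], List[str]]

DATABASES_SQL = "SELECT name FROM sys.databases ORDER BY name"


def tables_sql(database: str) -> str:
    # Bracket-quote the database name, doubling any "]" so it stays one identifier.
    safe = database.replace("]", "]]")
    return (f"SELECT TABLE_SCHEMA + '.' + TABLE_NAME FROM [{safe}].INFORMATION_SCHEMA.TABLES "
            "WHERE TABLE_TYPE = 'BASE TABLE' ORDER BY 1")


class CascadingSelection:
    """Server -> database -> table dropdowns; each choice repopulates the next."""

    def __init__(self, run_query: QueryFn):
        self.run_query = run_query
        self.server: Optional[str] = None
        self.database: Optional[str] = None
        self.table: Optional[str] = None
        self.database_choices: List[str] = []
        self.table_choices: List[str] = []

    def select_server(self, server: str) -> List[str]:
        self.server = server
        self.database = self.table = None      # downstream choices are now stale
        self.table_choices = []
        self.database_choices = self.run_query(server, DATABASES_SQL)
        return self.database_choices

    def select_database(self, database: str) -> List[str]:
        if database not in self.database_choices:
            raise ValueError(f"unknown database: {database}")
        self.database = database
        self.table = None
        self.table_choices = self.run_query(self.server, tables_sql(database))
        return self.table_choices

    def select_table(self, table: str) -> Dict[str, str]:
        if table not in self.table_choices:
            raise ValueError(f"unknown table: {table}")
        self.table = table
        return {"server": self.server, "database": self.database, "table": table}
```

The key design point is that changing an upstream selection invalidates everything below it, which is exactly what chained apps have to simulate today.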

We can do this in other programs, but unfortunately the lack of dynamic selections/dependent dropdowns is a big limitation for us when building Alteryx applications. Our current workarounds are chaining applications together or using PyQt within the workflow. Chaining is clunky and often causes unforeseen issues, with non-descriptive errors, when uploading to Server, and using PyQt comes with Python versioning issues.

If this interactivity can somehow be added to Alteryx applications it would be a huge upgrade to our current Alteryx processes. Any suggestions for further workarounds would also be helpful!

Thank you,

Amanda

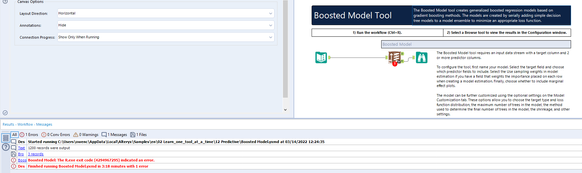

Similar to previous ideas from @patrick_mcauliffe and @shailesh_patel - would like to request 2 things:

Default on Folder Picker Interface tool

The folder picker tool does not currently allow a default value - this unnecessarily adds work if users have the same value 90% of the time.

Please add a field for the default value that will show when the interface starts up.

Similar ideas:

- Default on Date interface: https://community.alteryx.com/t5/Alteryx-Designer-Ideas/Default-Date-for-Interface-Tool/idi-p/35770

- Default on File Selector: https://community.alteryx.com/t5/Alteryx-Designer-Ideas/Default-file-location-in-file-broswer-Interf...

Idea:

An Alteryx version for Mac OS X sounds like a nice idea, although there are options such as using Boot Camp with Windows 7/8 or some virtualisation software, as mentioned in a community post here.

Rationale 1 (Competitors do it):

First of all, there is no need to neglect a customer segment that uses Macs.

- RapidMiner Studio comes with a dedicated OS X version.

- KNIME has Mac OS X support.

- Weka has Mac OS X support as well.

- SPSS Modeler is Windows only, but SPSS Statistics is Mac OS X compatible.

It seems SAS was compatible in the last decade, but they dropped it. SAS is no longer OS X compatible, but Mac users can still easily get hands-on experience with the "SAS OnDemand" version.

Rationale 2:

The Mac Pro Beast has 7.2 TFlops of computing power with the help of dual ATI graphics cards.

It would be awesome to install Alteryx on one...

Can we please have a tickbox (ideally one that remembers your preference to be ticked or unticked) on the Save to Gallery pop up that would allow us to save a (timestamped?) copy of that workflow on a local drive (perhaps one that is preset in the user settings)?
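The requested behaviour amounts to a timestamped copy alongside the Gallery save. A minimal sketch, assuming the local backup folder comes from a user setting; `save_local_copy` is hypothetical and not part of Designer.

```python
import shutil
from datetime import datetime
from pathlib import Path
from typing import Optional


def save_local_copy(workflow: Path, backup_dir: Path,
                    now: Optional[datetime] = None) -> Path:
    """Copy `workflow` into `backup_dir` with a timestamp in the file name."""
    now = now or datetime.now()
    backup_dir.mkdir(parents=True, exist_ok=True)
    stamp = now.strftime("%Y%m%d_%H%M%S")
    target = backup_dir / f"{workflow.stem}_{stamp}{workflow.suffix}"
    shutil.copy2(workflow, target)  # copy2 also preserves the modified time
    return target
```

The sortable `YYYYMMDD_HHMMSS` stamp keeps successive copies in chronological order in a file browser.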

Microsoft Office provides a facility in all its apps that makes loading frequently used files a breeze. In the FILE OPEN function, the user can "PIN" a previously opened file so that it is always easy to find and load. A similar feature in Designer would make it easier to manage and retrieve workflow files.

This is what PINNING looks like in Excel

Hi Alteryx users and Alteryx Dev team,

I have seen a number of posts from the community asking for a way to calculate NetWorkDays (similar to NETWORKDAYS in Excel, which calculates the number of days between two dates excluding weekends and holidays).

Although we could build a macro for it, the performance is not ideal, especially when the data set is huge and/or the dates are far apart, because there is currently no built-in function in Alteryx. The macro has to expand the date range day by day and check whether each date is a weekend or holiday. It would be an excellent idea to build a NetworkDays function into Alteryx and save everyone around the world this hassle.
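The day-by-day expansion is avoidable. In this minimal sketch, whole weeks contribute five working days each, at most six leftover days are checked individually, and holidays falling on weekdays inside the range are subtracted. It assumes a Monday to Friday working week and counts both the start and end dates; `networkdays` is a hypothetical name, not an existing Alteryx function.

```python
from datetime import date
from typing import Iterable


def networkdays(start: date, end: date, holidays: Iterable[date] = ()) -> int:
    """Working days (Mon-Fri) from start to end, both inclusive, minus holidays.

    Runs in time proportional to the number of holidays, not the length of
    the date range, because whole weeks are counted arithmetically.
    """
    if start > end:
        # Count the reversed range and report it as negative.
        return -networkdays(end, start, holidays)
    total_days = (end - start).days + 1          # inclusive of both ends
    full_weeks, leftover = divmod(total_days, 7)
    count = full_weeks * 5                        # every full week has 5 weekdays
    # The leftover days start on the same weekday as `start`.
    for offset in range(leftover):
        if (start.weekday() + offset) % 7 < 5:    # Monday=0 ... Friday=4
            count += 1
    # Subtract each distinct holiday that falls on a weekday inside the range.
    count -= sum(1 for h in set(holidays) if start <= h <= end and h.weekday() < 5)
    return count
```

For example, 1 to 12 January 2024 (Monday to the following Friday) gives 10. NumPy's `busday_count` offers a similar vectorised count, but it treats the end date as exclusive.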

We look forward to this idea being taken forward.

Thanks

Eric

I believe many have voiced this as a pain point within the Community: there is no straightforward method to import multiple password-protected Excel files.

I understand that there is an R solution suggested by several users, however, that is not ideal as it can be difficult to obtain permission from internal Tech team to install the package on the users' computers.

Re-saving them without password is not only a hassle, but also raises concerns for data protection and security.

This may have been raised before, but we would like to see the equivalent of Excel's PRICE and YIELD formulas in Alteryx's Formula tool. I believe many users in the finance industry use formulas like these frequently, and it would be helpful to be able to replicate them in Alteryx.

Building the formula manually is possible, but it is unnecessarily complicated, especially if you are working with different day-count bases, e.g. 30/360 European.
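For reference, Microsoft's documentation for Excel's PRICE function (when more than one coupon remains) gives the price as the redemption value discounted over N-1+DSC/E periods, plus each coupon discounted over k-1+DSC/E periods, minus accrued interest 100·rate/freq·A/E. The sketch below renders that formula in Python, together with the 30E/360 (European) day count. It assumes the caller has already derived N, A, E and DSC from the coupon schedule, which is the basis-dependent part and is not implemented here. For YIELD it swaps in simple bisection, whereas Excel documents Newton's method. All function names are hypothetical.

```python
from datetime import date


def days_30e_360(start: date, end: date) -> int:
    """Day count under the 30E/360 (European) convention."""
    d1 = min(start.day, 30)
    d2 = min(end.day, 30)
    return 360 * (end.year - start.year) + 30 * (end.month - start.month) + (d2 - d1)


def price_from_periods(rate, yld, redemption, freq, n, a, e, dsc):
    """Clean price per 100 face value, following the PRICE formula
    documented for Excel when more than one coupon remains (n > 1).

    rate: annual coupon rate, yld: annual yield, freq: coupons per year,
    n: coupons remaining, a: days from period start to settlement,
    e: days in the coupon period, dsc: days from settlement to next coupon.
    """
    coupon = 100.0 * rate / freq
    base = 1.0 + yld / freq
    frac = dsc / e
    pv_redemption = redemption / base ** (n - 1 + frac)
    pv_coupons = sum(coupon / base ** (k - 1 + frac) for k in range(1, n + 1))
    accrued = coupon * a / e
    return pv_redemption + pv_coupons - accrued


def yield_from_price(price, rate, redemption, freq, n, a, e, dsc,
                     lo=-0.99, hi=1.0, tol=1e-10):
    """Solve for the yield by bisection; price falls as yield rises."""
    for _ in range(200):
        mid = (lo + hi) / 2.0
        if price_from_periods(rate, mid, redemption, freq, n, a, e, dsc) > price:
            lo = mid  # model price too high, so the yield must be higher
        else:
            hi = mid
        if hi - lo < tol:
            break
    return (lo + hi) / 2.0
```

As a sanity check, a 5% semi-annual bond priced at a 5% yield on a coupon date (A = 0, DSC = E) comes out at par, and solving back from a price of 100 recovers the 5% yield.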

Thank you!

When training people on the use of Action tools, something I always have to emphasize is that when you tell the tool which piece of the XML you are adjusting, it's difficult to tell what you have selected, and very easy to accidentally select something else.

Example:

When you initially select the action to take, it is highlighted in blue. However, it still doesn't feel as though you have actually selected anything or told the Action tool what to do, since it's so easy to select any of the other actions.

A slightly different problem is that if you select an action that has previously been configured, it is shown only in light grey, so it is easy to accidentally change your settings because you may not realize it is already set up.

Here is a recent community post that outlines a few of these problems.

Instead of using the arrows, I think it would be nice to be able to drag and drop the questions to rearrange them in the Interface Designer. This would go more hand in hand with the drag and drop experience of Alteryx.

Additionally, when a lot of Interface tools are on the canvas, Designer really slows down if you need to rearrange the order of the tools in the Interface Designer. I would like to see whether this can be sped up.

Thanks!

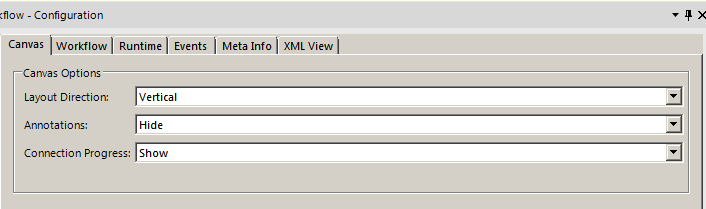

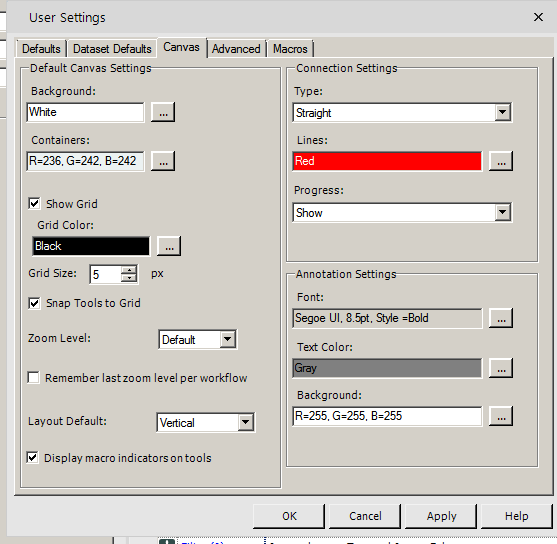

The canvas has 3 options, as shown in exhibit A:

User settings can change 2 of the 3 defaults, as shown in exhibit B. The layout and connection progress settings can both be defaulted for all new workflows:

Thus, I propose adding a user setting for annotations so that I can set the default to Hide.

From what I can tell using ProcMon, presently when using the Directory tool to list files (including subdirectories) the Alteryx Engine runs a single threaded process.

When you're trying to find files by checking recursively in large network paths, this can take hours to run.

It would be great if the tools would split up lists of directories (maybe by getting two or three levels down first) and then run each of those recursive paths in parallel.

While it is possible to do this with a custom Python script or a cmd/PowerShell command, it would be great if this were a native part of the application.
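The split-then-parallelize approach described above can be sketched in a few lines of Python: list the root once, then walk each top-level subdirectory on its own thread. Threads suit this because directory listing is I/O-bound. This is a minimal sketch with hypothetical function names, splitting only one level down; a real tool might split two or three levels down, as suggested, to balance uneven trees.

```python
import os
from concurrent.futures import ThreadPoolExecutor
from typing import List


def walk_files(root: str) -> List[str]:
    """Recursively list every file under `root` (single-threaded)."""
    found = []
    for dirpath, _dirnames, filenames in os.walk(root):
        found.extend(os.path.join(dirpath, name) for name in filenames)
    return found


def parallel_list_files(root: str, workers: int = 8) -> List[str]:
    """List files under `root`, walking each top-level subdirectory in parallel."""
    top_files, subdirs = [], []
    with os.scandir(root) as entries:
        for entry in entries:
            if entry.is_dir(follow_symlinks=False):
                subdirs.append(entry.path)
            elif entry.is_file():
                top_files.append(entry.path)
    # Each subtree is independent, so the walks can run concurrently.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for files in pool.map(walk_files, subdirs):
            top_files.extend(files)
    return sorted(top_files)
```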

Please update the Publish to Tableau Server connector tool to support Tableau's Ask Data feature. A data source must be recognized as an extract on Tableau Server for Ask Data to work, but currently all data sources published with version 2.0 of the connector tool are recognized as live data sources. The workaround is cumbersome and requires multiple copies of data sources to be created and managed.

Could Alteryx create a solution or workaround so that its tools retry queries during Azure DB connectivity outages?

If there are intermittent, transient (short-lived) connection outages with Azure SQL Database in the cloud, what action can we take in Alteryx to retry the queries?

Examples of retry Azure SQL logic:

“2. Applications that connect to a cloud service such as Azure SQL Database should expect periodic reconfiguration events and implement retry logic to handle these errors instead of surfacing these as application errors to users”.

SQL retry logic is a feature that is not currently supported by Alteryx.
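The retry logic recommended in the quoted Microsoft guidance is usually implemented as retry with exponential backoff and jitter. This minimal sketch shows that pattern as it might wrap a query in a Python step; it is not an Alteryx feature. The transient-error markers are an assumption taken from the log messages quoted in this post, and production code would match the driver's error numbers instead. `run_with_retry` and `is_transient` are hypothetical names.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")

# Assumption: fragments copied from the error messages quoted in this post.
TRANSIENT_MARKERS = (
    "Login timeout expired",
    "TCP Provider",
    "network name is no longer available",
)


def is_transient(exc: Exception) -> bool:
    message = str(exc)
    return any(marker in message for marker in TRANSIENT_MARKERS)


def run_with_retry(query: Callable[[], T], attempts: int = 5,
                   base_delay: float = 1.0, max_delay: float = 30.0,
                   sleep: Callable[[float], None] = time.sleep) -> T:
    """Run `query`, retrying transient failures with exponential backoff."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return query()
        except Exception as exc:
            if attempt == attempts or not is_transient(exc):
                raise  # permanent error, or out of retries
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(delay + random.uniform(0, delay / 2))  # jitter spreads retries
    raise AssertionError("unreachable")
```

Non-transient errors, such as a bad column name, are re-raised immediately so that real failures still surface.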

For further information please see [ ref:_00DE0JJZ4._5004412Star:ref ]:

Hi Alteryx Support,

We are experiencing intermittent errors with our Alteryx workflows connecting to our Azure production database with Alteryx Designer v2018.4.3.54046.

Is there anything we can do to avoid or work around these intermittent / transient (short-lived) connection errors, such as changing the execution timing or the SQL driver settings?

Or can we incorporate retry logic for Azure SQL, as in these examples:

“2. Applications that connect to a cloud service such as Azure SQL Database should expect periodic reconfiguration events and implement retry logic to handle these errors instead of surfacing these as application errors to users”.

https://docs.microsoft.com/en-us/azure/sql-database/sql-database-develop-error-messages

https://docs.microsoft.com/en-us/azure/sql-database/sql-database-connectivity-issues

Salesforce Import process, which contains 25 Workflow modules, completed with errors on:

Mon 25/02/2019 23:27

Error 1

2019-02-25 23:11:23:

2.1.18_SF_MailJobDocument_Import.yxmd:

Tool #245: Error opening connect string: Microsoft OLE DB Provider for SQL Server: Login timeout expired\HYT00 = 0; Microsoft OLE DB Provider for SQL Server: Invalid connection string attribute\01S00 = 0.

Error 2:

2019-02-25 23:26:31:

2.1.25_SF_ClientActivityParticipant_Import.yxmd:

Tool #258: Error opening connect string: Microsoft OLE DB Provider for SQL Server: Login timeout expired\HYT00 = 0; Microsoft OLE DB Provider for SQL Server: Invalid connection string attribute\01S00 = 0.

Salesforce Import Workflow completed with errors on:

Wed 27/02/2019 23:24

Error 3

2019-02-27 23:06:47:

2.1.17_SF_MailJobs_Import.yxmd:

DataWrap2ODBC::SendBatch: [Microsoft][SQL Server Native Client 11.0]TCP Provider: The specified network name is no longer available.

Regards,

Nigel

This is a hybrid idea related to both posts regarding dynamic tool configuration during runtime / without having to run an analytic app.

What I would like to propose is a new optional connection type for the interface tools that can be updated with incoming connections (having a Q letter with white background), namely Drop Down, List Box, Tree and Map tools. This could be a simple R letter in a square for example, which would be located to the left of the incoming question anchor.

Use Case

Imagine an app with two Control Containers and three Interface tools (not counting Action tools) outside those containers. The first is a Text Box connected (via an Action tool) to a Filter tool in the first Control Container, used to limit the dataset to, for example, a specified city. The second is a Numeric Up Down that limits the dataset to records whose average transaction amount is greater than the specified amount. These two Interface tools are placed in a Group Box in the Interface Designer.

The third Interface tool is a Drop Down tool that obtains its values (Store Name in this example) from the results of a Select tool in the second Control Container, which is connected to the output anchor of the first Control Container. This Select tool is connected to an incoming Filter tool that is modified by the two Interface tools above. The output anchor of the Select tool is connected to the hypothetical R anchor on top of the Drop Down tool, which is in turn connected to an outgoing Filter tool feeding a series of tools that ends with a Browse tool displaying basic KPI information for the store chosen in the Drop Down tool.

The main difference between the R (Refresh) anchor and the Q anchor is that it would let the user dynamically update the incoming values (i.e., the choices in a Drop Down tool) without running the workflow. Alteryx Designer would automatically execute only the tools needed to update the values for the applicable Interface tools listed above that have an R anchor connection (only up to a certain point in the workflow, which could also be indicated by the boundaries of the Control Containers containing the target tool). This would be triggered by clicking a hypothetical confirm button (with the same appearance as the Apply Data Manipulations button), which would appear only next to the Interface tools (or the Group Boxes containing them) that Alteryx Designer automatically determines to be providing data downstream to the tools (for example, the T anchor of the Filter tool) that send values to the Interface tools with an incoming R anchor connection.
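Executing "only the tools necessary" to refresh an R anchor corresponds to running the upstream ancestors of the target tool in dependency order. This minimal sketch shows that idea on a toy workflow graph, where `upstream` maps each tool to the tools feeding it. The graph shape and `tools_to_refresh` are hypothetical, and the Control Container boundary proposed above is not modelled.

```python
from collections import deque
from graphlib import TopologicalSorter  # Python 3.9+
from typing import Dict, List, Set


def tools_to_refresh(upstream: Dict[str, List[str]], target: str) -> List[str]:
    """Return the tools that feed `target`, in an order they could run in."""
    needed: Set[str] = set()
    queue = deque([target])
    while queue:                         # breadth-first walk towards the inputs
        tool = queue.popleft()
        for parent in upstream.get(tool, []):
            if parent not in needed:
                needed.add(parent)
                queue.append(parent)
    # Keep only edges inside the needed subgraph, then order parents first.
    graph = {t: [p for p in upstream.get(t, []) if p in needed] for t in needed}
    return list(TopologicalSorter(graph).static_order())
```

Downstream tools such as the Browse tool in the use case are never visited, which is what would keep such a refresh cheap compared with a full run.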

I saw that a similar feature recently became available with Alteryx Analytics Cloud Platform with the App Builder product, and I think that Alteryx Designer Desktop could definitely benefit both from this feature and additional App Builder features (that can be adapted to Desktop counterpart) in the upcoming releases.
