Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by displaying the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
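The second suggestion amounts to a balanced (stratified) split. A minimal Python sketch, assuming each image is identified by a (file name, label) pair; `balanced_split` is a hypothetical helper, and undersampling every label down to the size of the smallest one is only one possible strategy (oversampling or class weights are common alternatives).

```python
import random
from collections import defaultdict


def balanced_split(samples, train_fraction=0.8, seed=0):
    """Split (item, label) pairs into train/validation sets that contain the
    same number of items for every label (hypothetical helper)."""
    by_label = defaultdict(list)
    for item, label in samples:
        by_label[label].append(item)

    # Every label is cut down to the size of the smallest label.
    per_label = min(len(items) for items in by_label.values())
    n_train = int(per_label * train_fraction)

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train, validation = [], []
    for label, items in sorted(by_label.items()):
        chosen = rng.sample(items, per_label)
        train += [(item, label) for item in chosen[:n_train]]
        validation += [(item, label) for item in chosen[n_train:]]
    return train, validation
```

With 10 cat images and 6 dog images, each label keeps 6 images: 4 for training and 2 for validation.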

Question: will you, in the future, allow the user to choose between CPU and GPU usage?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will help a greater number of people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


Today the Directory tool retrieves a lot of information about a file. I must say I appreciate easily getting the size and the last write time.

But why not the owner? I have developed a macro with PowerShell to do that, but what a nightmare for such a small piece of information.
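For illustration, a minimal Python sketch of a directory listing that includes the owner alongside size and last write time. It relies on `pathlib.Path.owner()`, which looks up the owning user on POSIX systems; on Windows the owner lives in the file's security descriptor (PowerShell's `Get-Acl` exposes it as `Owner`), which this sketch does not handle. The function name and the output field names are assumptions chosen to echo the Directory tool's fields.

```python
from datetime import datetime
from pathlib import Path


def directory_listing(folder, pattern="*"):
    """Return name, size, last write time and owner for each file in `folder`."""
    rows = []
    for path in sorted(Path(folder).glob(pattern)):
        if not path.is_file():  # skip sub-directories
            continue
        stat = path.stat()
        try:
            owner = path.owner()  # POSIX: maps the file's UID to a user name
        except (NotImplementedError, KeyError):
            # Unsupported platform (e.g. Windows), or a UID with no user entry.
            owner = None
        rows.append({
            "FileName": path.name,
            "Size": stat.st_size,
            "LastWriteTime": datetime.fromtimestamp(stat.st_mtime),
            "Owner": owner,
        })
    return rows
```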


Two very useful functions

According to https://www.w3schools.com/sql/func_mysql_least.asp

The LEAST() function returns the smallest value of the list of arguments.

Example: SELECT LEAST("w3Schools.com", "microsoft.com", "apple.com");

returns "apple.com"

GREATEST works exactly the same way but returns the greatest value of the list of arguments.

As of today, Alteryx offers Max and Min for this, but they only work with numbers and, I think, the syntax is ambiguous: Max and Min work both as aggregation functions and as row functions. I would love to keep these two notions separate.

A more standard syntax also means more interoperability.
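To make the requested row-level semantics concrete, here is a short Python sketch. It follows MySQL's documented rule that LEAST/GREATEST return NULL when any argument is NULL (other databases, such as PostgreSQL, ignore NULLs instead). Note that Python compares strings by code point, whereas MySQL compares them according to the collation, so mixed-case strings may order differently.

```python
def least(*args):
    """Row-level LEAST: the smallest argument, or None (NULL) if any argument is None."""
    if any(a is None for a in args):
        return None
    return min(args)


def greatest(*args):
    """Row-level GREATEST: the largest argument, or None (NULL) if any argument is None."""
    if any(a is None for a in args):
        return None
    return max(args)
```

Here `least("w3Schools.com", "microsoft.com", "apple.com")` returns `"apple.com"`, matching the SQL example, and the same functions work for numbers or dates.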

On a related topic, the COALESCE function is proposed here: https://community.alteryx.com/t5/Alteryx-Designer-Ideas/Coalesce-function/idi-p/841014

Best regards,

Simon

Currently, when you use the Google BigQuery Output tool, the only options are to create a table or to append data to an existing table. It would be more useful if there were an option to replace all data in the table rather than appending. Having the option to overwrite an existing table in Google BigQuery would be optimal.

Hello!

Currently I develop on a 2560 x 1440 monitor, and it is great for developing Alteryx workflows.

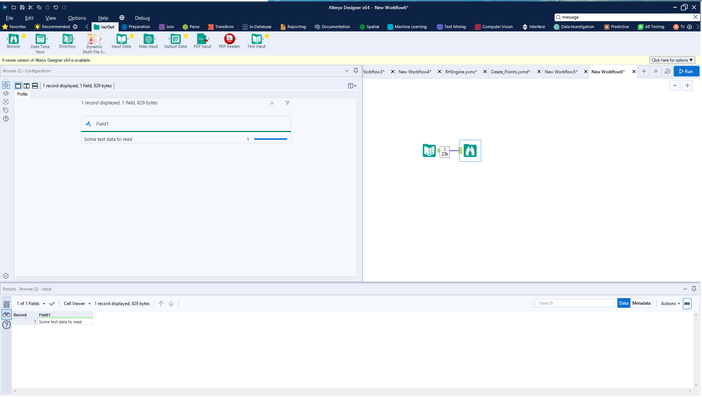

However, from an accessibility perspective (and for demonstration purposes), the text and icons across the Alteryx interface are far too small for anyone to read. For instance, this is what Designer looks like at the most common monitor resolution, 1920 x 1080:

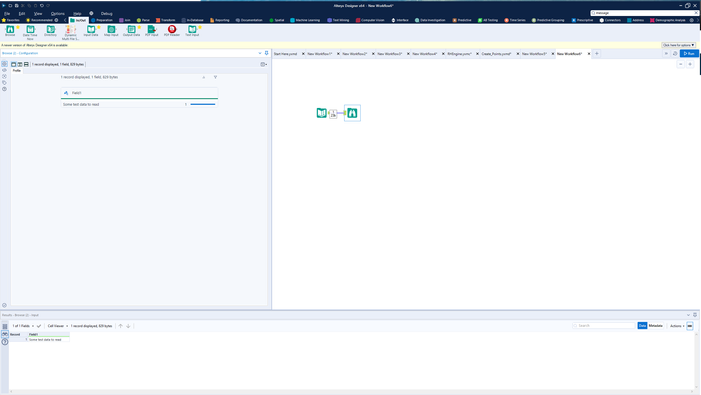

And at my native resolution (2560 x 1440)

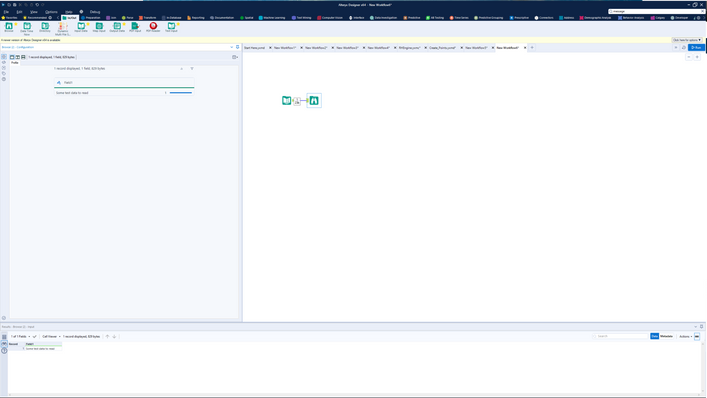

And 4k resolution, for comparison:

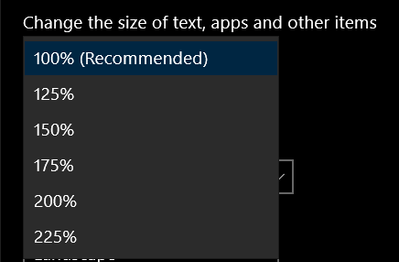

As you will notice, virtually everything is smaller and unreadable at higher resolutions. This doesn't appear to be a setting within Alteryx, so I have to resort to Windows settings to change the size:

Or, as @CharlieS mentions here, change the size of text across all applications.

It would be useful to have a 'scaling' slider/dropdown within Alteryx, so that I do not have to change the resolution or the size of applications in Windows to easily read or demonstrate data from Alteryx Designer.

Thanks,

TheOC

I like the new cache option in 2018.3, but I would like it to function a little differently. Let's say you cache at a certain point and then continue to build after that. If I reach another checkpoint and want to cache, it currently re-runs the entire workflow (i.e. it ignores my upstream cache and goes back to the beginning of the workflow); instead, I would rather have it use the upstream cache. Personally, caching is usually an iterative effort during development where I keep caching along the way, and the current functionality of the cache is not conducive to this. Thanks!
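A minimal Python sketch of the requested behaviour: caching at a later checkpoint starts from the nearest cached result upstream instead of re-running from the input. The class and its names are hypothetical and do not describe how Designer's cache is implemented; a real version would also need to invalidate downstream caches whenever an upstream tool changes.

```python
class CachedPipeline:
    """Hypothetical model of incremental caching along a linear workflow."""

    def __init__(self, source, stages):
        self.source = source  # callable producing the input data
        self.stages = stages  # list of callables, applied in order
        self.cache = {}       # stage index -> cached output of that stage
        self.runs = []        # log of what was actually executed

    def cache_at(self, index):
        # Find the closest cached checkpoint at or upstream of `index`.
        start = max((i for i in self.cache if i <= index), default=None)
        if start is None:
            data = self.source()
            self.runs.append("source")
            first = 0
        else:
            data = self.cache[start]
            first = start + 1
        # Only the stages between that checkpoint and `index` are executed.
        for i in range(first, index + 1):
            data = self.stages[i](data)
            self.runs.append(i)
        self.cache[index] = data
        return data
```

Caching at stage 1 runs the source and stages 0 and 1; caching at stage 2 afterwards runs only stage 2.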

This has probably been mentioned before, but in case it hasn't....

Right now, if the Dynamic Input tool skips a file (which it often does!), it just shows a warning and continues processing. While continuing is still useful, could the tool offer an option to 'Error if files are skipped'?

Right now it is easy to miss that this is happening, and in production / on Server you may want the process to be stopped.
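A small Python sketch of the proposed option. It assumes, for illustration only, that a file is skipped when its header differs from the first file's header; `read_all`, `error_if_skipped` and `SkippedFilesError` are hypothetical names, and the files are passed as in-memory CSV text to keep the example self-contained.

```python
import csv
import io


class SkippedFilesError(Exception):
    """Raised when strict mode is on and at least one file was skipped."""


def read_all(files, error_if_skipped=False):
    """files: mapping of file name -> CSV text. Returns the data rows of every
    file whose header matches the first file's header."""
    expected = None
    rows, skipped = [], []
    for name, text in files.items():
        reader = csv.reader(io.StringIO(text))
        header = next(reader, None)
        if expected is None:
            expected = header
        if header != expected:
            skipped.append(name)
            continue
        rows.extend(reader)
    if skipped:
        message = f"{len(skipped)} file(s) skipped: {', '.join(skipped)}"
        if error_if_skipped:
            raise SkippedFilesError(message)  # stop the run, as requested
        print("Warning:", message)             # current behaviour: warn and continue
    return rows
```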

Thanks,

Andy

The DateTime tool is a great way to convert various string arrangements into a Date/Time field type. However, this tool has two simple, but annoying, shortcomings:

- Convert Multiple Fields: Each DateTime tool only lets you convert one field. Many Alteryx tools (MultiField, Auto Field, etc.) allow you to choose what field(s) are affected by the tool. If I have a database with a large number of string fields all with the same format (such as MM/DD/YYYY), I should be able to use one DateTime tool to convert them all!

- Overwrite Existing Field: The DateTime tool always creates a new field that contains your converted date/time. I ALWAYS have to delete the original string field that was converted and rename the newly created date/time field to match the original string field's name. A simple checkbox (like the "output imputed values as a separate field" checkbox in the Imputation tool) could give the flexibility of choosing to have a separate field (like how it is now) or overwrite the string field with the converted date/time field (keeping the name the same).
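Both requests together can be sketched in a few lines of Python: one format applied to several string fields, with a switch between overwriting the original field and adding a new one. The function, the `_DateTime` suffix and the row representation (a list of dicts) are hypothetical choices for illustration.

```python
from datetime import datetime


def convert_dates(rows, fields, fmt="%m/%d/%Y", overwrite=True):
    """Parse several string fields with one format. With overwrite=True the
    original field keeps its name and receives the parsed date; otherwise a
    new '<field>_DateTime' field is added next to it."""
    for row in rows:
        for field in fields:
            value = row[field]
            parsed = datetime.strptime(value, fmt).date() if value else None
            target = field if overwrite else f"{field}_DateTime"
            row[target] = parsed
    return rows
```

Empty strings become `None`, roughly what a null date would be in a workflow.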

Alteryx is overall an amazing data blending software. I recognize that both of these shortcomings can be worked around with combinations of other Alteryx tools (or LOTS of DateTime tools), but the simplicity of these missing features demonstrates to me that this data blending tool is not sufficiently developed. These enhancements can greatly improve the efficiency of date handling in Alteryx.

STAR this post if you dislike the inflexibility of the DateTime tool! Thank you!

At the moment, at least for Postgres and ODBC connections, DCM (Data Connection Manager) only supports a named DSN that must be installed on each machine running Designer or Server. However, the ODBC administrator is restricted to admins within my company, which makes DCM more trouble than it is worth.

Connection strings work well in workflows, have been used on the Gallery before, and do not require access to the ODBC administrator. Could DCM please be improved to support native connection strings?
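For comparison, a DSN-less connection string carries everything a named DSN would, so nothing has to be registered in the ODBC administrator. The helper below is a hypothetical Python sketch that assembles one. The driver name `PostgreSQL Unicode` (the psqlODBC driver's usual name on Linux; 64-bit Windows installs typically register `PostgreSQL Unicode(x64)`) and the server details are placeholders, and wrapping values that contain special characters or spaces in braces (doubling any `}`) follows the common ODBC quoting convention.

```python
def odbc_connection_string(**attributes):
    """Build a DSN-less ODBC connection string from keyword/value pairs
    (hypothetical helper)."""
    parts = []
    for key, value in attributes.items():
        text = str(value)
        # Brace-quote values containing separators, braces or spaces;
        # a literal '}' inside a braced value is doubled.
        if any(ch in text for ch in ";{} "):
            text = "{" + text.replace("}", "}}") + "}"
        parts.append(f"{key}={text}")
    return ";".join(parts) + ";"


conn_str = odbc_connection_string(
    DRIVER="PostgreSQL Unicode",
    SERVER="db.example.com",
    PORT=5432,
    DATABASE="sales",
    UID="analyst",
    PWD="s3cret;pw",
)
# With pyodbc installed, this string could be passed to pyodbc.connect(conn_str).
```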

The only thing I have ever found that Excel can do that Alteryx can't is creating a pivot table that allows the user to drill up and down levels of aggregation by collapsing or expanding levels in the data hierarchy. (like this).

Can you add an interactive table to the new interactive charting tool that provides this level of functionality? It's embarrassing to have to tell Excel users they can't do this in Alteryx, and it likely leads many of them to stick with Excel and miss out on all the other great things Alteryx can do.
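Under the hood, drilling up and down is re-aggregating over a prefix of the hierarchy. A minimal Python sketch, assuming rows are dicts and the hierarchy is an ordered list of field names; `rollup` and `children` are hypothetical helpers, not part of any Alteryx tool.

```python
from collections import defaultdict


def rollup(rows, levels, measure, depth):
    """Total `measure` over the first `depth` hierarchy levels.
    depth=0 is the grand total; each extra level expands one step further."""
    totals = defaultdict(float)
    for row in rows:
        key = tuple(row[level] for level in levels[:depth])
        totals[key] += row[measure]
    return dict(totals)


def children(rows, levels, measure, path):
    """Expand a single node: totals for the next level under `path`."""
    depth = len(path)
    totals = defaultdict(float)
    for row in rows:
        if tuple(row[level] for level in levels[:depth]) == tuple(path):
            totals[row[levels[depth]]] += row[measure]
    return dict(totals)
```

For a Region > State > City hierarchy, `rollup(..., depth=1)` gives one total per region, and `children(..., ("East",))` expands only the East node into its states.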

Thank you!

Hi all,

When debugging an error, we need to check tool by tool, in sequence, to understand what is really going on.

Sometimes the tools are miles away from each other. Imagine a gigantic workflow with a lot of connections going back and forth and wireless connections everywhere to help organize the workflow. Here is an example with more than 1300 tools:

My idea is to have a shortcut that shows all the previous/next tools, so that selecting one takes you directly to it.

Something like this:

What do you guys think about that?
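The lookup behind such a shortcut is simple once connections are indexed in both directions. A minimal Python sketch, assuming the workflow's connections (wireless ones included) are available as (origin tool ID, destination tool ID) pairs; the function names are hypothetical.

```python
from collections import defaultdict


def build_neighbours(connections):
    """Index connections both ways: tool -> upstream tools, tool -> downstream tools."""
    upstream, downstream = defaultdict(set), defaultdict(set)
    for origin, destination in connections:
        downstream[origin].add(destination)
        upstream[destination].add(origin)
    return upstream, downstream


def previous_tools(upstream, tool_id):
    """Tools feeding directly into `tool_id`."""
    return sorted(upstream.get(tool_id, ()))


def next_tools(downstream, tool_id):
    """Tools fed directly by `tool_id`."""
    return sorted(downstream.get(tool_id, ()))
```

Because both indexes are built once, each "jump to previous/next" is a constant-time lookup even in a workflow with more than a thousand tools.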

Best,

Fernando Vizcaino

Hello!

I'm submitting this idea to add other products to the Alteryx students program. I think that we (students) should have access to these products for study (not only the Intelligence Suite, but Server as well).

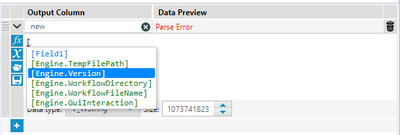

When writing an expression in a Formula tool, I love that you can just type an open bracket and suggestions pop up that let you auto-fill the rest of the field name. What I find frustrating, however, is that once you type the open bracket, the highlight automatically moves to the field where your mouse is pointing, regardless of whether you have moved your mouse or not. I think it makes more sense to always highlight the first field in the list and only take the mouse position into account once the mouse has actually moved.

It is hard to describe this in a picture rather than a video, but essentially my mouse was below where I was typing in the screenshot below; when I typed the open bracket, the 3rd field listed was automatically selected even though I never moved my mouse.
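The requested rule can be written as a tiny piece of state. A hypothetical Python model, not Designer's actual code: the list opens on the first suggestion, and hover events only change the highlight once the pointer position differs from where it was when the list opened (a list appearing under a stationary pointer can still fire a hover event, which is what produces the unwanted selection).

```python
class SuggestionList:
    """Hypothetical model of the requested autocomplete highlight behaviour."""

    def __init__(self, suggestions, mouse_pos):
        self.suggestions = suggestions
        self.highlighted = 0               # always start on the first field
        self._mouse_at_open = mouse_pos    # pointer position when the list opened
        self._mouse_moved = False

    def on_mouse(self, pos, row_under_mouse):
        """Handle a hover event; `row_under_mouse` is None outside the list."""
        if pos != self._mouse_at_open:
            self._mouse_moved = True
        if self._mouse_moved and row_under_mouse is not None:
            self.highlighted = row_under_mouse

    def selected(self):
        return self.suggestions[self.highlighted]
```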

Cc: @Hollingsworth

Please add support for xlsx files in the OneDrive Input/Output tool.

I would like to suggest a Batch Macro Container (in addition to the existing Container) that acts as a batch macro within a workflow and is stored within that workflow.

I understand that having a batch macro available as a separate tool can be very powerful and reduces redundant work. However, batch macros are very often set up for one specific workflow only and are of no use for other workflows. Creating a batch macro in a container would significantly reduce the time needed to deploy it and would keep the macro folder clean of one-time batch macros.
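Conceptually, a batch macro runs the same body once per control value and unions the outputs; the container idea keeps that body inline instead of in a separate macro file. A loose Python analogy, where `run_batch` and `total_for` are hypothetical names and the nested function plays the role of the in-workflow container.

```python
def run_batch(control_values, data, body):
    """Call `body` once per control value (one iteration each) and union the results."""
    output = []
    for value in control_values:
        output.extend(body(value, data))
    return output


def workflow(rows):
    # The "container": a one-off body defined inside the workflow itself,
    # rather than saved as a separate, reusable macro.
    def total_for(region, data):
        return [{"Region": region,
                 "Total": sum(r["Sales"] for r in data if r["Region"] == region)}]

    regions = sorted({r["Region"] for r in rows})  # control values
    return run_batch(regions, rows, total_for)
```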

Attached is a picture of how this could look.

Thanks

Manuel

Alteryx Server is great, but very costly. It would help to be able to install the Alteryx engine without Designer, allowing you to share workflows/apps with users directly. This could also be licensed on a per-user basis, but at a reduced cost.

This would also allow some more advanced workflows that do not work in the Gallery.

Dear Alteryx team and community,

all the best for 2021!

Thank you very much for enhancing the output option from Alteryx Designer to Excel keeping the format.

For a lot of my use cases this is very helpful!

Still, some use cases remain. If I want to overwrite a calculated/linked number (e.g. a calculated prediction) with the actual number, it would be very helpful to be able to write into those cells as well. At the moment Alteryx does the job, but I receive a lot of Excel errors (XML errors) and a corrupt Excel file when overwriting calculated/linked fields.

Is there a chance to extend the current setup for all of those cases?

Thanks and best regards

Christoph

When a user wants to use the Find Nearest tool to, say, find the nearest points within 200 miles, the dropdown stops at 100.

Similarly, if they want a number in between, e.g. 15, the interface is not intuitive.

While you can just type the number, the interface doesn't look like you are able to.

Simply adding a "Custom" selection at the bottom would make this much more intuitive.

Hello,

It would be very helpful to have a search box for field names in the summary tool. I think it would reduce errors from mistakenly selecting fields with similar names, and save a couple of seconds when looking for a specific field, particularly in datasets with lots of fields.

Like this:

Sometimes formulas get pretty long. There are cases of deeply nested conditionals, concatenation of long strings, cases where multiple casts and parses are used, etc. where formulas get pretty large and unwieldy. The current system of wrapping lines and managing the size of the properties pane can be a hassle, especially if you are trying to use any sort of whitespace formatting to make the formulas more readable.

My solution to this is pretty simple: add a pop-out window for formulas. It could be a context menu option from right-clicking the formula box itself, a button on the bar at the top of each formula, or any number of other things.

A really good example of this is MS Access. You can right-click any text box that takes an expression and open it in the Expression Builder pop-up window. The current system is more like Excel, where you're stuck with whatever box size you're given.
