Alteryx Designer Desktop Ideas


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by showing the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (in order to have an equivalent number of images for each of the labels),

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will help more people understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


I had a requirement to read files with the same structure from different paths and different sheets, so I worked out a way to do it.

Step 1: List the files to be read from the different paths, and create an Excel file containing each file name and its path.

Step 2: Create a batch macro, set the control parameter to the file path, and specify the correct template.

Step 3: Create a workflow, add a source input, and map it to the source file, i.e. the list of files with their paths.

Finally, all the data is merged together and can be written to your output as a single file. You can add further transformations if you want to apply any business logic before writing the output.

Note: To process an additional file, it is enough to add its file name and source path to the list.
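For readers who think in code, the control-list pattern in the steps above can be sketched in Python. This is only an illustration under assumptions: `combine_files` and the `read_sheet` callable are hypothetical names, and the reader is passed in so the sketch does not depend on any particular Excel library. In Alteryx the batch macro plays the role of the loop.

```python
from typing import Callable, Dict, Iterable, List

Row = Dict[str, object]


def combine_files(
    control_list: Iterable[Dict[str, str]],
    read_sheet: Callable[[str, str], Iterable[Row]],
) -> List[Row]:
    """Read every (path, sheet) pair in the control list and union the rows."""
    combined: List[Row] = []
    for entry in control_list:
        for row in read_sheet(entry["path"], entry["sheet"]):
            # Tag each row with its origin so the merged output stays traceable.
            combined.append(
                {**row, "source_path": entry["path"], "source_sheet": entry["sheet"]}
            )
    return combined


# Hypothetical control list: one entry per file to process.
control = [
    {"path": "C:/data/north.xlsx", "sheet": "Sales"},
    {"path": "D:/archive/south.xlsx", "sheet": "Q1"},
]
```

Adding another file then means appending one more entry to `control`, which mirrors the note about adding a file name and source path.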

For example, the Input Data tool can output a list of sheet names from Excel files.

Our client would like the Google Sheets Input tool to output a list of sheet names in the same way.

It would be nice if Designer customers could upload and reference a "DATA DICTIONARY - METADATA REPOSITORY" file when working with various input sources to transform data.

Organizations that are mature in their data governance strategy implement special software, such as ERWIN, that extracts, manages, and provides access to the data dictionary of data assets in multiple databases in order to maintain the schema for the enterprise.

With access to a METADATA REPOSITORY file held in DESIGNER, a customer could easily select a list of columns, fields, or attributes from that file to manipulate data elements in DESIGNER, with all the relevant information they need to massage the data.

Possible attributes that may be in the data dictionary file:

Table name

Column Name

Data Type

Foreign Table

Source

Table Description

Sensitive Data

Required Field

Values

This might be a dumb ask, but I run into this a lot. I have a workflow that has over 1000 tools and is super long. I would like to be able to right-click and lock the connection string so I can let go of the left click, scroll down to the tool, and then click on it to make the connection. Also, it would be nice to be able to right-click on a tool and click a "Connect to last used connection" button, as I sometimes join to the same table multiple times. Lastly, I thought it would be cool if we could define the tools that are connected to the most, so that when I right-click on another tool, I can open a drop-down and select one of the most joined-to tools without even having to click on the original.

Alteryx has different behaviours for conversion errors depending on the type of conversion desired. When converting from string to date data type, a conversion error will generate a NULL value. When converting from a string to a numeric data type, a conversion error will generate 0. Why the different behaviours? There is a lack of harmony here. 0 is a valid value and should not be the generated value for a failed string to numeric conversion. It should be NULL.

When I perform data type conversions, I do not cast the source field directly. If there is a conversion error, then I have lost or corrupted the source information. Rather, I create a target field with the desired data type and use a formula to apply a conversion, such as datetimeparse or tonumber. Finally, I do a comparison of the source and target values. If the datetimeparse generated a NULL, then I can PROGRAMMATICALLY address it in the workflow by flagging it or applying some other logic. This isn't so easy to do with numerics because of the generated 0 value. If I compare a string "arbitrary" to the generated 0 value as a string, then clearly these do not match. However, if I compare a scientific value in a string to the converted numeric as a string, then these do not match though they should. My test of the conversion shows a false positive.

I want a unified and harmonised conversion behaviour. If the conversion fails, generate a NULL across the board please. If I am missing something here and people actually like conversion errors to generate 0 please let me know.
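To make the false positive concrete, here is a minimal Python sketch (not Alteryx formula syntax) of the source-versus-target check. `convert` and `audit` are hypothetical helper names, and `None` stands in for NULL.

```python
from typing import Dict, Optional


def convert(text: str) -> Optional[float]:
    """Parse a number, returning None (the NULL stand-in) on failure."""
    try:
        return float(text)
    except (TypeError, ValueError):
        return None


def audit(text: str) -> Dict[str, object]:
    """Compare the source string with the converted target value."""
    value = convert(text)
    return {
        "source": text,
        "target": value,
        # Round-tripping through a string is unreliable for valid numbers.
        "string_match": str(value) == text,
        # A NULL target is an unambiguous failure signal.
        "failed": value is None,
    }


for raw in ["arbitrary", "1.5e3", "0"]:
    print(audit(raw))
```

In this sketch the string comparison fails for "1.5e3" even though the conversion succeeded, while the `failed` flag separates the genuine failure ("arbitrary") from a genuine zero ("0").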

Hi,

Please provide users the option of downloading course videos and transcripts. If it is already there, please let me know.

Regards,

Meenakshi

When I perform data type conversions, I sometimes receive conversion errors. There is no slick way to handle these programmatically that I am aware of. Instead, I have to manage them with half a dozen tools or really unsightly expressions in Formula tools. As an example, I have a string field with a value "two" and I want to convert it to a decimal or int. I receive a conversion error and the value is either "0.000" or "0". This is clearly wrong and I want to have a NULL value instead. I want to use a function to attempt the conversion in the Formula tool so I can nest it inside conditionals in a cleaner fashion.

Here is a reference to the try_cast doc:

https://docs.microsoft.com/en-us/sql/t-sql/functions/try-cast-transact-sql?view=sql-server-2017
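As a rough illustration of the requested behaviour, a TRY_CAST-style helper could look like the Python sketch below. The function name `try_cast`, the target type labels, and the date format are assumptions made for this example; none of this is an existing Alteryx function.

```python
from datetime import datetime
from decimal import Decimal, InvalidOperation


def try_cast(value, target):
    """Return the converted value, or None when the conversion fails."""
    try:
        if target == "int":
            return int(value)
        if target == "decimal":
            return Decimal(value)
        if target == "date":
            # Assumed ISO-style input for this sketch.
            return datetime.strptime(value, "%Y-%m-%d").date()
    except (TypeError, ValueError, InvalidOperation):
        return None
    raise ValueError("unsupported target type: " + str(target))


# Nesting inside a conditional, as the post asks for:
quantity = try_cast("two", "int")
label = "needs review" if quantity is None else "ok"
```

Because a failure yields `None` rather than 0, the conditional can tell a bad value apart from a real zero.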

I want to keep my user settings, pinned palettes, and other configurations in a file. I could then use the file for versioning, backups, use across multiple machines, silent installs, and other purposes.

It will make life easier. Thank you.

Hello,

When parsing XML data, Alteryx does not recognise <![CDATA[ sections as data. This type of XML content is not very common, yet it is still used today (by me anyway!). Its role is similar to commenting your code in some regards (for those who code).

Could a feature be added to Alteryx so that the content between these "tags" is treated as data?
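For comparison, here is how a general-purpose XML parser treats a CDATA section, using Python's standard library. The sample document is made up for this illustration; it only shows the behaviour being asked for, not how Alteryx parses XML.

```python
import xml.etree.ElementTree as ET

# Made-up sample: the CDATA section holds text that would otherwise
# need escaping (angle brackets and an ampersand).
xml_text = "<note><body><![CDATA[Use <b>bold</b> & keep it literal]]></body></note>"

root = ET.fromstring(xml_text)
body = root.find("body").text

# The parser returns the CDATA content as the element's text.
print(body)  # Use <b>bold</b> & keep it literal
```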

Regards,

Julien

When using the latest version of PublishtoPowerBI and attempting to publish to the Gallery, the password encryption appears to be specific to the machine where the workflow resides.

It only works when the workflow is published from Designer on the Worker server. This is currently a limitation of the tool, as confirmed by Alteryx on INC Case # 00261086.

Alteryx support also flagged that the Refresh Token authentication type does not work on a Gallery. The Persist Credentials authentication type should be used when publishing to the Gallery.

Could you please implement a solution so that we can use this tool when publishing to gallery?

Hi,

The Adobe Analytics API token is currently set to expire 30 days after the call has been configured. When the token expires, I have to re-authenticate AND reconfigure the API call.

The API call shouldn't expire when the token expires. Upon re-authenticating, the call should persist as it was originally configured.

Thanks,

Mihail

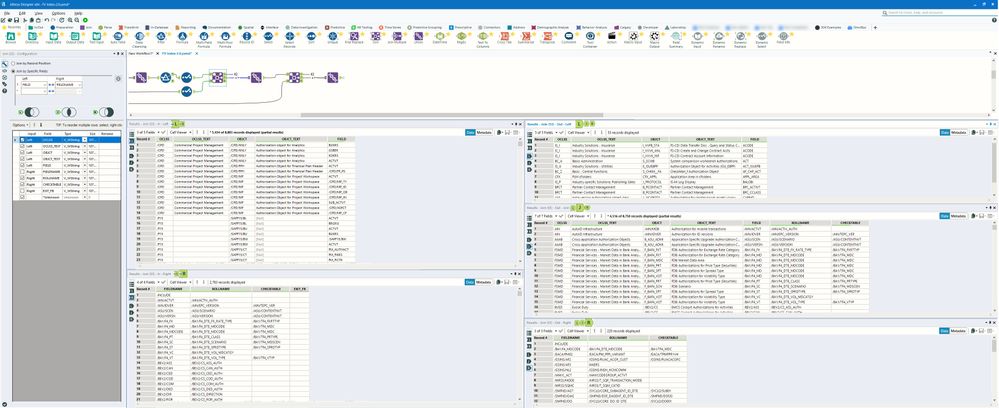

Understanding that for some tools / data sets this feature would favor larger displays with more screen real estate, I think it would be helpful to be able to view both the input(s) and output(s) data in the results pane simultaneously via dedicated sub-results panes for each input / output.

To try to give some form to the picture I had in my head, I patched together a few screenshots in SnagIt as a rough idea of what something like this could look like to the end user, using the Join tool as an example (though I think it would be cool for all tools, if that is practical). I'm not sure how well it will show up in the embedded photo below, so I also attached it. For reference, the screenshot was taken with Alteryx in full-screen mode on a 3440x1440 monitor.

Not the prettiest - but hopefully you get the idea. A few general features I thought might be helpful include:

- Clean / streamlined GUI elements for each "sub-pane"

- Ability to toggle on / off any of the sub-panes

- Ability to resize any of the "sub-panes" as desired

- Ability to customize how many results are included in the results view of each input / output (i.e., either by row count or size)

I'm sure there would be several more neat features a view like this could support, but these are the ones I could think of offhand. To be clear, I wouldn't want this to replace the ability to click any individual tool input / output to only view that data if desired, but rather imagined this "view" could be optionally activated / toggled on and off by double-clicking on the body of a tool (or something like that). Not sure whether this is feasible or would just be too much for certain large tools / datasets, but I think it would be the bee's knees - am I the only one?

Let me know if anyone has any thoughts or feedback to share!

Josh

Hello Community,

I was wondering if there is a tool that could de-duplicate records after serializing (or after using Transpose Tool) with a given priority for each field in one of the keys? i.e.

| ID | Origin | Field Name | Value |
|---|---|---|---|
| 1 | A | NAME | JACK |
| 1 | B | NAME | PETER |
| 1 | B | ZIP CODE | 15024 |
| 1 | C | ZIP CODE | 15024 |
| 1 | D | TYPE | MID |
| 1 | H | TYPE | PKL |

Assuming for the field name NAME, the priority should be [ A, B ]

ZIP CODE -> [ C, B ]

TYPE -> [ H, D ]

The expected outcome for Id 1 should be -> JACK, 15024, PKL

Record discarded -> PETER, 15024, MID

In this case I'm using ID and Origin as keys in the Transpose Tool.

I just want to make sure there is no other route than the Python Tool.
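To pin down the logic being asked for, here is a minimal Python sketch using the sample rows and priorities above. `pick_by_priority` is a hypothetical helper name. The same idea (look up a rank per origin, then keep the best-ranked row per ID and field) could plausibly be built from a rank lookup, a sort, and a keep-first-per-group step, though that is an assumption rather than a tested workflow.

```python
records = [
    (1, "A", "NAME", "JACK"),
    (1, "B", "NAME", "PETER"),
    (1, "B", "ZIP CODE", "15024"),
    (1, "C", "ZIP CODE", "15024"),
    (1, "D", "TYPE", "MID"),
    (1, "H", "TYPE", "PKL"),
]

# Origin priority per field name, best first.
priority = {"NAME": ["A", "B"], "ZIP CODE": ["C", "B"], "TYPE": ["H", "D"]}


def pick_by_priority(records, priority):
    """Keep, for each (ID, field), the value from the best-ranked origin."""
    best = {}
    for rec_id, origin, field, value in records:
        order = priority.get(field, [])
        # Origins missing from the priority list rank last.
        rank = order.index(origin) if origin in order else len(order)
        key = (rec_id, field)
        if key not in best or rank < best[key][0]:
            best[key] = (rank, value)
    return {key: value for key, (rank, value) in best.items()}


winners = pick_by_priority(records, priority)
print(winners)
```

For ID 1 this keeps JACK, 15024 (from origin C), and PKL, matching the expected outcome in the post.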

Thank you

Luis

Hi,

I did do some searching on this matter but I couldn't find a solution to the issue I was having. I made an Analytic App in which the user can select columns from a spreadsheet with 140+ columns. This app looks at the available columns and dynamically updates the list box every time it is run. I wanted users to use the built-in Save Selection so they don't have to check each box for the columns they want every time they run it. However, I seem to have found an issue with the Save Selection option when a header in the source has a comma in it, e.g. "Surname, First Name".

The saved YXWV file seems to store the selection in a comma-delimited way, but without quotes around the headers. As you can see in my example below, when you try to load it again, Alteryx appears unable to parse the values: it treats "Surname" and "First Name" as separate values/fields rather than "Surname, First Name", and it doesn't raise an error when it fails to load the selection.

Last Name=False,First Name=False,Middle Name=False,Surname, First Name=False,

So perhaps Save Selection could put quotes around the values when writing the file, to deal with special characters in the selections for the List Box. I have made a workaround by removing special characters from the headers in my source data, but it's not really ideal.
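The quoting idea can be sketched with Python's `csv` module. This is only an illustration of the technique: the real YXWV format is not documented here, so the `name=value` layout is taken from the example line above, and `save_selection` / `load_selection` are hypothetical names.

```python
import csv
import io

selections = {
    "Last Name": False,
    "First Name": False,
    "Middle Name": False,
    "Surname, First Name": False,
}


def save_selection(selections):
    """Write name=value pairs, quoting any entry that contains a comma."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, quoting=csv.QUOTE_MINIMAL, lineterminator="")
    writer.writerow(name + "=" + str(checked) for name, checked in selections.items())
    return buffer.getvalue()


def load_selection(line):
    """Parse the quoted line back into a name -> checked mapping."""
    result = {}
    for field in next(csv.reader([line])):
        # Split on the last "=" so names containing "=" still work.
        name, _, checked = field.rpartition("=")
        result[name] = checked == "True"
    return result


saved = save_selection(selections)
print(saved)
print(load_selection(saved) == selections)
```

With quoting, the header containing a comma survives the round trip, whereas a plain split on commas would break it into two fields.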

Thanks,

Mark

We use several files that are fairly large (in canvas size). To find where we last left off, or to examine a specific X, Y at a specific zoom, one needs to either remember the tool number to search for or search for a keyword that returns several choices.

My suggested solution would be to create bookmarks. This would allow you to save a named bookmark that stores exactly where (X, Y) you are in the canvas and the zoom level (Z). This way you could easily switch back and forth within a canvas just by clicking the bookmark.

If anyone has ever used a CAD program that allowed the creation of 'Views' within the same diagram...this would be similar.

Thank you

When using the ConsumerView macro from the Join tool palette to match demographic data from Experian, the matching yield is higher than with the Business Match macro. It would be great if a matching key for telephone number could be added to the Business Match (US) tool. The yield might increase, which would add more value to the firmographic data sets than the current matching on D&B business names and addresses only.

Hi,

I've been working on reporting for a while now and figured out that creating subtotals isn't part of any tool.

Any chance this could be implemented in a future version, or is there a macro available?

Thanks

Simon

As you may be aware, localisation is the adaptation of computer software to the regional differences of a target market. Internationalization is the process of designing software so that it can potentially be adapted to various languages without engineering changes.

The idea is to make the Alteryx Designer tool, the web help content, and the example workflows multilingual (possibly with the use of "lic" language files or similar). Hopefully the software and tutorials could all be localised through crowdsourcing initiatives within the Alteryx community.

I sincerely believe this will help the tool get a lot of traction, not only in the US and UK but also in other parts of the world.

Mandarin and Spanish would very likely be the first two language versions...

Top languages by population per Nationalencyklopedin 2007 (2010)

| Language | Native speakers (millions) | Fraction of world population (2007) |
|---|---|---|
| Mandarin | 935 (955) | 14.1% |
| Spanish | 390 (405) | 5.85% |
| English | 365 (360) | 5.52% |
| Hindi | 295 (310) | 4.46% |
| Arabic | 280 (295) | 4.43% |
| Portuguese | 205 (215) | 3.08% |
| Bengali | 200 (205) | 3.05% |
| Russian | 160 (155) | 2.42% |
| Japanese | 125 (125) | 1.92% |
| Punjabi | 95 (100) | 1.44% |

In v10, I am using the summarize tool a lot and getting tired of selecting one or more fields and doing a sum function and having to revisit each summary tool when you add a numeric field upstream... I was hoping there would be a more dynamic method, e.g. select all numeric fields and then doing a SUM on _currentfield_.

Then I remembered the Field Info tool. (on a side note, I'd bet this tool is overlooked a lot). This tool is great because for each numeric field you get Min, Max, Median, Std Dev, Percent Missing, Unique Values, Mean, etc.

The one thing that's missing is SUM. Can you add it?

Also, can you give the user the option to turn off layouts and reports so it runs faster? I only care about the data side.

Or is there another way to sum dynamically selected numeric fields (including Sum on unknown fields)?
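The behaviour being requested (sum every numeric field, whatever fields happen to arrive) can be sketched in Python as follows. The sample rows and the `sum_numeric_fields` helper are made up for this illustration and are not an Alteryx feature.

```python
from numbers import Number

# Made-up sample data: one text field and two numeric fields.
rows = [
    {"region": "East", "sales": 100, "cost": 60.5},
    {"region": "West", "sales": 250, "cost": 120.0},
]


def sum_numeric_fields(rows):
    """Sum every field whose values are numeric, without naming fields up front."""
    totals = {}
    for row in rows:
        for name, value in row.items():
            # bool is a Number subclass in Python, so exclude it explicitly.
            if isinstance(value, Number) and not isinstance(value, bool):
                totals[name] = totals.get(name, 0) + value
    return totals


totals = sum_numeric_fields(rows)
print(totals)  # {'sales': 350, 'cost': 180.5}
```

A numeric field added upstream would be picked up automatically, which is the "dynamic" part of the request.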

It would be great to have a spatial function that could be used to evaluate whether two spatial objects are equal/identical. I see this being available in at least three places:

- An "ST_Equal" Formula function

- A SpatialMatch "Where Target Equals Universe"

- An "Equals" Action in the Spatial Process tool
