Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> at least, allow the tool to use black & white images (I wanted to test it on the MNIST, but the tool tells me that it necessarily needs RGB images) ?
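Until something like this is built in, the second and third points could be handled in a pre-processing step outside the tool. A minimal sketch in plain Python, assuming the training set is a list of (image path, label) pairs and a grayscale image is a grid of 0-255 intensities. `balance_by_label` and `gray_to_rgb` are hypothetical helper names, not part of Alteryx:

```python
import random
from collections import defaultdict


def balance_by_label(samples, seed=0):
    """Downsample so every label keeps the same number of items.

    `samples` is a list of (item, label) pairs. Each label is cut down to
    the size of the rarest label.
    """
    by_label = defaultdict(list)
    for item, label in samples:
        by_label[label].append(item)
    target = min(len(items) for items in by_label.values())
    rng = random.Random(seed)  # fixed seed keeps the selection repeatable
    balanced = []
    for label, items in by_label.items():
        for item in rng.sample(items, target):
            balanced.append((item, label))
    return balanced


def gray_to_rgb(gray):
    """Turn a 2-D grid of intensities into a grid of (r, g, b) triples."""
    return [[(v, v, v) for v in row] for row in gray]


# Hypothetical file names: three "cat" images and one "dog" image.
samples = [("a.png", "cat"), ("b.png", "cat"), ("c.png", "cat"), ("d.png", "dog")]
balanced = balance_by_label(samples)
rgb = gray_to_rgb([[0, 255], [128, 64]])
```

Downsampling to the rarest label is only one way to balance a data set; it throws images away, so oversampling the smaller classes may be preferable when data is scarce.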

Question: will you in the future allow the user to choose between CPU and GPU usage?

In any case, thank you again for this new tool, it is certainly perfectible, but very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible thanks to image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


The download tool is currently a general purpose tool that is used for many different things; from downloading FTP files; to scraping websites.

However, as a general purpose tool, it cannot serve the specific need of scraping a website without doing a huge amount of work to get there. What makes Alteryx great is the fact that it drops the barrier so that regular folks can do some really powerful analytics, but the web scraping capabilities are not yet there and still require a tremendous amount of technical skill to accomplish.

I'll go through this from top to bottom:

- Split capability: The download tool tries to be too many things to too many people. Break it up into its component parts - one for FTP; one for Web Scraping; etc - with deep speciality. You can still keep the download tool as the super-user version but by creating the specialized tools, we can make this much more user-friendly

- Connection: For enterprise users, where there's a locked down connectivity to the internet - there is no way to scrape web content without using CURL. So we need the ability to connect to websites in a way that does not require curl or complex connectivity setups for users to navigate through web proxy settings.

- Alteryx could auto-detect settings by allowing the user to point to the site within a controlled browse form like Excel does

- Parameters: Many websites explicitly support named parameters (using ? notation) - it would be very useful to allow the user to link to these parameters explicitly without having to do complex string concatenations or %20 scrubbing to get rid of non-URL-friendly characters

- Content: Alteryx presents the user with no native ability to process HTML, so all scrubbing to pull out a specific field has to be done through complex read-through of the underlying source of the website (delivered in "DownloadedData") followed by guessing on patterns on how the site does tables or spans etc, followed by complex regex.

- Instead, we could present the user with a view of the web-page and ask them to select the elements that they want

- This would serve the dual purpose of making this user-friendly for regular folks and abstract away the technicalities; but also would allow the download tool to eliminate all the other bits of the page that are not wanted like scripts; interstitial adverts; images; headers & footers etc.

- Improved post / parse capability: Sometimes the purpose of a URL is to generate a download (like the Google Finance API) - again, would be good to observe the user using the target site to record & interpret what they are looking for and what they get (e.g. the file from google)

- HTML & XML types: why not an explicit type in Alteryx for web content?

- Finally - HTML aware. The browse tools are not currently HTML aware, so all the useful formatting to be able to see what's going on, expand nodes, find patterns etc - all this has to be copied out of Alteryx into Notepad ++. Given the ubiquity of HTML parsers and pretty printers and editors, it should be reasonably easy to get a cheap component that can provide this capability
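To illustrate the Parameters and Content points above, here is a rough sketch of what a scraping-specific tool would be doing for the user behind the scenes, written with the Python standard library only. The URL, the parameter names and the sample HTML are made up for illustration, and `build_url` and `TableCellParser` are hypothetical names:

```python
from html.parser import HTMLParser
from urllib.parse import quote, urlencode


def build_url(base, params):
    """Append named parameters, percent-encoding unsafe characters."""
    # quote_via=quote encodes spaces as %20 instead of the default "+".
    return base + "?" + urlencode(params, quote_via=quote)


class TableCellParser(HTMLParser):
    """Collect the text of every <td>/<th> cell, row by row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None   # cells of the row being read, or None
        self._cell = None  # text fragments of the cell being read, or None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        # Text outside a table cell (scripts, headers, ...) is ignored.
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None


url = build_url("https://example.com/search", {"q": "alteryx designer", "page": 2})

html = """
<html><head><script>var x = 1;</script></head><body>
<table>
  <tr><th>Ticker</th><th>Price</th></tr>
  <tr><td>ABC</td><td>12.5</td></tr>
</table>
</body></html>
"""
parser = TableCellParser()
parser.feed(html)
# parser.rows now holds only the table cells; the script content is dropped.
```

This only handles simple, non-nested tables. A point-and-click element picker as suggested above would need a full DOM and selector engine, which is exactly the technical barrier the idea asks Alteryx to hide.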

Access to only MD5 hashes via MD5_ASCII(String) and MD5_UNICODE(String) found under string functions is limiting. Is there a way to access other hashing algorithms, ideally via the crypto algorithms from OpenSSL or the .NET framework?

- https://msdn.microsoft.com/en-us/library/system.security.cryptography.hashalgorithm(v=vs.110).aspx

- https://wiki.openssl.org/index.php/Command_Line_Utilities#Signing_.2F_Digest

Hashing functions are a very useful tool to have. There are many different types of hashes and each one has tradeoffs for different uses. This can range from error checking, privacy shielding, password protection, forensic analysis, message authentication (HMAC) and much more. See: http://stackoverflow.com/questions/800685/which-cryptographic-hash-function-should-i-choose

- For workflows with data containing existing hashes, being able to consistently create hashes from non-hashed data for comparison is useful.

- Hashes are also useful because they are the same outside the Alteryx environment. They can be used to confirm correct operation of a production system or a third party's external process.

Access to only MD5 hashes via MD5_ASCII(String) and MD5_UNICODE(String) found under string functions in the formula tool is a start, but quite limiting.

Further, the ability to use non-cryptographic hashes and checksums would be useful, such as MurmurHash or CRC. https://en.wikipedia.org/wiki/List_of_hash_functions

Having the implementation benefit from hardware acceleration (AES-NI / CUDA) would be a great plus for high volume applications.
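As a point of comparison for the "same outside the Alteryx environment" argument, this is roughly how an external process could compute the same kinds of digests with the Python standard library. It is a sketch, not a statement about how Alteryx implements MD5_ASCII or MD5_UNICODE; the two text encodings used below are an assumption chosen only to show that the encoding changes the result. `hex_digest` is a hypothetical helper name:

```python
import hashlib
import hmac
import zlib


def hex_digest(algorithm, text, encoding="utf-8"):
    """Hash `text` with any algorithm name that hashlib.new() accepts."""
    return hashlib.new(algorithm, text.encode(encoding)).hexdigest()


# The same string gives different MD5 values under different encodings,
# so both sides of a comparison must agree on the byte representation.
md5_utf8 = hex_digest("md5", "abc")
md5_utf16 = hex_digest("md5", "abc", encoding="utf-16-le")

# Cryptographic hashes beyond MD5.
sha256 = hex_digest("sha256", "abc")
blake2b = hex_digest("blake2b", "abc")

# A non-cryptographic checksum (CRC-32), formatted as 8 hex digits.
crc32 = format(zlib.crc32(b"123456789"), "08x")

# Message authentication (HMAC) with a made-up key.
mac = hmac.new(b"secret-key", b"abc", hashlib.sha256).hexdigest()
```

`hashlib.algorithms_available` lists what a given Python build supports; several entries in the list below (for example xxHash, MurmurHash or CityHash) are not in the standard library and would need third-party packages.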

For reference, these are some hash algorithms that could be useful in workflows:

SHA-1

SHA-256

Whirlpool

xxHash

MurmurHash

SpookyHash

CityHash

Checksum

CRC-16

CRC-32

CRC-32 MPEG-2

CRC-64

BLAKE-256

BLAKE-512

BLAKE2s

BLAKE2b

ECOH

FSB

GOST

Grøstl

HAS-160

HAVAL

JH

MD2

MD4

MD6

RadioGatún

RIPEMD

RIPEMD-128

RIPEMD-160

RIPEMD-320

SHA-224

SHA-256

SHA-384

SHA-512

SHA-3 (originally known as Keccak)

Skein

Snefru

Spectral Hash

Streebog

SWIFFT

Tiger

I have used the Publish to Tableau Server macro for over a year. It works fine when I want to overwrite the data.

However, the current macros (from Alteryx Gallery and Invisio) won't work with appending the data. Please modify or develop a workable macro for 'Append the data to Tableau Server'. It will save a lot of time in the daily update process.

Note: I am using Alteryx 11 and Tableau 10.1. Thank you very much.

Hi All,

My company has been using Alteryx Designer for several years now but recently started a pilot for Alteryx Server. One of the issues we have found is that users keep trying to publish workflows that have aliased data connections. As our current Gallery Admin, I do not have permission, nor do I know the wide range of connections that the users are trying to connect to. We would like to give users the ability to create/publish an aliased connection directly to Gallery. When this happens, it would automatically add that user to the data connection. They would also be given the ability to share the data source just like they can share a workflow.

One of our goals with this pilot is to promote self service, but having users wait on Admins to create the connections slows their process down.

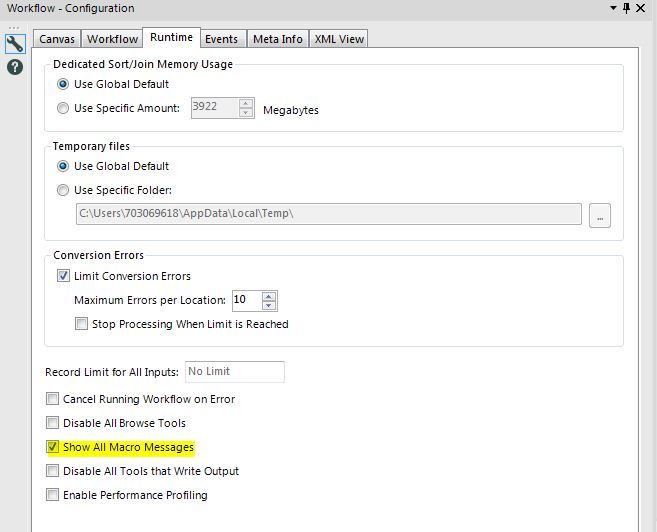

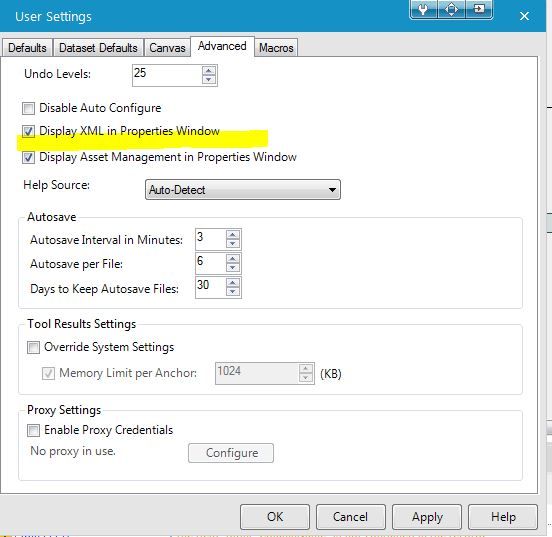

I would love to have a User Setting Default where it allows the "Show All Macro Messages" to be on for all workflows instead of having to turn it on for each workflow.

As a GIS department, we use numerous spatial datasets on a daily basis. Many of these are quite large and we are looking for ways to optimize their performance. Right now, we are forced to use an indexed folder system to increase performance, but we would like to move to Calgary databases. The problem is, that Calgary databases only hold point features which limits the number of our datasets that we can use it with. If we could spatially index line and polygon features as well, that would dramatically increase the usefulness of a Calgary database.

Hi there,

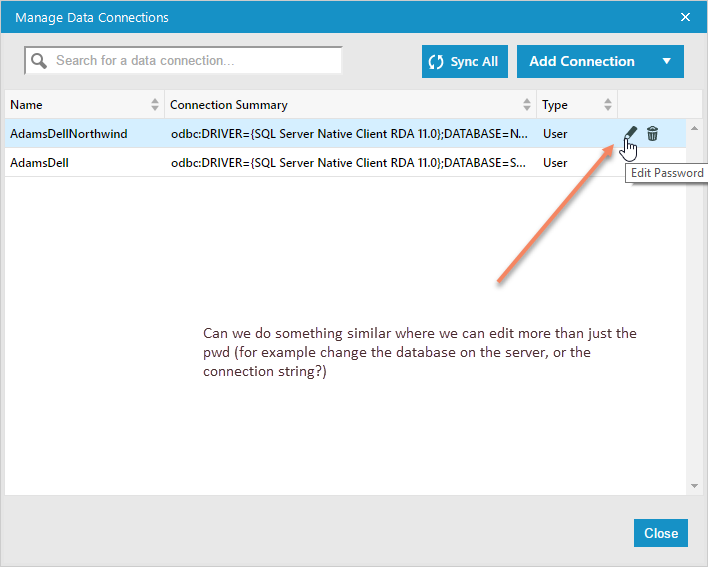

When you use DB connection aliases that are saved in Alteryx, it's currently not easy to edit them when you move a database to a different location.

Can we do something similar to the "Edit Password" function, but which also allows the user to edit the database or server, so that this applies to all workflows using this alias?

When dealing with very large tables (100M rows plus), it's not always practical to bring the entire table back to the designer to profile and understand the data.

It would be very useful if the power of the field summary tool (frequency analysis; evaluating % nulls; min & max values; length of strings; evaluating if the type is appropriate or could be compressed; whether there is whitespace before or after) could be brought to large DB tables without having to bring the whole table back to the client.

Given that each of these profiling tasks can be done as a discrete SQL query; I would think that this would be MASSIVELY faster than doing this client-side; but it would be a bit of a pain to write this tool.
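To make the "discrete SQL query" point concrete, here is a minimal sketch that profiles one column with a single aggregate query, so only one summary row travels back to the client. It uses an in-memory SQLite database purely as a stand-in; the table and column names are made up, `profile_column` is a hypothetical helper, and function names such as `LENGTH` and `TRIM` vary by database (SQL Server uses `LEN`, for instance):

```python
import sqlite3


def profile_column(conn, table, column):
    """Profile one column with a single aggregate query run by the database.

    `table` and `column` are interpolated into the SQL text, so they must
    come from a trusted source (e.g. the database catalog), not user input.
    """
    sql = f"""
        SELECT
            COUNT(*) AS row_count,
            SUM(CASE WHEN {column} IS NULL THEN 1 ELSE 0 END) AS null_count,
            COUNT(DISTINCT {column}) AS distinct_count,
            MIN({column}) AS min_value,
            MAX({column}) AS max_value,
            MAX(LENGTH({column})) AS max_length,
            SUM(CASE WHEN {column} <> TRIM({column}) THEN 1 ELSE 0 END)
                AS untrimmed_count
        FROM {table}
    """
    cur = conn.execute(sql)
    names = [d[0] for d in cur.description]
    return dict(zip(names, cur.fetchone()))


# Tiny stand-in for a large table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (name TEXT)")
conn.executemany(
    "INSERT INTO customers VALUES (?)",
    [("Ann",), (" Bob ",), (None,), ("Ann",)],
)
profile = profile_column(conn, "customers", "name")
null_percent = 100 * profile["null_count"] / profile["row_count"]
```

A frequency analysis would be a second query (`GROUP BY` on the column with a `COUNT(*)`), ideally with a row limit so that high-cardinality columns do not bring the whole table back after all.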

If there is interest in this - I'm more than happy to work with the Alteryx team to look at putting together an initial mockup.

Cheers

Sean

Hi there,

When you connect to a SQL server in 11, using the native SQL connection (thank you for adding this, by the way - very very helpful) - the database list is unsorted. This makes it difficult to find the right database on servers containing dozens (or hundreds) of discrete databases.

Could you sort this list alphabetically?

I would like to request that IBM Big Insights become a supported data source. Currently I have been unable to connect Alteryx Designer to Big Insights through any ODBC driver.

Or ability to batch change the connection string from A --> B for all tools using A.

Or ability to set a default "saved connection" for a workflow and if you change it cascade the change to all tools.

Case: you have numerous connections in a workflow to a database. You then either:

a) move your data somewhere else and need to replace all the connections

b) OR have a standard process for working against DEV, Staging, etc. and want to switch the workflow to use a different saved connection

For the love of god. Put the Escape key to use doing exactly what the name suggests....escaping from windows.

It would be extremely handy to be able to close any open window or dialogue box with the escape key.

This should be an extremely easy fix...

What do you guys think?

-Nick

Even with browse everywhere I see plenty of workflows dotted with browses. Old habits die hard. Maybe a limit configuration for browses would be a good thing. If you've recently browsed a very large set of data you might agree.

Cheers,

Mark

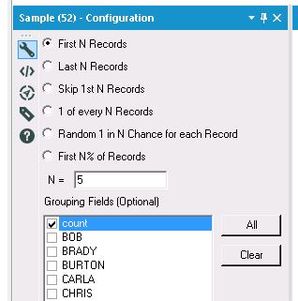

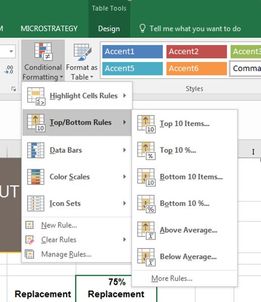

Would love to see a tool that allows you to find the Top N or Bottom N% etc. using a single tool, rather than the current common practices of using 2-3 tools to accomplish this simple task. It's possible some/all of this functionality could be added by simply expanding the current Sample tool to include more options, or at least mirroring the configuration of the Sample Tool in the creation of a new "Top/Bottom Tool."

For example, let's say I wanted to find the top 5 student grades, and then compare all scores to those top 5 grades. I would currently need to do something along the lines of Sort descending (and/or Summarize Tool, if grouping is needed) + Sample Tool (First N Records) + Join the results back to the data. That's anywhere from 3-4 tools to accomplish a simple task that could potentially be done with 1-2.

I'm envisioning this working somewhat like the Top/Bottom rules in Excel Conditional Formatting (see below), and similar to some of the existing options in the Sample Tool (also see below). For example, rather than only being able to select the First N Records in the Sample Tool, I could indicate that I want to select the Top N Records, or the Bottom N% Records. This would prevent the additional step of having to group/sort your data before using the Sample Tool, especially in cases where you're then having to put your records back into their original order rather than leaving them in their grouped/sorted state. You'd still want to have the option of choosing grouping fields if desired. You would also need to have a drop-down field to indicate which field to apply the "Top/Bottom rules" to.

A list of potential "Top/Bottom" options that I believe would be great additions include:

- Top N

- Bottom N

- Top N %

- Bottom N %

- Above Average

- Below Average

- Within a Percentile Range (i.e. "Between 20-30%")

- Skip Top N

- Skip Bottom N

The value added with just the options above would be huge in helping to streamline workflows and reduce unnecessary tools on the canvas.
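For anyone who wants to pin down the exact semantics of these options, a few of them can be sketched in a handful of lines of Python. This is only an illustration of the intended behaviour, not a proposal for how the tool should be implemented; the function names and the sample grades are made up, and rounding the percentage up is an assumption:

```python
import heapq
import math
from statistics import mean


def top_n(rows, key, n):
    """Return the n rows with the largest key, largest first."""
    return heapq.nlargest(n, rows, key=key)


def bottom_n(rows, key, n):
    """Return the n rows with the smallest key, smallest first."""
    return heapq.nsmallest(n, rows, key=key)


def top_percent(rows, key, percent):
    """Return the top `percent` % of rows, rounding the row count up."""
    n = math.ceil(len(rows) * percent / 100)
    return top_n(rows, key, n)


def above_average(rows, key):
    """Keep rows above the mean, preserving the original record order."""
    avg = mean(key(r) for r in rows)
    return [r for r in rows if key(r) > avg]


def score(row):
    """The field the Top/Bottom rule is applied to."""
    return row[1]


grades = [("Ana", 91), ("Ben", 78), ("Cy", 85), ("Dee", 62), ("Eli", 99)]

best_two = top_n(grades, score, 2)
worst_one = bottom_n(grades, score, 1)
top_40 = top_percent(grades, score, 40)
above = above_average(grades, score)
```

Note that `above_average` leaves the records in their original order, which is the "no re-sorting afterwards" behaviour described above; grouping would apply the same functions to each group separately.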

When building macros - we have the ability to put test data into the macro inputs, so that we can run them and know that the output is what we expected. This is very helpful (and it also sets the type on the inputs)

However, for batch macros, there seems to be no way to provide test inputs for the Control Parameter. So if I'm testing a batch macro that will take multiple dates as control params to run the process 3 times, then there's no way for me to test this during design / build without putting a test-macro around this (which then gets into the fact that I can't inspect what's going on without doing some funkiness)

Could we add the same capability to the Control Parameter as we have on the Macro Input to be able to specify sample input data?

Thank you to @JoeM for recent training on macros, and @NicoleJohnson for pointing out some of the challenges.

When writing an iterative macro, it is a little bit difficult to debug, because when you run it in designer mode it only does one iteration and stops.

Could we add the capability to the designer itself to be able to run the second and third iteration using the test data built into the macro input tool? Even something as simple as an option to run X iterations; or when it's run the first iteration allow me to look at what happened and trigger iteration 2 (or to trigger a run-through to completion) would be immensely helpful.

While you can do this with a test-flow wrapped around a macro, macro development is a bit of a black box because Alteryx doesn't natively have the ability to step into a macro during run-time and pause it to see what's happening on iteration 1 or 2 or n and why it's not terminating etc. So putting the ability to run in a debug mode would be HUGELY helpful.
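The "run X iterations and let me inspect each one" behaviour asked for here can be sketched outside Alteryx as a loop that keeps a snapshot per iteration. This is a conceptual illustration only: `run_iterations` and the sample `halve_until_small` step are hypothetical, with the step standing in for the macro body and its two outputs standing in for the macro's normal output and its iteration (loop) output:

```python
def run_iterations(step, data, max_iterations):
    """Run `step` repeatedly, keeping a snapshot after every iteration.

    `step` takes the current records and returns (done_records, loop_records).
    The run stops when nothing is sent back around or the limit is reached.
    """
    snapshots = []
    loop = list(data)
    for i in range(1, max_iterations + 1):
        done, loop = step(loop)
        snapshots.append({"iteration": i, "done": done, "loop": loop})
        if not loop:
            break
    return snapshots


def halve_until_small(records):
    """Example step: values below 10 are finished, the rest are halved."""
    done = [r for r in records if r < 10]
    loop = [r // 2 for r in records if r >= 10]
    return done, loop


# Stop after three iterations even though one record is still looping.
snapshots = run_iterations(halve_until_small, [5, 24, 100], max_iterations=3)
```

Looking at the last snapshot's `loop` entry shows which records are still going around, which is the information needed to work out why a macro is not terminating.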

Hi there,

Adam ( @AdamR_AYX ), Mark ( @MarqueeCrew) and many others have done a great job in putting together super helpful add-in macros in the CREW pack - and James ( @jdunkerley79 ) has really done an incredible job of filling in some gaps in a very useful way in the formula tools.

Would it be possible to include a subset of these in the core product as part of the next release?

I'm thinking of (but others will chime in here to vote for their favourite):

- Unique only tool (CReW)

- Field Sort (CReW)

- Wildcard XLSX input (CReW) - this would eliminate a whole category of user queries on the discussion boards

- Runner (CReW) - although this may have issues with licensing since many people don't have command line permission. Alteryx really does need the ability to do chained dependency flows in a smoother way.

- Date Utils (JDunkerley) - all of James's Date utils - again, these would immediately solve many of the support questions asked on the discussion forum

I think that these would really add richness & functionality to the core product, and at the same time get ahead of many of the more common queries raised by users. I guess the only question is whether the authors would have any objection?

Thank you

Sean

I recently loaded the new V11 and am getting used to it. One immediate gripe is that the new version of the Formula Tool no longer supports multiple-field actions. In the prior version I could change data types on many fields at once, and I could move multiple fields in a block at once. There were a few other things, but these are the ones I am sorely missing on my first use of V11. I created about 20 fields in quick succession, just getting names down and then going back and putting in formulas, which were variations on a theme. When done, I noticed the default data type was V_WString and I wanted an integer. In the past it was no big deal, because I could select the block or interspersed fields and then right-click to change the data type for all of them at once. It was very handy and now appears to be gone. Please bring these things back.

I've seen the question in the community and have had the need to cascade fields in a record. On certain conditions, field 2 moves to field 1 and field 3 moves to field 2 etcetera. This can be a complicated process remembering if the moves were made and which field contents should be where.

One solution might be to define the cascade condition as an expression and then map the fields as FROM TO and allow for defaulting into a field (ie: Null()).

Another solution might be to reference the input data directly. You could get field values from the input stream before enhancements to data were made.

At the end of a process, Delta Flags (fields changed during the formula) could be created if input/output of selected variables were changed.
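A rough sketch of the cascade-plus-delta-flags idea, assuming the cascade condition is simply "the field is empty" (the post leaves the condition open, so `is_empty` is a parameter). `cascade_left` and the phone fields are hypothetical names used only for illustration:

```python
def cascade_left(record, fields, is_empty=lambda v: v is None):
    """Shift non-empty values toward the first field, padding with None.

    Returns the new record plus a dict of delta flags marking which of the
    cascaded fields ended up with a different value than in the input.
    """
    # Always read from the untouched input record, never from partial results.
    values = [record[f] for f in fields if not is_empty(record[f])]
    values += [None] * (len(fields) - len(values))
    updated = dict(record)
    flags = {}
    for field, value in zip(fields, values):
        flags[field] = record[field] != value
        updated[field] = value
    return updated, flags


row = {"id": 7, "phone1": None, "phone2": "555-0101", "phone3": "555-0199"}
new_row, changed = cascade_left(row, ["phone1", "phone2", "phone3"])
```

Because every value is taken from the original `record`, there is no need to remember which moves have already been made, which is the bookkeeping problem described above.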

A stream of thoughts....

Please enhance the input tool to have a feature you could select to test if the file is there and another to allow the workflow to pause for a definable period if the input file is locked by another user, then retry opening. The pause time-frame would be definable for N seconds and the number of iterations it would cycle through should be definable so you can limit how many attempts to open a file it would try.

File presence should be something we could use to control workflow processing.

A use case would be a process that runs periodically and looks to see if a file is there and if so opens and processes it. But if the file is not there then goes to sleep for a definable period before trying again or simply ends processing of the workflow without attempting to work any downstream tools that might otherwise result in "errors" trying to process a null stream.

An extension of this idea and the use case would be to have a separate tool that could evaluate a condition like a null stream or field content or file not found condition and terminate the process without causing an error indicator, or perhaps be configurable so you could cause an error to occur or choose not to cause an error to occur.

Using this latter idea, we have an enhanced input tool that can pass a value downstream or generate a null data stream to the next tool. This next tool, like a Filter tool, can then evaluate a condition (a null stream, a file-not-found indicator, or some other condition) and terminate processing per its configuration, either with or without a failure indicated, according to the wishes of the user. I have had times when a file was not there and I just wanted the workflow to stop without throwing errors; other times I may want it to error out so that I investigate. In other scenarios, my data goes through a filter or two, no data passes the last filter, and the downstream tools still run and generally fail because they have no data to act on. I don't want that: it may be perfectly valid that on a Sunday or holiday no data passes the filters.

Having meandered through this, I sum up with the ideal being to enhance the input tool so it can test file presence and pass that information on to another tool that evaluates it and controls the workflow run accordingly. As a separate tool, it could be applied to a wider variety of scenarios and test a broader scope of conditions to decide whether to proceed or terminate the workflow.
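The behaviour described above (test for presence, pause, retry N times, then either stop quietly or raise an error) could look roughly like this in Python. It is a sketch under assumptions: `wait_for_file` and `run_workflow` are hypothetical names, and whether a file locked by another user actually fails to open depends on the operating system and on how the other program opened it:

```python
import os
import time


def wait_for_file(path, retries=3, pause_seconds=1.0):
    """Return True once `path` exists and can be opened, else False.

    Checks up to `retries` times, sleeping `pause_seconds` between attempts.
    """
    for attempt in range(1, retries + 1):
        if os.path.exists(path):
            try:
                with open(path, "rb"):
                    return True
            except OSError:
                pass  # present but not readable yet (e.g. locked); retry
        if attempt < retries:
            time.sleep(pause_seconds)
    return False


def run_workflow(path, process, on_missing="skip"):
    """Process the file if it is available; otherwise end quietly or raise."""
    if wait_for_file(path, retries=2, pause_seconds=0.01):
        with open(path, "r", encoding="utf-8") as handle:
            return process(handle.read())
    if on_missing == "error":
        raise FileNotFoundError(path)
    return None  # clean exit: downstream steps never see an empty stream
```

The `on_missing` switch mirrors the "configurable so you could cause an error to occur or choose not to" part of the idea: a scheduled run on a Sunday or holiday can end without an error, while a run where the file is mandatory can still fail loudly.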
