Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by showing the dimensional constraints next to each of the pre-trained models;

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images);

> and, at the very least, by allowing the tool to use black-and-white images (I wanted to test it on MNIST, but the tool says it requires RGB images).
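A "true tool to divide the training data" could be as simple as a per-label balanced split. The Python sketch below illustrates that idea under these assumptions: images are given as (path, label) pairs, every label is downsampled to the size of the rarest label, and the function name `balanced_split` is hypothetical, not part of the Image Recognition tool.

```python
import random
from collections import defaultdict


def balanced_split(items, train_fraction=0.8, seed=0):
    """Split (path, label) pairs so each label contributes equally.

    Hypothetical helper: every label is first downsampled to the size of the
    rarest label, then each label's images are split with the same fraction.
    """
    by_label = defaultdict(list)
    for path, label in items:
        by_label[label].append(path)

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    per_label = min(len(paths) for paths in by_label.values())
    n_train = int(per_label * train_fraction)

    train, validation = [], []
    for label in sorted(by_label):
        paths = by_label[label][:]
        rng.shuffle(paths)
        paths = paths[:per_label]  # downsample to the rarest label's count
        train += [(p, label) for p in paths[:n_train]]
        validation += [(p, label) for p in paths[n_train:]]
    return train, validation
```

With 10 "cat" images and 6 "dog" images, both labels are cut to 6, giving 4 + 4 training images and 2 + 2 validation images.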

Question: will you, in the future, allow the user to choose between CPU and GPU usage?

In any case, thank you again for this new tool. It certainly has room for improvement, but it is very simple to use, and I sincerely think it will help many more people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


The Input Data tool has a "Field Length" option for CSV files. The default is 254 characters. In most cases, this is woefully inadequate. I tend to add several zeroes to the end to prevent truncation. When I don't remember to do this, I get flooded with conversion errors:

"Input Data (2) The field "hours" was truncated in record #38"

I want to set a global default for field length, which I can override per tool, so I don't have to do this every time.
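Until a global default exists, one workaround is to measure the longest value in each column before choosing a field length. This is a minimal Python sketch using the standard `csv` module; the helper names are hypothetical, and the only Alteryx-specific fact it relies on is the 254-character default mentioned above.

```python
import csv
import io

DEFAULT_FIELD_LENGTH = 254  # the Input Data tool's default for CSV fields


def longest_values(csv_text):
    """Return {column: length of its longest value} for CSV text with a header row."""
    reader = csv.DictReader(io.StringIO(csv_text))
    longest = {name: 0 for name in reader.fieldnames or []}
    for row in reader:
        for name, value in row.items():
            if name is None:  # extra unnamed fields on a malformed row
                continue
            longest[name] = max(longest[name], len(value or ""))
    return longest


def fields_at_risk(csv_text, field_length=DEFAULT_FIELD_LENGTH):
    """Columns whose longest value would be truncated at field_length."""
    return sorted(name for name, n in longest_values(csv_text).items() if n > field_length)
```

Running `fields_at_risk` on a sample of the file shows which fields (like "hours" above) need a larger length before the conversion errors appear.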

- Category Input Output

- Data Connectors

- Feature Request

- General

I hope that there will be a radio button or a check box where Auto Save on Run can be disabled. Auto Save on Run is a bad idea. I often go into workflows and only need one report, so I break a bunch of connections to other reports, maybe make some other small ad hoc or one-off changes, and then run the workflow. I get my one (maybe modified) report and close without saving, so the next time I open the workflow it's as it should be.

With Auto Save on Run, I'd have to undo everything I changed. And what if it's not my workflow to change? If there is no option to turn it off, I'd have to make a copy of the workflow, open it and make changes, run it, close it, and then delete the copy.

In general, you should never be saving unless it is a deliberate act performed by the user.

- Feature Request

- General

When opening an App in Designer, you are generally opening it to work on it. It should not open as an App but rather as a Workflow. Perhaps if you hold down the Ctrl or Shift key while opening the App, it could open as an App so you can test it out.

- Feature Request

- General

Some of us work in teams to build complex workflows, resulting in various versions that have to be stitched together. It would be amazing to have the workflow on a shared drive and have a mode where multiple users can build, review, and modify it simultaneously. (This was one of the biggest selling points in our company's migration from Microsoft Office to Google Suite.)

This would promote collaboration, learning, and more efficient, quality-driven workflows.

- Feature Request

- General

As Alteryx becomes more focussed on the Enterprise, it is important that we build capabilities that support the needs of large-scale BI.

One of these critical needs is dealing with heterogeneous data from different systems that use different IDs for every critical entity / concept (e.g. client, product).

Here's the example:

Problem:

- In any large enterprise, there are several thousand different line-of-business systems

- Each of these was probably built at a different time, and uses a different key for specific concepts - like Client & Product

- Most large enterprises that I've worked at do not have a pre-built way of transforming these codes so...

- This means that any downstream analytics finds it almost impossible to give single-view-of-customer or single-view-of-product.

Solution option A:

Re-engineer all upstream systems. Not feasible.

Solution option B:

Expect some reference-data team to fix this by building translations. More feasible, but not fast.

Remaining Solution Option:

As Kimball described, the only real way is to define a set of enterprise dimensions: the defined master list of critical concepts that you need to slice and dice by (client, product, currency, shipping method, etc.), in a source-system-agnostic way.

Then you need a method in the middle to transform incoming data to use these codes. This process is called "Conforming".

What would this look like in Alteryx?

Setup

- We would use the Connect product to define a new dimension, say "Product".

- Give this a unique ID which is source-system independent, and then add the attributes that are important for analytics (product type, category, manufacturer, etc.).

- Then decide how to handle change (slowly changing dimension, SCD type 0, 1, 2, etc.). Alteryx should take full responsibility for managing this SCD history, as many competitors do.

- We then create a list of possible synonym types (within Connect). For example, a product may have a synonym ID from your supplier, from your ERP system and from your point-of-sale system. That's 3 different IDs for any product.

- We then load up the master data - this is painful but necessary

In Use:

- I read data into Alteryx via any input tool

- I bring in a "Conforming" tool off the toolbox (new tool which is needed)

- It asks me which column or columns I wish to conform

- For each - it asks me which synonym type to use

- It then adds a translated column for me to use, which ties back to the enterprise dimension, and outputs as errors any values for which no synonym is found.
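The "In Use" steps above amount to a lookup from (synonym type, source ID) to an enterprise ID, with misses routed to an error output. A minimal Python sketch, assuming the master data is already loaded in memory; `PRODUCT_SYNONYMS`, `conform` and the `_conformed` column suffix are hypothetical, and SCD history is left out entirely.

```python
# Hypothetical master data: (synonym_type, source_id) -> enterprise product ID.
PRODUCT_SYNONYMS = {
    ("ERP", "P-1001"): 1,
    ("POS", "0042"): 1,
    ("Supplier", "ACME-77"): 2,
}


def conform(rows, column, synonym_type, synonyms=PRODUCT_SYNONYMS):
    """Add '<column>_conformed' holding the enterprise ID; return (conformed, errors).

    Rows whose value has no synonym of the requested type are passed to the
    error list unchanged, so they can be reviewed and mapped later.
    """
    conformed, errors = [], []
    for row in rows:
        key = (synonym_type, row[column])
        if key in synonyms:
            conformed.append({**row, f"{column}_conformed": synonyms[key]})
        else:
            errors.append(row)
    return conformed, errors
```

Because both "P-1001" (ERP) and "0042" (POS) resolve to enterprise ID 1, data from the two systems can then be reported as the same product.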

Impact:

In smaller BI contexts, or quick rapid-fire BI, you don't have to worry about this. But as soon as you go past a few hundred line-of-business systems and are trying to do enterprise reporting, you really have to take this seriously. This is a HUGE part of every BI person's role in a large enterprise, and it is painful, slow and not very rewarding. If we could create a simple-to-use, high-velocity conforming process, this would absolutely tear the doors off enterprise BI, and no one else is doing this yet!

+ @AshleyK @BenG @NickJ @ARich @patrick_digan @JoshKushner @samN @Ari_Fuller @Arianna_Fuller

- Feature Request

After hitting "Test" in Workflow Dependencies:

Failed result = Bold red text (and a message)

Success result = Nothing?

Maybe we could get bold green text letting us know that the test completed successfully.

- Documentation

- Feature Request

In every application, be it in Designer or on the Server product, please make version number easily available in Help --> About and make it copy-able so that we can quickly copy-paste it when submitting a case.

Currently, the Server product does not even have a menu item to quickly be able to see what exact version number it is on.

- Feature Request

The Python tool has been a tremendous boon in being able to add capability that is not yet available in the Alteryx platform.

It would make the Python tool much more usable and useful if you could define the inputs explicitly, rather than relying on the good behaviour of both the user and the Python code that reads the inbound data (Alteryx.read("#1")).

This is not something the Jupyter notebook code interface can handle directly (because the notebook has no privileged knowledge of the workflow outside it), so it may be best handled by the container itself.

The key here is that if my Python app requires 2 inputs, it should be possible to define these explicitly so that we can test, prevent errors and make this more bullet-proof.

The same would apply to the outbound nodes of the Python tool.
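One way to picture "defining the inputs explicitly" is a declaration the container checks before the script runs. This is a minimal Python sketch; `InputSpec` and `validate_inputs` are hypothetical names, and the check works on plain column-name lists rather than on the real `ayx` package, whose internals are not assumed here.

```python
from dataclasses import dataclass, field


@dataclass
class InputSpec:
    """Hypothetical declaration of one input anchor the script expects."""
    name: str                                    # anchor name, e.g. "#1"
    required_columns: set = field(default_factory=set)


def validate_inputs(specs, connected):
    """Check connected inputs against declared specs before the script runs.

    connected maps anchor name -> list of column names actually arriving.
    Returns human-readable problems; an empty list means everything matches.
    """
    problems = []
    for spec in specs:
        if spec.name not in connected:
            problems.append(f"input {spec.name} is not connected")
            continue
        missing = spec.required_columns - set(connected[spec.name])
        if missing:
            problems.append(f"input {spec.name} is missing columns {sorted(missing)}")
    declared = {s.name for s in specs}
    for extra in sorted(set(connected) - declared):
        problems.append(f"input {extra} is connected but not declared")
    return problems
```

The same pattern could be mirrored for outputs, so a script that declares two outbound anchors fails fast if it writes to only one.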

- API SDK

- Category Developer

- Feature Request

- General

The single feature I miss most in Alteryx is the ability to restart the workflow from wherever I want using built-in caching. I have used the 'Cache Dataset V2' macro, but it is too inflexible and doesn't make me a happy Alteryx user. I would like to see a more flexible, quicker way of working with cached data.

On a single tool in the workflow, I want to be able to set these options:

- Enable cache: always use cached data from this node when possible.

- Run to this node: run from the start, or from a node with caching enabled, to this node.

There should be plenty of workflow-level options for creating and deleting cached data. Examples:

- Enable data cache on all nodes: always use cached data on all nodes in the workflow.

- Enable data cache on end nodes: cache data on all 'Run to this node' nodes.

...and so on. These are just a few examples, but there should be many options and shortcut keys around the cached-data functionality in the workflow.
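The proposed "Enable cache" and "Run to this node" options map naturally onto a dependency graph in which a node reuses a stored result instead of re-running its upstream tools. A toy Python sketch; the `Workflow` class is hypothetical and says nothing about how Designer actually executes workflows. For brevity it does not deduplicate shared upstream nodes within a single run.

```python
class Workflow:
    """Toy DAG illustrating per-node caching and 'run to this node'."""

    def __init__(self):
        self.funcs, self.parents = {}, {}
        self.cache_enabled, self.cache = set(), {}
        self.executed = []  # records which nodes actually ran, in order

    def add(self, name, func, parents=(), cache=False):
        """Register a node computed by func from its parents' results."""
        self.funcs[name], self.parents[name] = func, list(parents)
        if cache:
            self.cache_enabled.add(name)

    def run_to(self, name):
        """Compute `name`, starting from cached results where they exist."""
        if name in self.cache:
            return self.cache[name]
        inputs = [self.run_to(p) for p in self.parents[name]]
        self.executed.append(name)
        result = self.funcs[name](*inputs)
        if name in self.cache_enabled:
            self.cache[name] = result
        return result
```

If only the input node has caching enabled, a second `run_to("total")` skips the input and re-runs just the downstream nodes.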

- Feature Request

- General

The SQL editing screen has recently been changed (thank you @JPoz and team!) and now has syntax indenting and keyword coloring.

Could I ask that you make a minor change to the indenting, so that the ON clause is indented underneath the JOIN?

    Select
        Field1,
        Field2,
        field3
    from
        Table1
    inner join table2
        On Table1.key = table2.key
        and table1.keyb = table2.keyb
    inner join table3
        on table3.key = table1.key
        and table3.date = table1.date

- Feature Request

- General

- Tool Improvement

I suggest that it would be beneficial to add a column filter that can automatically remove columns based on a condition, such as removing columns where all values are NULL or where the values contain something specific.

It should have True and False outputs, like the normal Filter tool, so you can check what is being removed.

For example, it would help when you get poorly formatted Excel sheets that add hundreds of redundant columns, or when your workflow has generated NULL columns that should be removed, without having to Transpose, Filter, Cross Tab, etc. to clear them out.
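The behaviour described maps to evaluating a condition per column instead of per row. A minimal Python sketch; `split_columns` and `all_null` are hypothetical helpers, and treating empty strings as NULL is an assumption made for illustration.

```python
def split_columns(rows, condition):
    """Split columns into (kept, removed), like a Filter tool's two outputs.

    rows is a list of dicts sharing the same keys; condition receives the
    list of one column's values and returns True if that column is removed.
    """
    columns = list(rows[0]) if rows else []
    removed = [c for c in columns if condition([r[c] for r in rows])]
    kept = [c for c in columns if c not in removed]
    kept_rows = [{c: r[c] for c in kept} for r in rows]
    removed_rows = [{c: r[c] for c in removed} for r in rows]
    return kept_rows, removed_rows


def all_null(values):
    """True when every value is None or an empty string (assumed NULL rule)."""
    return all(v is None or v == "" for v in values)
```

The second output plays the role of the "check what is being removed" anchor, and any other column-level rule can be passed in place of `all_null`.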

Thanks,

Doug

- Feature Request

- New Tool

I would like to see functionality that lets the user select a specific tool (or tools) and run just those. It looks like the workflow caches data at each tool, so it should be doable to run a specific tool from any point.

I often run my new workflow, realise I have forgotten a Browse somewhere, and then have to add it and run the whole thing again. Instead, it would be ideal to insert my Browse as normal, select the tool before that Browse and run just that one.

Do you think this would also be a useful capability?

- Feature Request

I enjoy using Alteryx. It saves me a lot of time compared to writing scripts manually. But one of my frustrations is the lack of 'intelligence' in the IDE. Please make it so that if I rename a column in a Select tool or a Join, every occurrence of that variable/column in Selects, Summarize tools, formulae and probably all tools downstream of the Select tool is renamed as well. In other IDEs, I believe this is called refactoring. It doesn't seem like a big feature to build, it would save enormous amounts of time, and it would make me very happy.
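For formula text, the rename request boils down to rewriting every bracketed field reference downstream. A minimal Python sketch; `rename_field` and `rename_downstream` are hypothetical helpers, and a real implementation would also need to update field lists in tools such as Select, Join and Summarize, not just expressions.

```python
import re


def rename_field(expression, old, new):
    """Rewrite [old] field references in a formula expression to [new].

    Only the exact bracketed form is matched, so '[Sales Tax]' and the bare
    word Sales are untouched (a string literal that itself contains '[Sales]'
    would still be rewritten).
    """
    pattern = re.compile(r"\[" + re.escape(old) + r"\]")
    return pattern.sub(lambda _match: f"[{new}]", expression)


def rename_downstream(expressions, old, new):
    """Apply the rename to every downstream tool's expression (tool -> text)."""
    return {tool: rename_field(expr, old, new) for tool, expr in expressions.items()}
```

For example, `rename_field('IF [Sales] > 100 THEN [Sales Tax] ELSE "Sales" ENDIF', "Sales", "Revenue")` changes only the first reference.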

While we're on the 'intelligence' of the IDE, there is a small, easily fixable bug. When I have a variable with spaces in the middle, for example 'This is my column name', and start typing "[Thi" in the code field, the drop-down box suggests "[This is my column name]". All good so far. But if I get a little further into the variable name and type "[This i" instead, the drop-down box still suggests "[This is my column name]", and when I click it, the result is "[This [This is my column name]". Likewise, if I type "[This is my col", the result is "[This is my [This is my column name]". Clearly this could be avoided by using column names with underscores or hyphens, but I wanted to highlight the poor functionality here.
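The results described suggest the completion replaces only the text after the last space. The expected behaviour is sketched below in Python: it treats everything from the last unclosed `[` before the cursor as the partial token. `complete_field` is a hypothetical name, not Designer's code.

```python
def complete_field(text, cursor, field_name):
    """Replace the partially typed '[...' before cursor with '[field_name]'.

    Returns (new_text, new_cursor). The partial token starts at the last '['
    before the cursor, so spaces inside the field name do not split it.
    """
    start = text.rfind("[", 0, cursor)
    if start == -1 or "]" in text[start:cursor]:
        start = cursor  # no open bracket: insert at the cursor instead
    completed = f"[{field_name}]"
    return text[:start] + completed + text[cursor:], start + len(completed)
```

Typing "[This i" and picking the suggestion then yields "[This is my column name]" rather than "[This [This is my column name]".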

Kind regards,

Ben Hopkins

- Feature Request

Idea:

Let's add support for PMML (Predictive Model Markup Language), the universal standard, so we can deploy our predictive models rapidly.

Rationale:

Most of the predictive models created are essentially built to be deployed into well known enterprise level decisioning tools.

These decisioning tools are used daily at banks, non-bank financial institutions, credit card issuers and insurers.

If Alteryx wants to replace SAS, SPSS and similar competitors, PMML support seems to be a must.

They all share a common markup language, and with the newer versions we can deploy almost all data pre-processing steps too.

Current status in Alteryx, as far as I'm aware:

The only place where Alteryx and PMML come close is the following link: http://www.alteryx.com/topic/pmml, which promised to include PMML in the upcoming releases in 2012. Since then, there has been no word of it. The writer has already retired, and nothing has been said since 2012 (3 years is a long time).

What to do:

One easy route already exists for R: this is a Java library for converting R models to PMML https://github.com/jpmml/jpmml-converter
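To give a sense of what a PMML export involves, here is a minimal Python sketch that writes a fitted linear regression as a PMML 4.4 `RegressionModel` using only the standard library. It illustrates the format only; it is not how Alteryx or the jpmml tools work, and real exports also carry data transformations, model metadata and categorical predictors, which are omitted. The function name `linear_model_to_pmml` is hypothetical.

```python
import xml.etree.ElementTree as ET

PMML_NS = "http://www.dmg.org/PMML-4_4"
ET.register_namespace("", PMML_NS)  # serialize PMML as the default namespace


def _q(tag):
    """Namespace-qualify a PMML element name."""
    return f"{{{PMML_NS}}}{tag}"


def linear_model_to_pmml(target, intercept, coefficients):
    """Write target = intercept + sum(coef * field) as a minimal PMML document.

    coefficients maps predictor field name -> coefficient (continuous fields only).
    """
    pmml = ET.Element(_q("PMML"), version="4.4")
    ET.SubElement(pmml, _q("Header"), description="linear regression sketch")

    fields = list(coefficients) + [target]
    dictionary = ET.SubElement(pmml, _q("DataDictionary"), numberOfFields=str(len(fields)))
    for name in fields:
        ET.SubElement(dictionary, _q("DataField"), name=name,
                      optype="continuous", dataType="double")

    model = ET.SubElement(pmml, _q("RegressionModel"), functionName="regression")
    schema = ET.SubElement(model, _q("MiningSchema"))
    for name in coefficients:
        ET.SubElement(schema, _q("MiningField"), name=name)
    ET.SubElement(schema, _q("MiningField"), name=target, usageType="target")

    table = ET.SubElement(model, _q("RegressionTable"), intercept=str(intercept))
    for name, coef in coefficients.items():
        ET.SubElement(table, _q("NumericPredictor"), name=name, coefficient=str(coef))
    return ET.tostring(pmml, encoding="unicode")
```

The resulting XML is the kind of document that PMML-compliant scoring engines are designed to import.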

Clients:

FICO, one of the best-known credit scoring companies worldwide, is also a vendor of decisioning software. They also have an Analytics Modeler Suite. All FICO systems are PMML compliant. Check it out: http://www.decisionmanagementsolutions.com/2014/10/17/first-look-fico-analytic-modeler-suite-jtonedm...

Experian is another of the big software vendors in the analytics space with decision management tools, and they are actually an Alteryx partner too. Their well-known, widely implemented PowerCurve tool also supports PMML import. Check it out: http://jtonedm.com/2014/05/13/first-look-experian-powercurve-update/

IBM ODM is another vendor of enterprise-level decisioning tools. It also has PMML integration; see: http://www.prolifics.com/blog/odm-integration-spss-predictive-analysis-suite-part-1-pmml-import

Competitor status:

SAS supports PMML export from SAS Enterprise Miner

IBM SPSS products (Modeler and Statistics) offer a great deal of support for PMML

KNIME is one of the leaders in processing PMML documents

RapidMiner has a PMML extension

MicroStrategy strongly supports the standard

- Feature Request

The data profiling feature in the Browse tool is great; however, when you're working with an extremely large data set, it can take a long time to fully render.

My idea is for Alteryx to determine up front whether the data is so large that profiling it will take a substantial amount of time. If so, it should not profile immediately; instead, it should display a message where the profile info would be, letting the user know that it may take a while to generate, with a "Generate Profile" button in case the user needs to see it.

Another option would be to profile only a sample of the data and give the user the option to profile everything.
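The sampling option could rely on reservoir sampling, which draws a uniform sample in one pass without knowing the row count in advance. A minimal Python sketch; the row limit, the sample size and the three-statistic `profile` are hypothetical stand-ins, not the Browse tool's real thresholds or profile contents.

```python
import random


def reservoir_sample(records, k, seed=0):
    """Uniform sample of k records from an iterable of unknown length (Algorithm R)."""
    rng = random.Random(seed)
    sample = []
    for i, record in enumerate(records):
        if i < k:
            sample.append(record)
        else:
            j = rng.randint(0, i)  # inclusive on both ends
            if j < k:
                sample[j] = record
    return sample


def profile(values):
    """Tiny stand-in for a column profile: count, nulls, distinct values."""
    return {
        "count": len(values),
        "nulls": sum(v is None for v in values),
        "distinct": len(set(values)),
    }


def profile_column(values, row_limit=100_000, sample_size=10_000):
    """Profile everything for small data; otherwise profile a sample and flag it."""
    if len(values) <= row_limit:
        return {"sampled": False, **profile(values)}
    return {"sampled": True, **profile(reservoir_sample(values, sample_size))}
```

The `sampled` flag is what the UI would use to show a "Profile everything" button next to the partial results.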

Thanks,

Jimmy

- Feature Request

- General

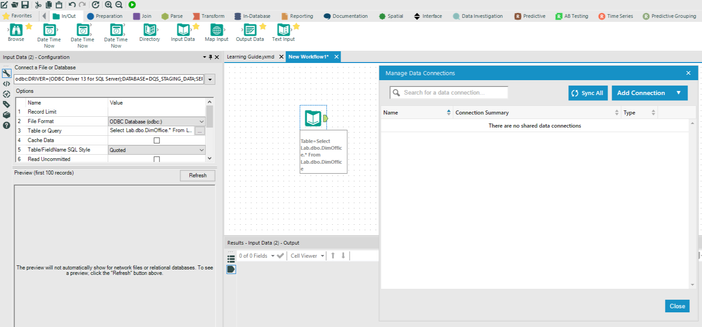

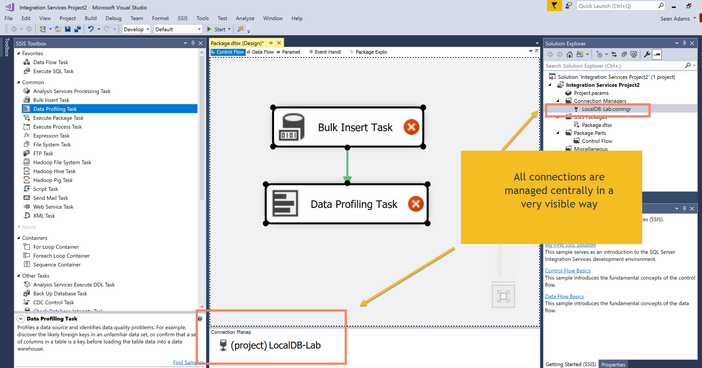

When I add a data connection to my canvas - it's only added to the Data Connections window under certain circumstances (e.g. when I use an alias, or the SQL connection wizard) rather than showing ALL data connections.

Given the importance of data connections for Alteryx workflows, it would be better if ALL data connections were grouped together under a Data Connection Manager that is as visible as the Results window rather than buried deep in the menu system. You could then also use this spot to change, share, alias, etc.

In Microsoft SSIS there's a useful example of how this could be done - where the connections are very visibly a collection of assets that can be seen and updated centrally in one place. So if you have 5 input tools which ALL point to the same database - you only need to update the connection on your designer in one place - irrespective of whether this is a shared connection or not.
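The central idea is indirection: input tools refer to a named connection rather than storing the connection string themselves. A minimal Python sketch; `InputTool` and `ConnectionManager` are hypothetical classes, and the connection strings are placeholders.

```python
from dataclasses import dataclass


@dataclass
class InputTool:
    tool_id: int
    connection: str  # name of a connection in the manager, not a raw string
    query: str


class ConnectionManager:
    """Hypothetical workflow-level registry: tools hold names, not connection strings."""

    def __init__(self):
        self._connections = {}

    def register(self, name, connection_string):
        """Add or update a named connection; every tool using it follows along."""
        self._connections[name] = connection_string

    def resolve(self, tool):
        """Look up the connection string a tool should use at run time."""
        return self._connections[tool.connection]
```

Re-registering "sales_db" with a new server once repoints all five input tools that reference it.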

- Feature Request

One of the biggest time sinks is basic cleaning of raw data. This can be dramatically simplified by taking a hint from the large ETL / Master Data Management vendors and making it a core part of Alteryx.

Server Side

- Allow users of the Server and Connect products to define their own Business Types (what Microsoft DQS calls "Domains")

- Example may be a currency code - there are many different synonyms, but in essence you want your data all cleaned back to one master list

- Then allow for different attributes to be added to these business types

- Currency code would have 2 or 3 additional columns: Currency name; Symbol; Country of issue

- Similar to Microsoft DQS - allow users to specify synonyms and cleanup rules. For example - Rupes should be Rupees and should be translated to INR

- You also need cross business type rules - if the country is AUS then $ translates to AUD not to USD.

- These rules are maintained by the Data Steward responsible for this Business Type.

- This master data needs to be stored and queryable as a slowly changing dimension (preferably split into a latest table and a history table with the same ID per entry, plus timestamps and user audit details for changes)

Alteryx Designer:

- When you get a raw data set, the user can tag some fields as being one of these business types

- Example: I have a field bal_cur (Balance Currency); I tag this as Business Type "Currency"

- Then Alteryx automatically checks the data and applies my cleanup rules, which were defined on the server

- For any invalid entries, it marks these as errors on the canvas and also adds them to a workflow for the data steward for this Business Type on the server; the value is set to an "unmapped" value (ID = -1; all text columns set to "unmapped")

- For any valid entries - it gives you the option to add which normalised (conformed) columns you want - currency code; description; ID; symbol; country of issue
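Taken together, the synonym rules, the cross-business-type rule and the "unmapped" fallback could be applied roughly as follows. This is a minimal Python sketch with a hypothetical in-memory knowledge base for the "Currency" business type; the IDs, names and `clean_currency` helper are illustrative only, and queuing invalid values for the data steward is left as a comment.

```python
UNMAPPED = {"id": -1, "code": "unmapped", "name": "unmapped"}

# Hypothetical knowledge base for the "Currency" business type.
CURRENCIES = {
    "INR": {"id": 1, "code": "INR", "name": "Indian Rupee"},
    "AUD": {"id": 2, "code": "AUD", "name": "Australian Dollar"},
    "USD": {"id": 3, "code": "USD", "name": "US Dollar"},
}
CURRENCY_SYNONYMS = {"rupees": "INR", "rupes": "INR", "inr": "INR",
                     "aud": "AUD", "usd": "USD"}
# Cross-business-type rules: (country, raw value) -> currency code.
CONTEXT_RULES = {("AUS", "$"): "AUD", ("USA", "$"): "USD"}


def clean_currency(raw, country=None):
    """Return (conformed record, is_valid); invalid values map to UNMAPPED."""
    key = raw.strip()
    code = CONTEXT_RULES.get((country, key)) or CURRENCY_SYNONYMS.get(key.lower())
    if code is None:
        # Here the value would also be queued for the data steward's review.
        return dict(UNMAPPED), False
    return dict(CURRENCIES[code]), True
```

Context rules are checked before plain synonyms, which is what lets "$" resolve to AUD for Australian records while remaining unmapped when no country is known.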

Data Steward Workflow:

- The data steward is notified that there is an invalid value to be checked

- They can mark it as a valid value (in which case it is added to the knowledge base for this business type), as a synonym of some other valid value, or as an invalid value

Cleanup Audit & Logs:

- In order to drive upstream data cleaning over time - we would need to be able to query and report on data cleanups done by source; by canvas; by user; by business type; and by date - to report back to the source system so that upstream data errors can be fixed at source.

Many thanks

Sean

- Feature Request

When using the Cross Tab tool, the Count option under Method for Aggregating Values only appears when the field selected for Values for New Columns is a numeric field. I can add a numeric RecordID before the Cross Tab and then count on that, but why can't the Cross Tab count non-numeric fields? The Summarize tool can.
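Counting does not depend on the value's type, which is the point of the request. A minimal Python sketch of a count-based cross tab over text values; `crosstab_count` is hypothetical, and counting only non-null values is a design choice made here, not a statement about how Alteryx's Count method treats nulls.

```python
from collections import Counter


def crosstab_count(rows, group_by, header_field, value_field):
    """Cross tab that counts non-null values of value_field, whatever their type."""
    groups = list(dict.fromkeys(r[group_by] for r in rows))        # keep first-seen order
    headers = list(dict.fromkeys(r[header_field] for r in rows))
    counts = Counter(
        (r[group_by], r[header_field]) for r in rows if r[value_field] is not None
    )
    return [{group_by: g, **{h: counts[(g, h)] for h in headers}} for g in groups]
```

No RecordID workaround is needed: the ticket IDs below are strings, and missing combinations simply come out as 0.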

- Feature Request

Add a search or find function that looks for content within a tool rather than just the tool number, e.g. Ctrl+F to look for any tool that uses a keyword or field in its formula, join, etc. This would save me a boatload of time editing, updating and troubleshooting my workflows.
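Workflow files (.yxmd) are XML, so a content search can be approximated outside Designer today. This Python sketch assumes each tool is a `Node` element with a `ToolID` attribute and its settings under `Properties/Configuration`; that layout is an assumption about the file format rather than a documented schema, and `find_tools` is a hypothetical helper.

```python
import xml.etree.ElementTree as ET


def _config_strings(config):
    """Yield attribute values and text found anywhere inside a Configuration element."""
    for element in config.iter():
        yield from element.attrib.values()
        if element.text:
            yield element.text


def find_tools(workflow_xml, keyword):
    """Return ToolIDs whose configuration mentions keyword (case-insensitive)."""
    root = ET.fromstring(workflow_xml)
    needle = keyword.lower()
    hits = []
    for node in root.iter("Node"):  # also visits tools nested inside containers
        config = node.find("Properties/Configuration")
        if config is None:
            continue
        if any(needle in s.lower() for s in _config_strings(config)):
            hits.append(node.get("ToolID"))
    return hits
```

Searching attribute values as well as text matters because expressions such as formulas are often stored as attributes.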

- Feature Request

- Tool Improvement

The request is to add the parameter that controls the sampling level to the Google Analytics API connector. I'm getting very different results when pulling the same report from the API and from the GA UI. The API data has significantly more variability, which is evidence of sampling. We have a premium 360 account and are still getting sampled results. I believe it's just necessary to add the parameter to the outgoing request, providing:

samplingLevel = DEFAULT, FASTER, or HIGHER_PRECISION.

https://developers.google.com/analytics/devguides/reporting/core/v3/reference#sampling

https://developers.google.com/analytics/devguides/reporting/core/v3/reference#samplingLevel
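The linked reference describes `samplingLevel` as a query parameter of the Core Reporting API v3. A minimal Python sketch of a request URL that sets it explicitly; `build_report_url` is hypothetical, the view ID and dates are placeholders, and authentication (an OAuth access token) is omitted.

```python
from urllib.parse import urlencode

CORE_REPORTING_V3 = "https://www.googleapis.com/analytics/v3/data/ga"
SAMPLING_LEVELS = {"DEFAULT", "FASTER", "HIGHER_PRECISION"}


def build_report_url(view_id, start_date, end_date, metrics,
                     sampling_level="HIGHER_PRECISION"):
    """Build a Core Reporting API v3 query that requests a sampling level explicitly."""
    if sampling_level not in SAMPLING_LEVELS:
        raise ValueError(f"unknown samplingLevel: {sampling_level}")
    params = {
        "ids": f"ga:{view_id}",
        "start-date": start_date,
        "end-date": end_date,
        "metrics": ",".join(metrics),
        "samplingLevel": sampling_level,
    }
    return f"{CORE_REPORTING_V3}?{urlencode(params)}"
```

The v3 response also carries a `containsSampledData` flag, which is a quick way to confirm whether sampling still occurred after the parameter is set.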

- Zach

- Feature Request
