Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea? Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by showing the dimensional constraints next to each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels),

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
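Until the tool offers such a split, the second point can be approximated before the images reach the tool. The following is only a minimal Python sketch, assuming the training set is available as a list of (image path, label) pairs; `balance_by_label` and the sample file names are hypothetical and not part of Alteryx.

```python
import random
from collections import defaultdict


def balance_by_label(samples, seed=0):
    """Downsample so that every label keeps the same number of images.

    `samples` is a list of (image_path, label) pairs (assumed layout).
    """
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)
    if not by_label:
        return []

    # The rarest label sets the per-label quota.
    quota = min(len(paths) for paths in by_label.values())

    rng = random.Random(seed)  # fixed seed keeps the selection reproducible
    balanced = []
    for label, paths in by_label.items():
        for path in rng.sample(paths, quota):
            balanced.append((path, label))
    return balanced


if __name__ == "__main__":
    data = [("cat1.png", "cat"), ("cat2.png", "cat"), ("cat3.png", "cat"),
            ("dog1.png", "dog")]
    print(balance_by_label(data))
```

Downsampling to the rarest label is the simplest choice; it throws images away, so oversampling the rare labels would be an alternative when data is scarce.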

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


We aren't getting a huge amount of help from support on this, so I'm posting this idea to raise awareness for the product teams responsible for the Salesforce connectors and the embedded Python environment.

This post from user Dubya describes the issue in detail:

I have a workflow with several Salesforce tools in it, which works fine on my machine. But we need another Alteryx user in our office to be able to access, run and maintain the workflow too, via their machine and copy of Alteryx Designer.

However, we're finding that the Salesforce inputs and outputs can only be authenticated on one machine at a time.

When the other user opens the original workflow from the shared network location, the Salesforce tools display the error "Salesforce Input (1): {'error': 'invalid_grant', 'error_description': 'authentication failure'}" and fail to load any data. But we can see the full query in the tool, and we can even set the custom query option and validate the query successfully, which suggests the source is being connected to and queried correctly; we just can't run the tool.

The only way to run the tool successfully is to change the credentials and re-authenticate the tool. However, this then de-authenticates the original machine, and when we open the workflow there and try to run it, we get the same error.

We've both tried this authentication back and forth on our own machines, and each time one of us re-authenticates, it de-authenticates the other and triggers the error.

Can someone help explain what's going on and how to fix it? This doesn't bode well for our collaboration.

We're both running:

The latest build of Designer 2021.2 (the original machine is also running Desktop Automation)

Salesforce Input Tool v4.1.0

Salesforce Output Tool v1.3.0

My response here identifies that this is a problem for our organization as well:

We're experiencing the same issue. It appears to be related to how the tool handles password and security token decryption. I've found that when you modify the related registry entry from "true" to "false", you can see in the tool's xml that the encrypted password and security token are still in there. I'm not sure what else is going on behind the scenes beyond that, but that ought to be addressable by the product teams handling the Salesforce connectors and the Python installation embedded in Designer.

The only differences in our environment compared to u/Dubya's are that we're running on 2020.4 and attempting to use Salesforce Input Tool v4.2.4.

This is a must-have for anyone who needs the ability to share workflows among multiple users. It is part of a series of problems that have plagued these updated connectors since they were introduced years ago, and no one at Alteryx seems to care enough to truly fix them. Salesforce is a core system for our organization, so having tools that utilize the latest version of Salesforce's APIs is very important to us. The additional features that the Input tool provides are welcome, but these bugs have to be sorted out in order for us to extract any kind of value out of them. If the "deprecated" Salesforce tools were ever to be removed from Designer while there are issues with the "new" connectors, we would have no choice other than to never upgrade Designer/Server again and be forced to look for another product to serve as our ETL platform.

Please, please, please address this.

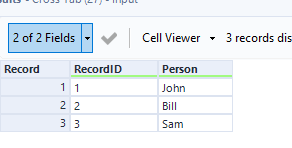

Hi, currently, if you use the Cross Tab tool and the names of the new fields contain special characters, those characters are replaced with underscores ("_") in the new headers, which then need to be fixed in some way. It would be great if this were all handled in the tool. In other words, the new headers should keep the special characters as desired.

- Category Transform

- Enhancement

Cross Tab automatically alphabetizes the column headers. This can be a little awkward when unioning on column position later on. It would be nice to make this an optional feature through a tick box on the tool.

- Enhancement

- UX

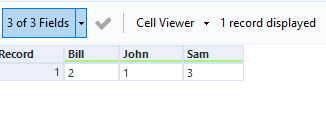

I want to jump to expression #3 of Formula (3) when I see the following error message. Currently I can jump to Formula (3), but only expression #1 is opened, not #3. If I have 30 expressions, it is hard to find #20 in 30s.

- Enhancement

- UX

Need a way to highlight lines whether that means right-clicking and selecting a color or what-not, but just having the lines become black & BOLD doesn't cut it. It's not easy on the eyes. If I could click this line/connector and make it bright green that would be ideal and then I can see where it connects better when zooming out.

- Enhancement

- UX

Instead of using the arrows, I think it would be nice to be able to drag and drop the questions to rearrange them in the Interface Designer. This would go more hand in hand with the drag and drop experience of Alteryx.

Additionally, when a lot of interface tools are on the canvas, Designer really slows down if you need to rearrange the order of the tools in the Interface Designer. I would like to see if there is any way that this can be sped up.

Thanks!

- Enhancement

- UX

It'd be great to have all DCM connections available in the Data connections window.

And when "Use Data Connection Manager (DCM)" is ticked, the screen should default to the DCM connection list.

- Enhancement

- UX

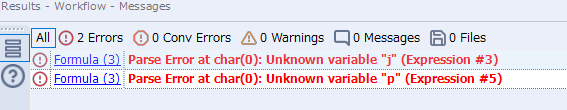

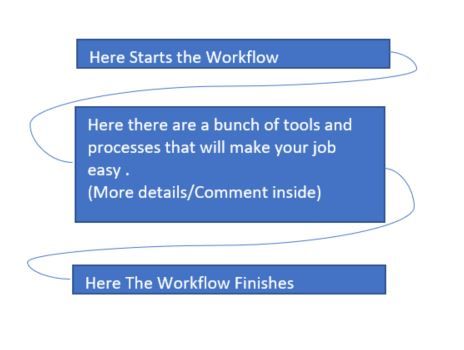

I know that the container title/label should be short and as descriptive as possible. Adding extra comments inside the box also helps give a more detailed explanation of what process runs inside the container. However, if I collapse the container, the short title is not much help.

Would it be possible to enhance the caption for the container title, that is, to allow 2, 3 or more lines of text? This would make the container title more descriptive and would allow the containers to stay collapsed while still showing a reasonable amount of text describing (as far as possible) what happens inside.

At the moment, if I type a certain amount of text, the container expands according to the length of the text.

Below is the typical container title.

Below is the current situation if a person would like to give a bit more description in the container header (the container expands).

A dream would be to have the workflow with all containers collapsed and with titles that tell you what they do (see image below).

- Enhancement

- UX

When building API calls within Alteryx, there are a few common steps required:

1) Build out the URI for the API call (base URL plus any query parameters)

2) Deal with authentication; basic authentication, for example, requires taking a key and secret, Base64-encoding them, and passing the result into the tool

3) Parse the results and process them downstream

For this idea I am specifically focusing on step 3 (but it would be great to have common authentication methods built into the Download tool for step 2!).
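To make steps 1 and 2 concrete, here is a minimal Python sketch of what has to be assembled by hand today. It is an illustration only, not how the Download tool works internally; `build_uri`, `basic_auth_header`, the example URL and the key/secret values are placeholders.

```python
import base64
from urllib.parse import urlencode


def build_uri(base_url, params):
    """Step 1: base URL plus URL-encoded query parameters."""
    return f"{base_url}?{urlencode(params)}" if params else base_url


def basic_auth_header(key, secret):
    """Step 2: Base64-encode 'key:secret' for an HTTP Basic Authorization header."""
    token = base64.b64encode(f"{key}:{secret}".encode("utf-8")).decode("ascii")
    return {"Authorization": f"Basic {token}"}


if __name__ == "__main__":
    # Placeholder endpoint and credentials, for illustration only.
    print(build_uri("https://api.example.com/search", {"q": "acme ltd", "page": 2}))
    print(basic_auth_header("my-key", "my-secret"))
```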

There are common steps required to parse out the results, such as using Filter (to check for a 200 response), JSON parse, text to columns and then cross tab to get the results into a readable format. These will all be common steps anyone who has worked with APIs will be familiar with:

This is all fine for a regular user, who can quickly add and configure these tools. However, there is no validation that the JSON result is as expected, so an API embedded in a batch macro or analytic app can easily fail.

One example of a failure which I've recently come across is where the output JSON doesn't contain all fields (name:value pairs), depending on the response. For example, using the UK Companies House API, when looking at the ceased to act field at this endpoint - https://developer-specs.company-information.service.gov.uk/companies-house-public-data-api/resources... the ceased to act field only appears in the results if a person has actually ceased to act. This is important if you have downstream tools such as a Formula to create a field [Active] where you have:

IF ISNull([ceased_to_act]) THEN "Active" ELSE "Ceased to Act" ENDIF

However, without modification the macro / app will error if any results are returned where this field is not present.

A workaround is to add the CReW Ensure Fields macro, or to union on a list of fields, to ensure that the ceased to act field is present in the output for all API calls. But looking at some other tools, it would be good if an expected schema could be built into the Download tool to do this automatically.
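The effect of such an expected schema can be sketched in a few lines of Python. This only illustrates the idea and is not a description of any existing Alteryx feature; `EXPECTED_FIELDS`, `apply_schema`, `status` and the sample records are made up for the example (only `ceased_to_act` comes from the case above).

```python
import json

# Hypothetical expected schema: every output row must carry these fields.
EXPECTED_FIELDS = ["name", "ceased_to_act"]


def apply_schema(record, fields=EXPECTED_FIELDS):
    """Return the record with every expected field present (None if missing).

    Fields that are not in the schema are dropped in this sketch.
    """
    return {field: record.get(field) for field in fields}


def status(row):
    """Mirror of the [Active] formula: a null ceased_to_act means Active."""
    return "Active" if row["ceased_to_act"] is None else "Ceased to Act"


if __name__ == "__main__":
    # Made-up response: the first record lacks ceased_to_act entirely.
    response = json.loads('[{"name": "A"}, {"name": "B", "ceased_to_act": true}]')
    for raw in response:
        row = apply_schema(raw)
        print(row["name"], status(row))
```

With the schema applied, the downstream formula always finds the field, whether or not the API returned it.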

For example in Power Automate this is achieved as follows:

I am a big advocate of not making things unnecessarily complicated. Therefore I would categorise this as an ease-of-use feature to improve the experience of working with APIs within Alteryx and make APIs (as loads of integrations are API-based) accessible to as many users as possible.

- API SDK

- Category Developer

- Enhancement

- UX


Hello Team,

Currently, in the Select tool, we have to scroll up or down to see the list of fields. If a user wants to change a data type, they have to scroll through the list. For example, I am working with mid-size data that sometimes contains 300+ fields; if I need to change a data type, I have to search by scrolling up or down.

The idea: if you provide a search bar under Field, it would be a great help to everyone. Anyone who needs a specific field could just type its name in the search bar and make changes quickly. The Select tool is important, and we spend a lot of time in it while working on a workflow.

Thank you,

Mayank

- Enhancement

- UX

Cleanse Macro

Given a choice between the delivered macro and the CReW macro, I’ll choose the CReW macro for both speed and functionality. Wikipedia says, “Data cleansing or data cleaning is the process of detecting and correcting (or removing) corrupt or inaccurate records from a record set, table, or database and refers to identifying incomplete, incorrect, inaccurate or irrelevant parts of the data and then replacing, modifying, or deleting the dirty or coarse data.” If Alteryx were to convert the macro to a true tool, here is my feature request list:

Performance:

- AMP compatible – Fast!

- Faster than the CReW macro for deleting empty fields/rows

- Reduce the time it takes to load the tool (current macro versions are slow); HTML is faster.

Feature Enhancement:

- Allow selection of fields based on data type

- Include incoming/outgoing SELECT functionality

- Allow for PREFIX functionality (like multi-field formula), but NOT default

- Read incoming metadata to provide color coding of fields to indicate where potential problems exist (e.g. NULL, Whitespace) – part of browse everywhere currently

- Allow for Nulls to convert to 0/blank or 0/blank to convert to Null

- When removing punctuation, provide for exceptions (e.g. Numeric set of negative, comma and period).

- Include HTML tag removal

- Support internationalization (character sets)

Going the extra mile:

- Display or opt for output, cleanup metrics. How dirty was my data? Potentially, allow for ERROR to stop workflow if garbage is detected.

- Optional: Detect outliers in numeric data. I’ve got an outlier detection macro that we can review, but while you are passing all of the data for numeric values, explaining or tagging outliers would be useful. Could be a box-whisker on numeric values maybe?

- Make outlier actionable

  - Identify in data (new field indicator)

  - Remove

  - Modify/Impute

- Test/Preview against metadata: (pre-run), see what the incoming/outgoing results would be on all of the metadata before I run the workflow.

- camelCase: https://en.wikipedia.org/wiki/Camel_case

- Identify/Replace unknown values (e.g. N/A, Not Applicable, #) with Null() or other?

- Identify/Remove duplicate values within a cell

- See also: https://en.wikipedia.org/wiki/Data_cleansing

- Option to point to a “personal” dictionary for spelling or validation

- Provide “smart” annotation on tool
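For the outlier items above, the box-and-whisker idea could look roughly like this. It is a minimal Python sketch, assuming Tukey's 1.5 × IQR fences as the rule; `flag_outliers` is a hypothetical helper, and its result corresponds to the "new field indicator" option.

```python
import statistics


def flag_outliers(values, k=1.5):
    """Return one True/False indicator per value using Tukey's IQR fences.

    Needs at least two values; `k` is the whisker length in IQR units.
    """
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v < low or v > high for v in values]


if __name__ == "__main__":
    amounts = [10, 12, 11, 13, 12, 11, 100]
    # Pair each value with its indicator, like a new field on the record.
    print(list(zip(amounts, flag_outliers(amounts))))
```

Removing or imputing would then be a filter or a replacement on the flagged rows.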

- Category Preparation

- Desktop Experience

- Enhancement

Our company often builds applications where we need the ability for it to dynamically update dropdowns based on a user's previous selections.

For example:

- A user needs to select their Server, database, and table for analysis (3 dropdowns).

- When the user selects their server, a query is run to get a list of all databases on that server. Then the database dropdown will automatically populate with this list of databases.

- The user then makes a database selection, and a query is then run to get all tables within that database. The table dropdown will automatically populate with this list of tables.

- The user makes their table selection, and then runs their analysis using the server, database, and table variables with values that they have selected from each dropdown.

We can do this in other programs, but unfortunately the lack of dynamic selections/dependent dropdowns is a big limitation for us when building Alteryx applications. Our current workarounds are chaining applications together, or using PyQt within the workflow. Chaining is clunky and often causes unforeseen issues when uploading to Server with errors that are non-descriptive, and using PyQt comes with Python versioning issues.

If this interactivity can somehow be added to Alteryx applications it would be a huge upgrade to our current Alteryx processes. Any suggestions for further workarounds would also be helpful!

Thank you,

Amanda

- Category Apps

- Desktop Experience

- Enhancement

Hi there,

When you connect to a DB using a connection string or an alias, this shows up in the Workflow Dependencies window in a way that is very useful for identifying impacts if a DB is moved or migrated.

However, in 2023.1, if you use DCM, the database dependencies just show up as .\ which makes dependency management much more difficult.

Please could you add the capability to view DCM dependencies correctly in the dependency window?

BTW - the Workflow Dependencies window would be a great place to build a simple process to move existing DB connections to a DCM connection!

CC: @wesley-siu @_PavelP

- Category Connectors

- Enhancement

- New Request

- Scheduler

Hi there,

When connecting to data sources using DCM - could we please add the ability to make JDBC connections?

see:

https://community.alteryx.com/t5/Engine-Works/JDBC-Connections-in-Alteryx/ba-p/968782

As mentioned in these threads - JDBC is very common in large enterprises - and in many cases it is better supported by the technology teams / developer community, so it is much easier to make a connection. Added to this - there are many databases (e.g. DB2) where JDBC connections are just much easier.

Please could you add JDBC connections to the DCM tooling?

Thank you

Sean

cc: @wesley-siu @_PavelP

- Category Connectors

- Enhancement

- New Request

- Scheduler

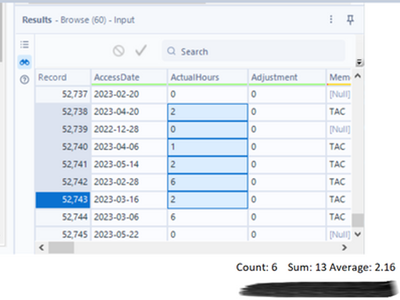

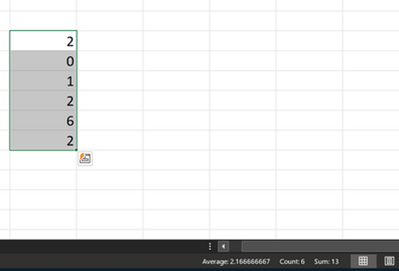

Alteryx should seriously consider incorporating certain Excel features into its Browse tool, as they greatly enhance usability and functionality.

Currently, when selecting specific records in the Browse tool, users are unable to obtain important metrics such as sum, average, or count without resorting to additional steps, such as adding a Summarize tool or filters.

A concise bar below the results window that provides these statistics would be immensely beneficial to users and would elevate the Browse tool to the next level.

By implementing this enhancement, Alteryx would make a significant impact and establish the Browse tool as a must-have resource.

- Enhancement

- New Request

- UX

I think we can all agree that Workflow Summary Tool is immensely powerful in summarizing large and/or complicated workflows. However, some companies have begun to bar the use of certain GenAI applications, like ChatGPT. Unfortunately this makes the use of the Workflow Summary Tool impossible. At the same time those companies are allowing the use of other forms of GenAI, like AzureAI.

In the Workflow Summary tool, it would be nice to have the capability to select which GenAI engine you want to use (ChatGPT, AzureAI, etc) so that you don't break corporate policy by using barred applications. This could simply be a dropdown in the GUI configuration for the Workflow Summary Tool with a list of the most common engines. The user would then supply their API key for that engine, and you're off to the races.

- Enhancement

- XML

Hi there,

When creating a database connection - Alteryx's default behaviour is to create an ODBC DSN-linked connection.

However, DSN-linked connections do not work on a large server env - because this would require administrators to create these DSNs on every worker node and on every disaster recovery node, and update them all every time a canvas changes.

They are also not fully safe, because part of the configuration of your canvas is held in the DSN - and so you cannot just rely on the code that's under version control.

So:

Could we add a feature to Alteryx Designer that allows a user to expand a DSN into a fully-declared connection string?

In other words - if the connection string is listed as

- odbc:DSN=DSNSnowFlakeTest;UID=Username;PWD=__EncPwd1__|||NEWTESTDB.PUBLIC.MYTESTTABLE

Then offer the user the ability to expand this out by interrogating the ODBC Connection manager to instead have the fully described connection string like this:

odbc:DRIVER={SnowflakeDSIIDriver};UID=Username;pwd=__EncPwd1__;authenticator=Snowflake;WAREHOUSE=compute_wh;SERVER=xnb27844.us-east-1.snowflakecomputing.com;SCHEMA=PUBLIC;DATABASE=NewTestDB;Staging=local;Method=user

NOTE: This is exactly what users need to do manually today anyway to get to a DSN-less connection string - they have to create a file DSN to figure out all the attributes (by opening it up in Notepad) and then paste these into the connection string manually.
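The expansion itself is mostly string handling, roughly as sketched below in Python. This is an illustration only: `parse_connection` and `expand_dsn` are hypothetical helpers, and the `dsn_attrs` dictionary stands in for whatever the ODBC manager (or a file DSN) reports for that DSN; nothing here reads the registry or calls an Alteryx API.

```python
def parse_connection(conn):
    """Split 'odbc:KEY=VALUE;...|||table' into (attributes, table)."""
    body, _, table = conn.partition("|||")
    if body.startswith("odbc:"):
        body = body[len("odbc:"):]
    # Naive split: assumes no ';' is hidden inside a value.
    attrs = dict(part.split("=", 1) for part in body.split(";") if part)
    return attrs, table


def expand_dsn(conn, dsn_attrs):
    """Replace DSN=... with the attributes stored behind that DSN."""
    attrs, table = parse_connection(conn)
    if "DSN" not in attrs:
        return conn  # already DSN-less, nothing to expand
    del attrs["DSN"]
    # Keys typed in the tool win over DSN defaults (matching is case-sensitive here).
    merged = {**dsn_attrs, **attrs}
    expanded = "odbc:" + ";".join(f"{key}={value}" for key, value in merged.items())
    return f"{expanded}|||{table}" if table else expanded


if __name__ == "__main__":
    conn = ("odbc:DSN=DSNSnowFlakeTest;UID=Username;PWD=__EncPwd1__"
            "|||NEWTESTDB.PUBLIC.MYTESTTABLE")
    # Attributes as they might be read from the DSN (values taken from the example above).
    dsn_attrs = {"DRIVER": "{SnowflakeDSIIDriver}", "WAREHOUSE": "compute_wh"}
    print(expand_dsn(conn, dsn_attrs))
```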

Thanks all

Sean

- Admin Settings

- Category Developer

- Enhancement

- User Settings

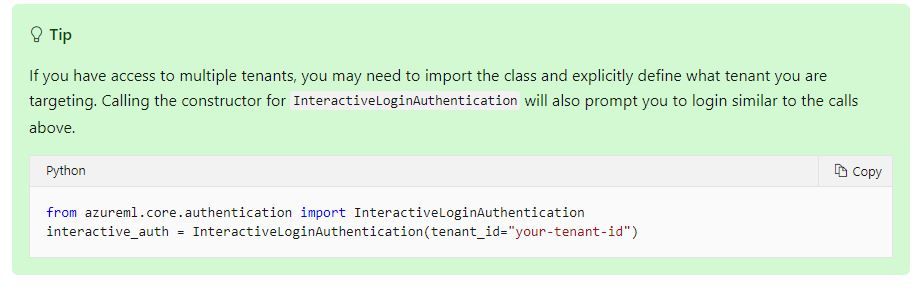

Introducing: The Azure Machine Learning Training and Scoring Tools

We tried to use this tool but can't log in to Azure ML correctly. We have several tenant IDs, and the tool logs in to another tenant (the one for Office 365), not the one for Azure ML.

====================== <Error Message> ==========================================================

Message: You are currently logged-in to 55f0a...-.............................................. tenant. You don't have access to d846a...-............................................. subscription, please check if it is in this tenant. All the subscriptions that you have access to in this tenant are =

[SubscriptionInfo(subscription_name='Microsoft Azure Enterprise', subscription_id='754c5...-...........................')].

Please refer to aka.ms/aml-notebook-auth for different authentication mechanisms in azureml-sdk.

InnerException None

ErrorResponse

=======================================================================================================

Microsoft states that the tenant needs to be specified if we have access to multiple tenants:

Set up authentication for Azure Machine Learning resources and workflows

Could you add Tenant ID to the Azure credentials so that we can use this tool?

- Category Connectors

- Data Connectors

- Enhancement
