Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by showing the dimensional constraints next to each of the pre-trained models;

> by adding a proper tool to split the training data correctly (so that each label has an equal number of images);

> and finally, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
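The balanced split asked for in the second point can be sketched in a few lines of Python. This is a minimal sketch, assuming the training set is available as a list of (file path, label) pairs; `balanced_split` is a hypothetical helper, not part of the Image Recognition tool. Every label is downsampled to the size of the smallest label before the train/validation split.

```python
import random
from collections import defaultdict


def balanced_split(samples, train_fraction=0.8, seed=0):
    """Split (path, label) pairs so that every label contributes the same
    number of images, then divide each label's images into train/validation."""
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)

    # Downsample every label to the size of the smallest one.
    n_per_label = min(len(paths) for paths in by_label.values())
    n_train = int(n_per_label * train_fraction)

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train, validation = [], []
    for label, paths in sorted(by_label.items()):
        chosen = rng.sample(paths, n_per_label)
        train += [(p, label) for p in chosen[:n_train]]
        validation += [(p, label) for p in chosen[n_train:]]
    return train, validation
```

With 10 "cat" images and 6 "dog" images, each label is cut to 6 images, giving 4 + 4 training images and 2 + 2 validation images.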

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will allow a greater number of people to understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


When I have AMP enabled, I can no longer performance-profile my workflows. I understand that there may be issues with measuring this across multiple threads, but it would be great to have performance profiling available for the new engine.

Labels: AMP Engine, Engine

#Deployment #LargeScale #CleanCode #BareBonesCode

Request to add an option to strip out all unnecessary text from a workflow / Gallery app when deploying it to Alteryx Server to be scheduled or used as a Gallery app. Even "Run at file location" still causes unnecessary information to be read across the network.

Workflows are often bloated with unused metadata. At a small scale this is not an issue, but at scale all of this additional bloat (kBs to MBs in size), sent from the controller to the workers, does impact the server environment.

The impact explodes when using the Alteryx API to launch the same job over and over with different parameters: all the non-useful information in the workflow is sent to the workers for every one of these jobs.

Even having a "compiled" version of the workflow could be a great solution. #CompiledCode

Attached is a simple workflow that shows how bloated the workflows can become.

I appreciate your consideration.

Labels: Engine, New Request

Preface: I have only used the in-DB tools with Teradata so I am unsure if this applies to other supported databases.

When building a fairly sophisticated workflow using in-DB tools, the workflow may sometimes fail because the underlying queries run up against CPU / memory limits. This is most common when doing several joins back to back, because Alteryx sends them as one big query with various nested subqueries. When working with datasets of hundreds of millions or billions of records, this can be extremely taxing for the database to run as one huge query. (It is possible to get around this by using an in-DB write to a temporary table as an intermediate step in the workflow.)

When a routine hits an in-DB resource limit and the database kills the query, Alteryx immediately fails the workflow run. Any "temporary" tables Alteryx creates are in reality permanent tables that Alteryx usually just drops at the end of a successful run. If the run does not end successfully because it hit a resource limit, these "temporary" (permanent) tables are not dropped. I only noticed this after building out a workflow and running up against a few resource limits: I then started getting database out-of-space errors. Upon looking into it, I found that all the previously created "temporary" tables were still there, taking up many TBs of space.

My proposed solution is for Alteryx's in-DB tools to drop any "temporary" tables they have created when a run ends, regardless of whether the entire module finished successfully.

Thanks,

Ryan
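The cleanup proposed above is the classic try/finally pattern: record every intermediate table as it is created and drop them all when the run ends, whether it succeeded or failed. A minimal Python sketch, using the standard `sqlite3` module purely as a self-contained stand-in for Teradata; `run_with_cleanup` and the table names are hypothetical, and this is not how the in-DB tools are implemented.

```python
import sqlite3


def run_with_cleanup(conn, intermediate_steps, final_query):
    """Create each intermediate table, run the final query, and always drop
    the intermediate tables afterwards, even if a step fails.

    Table names are interpolated into SQL, so they must come from trusted code."""
    created = []  # "temporary" tables that are really permanent
    try:
        for name, select_sql in intermediate_steps:
            conn.execute(f"CREATE TABLE {name} AS {select_sql}")
            created.append(name)
        return conn.execute(final_query).fetchall()
    finally:
        # Runs on success AND on failure, so no orphaned tables remain.
        for name in reversed(created):
            conn.execute(f"DROP TABLE IF EXISTS {name}")
```

If the database kills a query mid-run, the exception still propagates to the caller, but only after every table created so far has been dropped.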

It would be cool to have annotations that dynamically update. E.g. a record count would be displayed in the annotation and update after a run if changes occurred.

Labels: Engine, Runtime

With the release of 2018.3, caching has become an ad hoc task. With complex workflows and multiple inputs, we need a method to cache, and to save the cache selection, per tool. Once the workflow runs after being opened, the cache would be saved at the latest tool downstream.

This way we don't have to create ad hoc cache steps and run the workflow twice before benefiting from the time-saving features of the cache.

This would work similarly to the cache feature in 11.0 but with enhanced functionality: the best of the old cache combined with the intent of the new cache.

Embed the cache option into tools.

Thanks!

Similar to being able to change the parameters of a tool using the Interface tools, it would be very useful if Alteryx Designer had an option to modify a tool's configuration with another tool's output while the workflow is running, reducing the need to chain multiple apps. That output would consist of a single row and column (which may include line breaks or tab characters); if there are multiple rows, only the first row would be used.

This feature could be made possible now that the "Control Containers" feature is implemented, and it could work as follows:

Suppose you need to write to a database and need to specify a Pre-SQL statement or query that must change dynamically based on the result of a previous tool in the workflow.

In this case, because the configuration of a tool in the next container needs to change based on the result of a previous formula, there would need to be an additional icon below the tools, indicating that a tool's result can be used to change a configuration.

This icon would only be visible once at least one Control Container and an Action tool have been added to the workflow, and would be removed automatically if all the Control Containers are removed from the workflow. The user could change the configuration of the destination tool using an Action tool, which must be connected to a tool in a container that runs after the Action tool's own container has finished running, because the tool (or several tools) in the next Control Container must be modified dynamically before that container is activated.

If a Formula tool containing multiple formula fields is connected to the Action tool, the user will see each formula output labeled like a connection (i.e. [#1], [#2], ...), and each can be used as a parameter.

The screenshot below demonstrates the idea. Please note that with this change, adding an Action tool would not necessarily mean that the workflow must become a macro or an analytic app, so a new workflow type may or may not need to be defined, such as "Dynamic Configuration Workflow (YXDW)". Analytic apps and macros that use this feature could still be built without defining a new workflow type.
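The "single value, first row wins" rule for feeding one tool's output into another tool's configuration could look like this in a minimal Python sketch. `config_value`, `build_pre_sql`, and the `staging` / `load_date` names in the Pre-SQL template are all hypothetical; real code should use bound parameters rather than string substitution to avoid SQL injection.

```python
from string import Template

# Hypothetical Pre-SQL statement whose cutoff date comes from an upstream tool.
PRE_SQL = Template("DELETE FROM staging WHERE load_date < '$cutoff';")


def config_value(rows):
    """Return the single value that drives the downstream configuration.
    Only the first row is used if the upstream tool returns several."""
    if not rows:
        raise ValueError("upstream tool returned no rows")
    first_row = rows[0]
    if len(first_row) != 1:
        raise ValueError("expected exactly one column")
    return first_row[0]


def build_pre_sql(upstream_rows):
    # Naive text substitution, for illustration only.
    return PRE_SQL.substitute(cutoff=config_value(upstream_rows))
```

Given upstream rows `[("2024-01-01",), ("2024-02-01",)]`, only the first value is substituted into the Pre-SQL statement.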

Labels: Category Apps, Engine, New Request, UX

Please offload map rendering in the Browse tool to the video card using DirectX or OpenGL; the software rendering currently used is embarrassingly slow and disruptive.

Labels: Engine, General

I understand that the Server and Designer + Scheduler versions have the option to "cancel workflows running longer than X".

I'd like to see that functionality in the desktop edition as well.
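The requested behaviour, cancelling a run once it exceeds X seconds, can be sketched with Python's standard `subprocess.run(..., timeout=...)`, which kills the child process when the limit is reached and then raises `subprocess.TimeoutExpired`. This is only an illustration of the idea: `run_with_time_limit` is a hypothetical helper, and the command is a placeholder Python process that sleeps; no assumption is made about how Designer launches a workflow.

```python
import subprocess
import sys

# Placeholder for a long-running workflow: a Python process that sleeps 5 s.
SLOW_JOB = [sys.executable, "-c", "import time; time.sleep(5)"]


def run_with_time_limit(cmd, max_seconds):
    """Run cmd; if it runs longer than max_seconds, kill it and report it."""
    try:
        completed = subprocess.run(cmd, timeout=max_seconds)
    except subprocess.TimeoutExpired:
        # subprocess.run has already killed the child at this point.
        return "cancelled"
    return "finished" if completed.returncode == 0 else "failed"
```

For example, `run_with_time_limit(SLOW_JOB, 0.5)` returns `"cancelled"` after about half a second instead of waiting the full five.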

Hello all,

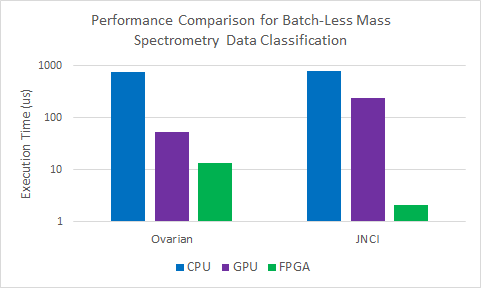

A whole field of performance improvement has not been explored by Alteryx: hardware acceleration, i.e. using something other than a CPU for computation.

Here are some good readings about that:

https://blog.esciencecenter.nl/why-use-an-fpga-instead-of-a-cpu-or-gpu-b234cd4f309c

https://en.wikipedia.org/wiki/Application-specific_integrated_circuit

The kind of acceleration we can dream of!

Simon

Labels: AMP Engine, Engine

We often build very large Alteryx projects that break down large data processing jobs into multiple self-contained workflows.

We use CReW Runner tools to automate running the workflows in sequence, but it would be nice if Alteryx supported this natively with a new "Projects" panel.

Nice features for Projects could be:

- Set the sequence

- Conditional sequence

- Error handling

- Shared constants

- Shared aliases

- Shared dependencies

- Chained Apps

- Option to pass data between workflows (Input from a .yxmd Output), with no need to persist intermediate data

- Input/output folder and project folder setups for local data sources in the Dependencies window

- Ability to package like "Export Workflow" for sharing

- Results log for the entire project
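As a rough illustration of how sequencing, conditional steps, error handling, shared constants and a project-level results log could fit together, here is a minimal Python sketch. `Step` and `run_project` are hypothetical names, and each step's `run` callable merely stands in for running one workflow; nothing here uses an Alteryx or CReW API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

Constants = Dict[str, object]  # shared project constants


@dataclass
class Step:
    name: str
    run: Callable[[Constants], object]  # stand-in for running one workflow
    condition: Optional[Callable[[Constants], bool]] = None  # conditional sequence


def run_project(steps: List[Step], constants: Constants) -> List[str]:
    """Run steps in order, skip those whose condition is false, and stop at
    the first failure. Returns a results log for the whole project."""
    log = []
    for step in steps:
        if step.condition is not None and not step.condition(constants):
            log.append(f"{step.name}: skipped")
            continue
        try:
            step.run(constants)
        except Exception as exc:  # error handling: record and stop the sequence
            log.append(f"{step.name}: failed ({exc})")
            break
        log.append(f"{step.name}: ok")
    return log
```

Stopping at the first failure is only one possible policy; a real Projects panel might let the user choose to continue or to run a dedicated error-handling workflow instead.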

Labels: Engine, General

It would be great to dynamically update the next analytic app based on an interface input. This means I have a chained app: in Step 1 I ask a Yes/No question, and the answer determines whether Step 2 opens Analytic App A (with its own interface inputs) or Analytic App B (with other interface inputs).

Many users face this issue when they want to create a tool (e.g. for mapping purposes) that contains two data streams with different interface input requirements.

Adding this feature would allow us to create different data flows with different input requirements. This would help us differentiate between mapping schemes and improve the user experience (currently users have to fill in a lot of unnecessary interface inputs). Thanks.

H.

As we begin to adopt the AMP engine, one of the key questions in every user's mind will be "How do I know I'm going to get the same outcome?"

One of the easiest ways to build confidence in AMP, and also to get examples of differences back to Alteryx, is to allow users to run both engines in parallel, compare the results, and then have an easy process for submitting issues to the team.

For example:

- Instead of the only options being "run in AMP" or "run in E1", could we have a third option called "Run in comparison mode"?

- This would run the process in both AMP and E1, check for differences, and point them out to the user in a differences report shown after the run.

- Where there's a difference that looks like a bug (not just a sorting difference but something more material), the user would then have a button to "Submit to Alteryx for further investigation". This would make it much simpler for Alteryx to identify new issues, and much simpler for users to report them (meaning more people would be likely to do it, since it's easier).

The benefit is that not only will this make users more comfortable with AMP (since they will see that in most cases there are no differences), it will also train them on the differences between AMP and E1 to make the transition easier. Finally, where there are real differences, it will make getting this critical information to Alteryx much easier and more streamlined, since the "Submit to Alteryx" process can capture everything Alteryx needs (such as the machine and the version number) automatically, without burdening the user.
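One way a comparison mode could separate a pure sorting difference from a material one is to compare the two outputs as multisets of rows: if the same records appear the same number of times, only the order differs. A minimal Python sketch, assuming each engine's output is available as a list of row tuples; `compare_outputs` is a hypothetical helper, not an Alteryx feature.

```python
from collections import Counter


def compare_outputs(e1_rows, amp_rows):
    """Classify the difference between E1 and AMP outputs.

    Returns (status, rows_only_in_e1, rows_only_in_amp)."""
    if e1_rows == amp_rows:
        return "identical", [], []
    e1_counts, amp_counts = Counter(e1_rows), Counter(amp_rows)
    if e1_counts == amp_counts:
        # Same records, different order: a sorting difference only.
        return "order only", [], []
    # Counter subtraction keeps only positive counts, i.e. the extra rows.
    only_e1 = list((e1_counts - amp_counts).elements())
    only_amp = list((amp_counts - e1_counts).elements())
    return "material difference", only_e1, only_amp
```

Only the "material difference" case would need the "Submit to Alteryx" button; the unmatched rows give the differences report something concrete to show.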

Labels: AMP Engine, Engine

Maybe this is pointless, but my guess is that memory usage could be as important as processing time, and it is probably a simple addition to the performance profiling feature.
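Peak memory per step can be reported alongside elapsed time. The minimal Python sketch below uses the standard `tracemalloc` module (`tracemalloc.reset_peak` requires Python 3.9+); `profile_steps` is a hypothetical helper. `tracemalloc` only sees allocations made through Python's allocator, so this is an analogy for what an engine-level profiler could show, not how Alteryx measures memory.

```python
import time
import tracemalloc


def profile_steps(steps):
    """Return (name, seconds, peak_bytes) for each (name, func) step."""
    results = []
    tracemalloc.start()
    try:
        for name, func in steps:
            tracemalloc.reset_peak()  # measure each step's peak separately
            start = time.perf_counter()
            func()
            elapsed = time.perf_counter() - start
            _, peak = tracemalloc.get_traced_memory()
            results.append((name, elapsed, peak))
    finally:
        tracemalloc.stop()
    return results
```

A step that builds a large temporary list shows a much higher peak than a trivial one, even if both finish in a similar time.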

Labels: Engine, Enhancement

When I run a standard workflow in Designer, I can continue to work on other workflows; I can even run two workflows in parallel.

In contrast, when running an analytic app in Designer, the entire program is blocked and no other workflow can be edited or run.

I propose allowing access to the Designer GUI while analytic apps are running as well.

Labels: Engine, Enhancement

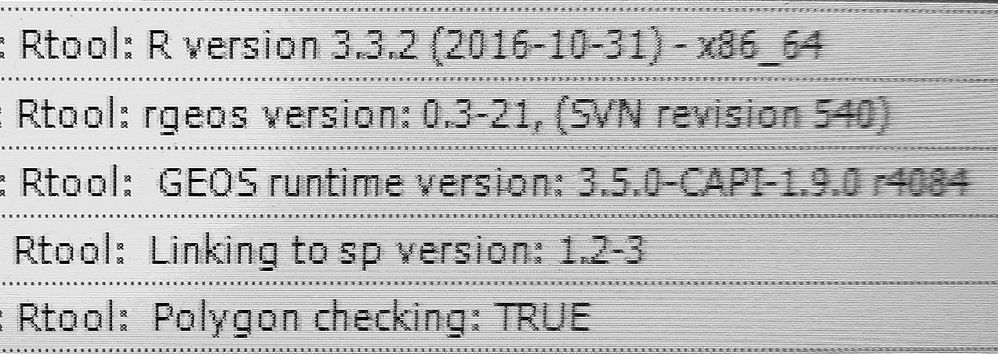

When using the R tool for simple tasks (like renaming files) in an iterative macro, there's a delay on every iteration as the R tool starts up R.

The following are repeated on every iteration (with delays):

Can we look at an option to forward-scan an Alteryx job for R tools, then load R into the process once to eliminate these per-iteration delays?

Labels: Engine, Runtime

I first learnt Alteryx nearly 5 years ago, and I guess I've been spoilt by implicit sorts after tools like joins: if I want to find the top 10 after joining two datasets, I know that the data coming out of the join will be sorted. However, with how AMP works, this implicit sort cannot be relied upon. The current solution is to turn on compatibility mode, however...

1) It's a hidden option in the runtime settings, and it can't be turned on by default, as it's set only at the workflow level.

2) I imagine that compatibility mode runs a bit slower, and I don't need an implicit sort after every join, cross-tab, etc.

So could the affected tools (Engine Compatibility Mode | Alteryx Help) have a checkbox within the tool, letting the user decide at the tool level rather than the canvas level which behaviour they want? And maybe rename compatibility mode to "sort my data"?

Labels: AMP Engine, Engine

It would be helpful to have the Read Uncommitted listed as a global runtime setting.

Most of the workflows I design need this set, so rather than risk forgetting to tick this option on one of my inputs, it would be beneficial to have it as a global setting.

For example, the user would be able to set specific inputs according to their needs while the global runtime setting checkbox remains unchecked.

However, if the user checked the global Read Uncommitted runtime setting, the whole workflow would automatically use an uncommitted read on all of its inputs.

When the user unchecks the global Read Uncommitted setting, only the inputs that were individually set up with this option would keep using read uncommitted.
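The override rule described above reduces to a logical OR evaluated at run time, without overwriting each input's own setting; that is what lets unchecking the global option restore the per-input choices. A minimal Python sketch with hypothetical names (`resolve_read_uncommitted`, with input names as dictionary keys):

```python
def resolve_read_uncommitted(global_setting, inputs):
    """Return the effective Read Uncommitted flag for each input.

    Global checkbox on: every input reads uncommitted.
    Global checkbox off: each input keeps its own setting.
    The per-input settings in `inputs` are never modified."""
    return {name: global_setting or own for name, own in inputs.items()}
```

With `inputs = {"orders": True, "customers": False}`, checking the global box makes both inputs read uncommitted, and unchecking it again leaves only `orders` reading uncommitted.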

Hello all,

In addition to the Create Index idea, I think the equivalent for Vertica may also be useful.

On Vertica, data is stored in projections, which are the equivalent of indexes on other databases, and a table is linked to those projections. When you query a table, the engine chooses the best-performing projection to query.

What I suggest: instead of a Create Index box, a Create Index/Projection box.

Best regards,

Simon

Hello Alteryx,

Would it be possible to extend the "Cache and Run" functionality to tools with multiple outputs as well? Our clients use the R and Python tools very frequently, and their runtimes tend to be quite long. For development purposes, it would be great to have caching available on these tools too.

Thank you very much for considering this idea.

Regards,

Jan Laznicka

Labels: Engine, Enhancement

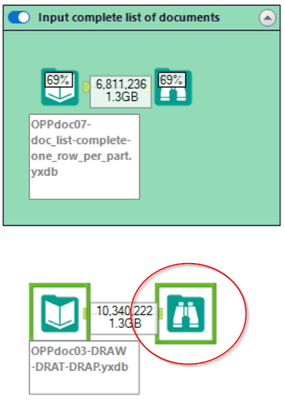

Quite often, I would love to be able to use Browse tools while the workflow is still running, once that specific Browse tool has completed (green box around it). This would help with debugging and save a lot of time.

In this case, the lower Browse tool would already be enabled.

Labels: Engine, Enhancement




