Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea? Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (in order to have an equivalent number of images for each of the labels),

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
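The two data-preparation points above can be sketched outside the tool. This is a minimal illustration in plain Python, not how the Image Recognition tool works internally: `balance_by_label` and `gray_to_rgb` are hypothetical helper names, the balancing is done by simple random downsampling to the smallest class, and whether the tool accepts images converted this way is an assumption.

```python
import random
from collections import defaultdict


def balance_by_label(samples, seed=0):
    """Downsample so every label keeps the same number of items.

    `samples` is a list of (item, label) pairs. Each label keeps as many
    items as the smallest label has, chosen at random.
    """
    by_label = defaultdict(list)
    for item, label in samples:
        by_label[label].append(item)
    if not by_label:
        return []

    target = min(len(items) for items in by_label.values())
    rng = random.Random(seed)  # fixed seed so the split is repeatable
    balanced = []
    for label, items in by_label.items():
        for item in rng.sample(items, target):
            balanced.append((item, label))
    return balanced


def gray_to_rgb(pixels):
    """Turn a 2-D grid of grayscale values into a grid of (r, g, b) triples."""
    return [[(value, value, value) for value in row] for row in pixels]
```

Calling `balance_by_label` on three "cat" files and one "dog" file would keep one file of each label; `gray_to_rgb` simply repeats the single channel three times.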

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will help more people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


Preface: I have only used the in-DB tools with Teradata so I am unsure if this applies to other supported databases.

When building a fairly sophisticated workflow with the in-DB tools, the workflow sometimes fails because the underlying queries run up against CPU or memory limits. This is most common when doing several joins back to back, as Alteryx sends them as one big query with various nested subqueries. With datasets in the hundreds of millions or billions of records, running this as one huge query can be extremely taxing for the DB. (It is possible to get around this by using the in-DB write-out to a temporary table as an intermediate step in the workflow.)

When a routine does hit an in-DB resource limit and the DB kills the query, Alteryx immediately fails the workflow run. Any "temporary" tables Alteryx creates are in reality permanent tables that Alteryx usually just drops at the end of a successful run. If the run does not end successfully because it hit a resource limit, these "temporary" (permanent) tables are not dropped. I only noticed this after building out a workflow and running up against a few resource limits, when I started getting database out-of-space errors. Upon looking into it, I found that all the previously created "temporary" tables were still there, taking up many TBs of space.

My proposed solution is for Alteryx's in-DB tools to drop any "temporary" tables they have created when a run ends, regardless of whether the entire module finished successfully.
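The requested behaviour amounts to a cleanup step that runs whether or not the workflow succeeds. Here is a minimal sketch of that pattern, assuming Python's built-in `sqlite3` as a stand-in for Teradata; the function name `run_with_cleanup` and the staging-table names are hypothetical, and this does not describe how Alteryx manages its tables.

```python
import sqlite3


def run_with_cleanup(conn, steps):
    """Run each step, then drop every staging table, even after a failure.

    `steps` is a list of (table_name, sql) pairs; each sql statement is
    expected to create the staging table with that name.
    """
    created = []
    try:
        for table_name, sql in steps:
            conn.execute(sql)
            created.append(table_name)
    finally:
        # Runs on success and on failure, so no staging table is left behind.
        for table_name in reversed(created):
            conn.execute(f'DROP TABLE IF EXISTS "{table_name}"')
        conn.commit()
```

If the second of two steps fails, the error still reaches the caller, but the table created by the first step has already been dropped by the time it does.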

Thanks,

Ryan

- Category Connectors
- Category In Database
- Data Connectors
- Engine

When using Server data sources, Alteryx can take a long time to query metadata, particularly for long, complex queries. This often happens when opening a module or clicking on the Input tool to edit its properties.

This leads to frustration with these modules. It would be good for Alteryx to cache the metadata (i.e. columns) from these inputs and prompt the user to reuse this cached data if the query takes longer than, say, 2 seconds to return.
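The caching idea can be sketched as a lookup with a time limit. This is only an illustration under stated assumptions: `MetadataCache` and the `fetch_columns` callable are hypothetical names, the 2-second default comes from the post, and the returned "cached" flag is where a real UI could prompt the user.

```python
import concurrent.futures
import hashlib


class MetadataCache:
    """Remember column lists per query and fall back to them on slow lookups."""

    def __init__(self, fetch_columns, timeout_seconds=2.0):
        self._fetch = fetch_columns      # callable: query text -> list of column names
        self._timeout = timeout_seconds
        self._cache = {}
        self._pool = concurrent.futures.ThreadPoolExecutor(max_workers=4)

    def columns(self, query):
        """Return (columns, source) where source is "live" or "cached"."""
        key = hashlib.sha256(query.encode("utf-8")).hexdigest()
        future = self._pool.submit(self._fetch, query)
        try:
            fresh = future.result(timeout=self._timeout)
        except concurrent.futures.TimeoutError:
            if key in self._cache:
                # Too slow: hand back the last known columns instead.
                return self._cache[key], "cached"
            fresh = future.result()      # nothing cached yet, so wait it out
        self._cache[key] = fresh
        return fresh, "live"
```

The first lookup for a query always waits for the live result; later lookups that exceed the time limit return the stored columns.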

- Category Input Output
- Data Connectors

Given that Redshift prefers many small files for bulk loading, it would be good to have a maximum record limit in the S3 Upload tool (similar to the functionality in S3 Download).

Another useful feature for the S3 Upload tool would be the ability to append a suffix to file names, such as datetimestamp_001, 002, 003, etc., similar to the current Output tool.
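Both requests, a record limit per file and numbered file names, can be sketched together. This is a rough illustration, not the S3 Upload tool's behaviour: `split_for_upload`, the `prefix` argument, and the `.csv` extension are assumptions, and the actual upload step is left out.

```python
from datetime import datetime
from itertools import islice


def split_for_upload(records, max_records, prefix, now=None):
    """Yield (file_name, chunk) pairs with at most `max_records` rows each."""
    if max_records < 1:
        raise ValueError("max_records must be at least 1")
    # One timestamp for the whole batch, so all parts share the same stem.
    stamp = (now or datetime.now()).strftime("%Y%m%d%H%M%S")
    iterator = iter(records)
    part = 1
    while True:
        chunk = list(islice(iterator, max_records))
        if not chunk:
            break
        yield f"{prefix}_{stamp}_{part:03d}.csv", chunk
        part += 1
```

Each yielded pair could then be written out and uploaded as its own object, which gives the loader many small files instead of one large one.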

- Category Connectors
- Category Input Output
- Data Connectors

Hi,

Carlson Companies is moving to a Vertica environment and it would be great if that was supported with the In-database tools. That would definitely help and expand the use of Alteryx at our company!

Thanks,

Tyler Mittelstadt

- Category In Database
- Data Connectors

At the moment, we are not able to use input data field names and their values in the Output tool, mainly in the Pre-SQL and Post-SQL statements. I have seen some discussions on this in the community, and we need it in many scenarios. It would be great to have this option.

- Category Input Output
- Data Connectors

I think it would be great to add metadata to a yxdb. For example, I was backtracking and trying to figure out which module/app I used to create an old yxdb. For now, I use Notepad++ and do a "Find in Files" search. Wouldn't it be great if the module path were available when you look at the properties of a yxdb in Alteryx?

- Category Input Output
- Data Connectors

It would be cool if a connector line would turn red when you select it, making it easier to trace the path (similar to how the lines turn red when you click on a join tool).

- Category Connectors
- Data Connectors

Is it possible to add some color coding to the In-DB tools? I am building out models In-DB and I end up with a sea of navy blue icons. Maybe the colors could generally correspond to those of the other tools. For example, the Summarize tool would be orange, the Formula tool lime green, and so on.

- Category In Database
- Data Connectors

I have several .yxdb files that I’ve been appending to daily from a SQL Server table in order to extend the length of time that data is retained.

They’re massive tables, but I may only need one or two rows.

I had hoped to decrease the time it takes to get data from them by running a query on them (or a dynamic query/input), as opposed to using a filter or joining to an existing data set whose matching values would produce the same result as a filter.

Essentially, the input of .yxdb would have the option of inputting the full table or a SQL query just like a data connection.

- Category Input Output
- Data Connectors

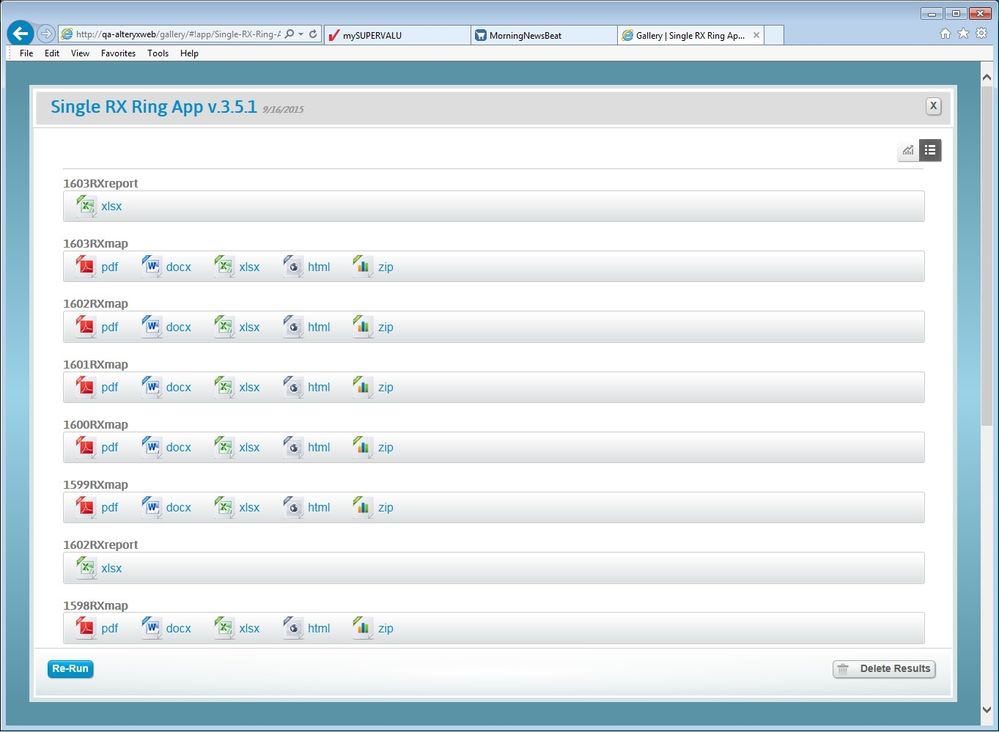

I run a report generator that can leave you having to save several files one at a time.

It would be nice to be able to save multiple files at once, whether by using check boxes or a shift-select method. If this already exists, great! Where can I find out how to do it? If not, can it be added as a feature?

- Category Input Output
- Data Connectors

As a business user, I find it very difficult to move from Alteryx functions to SQL in the In-Database tools; I need to learn a whole new language.

In the short term, Alteryx should provide a simple function reference, as similar as possible to the one in the Formula tool, for building formulas in the In-Database tools.

Longer term, I'd like there to be a parser from Alteryx formulae to SQL, so I can just write my favourite Alteryx formula (or a subset thereof) and Alteryx handles the conversion to SQL.
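To make the "subset" idea concrete, here is a toy translator. It is a sketch under heavy assumptions, not an Alteryx feature: the four-entry `FUNCTION_MAP` is a hypothetical, partial mapping to generic SQL names (real dialects differ, for example in how they spell the length function), and operators, conditionals, and data types are not handled at all.

```python
import re

# Assumed, partial mapping from Formula-tool function names to generic SQL.
FUNCTION_MAP = {
    "uppercase": "UPPER",
    "lowercase": "LOWER",
    "trim": "TRIM",
    "length": "LENGTH",
}

TOKEN = re.compile(
    r"""
    \[(?P<field>[^\]]+)\]                 # [Field Name]
  | (?P<string>'[^']*'|"[^"]*")           # quoted string literal
  | \b(?P<func>[A-Za-z_]\w*)(?=\s*\()     # name directly followed by an opening parenthesis
    """,
    re.VERBOSE,
)


def formula_to_sql(expression):
    """Translate a small subset of formula syntax into SQL text."""

    def convert(match):
        if match.group("field") is not None:
            # Field references become double-quoted SQL identifiers.
            return '"' + match.group("field") + '"'
        if match.group("string") is not None:
            # String literals become single-quoted SQL literals.
            inner = match.group("string")[1:-1]
            return "'" + inner.replace("'", "''") + "'"
        name = match.group("func")
        return FUNCTION_MAP.get(name.lower(), name)  # unknown names pass through

    return TOKEN.sub(convert, expression)
```

With this mapping, `Length(Trim([Sales (USD)]))` would come out as `LENGTH(TRIM("Sales (USD)"))`. A real parser would need a proper grammar and per-database function tables.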

- Category In Database
- Data Connectors

The challenge:

We have hundreds of SOAP-based Salesforce (SF) connectors in our scheduled modules that were created with Alteryx 9.0-9.5. Alteryx 10.0+ now uses REST API-based SF connectors, so we will have to replace all of these connectors when we move to 10.0+.

Proposed idea:

Alteryx creates an automated process for converting SOAP SF connectors to REST API SF connectors, so that when you open an old module in 10.0+, they are automatically updated.

This seems feasible, as the information supplied by Alteryx users for the SOAP SF connectors is sufficient for the REST API SF connectors to work (i.e. URL, username, password, security token, table name, fields, WHERE clause, etc.).

Thanks,

Jeremy

- Category Connectors
- Data Connectors

It's definitely not good UX that the full browse is now in the Output window. I usually have my Output window on auto-hide, so it now takes a few extra clicks to see the browses. Can we have the Browse Everywhere tab in both the Output window and the Configuration panel?

- Category Input Output
- Data Connectors


I was recently surprised to find that Alteryx doesn't already have a connector to upload to SFTP sites. I've managed to work around it with RunCommand and some external programs, but it's very cumbersome. A simple SFTP upload connector would be a great addition to Alteryx.

- Category Input Output
- Data Connectors

At TargetSmart, we create a lot of CSV deliverables for our customers. Since Alteryx differentiates between blank strings and null values (a good thing), the CSV output is not consistent between the two without an explicit multi-field formula step to set all null to empty strings (or vice versa). This is an easy fix for us. However, in some cases we have very large files with thousands of fields and millions of records. For these instances, the workflow run-time is greatly increased by the multi-field formula. If possible, I was wondering if adding a checkbox option to CSV output steps (“Make null/empty consistent” or “Never quote empty/null values”) would possibly be a more efficient approach as the check could be part of the output step (which I assume is native C++) versus the Multi-field formula (which I assume has some level of inefficiency in interpreting the formula dynamically).
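The idea of folding the null handling into the output step can be illustrated with a small writer that normalizes values as each row is written, rather than in a separate pass over the data. This is a sketch in Python's standard `csv` module, not Alteryx's output code; `write_csv` and its `null_as` parameter are hypothetical names.

```python
import csv
import io


def write_csv(rows, out, null_as=""):
    """Write rows, replacing None with `null_as` as each row is written."""
    writer = csv.writer(out, lineterminator="\n")
    for row in rows:
        # Nulls and empty strings end up identical in the output.
        writer.writerow([null_as if value is None else value for value in row])
```

Writing to an `io.StringIO()` buffer is enough to try it out; rows containing `None` and rows containing `""` in the same position produce the same text.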

- Category Input Output
- Data Connectors

I know you can add a field for "today" and then use that field to append to the filename, so the output ends up as Output_Date.xlsx, but it would be great to be able to do that without adding a new field for the current date. If it were simply an option in the file output settings dialog, that would be great.
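The requested option boils down to stamping the date into the file name at write time. A minimal sketch, assuming a hypothetical helper `dated_name` and an ISO-style date format (the post does not specify one):

```python
from datetime import date
from pathlib import Path


def dated_name(path, today=None, fmt="%Y-%m-%d"):
    """Return `path` with the date inserted before the extension."""
    p = Path(path)
    stamp = (today or date.today()).strftime(fmt)
    return str(p.with_name(f"{p.stem}_{stamp}{p.suffix}"))
```

For `Output.xlsx` on 5 March 2024, this would give `Output_2024-03-05.xlsx`, with no extra date field in the data.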

- Category Input Output
- Data Connectors

It would be good to have the capability to read and write R datasets via the Input/Output tools, alongside the other supported data formats (CSV, Excel, SAS, SPSS, etc.).

- Category Input Output
- Category Predictive
- Data Connectors
- Desktop Experience

