Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

> and lastly, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
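The balanced split asked for in the second point could work roughly as below. This is a minimal sketch, not part of the Image Recognition tool: `balanced_split` is a hypothetical helper that takes `(path, label)` pairs, downsamples every label to the size of the rarest one, and then splits each label into training and validation sets with the same fraction.

```python
import random
from collections import defaultdict


def balanced_split(samples, train_fraction=0.8, seed=0):
    """Split (path, label) pairs so that every label has the same image count.

    Hypothetical helper: each label is downsampled to the size of the rarest
    label, then each label's images are divided between train and validation
    using the same fraction.
    """
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)

    rng = random.Random(seed)  # fixed seed makes the split reproducible
    per_label = min(len(paths) for paths in by_label.values())
    n_train = int(per_label * train_fraction)

    train, validation = [], []
    for label in sorted(by_label):
        chosen = rng.sample(by_label[label], per_label)  # random subset of equal size
        train += [(path, label) for path in chosen[:n_train]]
        validation += [(path, label) for path in chosen[n_train:]]
    return train, validation
```

Downsampling discards images from the larger labels; oversampling the smaller labels would be the alternative when images are scarce.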

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will help a greater number of people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


Request: Google Drive Output Tool to be able to set the maximum records per file and create multiple files

For the regular Alteryx Output Tool, we're able to set maximum records per file. This is helpful in a variety of ways - we use it as part of a workflow where the output gets uploaded into Salesforce and we can only load 5,000 records at a time. I also use it to split up large CSV files to stay under Excel's ~1M row limit, so my teammates without Alteryx can open their reports without losing data.

The Google Drive Output does not have this ability to split based on the number of records. If I use the RecordID Tool plus a Filter, Alteryx crashes due to a bug with RecordID + GDrive Output (it's currently at the Accepted Defect stage).

It would be very helpful to have the same functionality that we have with the regular Output Tool.
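For reference, the "maximum records per file" behavior of the regular Output Tool amounts to chunking the records and writing one file per chunk. The sketch below assumes local CSV output; `write_in_chunks` is a hypothetical helper, and uploading the resulting files to Google Drive is not covered.

```python
import csv
from pathlib import Path


def write_in_chunks(header, rows, out_dir, stem, max_records):
    """Write rows to CSV files holding at most max_records data rows each.

    Hypothetical helper: files are named <stem>_1.csv, <stem>_2.csv, ...
    and every file repeats the header row. Returns the paths written.
    """
    if max_records < 1:
        raise ValueError("max_records must be at least 1")
    rows = list(rows)
    paths = []
    for start in range(0, len(rows), max_records):
        path = Path(out_dir) / f"{stem}_{start // max_records + 1}.csv"
        with path.open("w", newline="") as handle:
            writer = csv.writer(handle)
            writer.writerow(header)
            writer.writerows(rows[start:start + max_records])  # one chunk per file
        paths.append(path)
    return paths
```

With `max_records=5000`, 12,000 records would produce three files holding 5,000, 5,000 and 2,000 records.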

- Category Connectors

- Data Connectors

Have you ever had the business deliver an Excel (EEK!) file to be passed into Alteryx with a different number of header rows (because it looks pretty and is convenient)? Never, you say? Lies!

I would suggest adding an option to the Input Data Tool that would give us the ability to concatenate multiple header rows. This would help enable accurate data profiling for columns when output and eliminate losses from unnecessary conversion errors. Currently, the options allow us to Start Data Input on Line X; however, if the header for a column spans multiple rows, the header has to be entered manually after input, because we can only select the lowest header row to make sure the data is passed accurately. The solution would be the ability to specify the number of rows that contain headers, concatenate them into a single row (ignoring nulls and carriage returns), and then output that as the header.

The current functionality, in a situation where each column has a variable number of header rows, causes forced conversion errors, such as a numeric value being converted to a string in scientific notation.
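The proposed header option could work roughly as follows: take the first N rows as header rows and join the non-empty cells of each column from top to bottom. This is a minimal sketch; `combine_header_rows` is a hypothetical helper and the space separator is an assumption.

```python
def combine_header_rows(header_rows, sep=" "):
    """Collapse several header rows into a single header row.

    For each column, the non-empty cells are joined from top to bottom.
    None and blank cells are skipped, and str.split() with no arguments
    breaks on any whitespace, so carriage returns and line feeds inside a
    cell collapse into single spaces.
    """
    width = max(len(row) for row in header_rows)
    combined = []
    for col in range(width):
        parts = []
        for row in header_rows:
            cell = row[col] if col < len(row) else None  # ragged rows count as blank
            if cell is None:
                continue
            text = " ".join(str(cell).split())
            if text:
                parts.append(text)
        combined.append(sep.join(parts))
    return combined
```

Data rows would then start right after the header rows, e.g. `header = combine_header_rows(rows[:3])` and `data = rows[3:]` for a three-row header.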

- Category Input Output

- Data Connectors

Hi everyone,

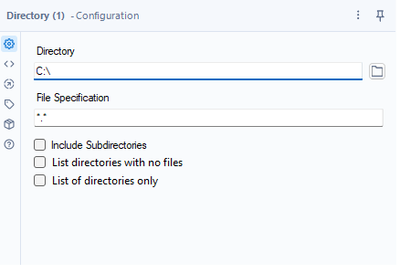

Add two additional features to a directory tool. Something like this:

Use cases:

1. Since it is not possible to use a Folder Browse on the Gallery, this could help a basic user pick from a drop-down list of possible folders.

2. Directory analysis for cleaning purposes: currently, if you want to get a list of the folders with Alteryx, it takes forever on big file servers because Alteryx maps all the files.

Both are achievable today through regex or a batch (.bat) script.
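As an illustration of the second use case, listing only folders does not require collecting every file. A minimal sketch using Python's `os.scandir`, assuming a locally reachable or UNC path; `list_folders` is a hypothetical helper. File entries are skipped rather than collected, and `DirEntry.is_dir()` can often be answered from the directory listing itself without an extra `stat` call; whether this is faster on a given file server is an assumption.

```python
import os


def list_folders(root, recursive=False):
    """Return the sorted folder paths under `root`, ignoring files.

    Hypothetical helper: uses an explicit stack instead of recursion so deep
    trees do not hit Python's recursion limit.
    """
    folders = []
    stack = [root]
    while stack:
        current = stack.pop()
        try:
            with os.scandir(current) as entries:
                for entry in entries:
                    if entry.is_dir(follow_symlinks=False):
                        folders.append(entry.path)
                        if recursive:
                            stack.append(entry.path)
        except PermissionError:
            continue  # skip folders we are not allowed to read
    return sorted(folders)
```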

Thank you,

Fernando Vizcaino

- Category Input Output

- Data Connectors

When creating a connection using DCM (for example, ODBC for SQL), the process requires an ODBC Data Source Name (see screenshot 1 below).

However, the alias manager (another way to make database connections) does allow DSN-free connections, which are essential for large enterprises (see screenshot 2 below).

NOTE: the connection manager screens do have another option, Quick Connect, which seems to allow DSN-free connections, but it is non-intuitive: you're asked to type in the name of the driver yourself, which seems to be an obvious failure point (especially since the list of all installed drivers can be read straight from the registry).

Could we please change DCM to use the same interfaces and concepts as the alias screens, so that all DCM connections can easily be created without requiring an ODBC DSN, and so that DSN-free connections are the default mode of operation?

Screenshot 1: DCM connection

Screenshot 2: alias manager connection

cc: @wesley-siu @_PavelP @ToddTarney

- Category Connectors

- Data Connectors

For companies that have migrated to OneDrive/Teams for data storage, employees need to be able to dynamically input and output data within their workflows in order to schedule a workflow on Alteryx Server and avoid building batch macros.

With many organizations migrating to OneDrive, a Dynamic Input/Output tool for OneDrive and SharePoint is needed.

- The existing Directory and Dynamic Input tools only work with UNC paths and cannot be leveraged for OneDrive or SharePoint.

- The existing OneDrive and SharePoint tools do not have a dynamic input or output component to them.

- Users have to build workarounds and custom macros for a common problem.

- Users have to map the OneDrive folders to their machine (and to the server if the workflow is published to the Gallery).

- This option generates a lot of maintenance, especially on Server, to free up the space consumed by the local copy when outputting data.

The enhancement should have the following components:

OneDrive/SharePoint Directory Tool

- Ability to read a single folder within OneDrive, with the option to include or exclude its subfolders

- Ability to retrieve Creation Date

- Ability to retrieve Last Modified Date

- Ability to identify file type (e.g. .xlsx)

- Ability to read Author

- Ability to read Last Modified By

- Ability to generate the specific web path for the files

OneDrive/SharePoint Dynamic Input Tool

- Receive the input from the OneDrive/SharePoint Directory Tool and retrieve the data.

Dynamic OneDrive/SharePoint Output Tool

- Dynamically write the output from the workflow to individual files in the same location within a specific directory

- Dynamically write the output to multiple tabs on the same file within the directory.

- Dynamically write the output to a new folder within the directory

- Category Connectors

- Category Input Output

- Data Connectors

I would like to raise the idea of creating a feature that resolves the repetitive authentication problem between Alteryx and Snowflake.

This is the same issue that was raised in the community forum on 11/6/18: https://community.alteryx.com/t5/Alteryx-Designer-Desktop-Discussions/ODBC-Connection-with-ExternalB...

Can a feature be added to store the authentication for the duration of the session and eliminate the browser popup? The solution proposed in that thread eliminates the prompt for credentials; however, it does not eliminate the browser popups. For the Input/Output function, this opens four new browser windows, one for each time Alteryx tests the connection.

- Category Connectors

- Data Connectors

My team relies heavily on Dremio.

It would be great if the Alteryx team added Dremio as a dedicated Input data source; it would make configuring and running things much easier for us in the future.

Thanks!

- Category Input Output

- Data Connectors

As an Alteryx Designer user, I would like the ability to write .hyper files to a subdirectory on Tableau Server to make my Tableau site easier to manage.

- Category Connectors

- Data Connectors

Users should get an alert that a file is open when using the Input Tool. Currently, Alteryx just hangs when attempting to use an open file in an Input Tool.

- Category Input Output

- Data Connectors

Hello all,

As of today, we can easily copy or duplicate a table with the In-Database tools. This is really useful when you want data from the production environment in the development environment.

But can we really?

Short answer: no, we can't in these cases:

- partitions

- any constraints, such as primary/foreign keys

And even if support for these were implemented, it would still mean setting these parameters manually.

So my proposal is simply a "clone table" tool that would clone the table from the SHOW CREATE TABLE statement and just let you specify the destination path (base.table).
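The clone idea can be illustrated with SQLite, which keeps each table's original CREATE TABLE text in `sqlite_master`; that text plays the role of SHOW CREATE TABLE here. This is a sketch under that assumption, not Alteryx behavior: `clone_table` is a hypothetical helper, SQLite has no partitions, and indexes and triggers (stored as separate `sqlite_master` entries) are not copied. On MySQL or Hive, the DDL would come from SHOW CREATE TABLE instead.

```python
import re
import sqlite3


def clone_table(conn, source, target, copy_rows=True):
    """Create `target` from the stored CREATE TABLE text of `source`.

    Hypothetical helper. Column types and PRIMARY KEY, REFERENCES and CHECK
    constraints come along because the DDL itself is reused. Indexes and
    triggers live in separate sqlite_master rows and are not copied.
    """
    row = conn.execute(
        "SELECT sql FROM sqlite_master WHERE type = 'table' AND name = ?",
        (source,),
    ).fetchone()
    if row is None:
        raise ValueError(f"table {source!r} not found")

    # Replace only the table name right after CREATE TABLE; (?!\w) stops
    # "order" from matching the start of "orders". Bracket or backtick
    # quoting is not handled.
    pattern = r'^\s*CREATE\s+TABLE\s+"?' + re.escape(source) + r'"?(?!\w)'
    ddl, count = re.subn(
        pattern, lambda _m: f'CREATE TABLE "{target}"', row[0], count=1, flags=re.IGNORECASE
    )
    if count != 1:
        raise ValueError("could not locate the table name in the stored DDL")

    conn.execute(ddl)
    if copy_rows:
        conn.execute(f'INSERT INTO "{target}" SELECT * FROM "{source}"')
    conn.commit()
```

Because the target table is created from the source's own DDL, constraints are carried over without setting them manually, which is the point of the proposal.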

Best regards,

Simon

- AMP Engine

- Category In Database

- Data Connectors

- Engine

Hi,

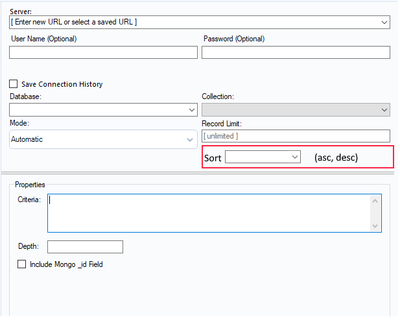

Not sure if this idea has already been posted (I was not able to find it), but let me try to explain.

When I use the MongoDB Input tool to query the AlteryxService MongoDB (to identify issues on the Gallery), I have to extract all data from the AS_Result collection.

The problem is that there is a huge amount of data here, and extracting and then parsing _ServiceData_ (a blob) consumes time and system resources.

The solution I am proposing is to add a sorting option to the Mongo Input tool: a simple choice of ASC or DESC order.

With that, I could, for example, extract the last 200 records and do my investigation instead of extracting everything.

In addition, it would be much easier to estimate the daily workload and extract (via the scheduler) only the amount of data we need to analyze every day and load the results into an external DB.

Thanks,

Sebastian

- Category Connectors

- Data Connectors

Connect to a PowerPivot data model or an .atomsvc file.

- Category Connectors

- Data Connectors

With data sharing, you can share live data with relative security and ease across Amazon Redshift clusters, AWS accounts, or AWS Regions for read purposes.

Data sharing can improve the agility of your organization. It does this by giving you instant, granular, and high-performance access to data across Amazon Redshift clusters without the need to copy or move it manually.

The AWS data sharing feature does not work in Alteryx v2020.4.

We would like to know whether Alteryx supports Redshift data sharing.

It uses the same Redshift ODBC or Simba ODBC drivers; the only difference is that the data is shared across clusters. Earlier we saw a limitation on the Alteryx side (not able to read data share objects), so we wanted to check whether it has been resolved in newer versions of Alteryx. Reference link:

https://docs.aws.amazon.com/redshift/latest/dg/datashare-overview.html

- Category In Database

- Data Connectors

From what I can tell using ProcMon, when the Directory tool lists files (including subdirectories), the Alteryx Engine currently runs a single-threaded process.

When you're trying to find files by checking recursively in large network paths, this can take hours to run.

It would be great if the tool split up the list of directories (perhaps by going two or three levels down first) and then ran each of those recursive paths in parallel.

While it is possible to do this with a custom Python or cmd/PowerShell command, it would be great if it were simply a native part of the application.

- Category Input Output

- Data Connectors

My organization uses the SharePoint Files Input and SharePoint Files Output tools (v2.1.0) and connects with the Client ID, Client Secret, and Tenant ID. After a workflow is saved and scheduled on the server, users receive the error "Failed to connect to SharePoint AADSTS700082: The refresh token has expired due to inactivity" every 90 days. My organization is not able to extend the 90-day limit or create non-expiring tokens.

It would be great if the SharePoint connectors could automatically refresh the token when it expires, so users don't have to open the workflow and do it manually.

- Category Connectors

- Data Connectors

There should be an option for Alteryx to intelligently convert an existing SQL query or complex logic into a high-level Alteryx workflow with suggested tools, which developers can then modify.

For example, Salesforce Einstein Analytics has an option where an existing dataflow (the traditional way of performing data prep) can be converted with a single click into a recipe (a premium version of a dataflow with advanced features). The user can then make additional modifications or enhancements on top of it.

- Category In Database

- Data Connectors

Introduce CTEs and temp tables when reading from SQL databases into Alteryx. I have faced use cases where I need to bring in a table from multiple source tables based on a certain delta condition. However, since the SQL queries tend to be complex, I want the option to wrap them in a CTE and then use the CTE as an input for In-DB processing in Alteryx workflows.
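For illustration, a CTE that isolates a delta condition looks like the query below. The sketch uses SQLite as a stand-in database; the `orders` and `customers` tables, the `updated_at` column and the `load_delta` helper are hypothetical, and whether a given In-DB connection accepts a WITH query as its input depends on the database and driver.

```python
import sqlite3

# changed_orders applies the delta condition, totals aggregates it, and the
# final SELECT joins totals to customers as if it were a regular table.
DELTA_QUERY = """
WITH changed_orders AS (
    SELECT id, customer_id, amount
    FROM orders
    WHERE updated_at > :since
),
totals AS (
    SELECT customer_id, COUNT(*) AS n_orders, SUM(amount) AS changed_amount
    FROM changed_orders
    GROUP BY customer_id
)
SELECT c.name, t.n_orders, t.changed_amount
FROM totals AS t
JOIN customers AS c ON c.id = t.customer_id
ORDER BY c.name
"""


def load_delta(conn: sqlite3.Connection, since: str):
    """Run the CTE query for rows updated after `since` (ISO-8601 date text)."""
    return conn.execute(DELTA_QUERY, {"since": since}).fetchall()
```

Each CTE can be read and tested on its own, which is what makes long delta queries easier to maintain than nested subqueries.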

- Category In Database

- Data Connectors

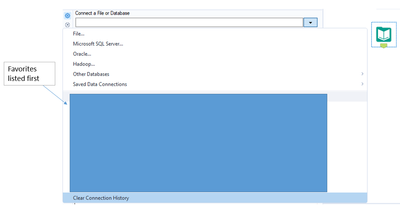

In the Input tool, I rely heavily on the recent connection history list. As soon as a file falls off of this list, it takes me a while to recall where it's saved and navigate to the file I'm wanting to use. It would be great to have a feature that would allow users to set their favorite connections/files so that they remain at the top of the connection history list for easy access.

- Category Input Output

- Data Connectors

Hello,

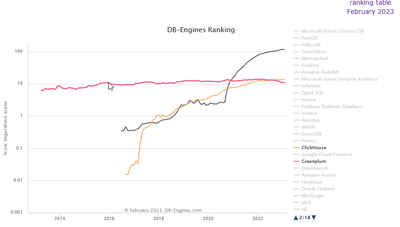

Just like MonetDB or Vertica, ClickHouse is a column-store database, claiming to be the fastest in the world. It's available in the cloud (like Snowflake) and on Linux and macOS (where it's free, since it's open source). It's also very well ranked among analytics databases (https://db-engines.com/en/system/ClickHouse), and supporting it would be a good differentiator from competitors.

https://clickhouse.com/

It has become more popular than Greenplum, which is supported (black: Snowflake, red: Greenplum, orange: ClickHouse).

Best regards,

Simon

- AMP Engine

- Category In Database

- Data Connectors

- Engine

Hello all,

MonetDB is a very light, fast, open-source database available here:

https://www.monetdb.org/

I really enjoy it: it works pretty well with Tableau, and it's a good introduction to column-store concepts and analytics with SQL.

It has also gained a lot of popularity in recent years:

https://db-engines.com/en/ranking_trend/system/MonetDB

Sadly, Alteryx does not support it yet.

Best regards

- AMP Engine

- Category In Database

- Data Connectors

- Engine
