Alteryx Designer Desktop Ideas


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by displaying the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

> and, at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
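Until grayscale input is supported, one common workaround is to replicate the single gray channel into three identical R, G and B channels before feeding images to an RGB-only model. The sketch below does this in pure Python on an image stored as rows of 0-255 integers; that representation, the function name and the tiny 2x2 image are assumptions for illustration (with Pillow, `Image.convert("RGB")` achieves the same thing on real image files).

```python
def gray_to_rgb(image):
    """Replicate a single-channel image (rows of 0-255 ints) into 3 identical channels.

    Hypothetical helper: each gray value v becomes the RGB triple (v, v, v).
    """
    return [[(v, v, v) for v in row] for row in image]


# A tiny hypothetical 2x2 grayscale "digit"
digit = [[0, 255], [128, 0]]
print(gray_to_rgb(digit))
# [[(0, 0, 0), (255, 255, 255)], [(128, 128, 128), (0, 0, 0)]]
```

The model then sees three equal channels, so no color information is invented; the image simply satisfies the RGB input requirement.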

Question: will you allow users to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will help a greater number of people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


To speed up our workflows (which rely very heavily on databases and DB queries), it would be valuable to be able to inspect the actual queries that were run against SQL Server, so that we can add indexes to optimize these queries directly in SQL Enterprise Manager (or the equivalent on any DB platform; we have the same problem on DB2).

The idea would be to have a simple screen where I can run the workflow with SQL profiling turned on, and then capture the queries issued by either the entire workflow (grouped by connection, so that I can tune each database with only the queries that apply to it) or a specific tool on the canvas.

I appreciate that this is not something a large portion of your users would need, but I'm sure it would be helpful for enterprise/corporate customers.

Thank you

Sean

- Category In Database

- Data Connectors

As of today, you must use a Data Stream Out tool and then an HDFS tool to write a table to HDFS as CSV. Given that the credentials are the same and that the address in the DSN is the address of the HDFS, it seems possible to keep the data in Hadoop and simply move it from the database to HDFS.

- Category In Database

- Data Connectors

Currently we can't use any PaaS MongoDB products (MongoDB Atlas / Cosmos DB) because Alteryx Gallery doesn't support SSL when connecting to the MongoDB back end.

SSL is also good security practice when the MongoDB instance runs on a separate machine.

- Category In Database

- Data Connectors

I would like to see In-DB batch macros. We are currently joining tables with 30 million+ records and have to run them through the standard tools because we are unable to process them In-DB, which gives a 20% improvement in processing speed based on performance profiling.

- Category In Database

- Category Macros

- Data Connectors

- Desktop Experience

Current In-DB connections to SAP HANA via ODBC extract only the technical field names, not the Field Descriptions. This forces users to manually rename each field within the workflow, or to create a secondary In-DB connection to the HANA _SYS_BI tables and rename the fields dynamically. This second option only works if the descriptions are maintained in the BI tables (which is not always the case).

I have posted the workaround on the Community, but a standard fix would be welcome. DVW and Tableau both offer solutions that handle this issue seamlessly.
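The dynamic-rename workaround boils down to a lookup from technical name to description, with a fallback when no description is maintained. The sketch below assumes the metadata has already been read into a plain dictionary (for example from a _SYS_BI table); the function name, the sample SAP-style field names and the collision rule are illustrative assumptions, not the actual Community workaround.

```python
def rename_fields(columns, descriptions):
    """Return display names for technical field names.

    Hypothetical helper: uses the maintained description when present,
    falls back to the technical name when it is missing or blank, and
    appends the technical name if two fields share a description.
    """
    renamed = []
    seen = set()
    for col in columns:
        desc = (descriptions.get(col) or "").strip()
        name = desc if desc else col  # fallback: description not maintained
        if name in seen:
            name = f"{name} ({col})"  # keep output names unique
        seen.add(name)
        renamed.append(name)
    return renamed


cols = ["MATNR", "WERKS", "ZZCUSTOM"]
meta = {"MATNR": "Material Number", "WERKS": "Plant", "ZZCUSTOM": ""}
print(rename_fields(cols, meta))
# ['Material Number', 'Plant', 'ZZCUSTOM']
```

The fallback is what makes the workaround fragile in practice: any field without a maintained description silently keeps its technical name.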

- Category In Database

- Data Connectors

Please add the ability to concatenate a field in the In-DB Summarize tool, similar to the regular Summarize tool. This would make concatenating fields much faster by using the database's processing power instead of the local machine's.
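The difference between the two approaches can be sketched with Python's built-in `sqlite3` module standing in for the database (an assumption; the In-DB tool would target the connected database's own aggregate, e.g. PostgreSQL's `string_agg`). In the in-DB version the database concatenates and returns one row per group; in the local version every detail row is pulled to the client first. The table and column names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (customer TEXT, product TEXT)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("A", "apple"), ("A", "pear"), ("B", "plum")],
)

# In-DB: the database concatenates; only one row per customer comes back.
in_db = dict(conn.execute(
    "SELECT customer, group_concat(product, ',') FROM orders GROUP BY customer"
).fetchall())

# Local: every detail row is transferred, then concatenated on the client.
grouped = {}
for customer, product in conn.execute("SELECT customer, product FROM orders"):
    grouped.setdefault(customer, []).append(product)
local = {customer: ",".join(products) for customer, products in grouped.items()}


def normalize(result):
    """group_concat does not guarantee element order, so compare sorted pieces."""
    return {key: sorted(value.split(",")) for key, value in result.items()}


assert normalize(in_db) == normalize(local)
```

Both paths produce the same groups; the point of the request is that only the first one keeps the row transfer and string building inside the database.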

- Category In Database

- Category Transform

- Data Connectors

- Desktop Experience

Hello,

As of today, if you want to add a PostgreSQL In-Database connection, you may be confused:

However, the help states that PostgreSQL is supported In-Database.

https://help.alteryx.com/current/In-DatabaseOverview.htm

Whaaaaaaaaat?

Oh, I forgot to mention: with a little luck, you can find this help page: https://help.alteryx.com/current/DataSources/PostgreSQL.htm

Yep, you have to configure a "Greenplum" connection if you want to use PostgreSQL.

I think this is not user-friendly and can lead to mistakes, errors, frustration, and even lost sales for Alteryx.

Also, Greenplum and PostgreSQL will have separate features, so I think having two separate entries in the menu is appropriate.

Best regards,

Simon

- Category In Database

- Data Connectors

There should be an option for Alteryx to intelligently convert an existing SQL query or complex logic into a high-level Alteryx workflow with suggested tools, which developers can then modify.

For example, Salesforce Einstein Analytics can convert an existing dataflow (the traditional way of performing data prep) into a recipe (a premium version of a dataflow with advanced features) in a single click. The user can then make additional modifications/enhancements on top of it.

- Category In Database

- Data Connectors

It would be nice if AWS Glue had first-class support in Alteryx. This would allow Alteryx to connect more seamlessly to data sources defined in the Glue metastore catalog. That alone would be handy and save extra bookkeeping. AWS Glue also has an ETL language for executing workflows on a managed Spark cluster, paying only for use. Integrating this big data tool with Alteryx would be an interesting way to execute in-database Spark workflows without the extra overhead of cluster management or Alteryx connectivity.

- Category Connectors

- Category In Database

- Category Input Output

- Data Connectors

My company recently purchased some Alteryx licences with the hope of advancing its Data Science capability. The business is currently moving all its POS data from on-premises to a cloud environment and has identified Azure Cosmos DB as the ideal environment to house the streaming data. Having purchased the Alteryx licences, we now face the challenge of not being able to connect to Azure Cosmos DB, and we would like Alteryx to consider speeding up development of this connector.

- Category Connectors

- Category In Database

- Data Connectors

Please add the ability to specify indexes when creating a table with the Write Data In-DB tool.

When running Teradata SQL using the Connect In-DB tool, I need to create a table on the database using the Write Data In-DB tool and perform numerous updates before bringing the data to the PC. Currently there is no way to create a unique primary index (or any other index) when the Write Data In-DB tool creates a table, which causes Teradata to waste huge amounts of space. Today I created a table with 160 columns and 50K rows: it consumed over 20 gigabytes, 19.7 gigabytes of which was wasted space. In Teradata, the way to control wasted space (skew) is to define the index properly, which can't be done today.
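Why the primary index matters can be sketched with a toy model: Teradata distributes rows across AMPs by hashing the primary-index value, so a low-cardinality index piles rows onto a few AMPs. The sketch below assumes `zlib.crc32` as a stand-in for Teradata's row-hash function and uses the commonly quoted skew-factor formula `(1 - average / maximum) * 100` over per-AMP space; the AMP count and sample keys are hypothetical.

```python
import zlib
from collections import Counter


def amp_distribution(keys, num_amps):
    """Count rows per AMP when rows are placed by hashing the primary-index value.

    zlib.crc32 is only a stand-in for Teradata's real row-hash (an assumption).
    """
    counts = Counter(zlib.crc32(str(k).encode()) % num_amps for k in keys)
    return [counts.get(amp, 0) for amp in range(num_amps)]


def skew_factor(per_amp_rows):
    """Skew factor in percent: (1 - average / maximum) * 100."""
    avg = sum(per_amp_rows) / len(per_amp_rows)
    return (1 - avg / max(per_amp_rows)) * 100


NUM_AMPS = 10
unique_pi = amp_distribution(range(50_000), NUM_AMPS)     # e.g. a unique row id
constant_pi = amp_distribution(["X"] * 50_000, NUM_AMPS)  # e.g. a single-valued column
print(round(skew_factor(unique_pi), 1))    # low: rows spread roughly evenly
print(round(skew_factor(constant_pi), 1))  # 90.0: every row lands on one AMP
```

Space is allocated per AMP, so the fuller AMP effectively sets the table's footprint; a well-chosen (ideally unique) primary index keeps the skew factor near zero.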

- Category In Database

- Data Connectors

Hi,

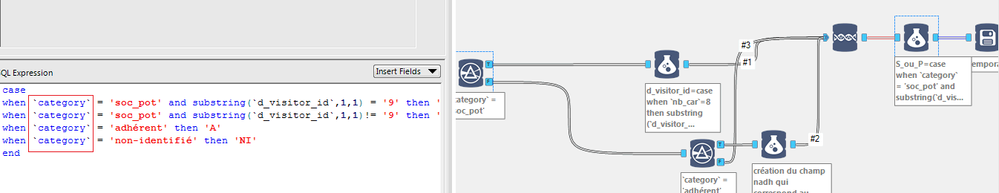

The standard In-DB connection configuration for PostgreSQL / Greenplum makes the "Data Stream In" In-DB tool load data row by row instead of using bulk mode.

As a result, loading data into an In-DB stream is very slow.

Example

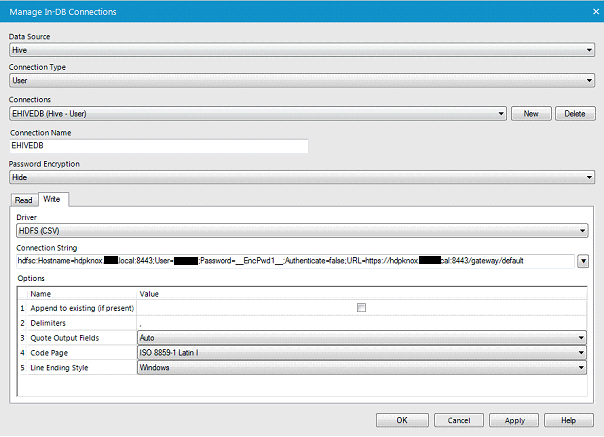

Connection configuration

Workflow

100,000 rows are sent to Greenplum using a "Data Stream In" In-DB tool.

This is a demo workflow; in practice the In-DB stream could be more complex and not replaceable by an in-memory Output Data tool.

Load time: 11 minutes.

It's slow and spams the database with an INSERT for each row.

However, there is a workaround.

We can configure an In-Memory connection using bulk mode:

And paste the connection string into the "Write" tab of our In-DB connection:

Load time: 24 seconds.

It's fast because it uses bulk mode.

This workaround has been validated by the Greenplum team but not by the Alteryx support team.

Could you please officially support this workaround?

Tested on version 2021.3.3.63061
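The gap between 11 minutes and 24 seconds is largely about how many statements reach the server. The sketch below uses Python's `sqlite3` as a stand-in database (an assumption) and compares one INSERT per row with multi-row `INSERT ... VALUES (...), (...)` batches. It only illustrates the round-trip reduction; a real bulk loader such as PostgreSQL's `COPY` goes further and is not what this code does. The function and table names are hypothetical.

```python
import sqlite3


def make_table():
    """Fresh in-memory database with a hypothetical two-column table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE t (id INTEGER, val TEXT)")
    return conn


def insert_row_by_row(conn, rows):
    """One INSERT statement per row: the pattern that floods the database."""
    statements = 0
    for row in rows:
        conn.execute("INSERT INTO t (id, val) VALUES (?, ?)", row)
        statements += 1
    conn.commit()
    return statements


def insert_batched(conn, rows, batch_size=100):
    """Multi-row INSERT statements with up to batch_size rows each.

    batch_size * columns must stay under SQLite's bound-parameter limit
    (999 on older builds), so 100 rows x 2 columns = 200 parameters is safe.
    """
    statements = 0
    for start in range(0, len(rows), batch_size):
        batch = rows[start:start + batch_size]
        placeholders = ", ".join(["(?, ?)"] * len(batch))
        params = [value for row in batch for value in row]
        conn.execute(f"INSERT INTO t (id, val) VALUES {placeholders}", params)
        statements += 1
    conn.commit()
    return statements


rows = [(i, f"row {i}") for i in range(1_000)]
slow_conn, fast_conn = make_table(), make_table()
slow_statements = insert_row_by_row(slow_conn, rows)  # 1000 statements
fast_statements = insert_batched(fast_conn, rows)     # 10 statements
```

Scaled to the 100,000-row example, batches of 100 would mean 1,000 statements instead of 100,000, which is the kind of reduction bulk mode provides.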

- Category In Database

- Data Connectors

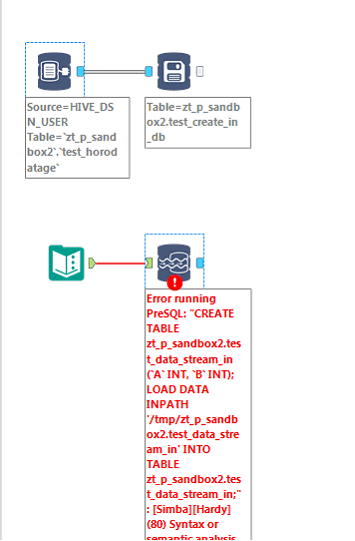

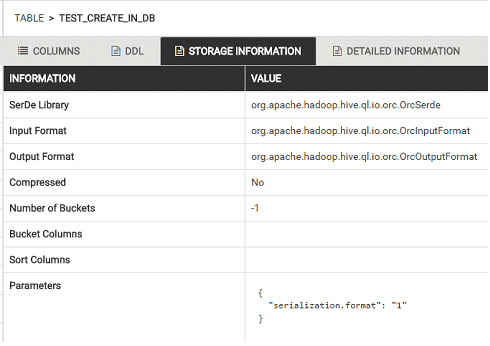

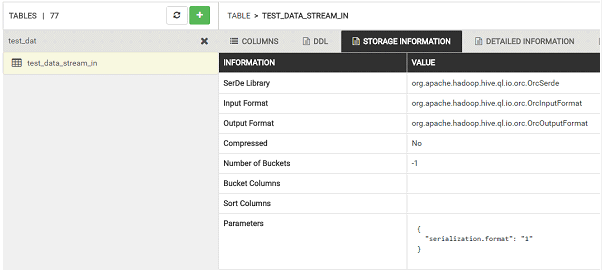

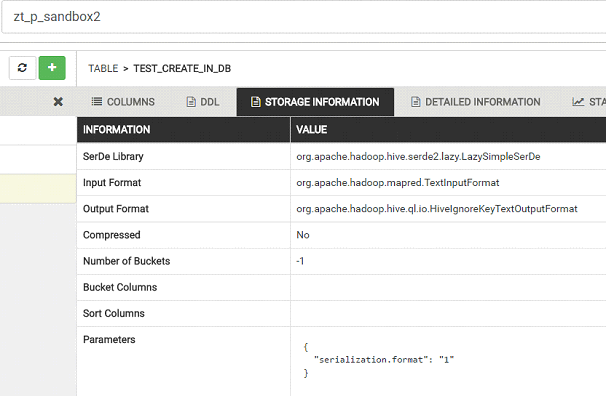

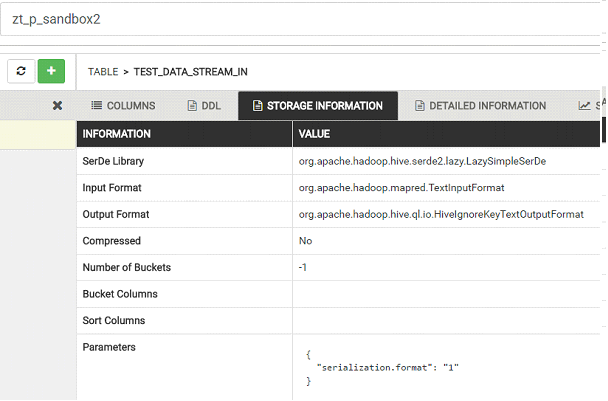

We face a big performance issue because, as of today, we cannot create tables in ORC format.

Connection parameters for write:

Without the text file option (the default parameter in Simba):

With the option, the workflow doesn't fail, but:

We want:

- to use HDFS to write the data with Data Stream In

- to write the new tables in ORC with Write Data In-DB

- Category In Database

- Category Input Output

- Data Connectors

Add in-database tools for SAP HANA.

Please star this idea so we can prioritize the request accordingly.

- Category In Database

- Data Connectors

Hello.

The word "category" is a SQL keyword (at least in Hive). However, it is quoted with backticks ( ` ), and the workflow runs to the end without a single issue. Its blue keyword highlighting may therefore mislead some users.
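The reason the quoted keyword is harmless is that a backtick-quoted name is always parsed as an identifier. A minimal sketch of that quoting rule, assuming Hive's convention that an embedded backtick is written as a doubled backtick; the function name and column list are hypothetical.

```python
def quote_hive_identifier(name):
    """Wrap an identifier in backticks, doubling any embedded backtick."""
    return "`" + name.replace("`", "``") + "`"


columns = ["category", "order id", "amount"]
sql = "SELECT " + ", ".join(quote_hive_identifier(c) for c in columns) + " FROM sales"
print(sql)
# SELECT `category`, `order id`, `amount` FROM sales
```

Since every generated name goes through the same quoting, the editor's keyword coloring is purely cosmetic here.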

- Category In Database

- Data Connectors

While In-DB tools are very helpful and cut down the time needed to write complex SQL, some steps are faster to write directly in SQL, such as window functions (OVER (PARTITION BY ...)). In Alteryx, we need multiple joins and summaries to perform a window function. It would be immensely helpful to have a SQL editor tool for In-DB workflows where we could edit the SQL code at any point in the workflow, or, even better, an "edit" function on every In-DB tool so we could customize the generated SQL before it is sent to the next tool.

This would cut development time immensely and streamline workflows, making Alteryx a true contender in the ETL solution space.

- API SDK

- Category Developer

- Category In Database

- Category Transform
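To make the comparison concrete, the sketch below computes a per-region total on each detail row twice: once with a single `SUM(...) OVER (PARTITION BY ...)` and once with the summarize-then-join pattern that In-DB tools currently require. It uses Python's `sqlite3` as a stand-in database (an assumption; window functions need SQLite 3.25 or later), and the table and data are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, rep TEXT, amount INTEGER)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [("East", "ann", 100), ("East", "bob", 300), ("West", "cy", 50)],
)

# Window function: each detail row keeps its columns plus the group total.
window_sql = """
SELECT region, rep, amount,
       SUM(amount) OVER (PARTITION BY region) AS region_total
FROM sales
ORDER BY region, rep
"""

# Same result without window functions: summarize, then join back.
join_sql = """
SELECT s.region, s.rep, s.amount, t.region_total
FROM sales AS s
JOIN (SELECT region, SUM(amount) AS region_total
      FROM sales GROUP BY region) AS t
  ON s.region = t.region
ORDER BY s.region, s.rep
"""

window_rows = conn.execute(window_sql).fetchall()
join_rows = conn.execute(join_sql).fetchall()
print(window_rows)
# [('East', 'ann', 100, 400), ('East', 'bob', 300, 400), ('West', 'cy', 50, 50)]
```

The results match, but the window version is one expression, while the join version needs an extra aggregation and join for every additional windowed column.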

It would be nice if Alteryx could run a Teradata stored procedure and/or macro with the ability to accept input parameters. This ability appears to exist for MS SQL Server. It seems odd that I can issue a SQL statement to the database via a pre- or post-processing command on an input or output, but can't call a stored procedure or execute a macro. The only way we have found to call a stored procedure is to create a Teradata BTEQ script and execute it with the Run Command tool. It works, but it is a bit messy and doesn't quite fit the no-code theme of Alteryx.

- API SDK

- Category Developer

- Category In Database

- Data Connectors

It would make life a bit easier and provide a more seamless experience if Gallery admins could create and share In-Database connections from "Data Connections" the same way in-memory connections can be shared.

I'm aware of workarounds (create System DSN on server machine, use a connection file, etc.), but those approaches require additional privileges and/or tech savvy that line-of-business users might not have.

Thanks!

- Category In Database

- Data Connectors

Currently the Databricks In-Database connector offers the following options when writing to the database:

- Append Existing

- Overwrite Table (Drop)

- Create New Table

- Create Temporary Table

This request is to add a fifth option that would execute:

- Create or Replace Table

Why is this important?

- Create or Replace is similar to Overwrite Table (Drop) in that it fully replaces the existing table. However, the key differences are:

  - Drop Table completely removes the table and its data from Databricks:

    - Any users or processes connected live to that table will fail during the write

    - No history is maintained for the table, a key feature of Databricks Delta Lake

  - Create or Replace does not remove the table:

    - Any users or processes connected live to that table will not fail, as the table is not dropped

    - History is maintained for table versions, a key feature of Databricks Delta Lake

While this request came from testing on Azure Databricks, the Databricks documentation for both Azure and AWS recommends using "Replace" rather than "Drop" and "Create" for Delta tables.

- Category In Database

- Data Connectors

Preface: I have only used the in-DB tools with Teradata so I am unsure if this applies to other supported databases.

When building a fairly sophisticated workflow using In-DB tools, the workflow may sometimes fail because the underlying queries run up against CPU / memory limits. This is most common when doing several joins back to back, as Alteryx sends them as one big query with various nested subqueries. When working with datasets of hundreds of millions or billions of records, this can be extremely taxing for the DB to run as one huge query. (It is possible to get around this by using In-DB write-out to a temporary table as an intermediate step in the workflow.)

When a routine does hit an In-DB resource limit and the DB kills the query, Alteryx immediately fails the workflow run. Any "temporary" tables Alteryx creates are in reality permanent tables that Alteryx usually just drops at the end of a successful run. If the run does not end successfully because it hit a resource limit, these "temporary" (permanent) tables are not dropped. I only noticed this after building out a workflow and running up against a few resource limits: I then started getting database out-of-space errors. Upon looking into it, I found that all the previously created "temporary" tables were still there, taking up many TBs of space.

My proposed solution is for Alteryx's In-DB tools to drop any "temporary" tables they have created when a run ends, regardless of whether the entire workflow finished successfully.
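The proposed behavior is essentially a `try`/`finally` around the run: record every intermediate table as it is created, and drop those tables in the `finally` block so cleanup happens on success and on failure alike. The sketch below assumes Python's `sqlite3` as a stand-in database and hypothetical, trusted table names; a failing query against a missing table simulates the database killing a query.

```python
import sqlite3


def run_with_cleanup(conn, steps):
    """Materialize each (table_name, select_sql) step as a permanent table,
    then drop every table this run created, whether it succeeded or failed.

    Table names are assumed to be trusted, tool-generated identifiers.
    """
    created = []
    try:
        for name, sql in steps:
            conn.execute(f"CREATE TABLE {name} AS {sql}")
            created.append(name)
        return conn.execute(f"SELECT * FROM {created[-1]}").fetchall()
    finally:
        # Runs after a normal return and after any exception.
        for name in reversed(created):
            conn.execute(f"DROP TABLE IF EXISTS {name}")


def table_names(conn):
    return {row[0] for row in conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table'")}


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE src (x INTEGER)")
conn.executemany("INSERT INTO src VALUES (?)", [(1,), (2,)])

result = run_with_cleanup(conn, [
    ("tmp_a", "SELECT x FROM src"),
    ("tmp_b", "SELECT x * 2 AS y FROM tmp_a"),
])
tables_after_success = table_names(conn)  # only 'src' remains

try:
    # The second step fails, simulating a query killed by the database.
    run_with_cleanup(conn, [
        ("tmp_a", "SELECT x FROM src"),
        ("tmp_c", "SELECT * FROM missing_table"),
    ])
    failed = False
except sqlite3.OperationalError:
    failed = True
tables_after_failure = table_names(conn)  # 'tmp_a' was still dropped
```

The error still propagates to the caller, so the run is reported as failed, but no intermediate tables are left behind.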

Thanks,

Ryan

- Category Connectors

- Category In Database

- Data Connectors

- Engine
