Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by listing the image dimension constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

> at the very least, by allowing the tool to use black-and-white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


When passing a data connection to the Dynamic Input Tool as a string and using the 'Change Entire File Path' option, the password parameter of the connection string is not encrypted and is displayed in the metadata source information.

We have since changed our macro that was using this method, but wanted to raise awareness of this situation. I suggest that the same procedure used to encrypt the password in all other connection methods be called if the workflow is configured to pass a password through the input as a string.
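As a rough illustration of the redaction being asked for, here is a minimal Python sketch that masks the password value in a connection string before it is displayed. It is not Alteryx's encryption routine; the helper name `mask_password` and the `PWD=`/`Password=` key names are assumptions made for the example.

```python
import re

# Matches a PWD=... or Password=... pair up to the next ';' or '|'.
# The key names are an assumption; real drivers may use other spellings.
_PWD_PATTERN = re.compile(r"\b(pwd|password)=([^;|]*)", re.IGNORECASE)


def mask_password(connection_string: str, placeholder: str = "****") -> str:
    """Return the connection string with any password value replaced."""
    return _PWD_PATTERN.sub(
        lambda match: f"{match.group(1)}={placeholder}", connection_string
    )


if __name__ == "__main__":
    # Hypothetical connection string, for illustration only.
    print(mask_password("odbc:DSN=ExampleDsn;UID=user;PWD=secret"))
```

Masking only hides the value in displayed metadata; it does not replace proper encryption of the stored connection.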

- API SDK

- Category Developer

Hi All,

This is a fairly straightforward request. I'd like to be able to pass through interface tool values to the workflow events the same way I would pass it through to a tool in the workflow (%Question.<tool name>%). One use-case for this is that we are calling a workflow and passing in an ID, and if this workflow fails, I'd like to trigger an event that will call back to the application and say this specific workflow for this ID failed.

The temporary solution is to have the workflow write to a temp file and have the event reference that temp file, but this is clunky and risky if there are parallel runs occurring.

Best,

devKev

- API SDK

- Category Developer

- Category Interface

- Desktop Experience

Same button should work with tool container 'disable' and/or collapse.

- API SDK

- Category Developer

To track the problem down, I had to use the Sample tool to grab a number of records and see whether they would run through the Tile tool. I had to keep skipping and selecting the first N records until I narrowed the problem down to 20 records. As it turned out, all values were 0 in a specific group. I found a workaround by pulling out, per group, all records with a value of 0 and having them bypass the Tile tool. Instead of that, could you add an exception handler that reports which RecNo the tool crashed on?

Can you also add an option to use 1, 2, or 3 standard deviations in addition to Smart Tile? This way all my groups will be uniform.
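The manual search described above can be sketched outside Designer. The following Python is only an illustration of the check, assuming records are dictionaries; `find_all_zero_groups` and the field names are hypothetical, and nothing here reflects how the Tile tool works internally.

```python
from collections import defaultdict


def find_all_zero_groups(records, group_key, value_key):
    """Return {group: [record numbers]} for groups whose values are all 0.

    Record numbers are 1-based positions in the input, similar to a RecNo.
    """
    by_group = defaultdict(list)
    for rec_no, rec in enumerate(records, start=1):
        by_group[rec[group_key]].append((rec_no, rec[value_key]))
    return {
        group: [rec_no for rec_no, _ in rows]
        for group, rows in by_group.items()
        if all(value == 0 for _, value in rows)
    }


if __name__ == "__main__":
    sample = [
        {"group": "A", "value": 0},
        {"group": "A", "value": 0},
        {"group": "B", "value": 1},
        {"group": "B", "value": 0},
    ]
    # Only group A consists entirely of zeros.
    print(find_all_zero_groups(sample, "group", "value"))
```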

- API SDK

- Category Developer

- API SDK

- Category Developer

Implement a process to have looping in the workflow without resorting to Macros. Although macros do, generally, solve the issue, I find them confusing and non-intuitive.

I would suggest looping through the use of two new tools: A StartLoop and EndLoop tool.

The start loop would have two (or more) input anchors. One anchor would be for the initial input and the other(s) for additional iterative inputs. The start loop would hold all iterative inputs until the original inputs have passed the gate and then resubmit them in order returned to the start loop.

The end loop would have three output anchors. One anchor would be for data exiting the loop upon reaching the exit condition. Another anchor would be for the iterative (return) data. Note that transformations can be performed on the data BEFORE it re-enters the loop. The third would be an "overloop" exit anchor. This would be for any data that failed to meet the exit condition within the (configurable) maximum iteration expression. The data from the overloop anchor could be dealt with as required by the business rules for the unsatisfied data after being output from the EndLoop tool.

The primary configurations would be on the EndLoop tool, where you would indicate the exit condition and the maximum iteration expression. The tool would also create an iteration counter field. As part of the configuration you could have a check box to "retain iteration count field on exit". If checked, the field would be maintained. If not checked, the field would be dropped for the data as it exits the loop.

This would make looping a bit more intuitive, and it would be graphically self-documenting as well. Worth a mention at least.
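To make the proposed semantics concrete, here is a minimal Python sketch of the StartLoop/EndLoop behaviour described above. It is one reading of the idea, not an existing Alteryx feature; `run_loop`, `step`, and `exit_condition` are hypothetical names, and the maximum iteration expression is simplified to a fixed integer.

```python
def run_loop(records, step, exit_condition, max_iterations,
             retain_iteration_count=False):
    """Simulate the proposed loop; return (exit_output, overloop_output).

    step            -- the transformation between StartLoop and EndLoop
    exit_condition  -- checked at EndLoop after every pass
    max_iterations  -- records still failing after this many passes go
                       to the "overloop" output
    """
    done, overloop = [], []
    pending = [(rec, 0) for rec in records]
    while pending:
        next_pending = []
        for rec, iteration in pending:
            rec = step(rec)
            iteration += 1
            if exit_condition(rec):
                done.append((rec, iteration))
            elif iteration >= max_iterations:
                overloop.append((rec, iteration))
            else:
                next_pending.append((rec, iteration))  # iterative anchor
        pending = next_pending
    if retain_iteration_count:
        return done, overloop
    # Drop the iteration counter field on exit.
    return [rec for rec, _ in done], [rec for rec, _ in overloop]


if __name__ == "__main__":
    exited, unsatisfied = run_loop(
        [1, 5, 100],
        step=lambda x: x * 2,
        exit_condition=lambda x: x >= 16,
        max_iterations=3,
    )
    print(exited, unsatisfied)
```

In this sketch every record carries its own iteration counter, which corresponds to the "retain iteration count field on exit" check box when `retain_iteration_count` is true.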

- API SDK

- Category Developer

- Category Developer

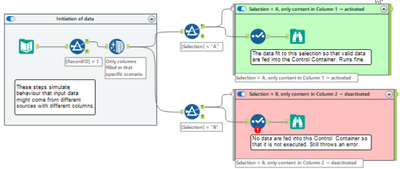

Sometimes, Control Containers produce error messages even if they are deactivated by feeding an empty table into their input connection.

(Note that this is a made-up example of something that can happen if input tables come from different sources and have different columns, so that they need separate treatment.)

According to the product team, this is expected behaviour since a selection does not allow zero columns selected. This might be true (which I doubt a bit), but it is at least counter-intuitive. If this behaviour cannot be avoided in total, I have a proposal which would improve the user experience without changing the entire workflow validation logic.

(The support engineer understands the point and has raised a defect.)

Instead of writing messages inside Control Containers directly to the log output (on screen, in logfile) and to mark the workflow as erroneous, I propose to introduce a message (message, warning, error) stack for tools inside Control Containers:

- When the configuration validation is executed:

  - Messages (messages, warnings, errors) produced outside of Control Containers are output to the screen log and to the log files (as today).

  - Messages (messages, warnings, errors) produced inside of Control Containers are not yet output but stored in a message stack.

- At the moment when it is decided whether a Control Container is activated or deactivated:

  - If the Control Container is activated: Write the previously stored message stack for this Control Container to the screen and to the log output, and increase error and warning counts accordingly.

  - If the Control Container is deactivated: Delete the message stack for this Control Container (without reporting anything to the log and without increasing error and warning counts).

This would result in a different sequence of messages than today (because everything inside activated Control Containers would be reported later than today). Since there is no logical order of messages anyway, this would not matter. And it would avoid the apparently illogical case that deactivated Control Containers produce errors.
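The proposal above can be sketched in a few lines of Python. This is only a model of the suggested behaviour, not Alteryx's validation engine; the `WorkflowLog` class, its method names, and the container identifiers are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Message:
    level: str  # "message", "warning", or "error"
    text: str


@dataclass
class WorkflowLog:
    output: list = field(default_factory=list)
    error_count: int = 0
    warning_count: int = 0
    deferred: dict = field(default_factory=dict)  # container id -> stack

    def report(self, msg, container_id=None):
        """Log immediately, or defer if produced inside a Control Container."""
        if container_id is None:
            self._emit(msg)
        else:
            self.deferred.setdefault(container_id, []).append(msg)

    def resolve_container(self, container_id, activated):
        """Flush the container's stack if activated, otherwise discard it."""
        stack = self.deferred.pop(container_id, [])
        if activated:
            for msg in stack:
                self._emit(msg)

    def _emit(self, msg):
        self.output.append(msg)
        if msg.level == "error":
            self.error_count += 1
        elif msg.level == "warning":
            self.warning_count += 1


if __name__ == "__main__":
    log = WorkflowLog()
    log.report(Message("error", "no fields selected"), container_id="cc1")
    log.resolve_container("cc1", activated=False)
    print(log.error_count)  # the deactivated container contributes nothing
```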

- Category Developer

- Enhancement

Hi there,

When creating a database connection - Alteryx's default behaviour is to create an ODBC DSN-linked connection.

However, DSN-linked connections do not work in a large server environment, because administrators would have to create these DSNs on every worker node and on every disaster recovery node, and update them all every time a canvas changes.

They are also not fully safe, because part of the configuration of your canvas is held in the DSN, so you cannot rely solely on the code that's under version control.

So:

Could we add a feature to Alteryx Designer that allows a user to expand a DSN into a fully declared connection string?

In other words - if the connection string is listed as

- odbc:DSN=DSNSnowFlakeTest;UID=Username;PWD=__EncPwd1__|||NEWTESTDB.PUBLIC.MYTESTTABLE

Then offer the user the ability to expand this out by interrogating the ODBC Connection manager to instead have the fully described connection string like this:

odbc:DRIVER={SnowflakeDSIIDriver};UID=Username;pwd=__EncPwd1__;authenticator=Snowflake;WAREHOUSE=compute_wh;SERVER=xnb27844.us-east-1.snowflakecomputing.com;SCHEMA=PUBLIC;DATABASE=NewTestDB;Staging=local;Method=user

NOTE: This is exactly what users need to do manually today anyway to get to a DSN-less connection string: they have to create a file DSN to figure out all the attributes (by opening it up in Notepad) and then paste these into the connection string manually.
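The manual step above could in principle be scripted. Here is a minimal Python sketch that turns the text of a file DSN into a DSN-less connection string. It assumes the file DSN is an INI-style file with an `[ODBC]` section and a `DRIVER` entry; the function name and the sample attribute values are hypothetical, and the password still has to be supplied separately.

```python
import configparser


def file_dsn_to_connection_string(dsn_text: str, prefix: str = "odbc:") -> str:
    """Build a DSN-less connection string from the text of a file DSN."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # keep the attribute names' original casing
    parser.read_string(dsn_text)
    attributes = dict(parser["ODBC"])
    driver = attributes.pop("DRIVER")  # assumes the key is spelled DRIVER
    parts = [f"DRIVER={{{driver}}}"]
    parts += [f"{key}={value}" for key, value in attributes.items()]
    return prefix + ";".join(parts)


if __name__ == "__main__":
    # Illustrative file DSN contents, not taken from a real system.
    example = (
        "[ODBC]\n"
        "DRIVER=SnowflakeDSIIDriver\n"
        "UID=Username\n"
        "SERVER=example.snowflakecomputing.com\n"
    )
    print(file_dsn_to_connection_string(example))
```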

Thanks all

Sean

- Admin Settings

- Category Developer

- Enhancement

- User Settings

The idea behind password masking is as follows. The Download tool, from the Developer tab, is used to download files from a given site. Take a mainframe as an example: I have a scenario where the Alteryx workflow should connect to the mainframe FTP server and download a file that is used for downstream transformation. For the download, I read the username and password from a database table (to reduce manual intervention and prevent errors).

When the username and password are passed as parameters to the Download tool macro (a custom macro that accepts the username, password, and filename dynamically), the workflow shows them in the Results window, because they are treated as output data from the Input tool. I want that password field to be masked, so that it remains unexposed whenever the workflow is shared with another user.

I know a field can be masked with the "MD5 HASH" formula, but that only helps with masking data in a dataset, not a password: the hashed value is a new string and no longer a valid password.

This feature would be really beneficial to developers who use the Download tool often. A new tool or a custom macro embedding this feature would be great for users who need masking functionality.

- API SDK

- Category Developer

Hi!

Can you please add a tool that stops the flow? And I don't mean the "Cancel running workflow on error" option.

Today you can stop the flow on error with the Message tool, but errors are not the only situations in which you want to stop.

E.g., I can use the 'Block Until Done' tool to check whether there are files in a specific folder, and if not, I want to stop the flow from reading any more inputs.

Today I have to build workarounds, so an easy stop-if tool would be much appreciated!

This could be an option on the message tool (a check box, like the Transient option).

Cheers,

EJ

- API SDK

- Category Developer

Hello,

Please remove the hard limit of 5 output files from the Python tool, if possible.

It would be very helpful for the user to be able to forward any number of tables in any format, each with different columns.

Best regards,

- API SDK

- Category Developer

In many of our tools, before processing any file, we create a backup and move it to a backup location with a datetime stamp.

Can we have an option such as "CreateBackup", with a timestamp, in the Input and Output tools?
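For reference, the backup step described above looks roughly like this when scripted by hand. It is a minimal Python sketch, not a proposed implementation of the option; the function name `create_backup` and the `name_YYYYMMDD_HHMMSS.ext` naming scheme are assumptions.

```python
import shutil
from datetime import datetime
from pathlib import Path


def create_backup(source, backup_dir, now=None):
    """Copy `source` into `backup_dir` with a datetime stamp in the name."""
    source = Path(source)
    backup_dir = Path(backup_dir)
    backup_dir.mkdir(parents=True, exist_ok=True)
    # `now` can be injected for testing; defaults to the current time.
    stamp = (now or datetime.now()).strftime("%Y%m%d_%H%M%S")
    target = backup_dir / f"{source.stem}_{stamp}{source.suffix}"
    shutil.copy2(source, target)  # copy2 also preserves file metadata
    return target
```

Calling `create_backup("input.csv", "backup")` before a tool reads or overwrites `input.csv` would leave a timestamped copy in the `backup` folder.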

- API SDK

- Category Developer

- Category Input Output

- Category Preparation

Currently there is a 1MB limit on the amount of data that can be passed into the Dynamic Input tool. I often hit this limit and it is infuriating. If it were raised to 5MB, that would solve a lot of my issues, but 50MB would be AMAZING.

Thoughts?

-Nick

- API SDK

- Category Developer

I'm stealing this idea from Tableau's number formatting, it's a timesaver.

In the DateTime tool if I've initially selected a value besides Custom in the "Select the format..." list then when I click Custom rather than having the Custom textbox be blank I'd like to have it automatically populated with whatever formatting string I just selected. Here's an example screenshot:

- API SDK

- Category Developer

- Category Parse

- Category Time Series

Hello gurus -

Pretty much every coding framework supports this. If we really want Alteryx to embrace no-code, we've got to have some ability to control commit / rollbacks across transactions. As it stands currently, it is pretty easy to write out parent records, fail to be able to write out children, and wind up with a database state that makes the end users very sad.
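For readers less familiar with the pattern, here is a minimal sketch of what commit/rollback control buys you, using Python's built-in `sqlite3` module as a stand-in database. The table names and the `write_parent_and_children` helper are hypothetical and unrelated to any Alteryx tool.

```python
import sqlite3


def make_demo_db():
    """Create an in-memory database with hypothetical parent/child tables."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE parent (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
    conn.execute("CREATE TABLE child (parent_id INTEGER NOT NULL, name TEXT NOT NULL)")
    conn.commit()
    return conn


def write_parent_and_children(conn, parent_name, child_names):
    """Write a parent and its children atomically; return True on success."""
    try:
        with conn:  # commits on success, rolls back on any exception
            cursor = conn.execute(
                "INSERT INTO parent (name) VALUES (?)", (parent_name,)
            )
            parent_id = cursor.lastrowid
            conn.executemany(
                "INSERT INTO child (parent_id, name) VALUES (?, ?)",
                [(parent_id, name) for name in child_names],
            )
        return True
    except sqlite3.Error:
        return False
```

If any child insert fails, the parent row written in the same transaction is rolled back as well, so the database never holds a parent without its children.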

Thanks!

brian

- API SDK

- Category Connectors

- Category Developer

- Category Input Output

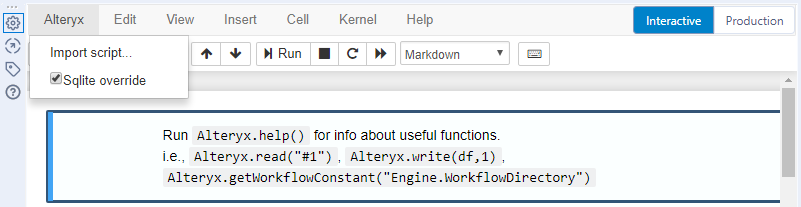

When reading and writing large data frames to/from a Python script in Alteryx, it seems that there are limitations to the SQLite component of the tool. Given that this selection is recommended only when the user is having issues in the Python tool, why is the option selected by default? A colleague and I spent a couple of hours trying to work through an issue with importing a data frame larger than 1000x1000, and once we found this option (SQLite override) and unchecked it, the data was written back to Alteryx without any problems.

Hint provided by the tool, "This changes the intermediate data format between Alteryx and Jupyter from yxdb to SQLite. Use only if running into issues. See help for more details."

Error message provided by the tool

After unchecking the option the workflow ran without any errors.

Recommendation: the Python tool should default to having SQLite override unchecked.

- API SDK

- Category Developer

- Tool Improvement

Hi - I think it would be great to only have to open one debug window and, when I add to my workflow, have the debug window update automatically to include the new parts of my workflow.

As it is now, I believe I have to open a new debug window whenever I have added new components to my workflow.

- API SDK

- Category Developer

Hello All,

We are new to Alteryx, and we can see that the supported data sources from IBM are the following:

- IBM DB2

- IBM Netezza/Pure Data Systems

- IBM SPSS

How about adding IBM Sterling to this?

We would like Alteryx to support connections to IBM Sterling OMS, which would help us meet our business requirements.

Can anyone post some suggestions on this? How can we connect to Sterling?

Thanks,

Praveen C

- API SDK

- Category Connectors

- Category Developer

- Category In Database

Idea:

As a method of deploying preprocessing and ML models it would be awesome to be able to convert a workflow to java...

Rationale:

Models need to be deployed into complex event processing or decision systems. Even for SAS, there is a need to implement the data step algorithms and procs so that they run in the JVM.

Quickwin:

It is possible to convert a workflow into a PMML file and then use JMML package to convert that to Java. Yet the full workflow with all preprocessing alternatives and a series of ML methods may not be captured fully.

Competitor example:

For SAS case here is a similar solution: http://www.dullesresearch.com/carolina-features/

- API SDK

- Category Developer
