Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> at least, allow the tool to use black & white images (I wanted to test it on the MNIST, but the tool tells me that it necessarily needs RGB images) ?
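Until grayscale input is supported, one possible workaround is to replicate the single gray channel three times so that each pixel becomes an (R, G, B) triple. The sketch below is plain Python, not an Alteryx API; the function name `gray_to_rgb` and the nested-list image representation are assumptions made for illustration.

```python
from typing import List, Tuple

GrayImage = List[List[int]]                    # rows of 0-255 intensities
RgbImage = List[List[Tuple[int, int, int]]]    # rows of (R, G, B) triples


def gray_to_rgb(image: GrayImage) -> RgbImage:
    """Copy each gray intensity into the R, G and B channels."""
    return [[(value, value, value) for value in row] for row in image]


# A tiny 2x2 "image" standing in for a 28x28 MNIST digit (hypothetical data).
sample = [[0, 128], [255, 64]]
rgb = gray_to_rgb(sample)
```

The converted image carries no extra information, so a model trained on it should behave as it would on the original grayscale data, at three times the storage cost.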

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool, it is certainly perfectible, but very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible thanks to image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


Currently, when creating scatter graphs, you are unable to order the plots based on a sub-group of the data (i.e., the legend). It would be nice to have the ability to pick which part of the legend is displayed first, above the other data plots. Could we also have the option to take the 3D element off the scatter graph plots?

Thanks,

Oliver

It would be great to get a random x number of records or x % of records for every grouped field in the sample tool.

Right now, the sample tool is lacking the random % feature and the random % tool is lacking the group by feature.
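To make the requested behavior concrete, here is a minimal Python sketch of a grouped random sample that accepts either a fixed count or a percentage. The function name `sample_per_group`, its parameters, and the choice to round percentages up are all assumptions for illustration, not a description of how the Sample tool works.

```python
import math
import random
from collections import defaultdict
from typing import Any, Callable, Dict, Hashable, List, Optional

Record = Dict[str, Any]


def sample_per_group(
    records: List[Record],
    group_key: Callable[[Record], Hashable],
    n: Optional[int] = None,
    pct: Optional[float] = None,
    seed: Optional[int] = None,
) -> List[Record]:
    """Return a random sample of n records, or pct percent, from every group."""
    if (n is None) == (pct is None):
        raise ValueError("Provide exactly one of n or pct")
    rng = random.Random(seed)
    groups: Dict[Hashable, List[Record]] = defaultdict(list)
    for record in records:
        groups[group_key(record)].append(record)

    sampled: List[Record] = []
    for members in groups.values():
        if n is not None:
            size = min(n, len(members))
        else:
            # Round up so that small groups still contribute a record.
            size = min(len(members), math.ceil(len(members) * pct / 100))
        sampled.extend(rng.sample(members, size))
    return sampled


# Hypothetical data: two regions with ten records each.
rows = [{"region": r, "id": i} for r in ("East", "West") for i in range(10)]
two_each = sample_per_group(rows, lambda r: r["region"], n=2, seed=1)
thirty_pct = sample_per_group(rows, lambda r: r["region"], pct=30, seed=1)
```

Passing a seed keeps the sample reproducible between runs, which matters when a workflow is re-executed for auditing.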

Just like the File Geodatabase, Esri has an enterprise version for servers, in our case, Oracle. We would like the option to output to an Oracle GDB like we can to a normal Oracle DB. This would greatly assist in our process flows.

Please extend the Workflow Dependencies functionality to include the dependencies of macros used in the workflow too. Currently, macros are simply marked as dependencies themselves, but their underlying dependencies (e.g. data sources) are not included.

We have a large ETL process developed with Alteryx that applies several layers of custom and complex macros and several data sources referenced using aliases. Currently the process is deployed locally (non-server) and executed ad-hoc, but will be moved to the server platform at some point.

Recently I had to prep an employee for running the process. This requires creating aliases and associated connections and making sure that access to needed network locations is in place (storing macros, temp files, etc.). Hence I needed to identify all aliases and components/macros used. As everything is wrapped nicely by a single workflow, I hoped that the workflow dependencies functionality would cover dependencies in the macro nodes within, but unfortunately it didn't and I had to look through the dependencies of 10-15 macros.
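The recursive lookup described above can be sketched in a few lines of Python. The inventory below is hypothetical: the file names, aliases, and the two dictionaries are stand-ins for whatever Designer would read from each workflow and macro, not an actual Alteryx data structure.

```python
from typing import Dict, List, Set

# Hypothetical inventory: each workflow or macro maps to the macros it uses
# and to the data-source aliases or locations it references directly.
MACROS_USED: Dict[str, List[str]] = {
    "etl_main.yxmd": ["clean.yxmc", "load.yxmc"],
    "clean.yxmc": ["lookup.yxmc"],
    "load.yxmc": ["lookup.yxmc"],
    "lookup.yxmc": [],
}
DIRECT_DEPENDENCIES: Dict[str, Set[str]] = {
    "etl_main.yxmd": {"alias:Warehouse"},
    "clean.yxmc": {"alias:Staging"},
    "load.yxmc": {"alias:Warehouse", r"\\share\temp"},
    "lookup.yxmc": {"alias:Reference"},
}


def all_dependencies(root: str) -> Set[str]:
    """Collect dependencies of root and of every macro nested beneath it."""
    found: Set[str] = set()
    visited: Set[str] = set()
    stack = [root]
    while stack:
        current = stack.pop()
        if current in visited:      # a macro shared by several parents is read once
            continue
        visited.add(current)
        found |= DIRECT_DEPENDENCIES.get(current, set())
        stack.extend(MACROS_USED.get(current, []))
    return found


everything = all_dependencies("etl_main.yxmd")
```

With a traversal like this, a single call on the top-level workflow would list every alias and network location needed, instead of opening 10-15 macros by hand.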

I have no idea how many people are using the .Net API to build custom tools, but found an issue with its assembly scanning.

It doesn't pick up classes that implement IPlugin via an abstract base class. This can be worked around by moving the interface onto the concrete implementation, but I think it should pick up any concrete class implementing IPlugIn, regardless of whether the interface is declared on the class itself or on a base class.
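The scanning rule being asked for can be illustrated with a Python analogy (this is not the .NET API, and the class names are hypothetical): accept every class that satisfies the interface and is not abstract, no matter where in the hierarchy the interface was introduced.

```python
import inspect
from abc import ABC, abstractmethod
from typing import List, Type


class Plugin(ABC):
    """Stand-in for a plugin interface."""

    @abstractmethod
    def name(self) -> str: ...


class BaseTool(Plugin):
    """Abstract base that carries the interface; still not instantiable."""


class ConcreteTool(BaseTool):
    def name(self) -> str:
        return "ConcreteTool"


def find_plugins(candidates: List[type]) -> List[Type[Plugin]]:
    """Keep every concrete class that implements Plugin, directly or via a base."""
    return [
        cls for cls in candidates
        if issubclass(cls, Plugin) and not inspect.isabstract(cls)
    ]


found = find_plugins([Plugin, BaseTool, ConcreteTool, int])
```

In .NET the same test would typically be written with `typeof(IPlugin).IsAssignableFrom(type) && !type.IsAbstract`, which also matches interfaces inherited from a base class.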

I can see that the Venn diagram is very nice for a new user to understand the Join tool (which is a super-great tool by the way). But I would like to be able to close up the Venn diagram to give more room to see the variables listed below.

Thanks!

Susan

Under Options/Restore Defaults, it would be nice if the canvas could be reset (I sometimes lose windows) while the favorites are left intact.

Thanks!

Susan

I have a very large geospatial point dataset (~950GB). When I do a spatial match on this dataset to a small polygon, the entire large geospatial point dataset has to be read into the tool so that the geospatial query can be performed. I suspect that the geospatial query could be significantly sped up if the geospatial data could be indexed (referenced) to a grid (or multiple grids), so that the geoquery could identify the general area of overlap, then extract the data for just that area before performing the precise geoquery. I believe Oracle used (uses) this method of storing and referencing geospatial data.
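The two-stage idea (a coarse grid lookup followed by a precise test) can be sketched as follows. This is a simplified in-memory illustration in Python, assuming planar coordinates and a uniform grid; the function names and the cell size are hypothetical, and a real implementation would persist the index rather than rebuild it.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

Point = Tuple[float, float]
Cell = Tuple[int, int]


def build_grid(points: List[Point], cell_size: float) -> Dict[Cell, List[Point]]:
    """Bucket every point into the grid cell that contains it."""
    grid: Dict[Cell, List[Point]] = defaultdict(list)
    for x, y in points:
        grid[(math.floor(x / cell_size), math.floor(y / cell_size))].append((x, y))
    return grid


def point_in_polygon(point: Point, polygon: List[Point]) -> bool:
    """Ray-casting test: count polygon edges crossed by a ray going right."""
    x, y = point
    inside = False
    for (x1, y1), (x2, y2) in zip(polygon, polygon[1:] + polygon[:1]):
        if (y1 > y) != (y2 > y) and x < x1 + (y - y1) * (x2 - x1) / (y2 - y1):
            inside = not inside
    return inside


def spatial_match(grid: Dict[Cell, List[Point]], cell_size: float,
                  polygon: List[Point]) -> List[Point]:
    """Coarse filter by the cells under the bounding box, then the precise test."""
    xs, ys = zip(*polygon)
    hits: List[Point] = []
    for cx in range(math.floor(min(xs) / cell_size), math.floor(max(xs) / cell_size) + 1):
        for cy in range(math.floor(min(ys) / cell_size), math.floor(max(ys) / cell_size) + 1):
            hits.extend(p for p in grid.get((cx, cy), []) if point_in_polygon(p, polygon))
    return hits


# Hypothetical data: four points and a 2x2 square polygon.
points = [(0.5, 0.5), (1.5, 1.5), (50.0, 50.0), (2.5, 0.5)]
square = [(0.0, 0.0), (2.0, 0.0), (2.0, 2.0), (0.0, 2.0)]
grid = build_grid(points, cell_size=1.0)
matches = spatial_match(grid, 1.0, square)
```

Only the cells under the polygon's bounding box are read, so the point at (50, 50) is never examined by the precise test.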

Hi,

I was working on a project which uses the "Download" tool. I needed to measure the response time precisely for each record, so I set up a "timestamp" value using the DateTimeNow() function before the actual download. After the download was complete, I tried to measure the response time by using the DateTimeDiff() function. However, using this method, I was not able to get a precise (up to a millisecond) performance reading, since the DateTime format gets rounded to a second.

It would be great to have a way of precisely measuring the time taken for each record to go through a tool or a set of tools, and having that value be part of the output file.
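For comparison, this is roughly what per-record timing with sub-second resolution looks like outside Designer. The sketch is plain Python; `timed_per_record` and `fake_download` are hypothetical names, and the sleep merely stands in for a real request.

```python
import time
from typing import Any, Callable, Dict, List

Record = Dict[str, Any]


def timed_per_record(records: List[Record],
                     step: Callable[[Record], Any]) -> List[Record]:
    """Run step on each record and attach the elapsed time in milliseconds."""
    output: List[Record] = []
    for record in records:
        start = time.perf_counter()          # monotonic, high-resolution clock
        result = step(record)
        elapsed_ms = (time.perf_counter() - start) * 1000.0
        output.append({**record, "result": result, "elapsed_ms": elapsed_ms})
    return output


def fake_download(record: Record) -> str:
    """Hypothetical stand-in for a per-record HTTP request."""
    time.sleep(0.005)
    return "ok"


timed = timed_per_record([{"url": "a"}, {"url": "b"}], fake_download)
```

Each output record keeps its original fields and gains an `elapsed_ms` column, which is the shape of result the idea asks for.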

This is not a terribly important thing, but in the formula tool, the nitty gritty details of the Expression almost never fit into the annotation space. It would be nice if the annotation by default just contained the names of the variables being derived. So, e.g. if I'm deriving "CalcVar1", "CalcVar2", "CalcVar3" and so forth, the default annotation would just be:

This gives a much cleaner (by default) canvas. The same concept applies to the MultiRow and MultiField Formula tools, perhaps others. Just a thought and obviously not very important. Thanks.

I see the mention of VR but has anyone talked about touch screen capabilities with Alteryx? Would make even the tough projects more fun!

I find the concept of Batch and/or Iterative macros, when done specifically for the simple purpose of iteration, to be a fair bit of overhead. If we could extract the fundamental qualities of a loop and get that into an "Iteration Tool," it could become a well-used tool from the palette.

Implementation Ideas:

- Assume that the iteration is over the rows of a given input data set.

- For the "body of the loop" allow multiple expressions, each of which iteratively assigns the i'th position of a given variable (which could be either existing or derived, just like the Formula tool and its expression).

- Allow referencing of the loop index variable from within expressions

An example problem this could solve is from: http://community.alteryx.com/t5/Data-Preparation-Blending/Looping-and-dynamically-changing-output/m-.... As discussed therein, the concept of "row dependent iteration" makes this difficult to solve with standard tools.

If the input data set from that example were sent into the proposed Iteration Tool... it would automatically loop over the dataset rows; and three expressions could be supplied in the Tool configuration to solve the problem:

VarE: IF [i] > 1 THEN VarF[i-1] + VarG[i-1] ELSE VarE ENDIF

VarF: VarA + VarB

VarG: VarC + VarD

For implementation purposes, this would be logically equivalent to:

VarE[i]: IF [i] > 1 THEN VarF[i-1] + VarG[i-1] ELSE VarE[i] ENDIF

VarF[i]: VarA[i] + VarB[i]

VarG[i]: VarC[i] + VarD[i]

(so, basically, the i'th row is assumed unless otherwise provided in the expression syntax).
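To check that the semantics are well defined, the three expressions can be written as an ordinary loop. This Python sketch assumes the expressions are evaluated in the order listed and that row i-1 has been fully computed before row i; the sample values are invented.

```python
from typing import Dict, List

Row = Dict[str, float]


def iterate_rows(rows: List[Row]) -> List[Row]:
    """Apply the three expressions row by row, with access to row i-1."""
    out: List[Row] = []
    for i, row in enumerate(rows, start=1):      # 1-based index, as in the post
        new = dict(row)
        if i > 1:
            prev = out[i - 2]                    # the already-computed row i-1
            new["VarE"] = prev["VarF"] + prev["VarG"]
        new["VarF"] = new["VarA"] + new["VarB"]
        new["VarG"] = new["VarC"] + new["VarD"]
        out.append(new)
    return out


# Hypothetical input: two rows with VarE initialised to 0.
data = [
    {"VarA": 1, "VarB": 2, "VarC": 3, "VarD": 4, "VarE": 0},
    {"VarA": 5, "VarB": 6, "VarC": 7, "VarD": 8, "VarE": 0},
]
result = iterate_rows(data)
```

For the first row VarE is left unchanged; for the second it becomes VarF + VarG of the first row, that is 3 + 7 = 10.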

I hope this isn't too outlandish - I've tried to think through how this could be accomplished (1) as a tool that is not too fiendishly difficult for Alteryx to implement and (2) which would also be easy for us, the end users, to utilize. Thanks!

Our Alteryx users query a number of different data sources. Some of these include external servers outside our control.

To avoid any issues regarding locking, our baseline design uses the Read Uncommitted function of the Data Input tool, probably 95% of the time or more.

It would be very beneficial for our organization if there was a way for us to set this option to be checked by default, so that it was one less thing users needed to remember when configuring a quick data pull.

There is a need when visualizing in-Database workflows to be able to visualize sorted data. This sorting could be done 1 of 2 ways: In a browse tool, or as a stand-alone Sort tool. Either would address the need. Without such a tool being present, the only way to sort the data is to "Data Stream Out" and then visualize the data in Alteryx. However, this process violates the premise of the usefulness of the in-DB toolkit, which is to keep your data in-DB and process using the DB engine. Streaming out big data in order to add a sort is not efficient.

Granted, the in-DB processing doesn't care whether data is sorted or not. However, when attempting to find extreme values after an aggregation, or when trying to identify something as simple as whether null values are present in a field, then a sort becomes extremely useful, and a necessary tool for human consumption of data (regardless of the database's processing needs).

Thanks very much for hearing my idea!

DELETE FROM Source_Data WHERE ID IN

(SELECT ID FROM My_Temp_Table WHERE FLAG = 'Y')

....

Essentially, I want to modify a DB table by either updating or deleting rows. I can't delete all of the data. My workaround will be to insert the keys that I want to delete into a table and then try to use an Input/Output tool with SQL that performs the delete. Any other suggestions are welcome, but a tool is best.
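The workaround can be tried end to end against an in-memory SQLite database. This is only a sketch of the key-table pattern using Python's `sqlite3` module; the `Amount` column and the sample rows are invented, and a production database may need different transaction handling.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE Source_Data (ID INTEGER PRIMARY KEY, Amount REAL);
    CREATE TABLE My_Temp_Table (ID INTEGER, FLAG TEXT);
    INSERT INTO Source_Data VALUES (1, 10.0), (2, 20.0), (3, 30.0);
    INSERT INTO My_Temp_Table VALUES (1, 'Y'), (2, 'N'), (3, 'Y');
    """
)

# Delete only the rows whose keys are flagged in the staging table.
with conn:  # commits on success, rolls back on error
    deleted = conn.execute(
        "DELETE FROM Source_Data "
        "WHERE ID IN (SELECT ID FROM My_Temp_Table WHERE FLAG = 'Y')"
    ).rowcount

remaining = [row[0] for row in conn.execute("SELECT ID FROM Source_Data ORDER BY ID")]
```

Rows 1 and 3 are flagged and removed, while row 2 stays in place, so the rest of the table is untouched.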

Thanks,

Mark

When moving external data into the database, the underlying SQL looks like:

CREATE GLOBAL TEMPORARY TABLE "AYX16020836880b41e08246b59ee8c"

...

My client would like to add a prefix to the table as:

CREATE GLOBAL TEMPORARY TABLE MMMM999_DM_USER."AYX16020836880b41e08246b59ee8c"

where MMMM999_DM_USER is supplied in the configuration.

A service account automatically sets the current session to something like MMMM999 (alter session set current_schema=MMMM999;)
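The requested behavior amounts to an optional schema qualifier in front of the generated table name. A minimal Python sketch, assuming a hypothetical `schema` configuration value and a helper named `temp_table_header`:

```python
def temp_table_header(table_name: str, schema: str = "") -> str:
    """Build the CREATE statement header, prefixing the schema when one is set."""
    quoted = f'"{table_name}"'
    target = f"{schema}.{quoted}" if schema else quoted
    return f"CREATE GLOBAL TEMPORARY TABLE {target}"


default_header = temp_table_header("AYX16020836880b41e08246b59ee8c")
prefixed_header = temp_table_header("AYX16020836880b41e08246b59ee8c", "MMMM999_DM_USER")
```

Leaving the schema empty reproduces the current statement, so existing workflows would not change.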

I've seen several posts and questions concerning NULL dates. Is 09/31/2010 a valid date? I know that 02/29/2006 isn't valid and that 02/00/2006 isn't either, but I really don't like finding out about these in conversion warning messages.

I might suggest a function that returns True or False on the date check and let the user configure appropriate rules to rethink the attempted date prior to committing the field to the date data type.
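Such a check might behave like the following Python sketch, which leans on `datetime.strptime` to reject impossible calendar dates. The function name `is_valid_date` and the default MM/DD/YYYY format are assumptions for illustration.

```python
from datetime import datetime


def is_valid_date(text: str, fmt: str = "%m/%d/%Y") -> bool:
    """Return True only when text is a real calendar date in the given format."""
    try:
        datetime.strptime(text, fmt)
    except ValueError:
        return False
    return True


checks = {d: is_valid_date(d) for d in
          ("09/31/2010", "02/29/2006", "02/00/2006", "02/29/2008")}
```

Of the four sample values, only 02/29/2008 passes, because 2008 is a leap year; the user could then decide how to repair or null the rest before converting the field.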

Cheers,

Mark

If you have a complex SQL query with a number of dynamic substitutions (e.g. Update WHERE Clause, Replace a Specific String), it would be nice to be able to optionally output the SQL that is being executed. This would be particularly useful for debugging.
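As an illustration of the kind of debug output meant here, the Python sketch below applies a set of string replacements to a query template and logs the final text before it would be executed. The placeholder syntax, table name, and function name are hypothetical.

```python
import logging
from typing import Dict

logging.basicConfig(level=logging.DEBUG)
log = logging.getLogger("sql_debug")


def render_sql(template: str, replacements: Dict[str, str]) -> str:
    """Apply each string replacement, then log the final SQL text."""
    sql = template
    for placeholder, value in replacements.items():
        sql = sql.replace(placeholder, value)
    log.debug("Executing SQL: %s", sql)
    return sql


final_sql = render_sql(
    "SELECT * FROM orders WHERE region = '__REGION__' AND year = __YEAR__",
    {"__REGION__": "EMEA", "__YEAR__": "2016"},
)
```

Seeing the fully substituted statement makes it much easier to spot a replacement that did not match or a quote that went missing.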

Hello

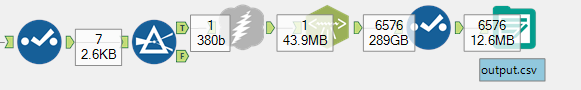

My problem: I've used the Download tool to download a 40 MB XML file. Parsing the DownloadData field containing this XML results in about 6600 records. The XML Parse tool passes the original DownloadData field to each record, resulting in quite a bit of memory usage:

Suggestion: An option in the XML-parse Tool to not pass the parsed field in its output.
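The effect of such an option can be sketched with Python's standard `xml.etree.ElementTree`. The `keep_source` flag, the function name, and the sample record are hypothetical; the point is only that each output row can omit the raw XML field it was parsed from.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List


def parse_children(record: Dict[str, str], xml_field: str, child_tag: str,
                   keep_source: bool = False) -> List[Dict[str, str]]:
    """Emit one row per child element, optionally dropping the raw XML field."""
    root = ET.fromstring(record[xml_field])
    base = {k: v for k, v in record.items() if keep_source or k != xml_field}
    rows: List[Dict[str, str]] = []
    for child in root.iter(child_tag):
        row = dict(base)
        row.update({elem.tag: (elem.text or "") for elem in child})
        rows.append(row)
    return rows


record = {
    "Url": "http://example.com/feed",
    "DownloadData": "<items><item><id>1</id></item><item><id>2</id></item></items>",
}
slim_rows = parse_children(record, "DownloadData", "item")
full_rows = parse_children(record, "DownloadData", "item", keep_source=True)
```

With roughly 6600 output records and a 40 MB source field, dropping the field avoids repeating the same 40 MB string on every row.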

Marco

As a MicroStrategy customer it would be nice if Alteryx would support output for either the .mstr file format or better direct creation and publication of the iCUBE, intelligent cube format on their server solution. This would be similar to the existing features of writing a twbx or TDE file extract and publish to Tableau server.

Conversely, if the Input connector could read from an Intelligent Cube on the MSTR iServer as a data source, that would be great as well.

Below is a link to their SOA web services documentation for pulling data into applications, which is perhaps an option.
