Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by showing the image dimension constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equal number of images),

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
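To illustrate the second point, here is a minimal sketch of what "an equal number of images for each label" could mean in practice. It is not how the Image Recognition Tool works; the function name `balanced_sample` and the (path, label) input format are assumptions made for this example.

```python
import random
from collections import defaultdict


def balanced_sample(items, seed=0):
    """Downsample every label to the size of the smallest class.

    `items` is a list of (image_path, label) pairs (hypothetical format).
    """
    by_label = defaultdict(list)
    for path, label in items:
        by_label[label].append(path)

    # Every label keeps as many images as the rarest label has.
    target = min(len(paths) for paths in by_label.values())

    rng = random.Random(seed)  # fixed seed so the split is repeatable
    balanced = []
    for label in sorted(by_label):
        for path in rng.sample(by_label[label], target):
            balanced.append((path, label))
    return balanced


images = [(f"cat_{i}.png", "cat") for i in range(5)] + [
    (f"dog_{i}.png", "dog") for i in range(2)
]
subset = balanced_sample(images)
```

Downsampling throws images away; oversampling the smaller classes would be the other obvious choice.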

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


Alteryx offers the ability to add new formulae (e.g. the Abacus addin) and new tools (e.g. the marketplace; custom macros etc) - which is a very valuable and valued way to extend the capability of the platform.

However - if you add a new function or tool that has the same name as an existing function / tool - this can lead to a confusing user experience (a namespace conflict).

Would it be possible to add capability to Alteryx to help work around this - two potential vectors are listed below:

- Check for name conflicts when loading tools or when loading Alteryx - and warn the user. e.g. "The Coalesce function in package CORE Alteryx conflicts with the same function name in XXX package - this may cause mysterious behaviours"

- Potentially allow prefixes to address a function if there are same names - e.g. CoreAlteryx.Coalesce or Abacus.Coalesce - and if there is a function used in a function tool in a way that is ambiguous (e.g. "Coalesce") then give the user a simple dialog that allows them to pick which one they meant, and then Alteryx can self-cleanup.
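Both vectors can be sketched in a few lines. This is only an illustration of the idea, not Alteryx's function loader: the registry layout (package name mapped to a list of function names), the helper names and the case-insensitive comparison are all assumptions.

```python
from collections import defaultdict


def find_name_conflicts(packages):
    """Return {function_name: [packages]} for names provided by more than one package."""
    owners = defaultdict(list)
    for package, functions in packages.items():
        for name in functions:
            owners[name.lower()].append(package)  # assumption: names are case-insensitive
    return {name: pkgs for name, pkgs in owners.items() if len(pkgs) > 1}


def resolve(name, packages):
    """Resolve 'Package.Function' or a bare 'Function' to (package, function).

    Raises LookupError when a bare name is unknown or ambiguous, which is the
    point where a dialog could ask the user which one they meant.
    """
    if "." in name:
        package, function = name.split(".", 1)
        known = {f.lower() for f in packages.get(package, [])}
        if function.lower() in known:
            return package, function
        raise LookupError(f"{name} not found")
    candidates = [
        package
        for package, functions in packages.items()
        if name.lower() in {f.lower() for f in functions}
    ]
    if len(candidates) == 1:
        return candidates[0], name
    raise LookupError(f"{name} is unknown or ambiguous: {candidates}")


# Hypothetical registry for the example in the post.
registry = {"CoreAlteryx": ["Coalesce", "Trim"], "Abacus": ["Coalesce", "Split"]}
conflicts = find_name_conflicts(registry)
```

Here `conflicts` reports that `coalesce` comes from both packages, `resolve("Abacus.Coalesce", registry)` picks one explicitly, and a bare `resolve("Coalesce", registry)` raises.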

I would really love to have a tool "Dynamic change type" or "Dynamic re-type" that works just like "Dynamic Rename".

- "Take Type from First Row of Data": By definition, all columns are of a string type initially. Sets the type of the column according to the string in the first row of data.

| Col 1 | Col 2 | Col 3 | Col 4 |
|---|---|---|---|
| Double | Int32 | V_String | Date |
| 123.456 | 17 | Hello | 2023-10-30 |
| 3.4e17 | 123 | Bye | 2024-01-01 |

- "Take Type from Right Input Metadata": Changes the types of the left input table to those of the right input.

- "Take Type from Right Input Rows": Changes the types based on a table with columns "Name" and "New Type".

| Name | New Type |
|---|---|
| Col 1 | Double |
| Col 2 | Int32 |
| Col 3 | V_String |
| Col 4 | Date |
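A rough sketch of the first and third modes, using the example data above. The converter table and function names are assumptions for illustration; a real tool would have to cover every Alteryx field type and handle conversion errors.

```python
from datetime import date

# Hypothetical mapping from Alteryx type names to Python converters.
CONVERTERS = {
    "Double": float,
    "Int32": int,
    "V_String": str,
    "Date": date.fromisoformat,
}


def retype_from_first_row(rows):
    """rows[0] holds column names, rows[1] the type names, the rest string data."""
    header, type_names, *data = rows
    converters = [CONVERTERS[t] for t in type_names]
    typed = [[convert(v) for convert, v in zip(converters, row)] for row in data]
    return header, typed


def retype_from_mapping(header, data, mapping):
    """`mapping` plays the role of the Name / New Type table; unmapped columns stay strings."""
    converters = [CONVERTERS[mapping[name]] if name in mapping else str for name in header]
    return [[convert(v) for convert, v in zip(converters, row)] for row in data]


rows = [
    ["Col 1", "Col 2", "Col 3", "Col 4"],
    ["Double", "Int32", "V_String", "Date"],
    ["123.456", "17", "Hello", "2023-10-30"],
    ["3.4e17", "123", "Bye", "2024-01-01"],
]
header, typed = retype_from_first_row(rows)
partial = retype_from_mapping(header, rows[2:], {"Col 2": "Int32"})
```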

Hi there,

When connecting to data sources using DCM - could we please add the ability to make JDBC connections?

see:

https://community.alteryx.com/t5/Engine-Works/JDBC-Connections-in-Alteryx/ba-p/968782

As mentioned in these threads - JDBC is very common in large enterprises - and in many cases is better supported by the technology teams / developer community and so is much easier to make a connection. Added to this - there are many databases (e.g. DB2) where JDBC connections are just much easier.

Please could you add JDBC connections to the DCM tooling?

Thank you

Sean

cc: @wesley-siu @_PavelP

Hello All,

I'm using the dynamic input tool for SQL requests in my Workflow (WF).

I'm using the "Replace a Specific String" option to replace elements in the SQL statement dynamically, depending on the results of previous tools, user input etc.

So the statement looks like

select * from Schema_Name_xx where invoice_number = 'invoice_number_xx'

Since Schema_Name_xx is not a valid schema in the database, the statement (= validation) won't work. It only works if I replace Schema_Name_xx with e.g. Invoice_Data_Current; the same goes for the invoice number, where invoice_number_xx is replaced by e.g. 4711.

Therefore, validation makes no sense and will never succeed: the correct schema is only inserted into the SQL statement by the "Replace a Specific String" function while the WF is running.
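To make the point concrete, here is a small sketch of the substitution. It only mimics the idea of "Replace a Specific String" with plain string replacement; the helper name `fill_template` is made up for this example and says nothing about how the Dynamic Input tool is implemented.

```python
TEMPLATE = "select * from Schema_Name_xx where invoice_number = 'invoice_number_xx'"


def fill_template(template, replacements):
    """Replace each placeholder string with its runtime value."""
    sql = template
    for placeholder, value in replacements.items():
        sql = sql.replace(placeholder, value)
    return sql


# The template itself is not valid against the database; only the filled-in
# statement is, and that exists only at run time.
runtime_sql = fill_template(
    TEMPLATE,
    {"Schema_Name_xx": "Invoice_Data_Current", "invoice_number_xx": "4711"},
)
```

Validating `TEMPLATE` at save time will always fail, while `runtime_sql` is the statement that is actually sent.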

It would be great to be able to disable it in the user settings or somewhere else in Designer; changing a config file would also be fine :-)

Please note: I'm not thinking (since I'm not allowed to anyway ;-)) about changing/disabling anything in the Alteryx Server settings.

Reason:

1. Speed: Validating a WF with SQL statements that don't work takes time (every time I save it); sometimes I even get a timeout...

2. WF error entries: Each upload with a failed validation creates an entry in the WF result list, which makes it harder to separate them from the "real" WF errors...

Thanks & Best Regards,

Thomas

Hello,

A few years ago, Alteryx had 4 releases per year, and now it's only 2 per year (in 2023, as of today, only one!)

The reasons why I would like the cadence to go back to quarterly releases:

- a quarterly cadence means less waiting to benefit from new Alteryx features, so more value

- a quarterly cadence is now an industry standard for data software

- for partners, the new situation means fewer customer upgrade opportunities, so less revenue but also fewer contacts with customers.

Best regards,

Simon

Currently when debug mode is entered in analytic apps and macros, the direct inputs to the app/macro when the error occurred are hardcoded into a workflow in debug mode, so that errors can be more easily detected.

However, inputs into analytic apps also create global variables which can be used in the more code-heavy aspects of Alteryx such as the Formula Tool. These are not updated in the same way which can cause workflows to break in debug mode - it would be really helpful if global variables could be updated in the same way as the inputs into tools are.

Is it possible to add a search feature to the Summarize Tool that is similar to the search feature in the Select Tool? Selecting specific fields to summarize in small datasets is fine, but if I am dealing with a table that has 200 fields, searching for a specific field can be cumbersome. Being able to type in a few key letters to filter the available fields would be helpful.

Changing the Macro Input tool in an existing macro is dangerous and can result in unmapped fields or lost connections in workflows using the macro. For example, we have a widely used macro for which we'd like to change the name of an input field, change its default type from Date to DateTime, and make it optional while keeping other fields mandatory. Currently, we cannot find a solution which would not require us to fix each workflow using the macro after changing it. We should be able to change the field names, field types (e.g. String to V_WString, Date to DateTime), select optional fields and do other modifications to Macro Input without having to update each workflow using the macro. The new Macro Input UI could be enhanced with a window similar to that of the Select tool. Technically, the Macro Input fields could have a unique ID by which they would be recognised in workflows, so the field names would just be aliases that could be changed without losing the mapping. In summary, we are restricted to our initial setup of Macro Input and it is very complicated to change it afterwards, especially if the macro is used widely.

In short:

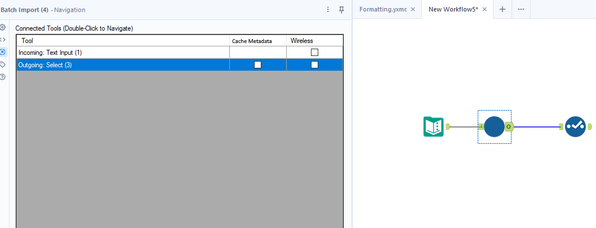

Add an option to cache the metadata for a particular tool so that it isn't forgotten when using tools that have dynamic metadata, such as batch macros, or metadata that the Alteryx metadata engine can't resolve, such as the Python tool.

Longer explanation:

The Problem:

One of the issues I often encounter when making dynamic workflows or ones that require calling external services is that Alteryx often forgets the metadata of what columns to expect. This causes the workflow to forget configuration of downstream tools when a workflow is first opened or when the metadata engine refreshes. There is currently the option to disable the metadata engine from automatically refreshing but this isn't a good option because you miss out on much of the value it brings.

Some of the common tools where I encounter this issue:

- Json parse

- Batch macros

- Python tool

- Regex parsing to rows

Solution:

Instead, could we add an option to cache the metadata for a particular tool? This would save the metadata from the last time the workflow ran in the workflow's XML so that it persists when the workflow is closed and reopened. Then, when the metadata engine reaches this tool, it would use the saved version in the XML instead of resolving the metadata from the tool. Obviously, when the workflow actually runs it would ignore the cached version, and any errors would still occur.

This could be an option in the navigation pane of each tool. Mockup below:

This would make developing dynamic workflows far easier and resolve issues of configuration being lost when the metadata changes and Alteryx forgets the options.

Hello all,

A few weeks ago Alteryx announced inDB support for GBQ. This is an awesome idea; however, to make it run you have to use OAuth2 authentication, which means the GBQ API has to be enabled. As of now, it is possible to use the Simba ODBC driver to connect to GBQ. My idea is to extend inDB support to the connection/authentication method we have today with Simba ODBC for Google BigQuery. In big organizations with many GBQ projects, it is not easy for IT to enable the API for each application. Enhancing the functionality with ODBC would be an awesome solution.

Thank you for voting

Albert

When I import an Excel file in to Alteryx I get an error: “shared strings root=x:sst” and Alteryx cannot read the file.

I can work around this by manually opening and saving the excel before importing it into Alteryx but this is not ideal, especially considering the automation implications.

I believe this may be happening because the XLSX generated by the source of the report has a prefix “x:” in all the tags in the Shared String XML embedded in Excel. See: https://learn.microsoft.com/en-us/office/open-xml/working-with-the-shared-string-table

Essentially, it would appear that Alteryx is not able to read generated Excel sheets that have the prefix "x:" (e.g. from a bot). The second file, which has been opened and saved in Excel manually, can be read by Alteryx correctly.

Example of file as exported from ”BOT”:

How the same file looks once it is manually opened and saved:

Ideally Alteryx would read the file as is, i.e. with the "x:sst" tag seen above, as having to manually open and save the Excel file before it can be read is rather clunky.
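If the prefix really is the cause, the two files are equivalent XML: "x:sst" and a plain "sst" refer to the same element as long as both are bound to the SpreadsheetML namespace. The sketch below shows a namespace-aware read with Python's standard library that treats both spellings identically. It says nothing about how Alteryx's Excel reader is built; the sample documents and the function name are made up for illustration.

```python
import xml.etree.ElementTree as ET

MAIN_NS = "http://schemas.openxmlformats.org/spreadsheetml/2006/main"


def read_shared_strings(xml_text):
    """Return the shared strings, matching elements by namespace rather than by prefix."""
    root = ET.fromstring(xml_text)
    strings = []
    for si in root.findall("m:si", {"m": MAIN_NS}):
        # Simplification: joins every <t> under the item, including rich-text runs.
        strings.append("".join(t.text or "" for t in si.iter(f"{{{MAIN_NS}}}t")))
    return strings


# Same content, once with an "x:" prefix and once with a default namespace.
PREFIXED = (
    f'<x:sst xmlns:x="{MAIN_NS}">'
    "<x:si><x:t>Hello</x:t></x:si><x:si><x:t>World</x:t></x:si>"
    "</x:sst>"
)
DEFAULT = (
    f'<sst xmlns="{MAIN_NS}">'
    "<si><t>Hello</t></si><si><t>World</t></si>"
    "</sst>"
)
```

Both `read_shared_strings(PREFIXED)` and `read_shared_strings(DEFAULT)` give the same list, which is the behaviour being asked for.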

Thanks!

Have you ever had the business deliver an Excel (EEK!) file to be passed into Alteryx with a different number of header rows (because it looks pretty and is convenient)? Never, you say? Lies!

I would suggest adding an option to the Input Data Tool that would give us the ability to concatenate multiple header rows. This would help enable accurate data profiling for columns when output and eliminate loss from unnecessary conversion errors. Currently, the options allow us to Start Data Input on Line X; however, if the header for a column spans multiple rows, the headers have to be entered manually after input, because we can only select the lowest header row to ensure the data is passed accurately. The solution would be to specify the number of rows that contain headers, concatenate them into a single row (ignoring nulls and carriage returns) and then output that as the header.

The current functionality, in a situation where each row has a variable number of header rows, causes forced errors such as a scientific string conversion of a numeric value.
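The proposed option could behave roughly like this sketch. The function name, the separator and the row format (lists of cell values with `None` for empty cells, all header rows the same width) are assumptions for the example.

```python
def merge_header_rows(rows, header_row_count, separator=" "):
    """Concatenate the first `header_row_count` rows into one header row.

    Empty cells are skipped and line breaks inside a cell are collapsed to a space.
    """
    header_rows = rows[:header_row_count]
    data_rows = rows[header_row_count:]
    headers = []
    for column_cells in zip(*header_rows):
        parts = [" ".join(str(cell).split()) for cell in column_cells if cell is not None]
        headers.append(separator.join(part for part in parts if part))
    return headers, data_rows


sheet = [
    ["Sales", None, "Unit\r\nPrice"],
    ["Q1", "Q2", None],
    ["10", "20", "3.5"],
]
headers, data = merge_header_rows(sheet, header_row_count=2)
```

With the header rows merged first, the data rows can keep their own types instead of being forced to strings by the stray header text.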

Would be nice to have a way to cache-uncache all inputs or a selected group of tools. Caching and Uncaching workflows with many input tools or slow data-read tools gets to be a bit cumbersome. Would be a nice QoL improvement :)

I looked around for something like this but didn't see a solution, so thought I'd recommend. Please let me know if something like this exists already natively in designer desktop.

Hi there,

When creating a database connection - Alteryx's default behaviour is to create an ODBC DSN-linked connection.

However DSN-linked connections do not work on a large server env - because this would require administrators to create these DSNs on every worker node and on every disaster recovery node, and update them all every time a canvas changes.

They are also not fully safe because part of the configuration of your canvas is held in the DSN - and so you cannot just rely on the code that's under version control.

So:

Could we add a feature to Alteryx Designer that allows a user to expand a DSN into a fully-declared connection string?

In other words - if the connection string is listed as

- odbc:DSN=DSNSnowFlakeTest;UID=Username;PWD=__EncPwd1__|||NEWTESTDB.PUBLIC.MYTESTTABLE

Then offer the user the ability to expand this out by interrogating the ODBC Connection manager to instead have the fully described connection string like this:

odbc:DRIVER={SnowflakeDSIIDriver};UID=Username;pwd=__EncPwd1__;authenticator=Snowflake;WAREHOUSE=compute_wh;SERVER=xnb27844.us-east-1.snowflakecomputing.com;SCHEMA=PUBLIC;DATABASE=NewTestDB;Staging=local;Method=user

NOTE: This is exactly what users need to do manually today anyway to get to a DSN-less connection string - they have to create a file DSN to figure out all the attributes (by opening it up in Notepad) and then paste these into the connection string manually.
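The manual step described in the note can be sketched as follows, assuming the file DSN is the usual INI-style text with an `[ODBC]` section. The function name, the sample DSN contents and the way credentials are passed in are assumptions for illustration, not a description of what Designer does.

```python
import configparser


def expand_file_dsn(dsn_text, credentials):
    """Turn the [ODBC] section of a file DSN into a DSN-less connection string."""
    parser = configparser.ConfigParser(delimiters=("=",), interpolation=None)
    parser.optionxform = str  # keep attribute names as written
    parser.read_string(dsn_text)

    attributes = dict(parser["ODBC"])
    attributes.update(credentials)  # UID/PWD are not stored in the DSN here

    # The driver name goes first, wrapped in braces.
    driver_key = next(key for key in attributes if key.upper() == "DRIVER")
    driver = attributes.pop(driver_key)

    parts = [f"DRIVER={{{driver}}}"] + [f"{k}={v}" for k, v in attributes.items()]
    return "odbc:" + ";".join(parts)


# Hypothetical file DSN contents based on the example in the post.
FILE_DSN = "\n".join(
    [
        "[ODBC]",
        "DRIVER=SnowflakeDSIIDriver",
        "SERVER=xnb27844.us-east-1.snowflakecomputing.com",
        "WAREHOUSE=compute_wh",
    ]
)
connection = expand_file_dsn(FILE_DSN, {"UID": "Username", "PWD": "__EncPwd1__"})
```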

Thanks all

Sean

It would be very helpful to be able to output the workflow as a step-by-step document, so that someone who does not have access to Alteryx can understand the steps taken to create the flow and hence the result or output.

Hi, I was looking for this but couldn't find a similar idea, so I post a new one. If someone knows about a similar idea, please ask the moderators to mer

CountChars(<String>, <char to count>,<case sensitive>)

Where <char to count> and <case sensitive> are optional parameters.

If <char to count> is not provided, the function will return the total character count within the <String>.

If <char to count> is provided, it'll return the number of occurrences of that character within the <String>.

PS: For those tempted to suggest a workaround, I've been using REGEX_CountMatches() for this. The point is to simplify the user experience and improve workflow performance by providing a native function instead of using REGEX, which is very demanding on resources.
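The intended semantics could look like the sketch below. It is written in Python only to pin down the behaviour; the default for `case_sensitive` is an assumption, since the post does not say what it should be.

```python
def count_chars(text, char_to_count=None, case_sensitive=True):
    """Count all characters, or only the occurrences of `char_to_count`."""
    if not char_to_count:
        # No character given: return the total length of the string.
        return len(text)
    if not case_sensitive:
        text, char_to_count = text.lower(), char_to_count.lower()
    return text.count(char_to_count)


total = count_chars("Hello World")
lower_o = count_chars("Hello World", "o")
any_case_l = count_chars("Hello World", "L", case_sensitive=False)
```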

Multi-Fill Tool

Please consider a new Multi-Fill tool, not for Apps, but for regular workflows, manually run or scheduled.

Similar to the Interface tool-combination of the Text Box & Action (Update value) tools, this Multi-Fill tool would enable the user to update, for example, the User Name and Password in one place for multiple Download tools. It could also be used to update other tool variables like Filter, Sort, Unique, etc.

Containers are a great feature. They allow us to create larger workflows in smaller canvases, and manage the flow and appearance of our work. However, the design, whether intentional or flawed, that allows the container window to interact with the layers behind it is annoying. Connection wires should not redirect within a container because of things on the canvas behind the container. Likewise, if I have a container open, I should not be able to grab a tool or container behind the open container through the container canvas. Please fix this flaw.

Hi all,

At present, Alteryx does not support DSN-free connections to Snowflake using the Bulk Connector. This is a critical functionality for any large company that uses Alteryx - and so I'm hoping that this can be changed in the product in an upcoming release. As a corollary - every DB connection type has to be able to work without DSNs for any medium or large size server instance - so it's worth extending this to check every DB connection type available in Alteryx.

Here are the details:

What is DSN-Free?

In order to be able to run our Alteryx canvasses on a multi-node server - we have to avoid using DSNs - so we generally expand connection strings that look like this:

odbc:DSN=DSNSnowFlakeTest;UID=Username;PWD=__EncPwd1__|||NEWTESTDB.PUBLIC.MYTESTTABLE

to instead have the fully described connection string like this:

odbc:DRIVER={SnowflakeDSIIDriver};UID=Username;pwd=__EncPwd1__;authenticator=Snowflake;WAREHOUSE=compute_wh;SERVER=xnb27844.us-east-1.snowflakecomputing.com;SCHEMA=PUBLIC;DATABASE=NewTestDB;Staging=local;Method=user

For Snowflake BL:

Now - for the Snowflake Bulk Loader the same process does not work and Alteryx gives the classic error below

With DSN:

snowbl:DSN=DSNSnowFlakeTest;UID=Username;pwd=__EncPwd1__;Staging=local;Method=user|||NEWTESTDB.PUBLIC.MYTESTTABLE

Without DSN:

snowbl:driver=SnowflakeDSIIDriver;UID=SeanBAdamsJPMC;pwd=__EncPwd1__;SERVER=xnb27844.us-east-1.snowflakecomputing.com;WAREHOUSE=compute_wh;SCHEMA=PUBLIC;DATABASE=NewTestDB;Staging=local;Method=user|||NEWTESTDB.PUBLIC.MYTESTTABLE

Many thanks

Sean
