Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by listing the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

> by at least allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: will you, in the future, allow the user to choose between CPU and GPU usage?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will help a greater number of people understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


Hello,

A few years ago, Alteryx had 4 releases per year, and now it's only 2 per year (in 2023, as of today, only one!!)

The reasons why I would like the cadence to go back to quarterly releases:

-a quarterly cadence means waiting less time to benefit from new Alteryx features, so more value

-a quarterly cadence is now an industry standard for data software.

-for partners, the current situation means fewer customer upgrade opportunities, so less cash but also fewer contacts with customers.

Best regards,

Simon

When moving a tool container, all of the tools within it become mis-aligned with the canvas grid. Moving any single tool immediately re-aligns it to the grid, which puts it out of alignment with the rest of the tools in the container.

Example: Put 3 tools in a row in a tool container, all aligned horizontally. Next, move the container. Now, move the middle tool, then try to place it back in alignment with the other two. You won't be able to, because they are out of alignment with the canvas grid.

Please fix this.
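The misalignment described above can be illustrated with a little arithmetic. This is only a sketch: the grid size of 10, the `snap` function, and the rule that containers move freely while single tools snap are all assumptions made for illustration, not Designer's actual behaviour or code.

```python
GRID = 10  # hypothetical canvas grid size in pixels (assumption)


def snap(value: int, grid: int = GRID) -> int:
    """Snap a coordinate to the nearest grid line."""
    return round(value / grid) * grid


def move_container(ys: list[int], dy: int, snap_offset: bool = False) -> list[int]:
    """Move every tool in a container by dy, optionally snapping the offset itself."""
    offset = snap(dy) if snap_offset else dy
    return [y + offset for y in ys]


# Three tools in a row, all on the grid.
row = [100, 100, 100]

# Container dragged by an off-grid amount: every tool is now off the grid.
moved = move_container(row, 7)

# Moving the middle tool snaps it back to the grid, away from its neighbours.
after_touch = [moved[0], snap(moved[1]), moved[2]]

# One possible fix: snap the container's offset so the tools stay on the grid.
fixed = move_container(row, 7, snap_offset=True)
```

Under these assumptions, snapping the container's offset rather than each tool individually keeps the whole row aligned with the grid.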

I'm sure there's a reason behind it, but can we please be allowed to run calculations on null values in a Formula tool? Right now, if we sum three values (1 + 3 + [null]), it produces [null]. Can the Formula tool just ignore the null values? The only way around this is to fill the [null] cells with a value, and that adds an additional step to what should be a fairly straightforward process. That value would have to be different for a multiplication formula vs. an addition formula in order not to change the answer materially, whereas ignoring the value is a more consistent solution.
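To make the difference concrete, here is a minimal Python sketch that contrasts null-propagating arithmetic with the null-ignoring behaviour requested. `None` stands in for [null], and the function names are hypothetical. It is not how the Formula tool is implemented.

```python
from math import prod
from typing import Iterable, Optional


def sum_propagating(values: Iterable[Optional[float]]) -> Optional[float]:
    """Current behaviour as described: any null makes the whole result null."""
    total = 0
    for v in values:
        if v is None:
            return None
        total += v
    return total


def sum_ignoring_nulls(values: Iterable[Optional[float]]) -> float:
    """Requested behaviour: nulls are skipped."""
    return sum(v for v in values if v is not None)


def product_ignoring_nulls(values: Iterable[Optional[float]]) -> float:
    """Same idea for multiplication; no operation-specific fill value is needed."""
    return prod(v for v in values if v is not None)


def fill_nulls(values: Iterable[Optional[float]], fill: float) -> list:
    """The workaround: replace nulls first (0 for addition, 1 for multiplication)."""
    return [fill if v is None else v for v in values]
```

The fill-value workaround needs 0 for addition but 1 for multiplication, which is the inconsistency the post points out. Skipping nulls gives the same answer without choosing a fill value per operation.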

As of Alteryx version 2020.3, the Browse tool no longer shows a profile of the complete dataset (profiling is capped once the record data size reaches 300 MB).

My proposed solution is an optional override of the record size limit on the browse tool (which will make the profiling take longer, but actually profile the entire dataset). I would also like a general user setting to set the default behavior of the browse tool to either be limited or unlimited.

Below is the newly included documentation of the Data Profiling Limit, which I'm proposing can be overridden.

Data Profiling Limit

Data Profiling in the Browse tool is capped at 300 MB. This allows you to process very large datasets faster. For each record in the incoming dataset, we process the record and add the record size to a counter. Once the counter reaches 300 MB, we stop processing records.

It is important to note that there is no specific number of records that we can process. This depends on the dataset since a record size can range from 1 byte to a few thousand bytes. This record size is different from the file size, displayed in the Results grid and Data Profiling Holistic View. The file size is generally different since it has been compressed to optimize spacing.

In other words, 300 MB of record size is not the same as 300 MB of file size.
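The counter logic in the documentation above, together with the proposed override, can be sketched as follows. This illustrates the described behaviour only and is not Alteryx's code. The function name, the `limit_bytes=None` override, and the reading of 300 MB as 300 * 1024 * 1024 bytes are assumptions.

```python
from typing import Callable, Iterable, Optional, Tuple

DEFAULT_LIMIT_BYTES = 300 * 1024 * 1024  # assumption: "300 MB" read as MiB


def profile_sum(
    records: Iterable[dict],
    value_of: Callable[[dict], float],
    size_of: Callable[[dict], int],
    limit_bytes: Optional[int] = DEFAULT_LIMIT_BYTES,
) -> Tuple[float, int]:
    """Sum a field over records until the size counter reaches the limit.

    Returns (profile_sum, records_profiled). Passing limit_bytes=None models
    the proposed override: the whole dataset is profiled.
    """
    counter = 0
    total = 0
    profiled = 0
    for record in records:
        if limit_bytes is not None and counter >= limit_bytes:
            break  # the counter reached the cap: stop processing records
        total += value_of(record)
        counter += size_of(record)
        profiled += 1
    return total, profiled


# Toy data: 100 records of 10 bytes each, worth 30,000 each (3,000,000 in total).
records = [{"amount": 30_000, "size": 10} for _ in range(100)]
capped = profile_sum(records, lambda r: r["amount"], lambda r: r["size"], limit_bytes=20)
full = profile_sum(records, lambda r: r["amount"], lambda r: r["size"], limit_bytes=None)
```

With the toy cap of 20 bytes, only 2 of the 100 records are profiled, so the profile sum is 60,000 rather than 3,000,000.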

This new tool can cause confusion when looking at the data profile (e.g. if you expect the sum to be $3 million, but the browse tool is only showing 2% of your total records in the profile tool, the profile sum may only show $60 thousand).

The sampled version with a cutoff of 300MB is rarely useful if you are using browse tools to get a quick sense of the variable profiles on medium sized datasets (around 1 million records) since this rarely will fit into the 300MB record size limit.

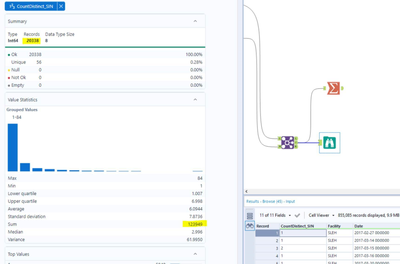

An example can be shown in the image below, where the dataset contains 855,085 records, but the browse tool is profiling only the first 20,338.

Again, being able to override this 300MB record size limit would fix the problem created in the 2020.3 change to the browse tool.

When using the text mining tools, I have found that the behaviour of using a template only applies to documents with the same page number.

So in my use case I've got a PDF file with 100+ claim statements which are all laid out the same (one page per statement). When setting up the template I used one page to set the annotations, and then input this into the T anchor of the Image to Text tool. Into the D anchor of this tool is my PDF document with 100+ pages. However when examining the output I only get results for page 1.

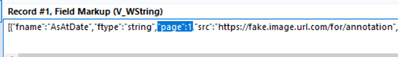

On examining the JSON for the template I can see that there is reference to the template page number:

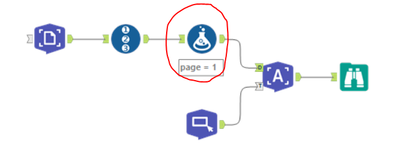

Playing around with a Generate Rows tool and a formula to replace the page number with pages 1 - 100 in the JSON doesn't work. I then discovered that if I change the page number on the image input side, I get the desired results.

However, as I suspect this is a common use case for the Image to Text tool, an improvement would be to add an option in the tool's configuration to apply the same template to all pages.

DELETE FROM Source_Data WHERE ID IN

(SELECT ID FROM My_Temp_Table WHERE FLAG = 'Y')

....

Essentially, I want to update a DB table with either an update or the deletion of rows. I can't delete all of the data. My workaround will be to create a table, insert the keys that I want to delete into it, and try to use an Input/Output tool with SQL that performs the delete. Any other suggestions are welcome, but a tool would be best.
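The key-table workaround described above can be tried outside Alteryx with a small, self-contained sketch. It uses Python's built-in `sqlite3` module and an in-memory database purely for illustration. The table names come from the post, while the `NAME` column, the sample rows, and the helper names are assumptions.

```python
import sqlite3


def build_demo() -> sqlite3.Connection:
    """Create an in-memory database with a source table and a key table."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE Source_Data (ID INTEGER PRIMARY KEY, NAME TEXT);
        CREATE TABLE My_Temp_Table (ID INTEGER, FLAG TEXT);
        INSERT INTO Source_Data VALUES (1, 'a'), (2, 'b'), (3, 'c');
        INSERT INTO My_Temp_Table VALUES (1, 'Y'), (2, 'N'), (3, 'Y');
        """
    )
    return conn


def delete_flagged(conn: sqlite3.Connection) -> int:
    """Delete only the rows whose keys are flagged; return how many were removed."""
    with conn:  # commits on success, rolls back on error
        cur = conn.execute(
            "DELETE FROM Source_Data "
            "WHERE ID IN (SELECT ID FROM My_Temp_Table WHERE FLAG = 'Y')"
        )
        return cur.rowcount


def remaining_ids(conn: sqlite3.Connection) -> list:
    """Return the IDs still present in Source_Data, in order."""
    return [row[0] for row in conn.execute("SELECT ID FROM Source_Data ORDER BY ID")]
```

Only the flagged keys are removed, and the rest of the table is left untouched. On another database the same statement could be run as a pre- or post-SQL step, though the exact syntax may differ by vendor.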

Thanks,

Mark

The Table tool duplicates work by forcing the user to set the decimal places again.

Normally, all data preparation has been completed before the Table tool, and we just want to use this tool to format the header or apply conditional formatting. However, once the Table tool is connected, we have to re-configure the decimal places for all the numeric columns. Because the column names vary from year to year, this adds manual intervention to the workflow.

We recommend providing the flexibility to take the upstream data as it is, without changing the underlying data set.

Alteryx lacks tools to extract data from True PDF documents. The current set of tools (Computer Vision) only allows us to extract data from images, which is not ideal for True PDF documents in terms of accuracy.

Add Unicode category to the cleansing tool

Please add a configuration to the RedShift bulk load to EITHER use access keys or an IAM EC2 role for access.

We should not have to specify access keys when we are in an IAM enabled environment.

Thanks

As each version of Alteryx is rolled out, it would be much easier for our users and admin team to validate the new version, if Alteryx allowed parallel installs of many different versions of the software.

So - our team is currently on 11.3 - if we could roll out 11.5 in parallel then we could very easily allow users to revert to 11.3 if there are issues, or else remove 11.3 after 2-3 weeks if no issues.

The same goes for versions which are in BETA.

This would be a huge help!

cc: @avinashbonu ; @Deeksha ; @revathi

It can be daunting to find the tool that is currently being processed by the engine in workflows that contain hundreds of tools with many ins, outs, and branches. During runtime, I want to be shown the tool that is running on the canvas. This functionality should be in the form of a button or something to direct focus to that area. It should not be the default.

To avoid some errors occurring during upgrade or even installation, it would be great to add an option in the installer to go with a fresh installation (remove any previous Alteryx Designer).

If selected, the option would:

- Warn users that everything Alteryx related is going to be deleted

- Generate a log of what is going to be removed

- Rename the folders and registry keys listed here: https://community.alteryx.com/t5/Alteryx-Designer/Complete-Uninstall-of-Alteryx-Designer/ta-p/402897

(rename instead of delete to avoid "bad surprises")

A similar option could exist when one would like to uninstall Alteryx Designer.

This would remove the frustration of having to rely on a "white knight" when something happens in the middle of an upgrade or an installation.

Thanks,

PaulN

My team uses a shared macro repository (say F:\AlteryxMacros), and we recently ran into an issue with the default save location for macros. While we save most macros to our repository, there are times when folks save their macros elsewhere (let's say C:\MyAwesomeWorkflow). The issue we've encountered is that if you go to file >> save as with a macro, it will ALWAYS default to the macro repository, even when the macro is currently saved elsewhere (C:\MyAwesomeWorkflow). Speaking for a friend, people have saved things to the macro repository by accident. Or they waste time navigating from the macro repository to their current folder.

If a macro is saved somewhere, please change the file >> save as to default to the current folder. Thanks!

Alteryx doesn't support querying tables within Apache Ignite via the Ignite ODBC connector. Since Ignite is an in-memory database, supporting it in Alteryx through ODBC would improve connectivity.

It would be really great if this was a native capability for the Output tool so we don't have to replicate all the Output tool fields as macro inputs.

I use a mouse which has a horizontal scroll wheel. This allows me to quickly traverse the columns of Excel documents, webpages, etc.

This interaction is not available in Alteryx Designer and when working with wide data previews it would improve my UX drastically.

Please support GZIP files in the input tool for both Designer and Server.

I get several large .gz files every day containing our streaming server logs. I need to parse and import these using Alteryx (we currently use Sawmill). Extracting each of these files would take a huge amount of space and time.

This was previously requested and marked as "now available", but what is available only addresses a small part of the request. First, that request was for both ZIP and GZIP; what is now available is only ZIP. Second, it requested both input and output; what is now available is input only. Third, while not explicitly stated in the request, it needs to function in Alteryx Server in order to be scheduled on a daily basis.
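For context on why native GZIP input avoids the space and time cost of extraction, here is a minimal sketch of streaming a .gz log file line by line with Python's standard `gzip` module. The function names and the sample log format are hypothetical, and this is not a description of how Alteryx reads files.

```python
import gzip
from typing import Iterator


def read_gzip_lines(path: str, encoding: str = "utf-8") -> Iterator[str]:
    """Yield lines from a gzip file, decompressing on the fly.

    Nothing is extracted to disk and only a small buffer is held in memory.
    """
    with gzip.open(path, mode="rt", encoding=encoding) as handle:
        for line in handle:
            yield line.rstrip("\n")


def count_matching(path: str, needle: str) -> int:
    """Count the log lines containing `needle` without unpacking the archive."""
    return sum(1 for line in read_gzip_lines(path) if needle in line)
```

Because the file is decompressed as it is read, the disk footprint stays at the size of the compressed file.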

Here is the issue I have: when you are using a Join tool and you have multiple columns that you are joining on (to the point that they don't all show in the Configuration window), I have a tendency to use the mouse scroll wheel to move down to see the additional columns I am joining on. The mouse scroll wheel controls different things depending on where your cursor is. If your cursor is over the Left or Right columns, then the scroll wheel will change the fields you are using to join on. I have messed up more workflows than I care to mention due to this. I do not think it is appropriate for the scroll wheel to change the fields in the Configuration window; it should only be used to scroll up and down in the Configuration window.

Please upgrade the "curl.exe" that is packaged with Designer from 7.15 to 7.55 or greater to allow for the -k flag. Also, please allow the -k functionality in the Alteryx Download tool.

-k, --insecure

(TLS) By default, every SSL connection curl makes is verified to be secure. This option allows curl to proceed and operate even for server connections otherwise considered insecure.

The server connection is verified by making sure the server's certificate contains the right name and verifies successfully using the cert store.
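As a rough illustration of what an option like -k switches off, the sketch below builds a TLS context with Python's standard `ssl` module. The helper name `make_tls_context` is hypothetical, and this says nothing about how the Download tool is implemented. It only shows the two checks quoted above (hostname match and certificate verification) being disabled together.

```python
import ssl


def make_tls_context(insecure: bool = False) -> ssl.SSLContext:
    """Return a client TLS context; insecure=True mimics the effect of curl's -k."""
    context = ssl.create_default_context()  # verifies the certificate and hostname
    if insecure:
        # check_hostname must be turned off before verify_mode can be CERT_NONE.
        context.check_hostname = False
        context.verify_mode = ssl.CERT_NONE
    return context
```

Such a context could then be passed to, for example, `urllib.request.urlopen(url, context=make_tls_context(insecure=True))`. Skipping verification is usually acceptable only for trusted internal servers with self-signed certificates.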

Regards,

John Colgan
